
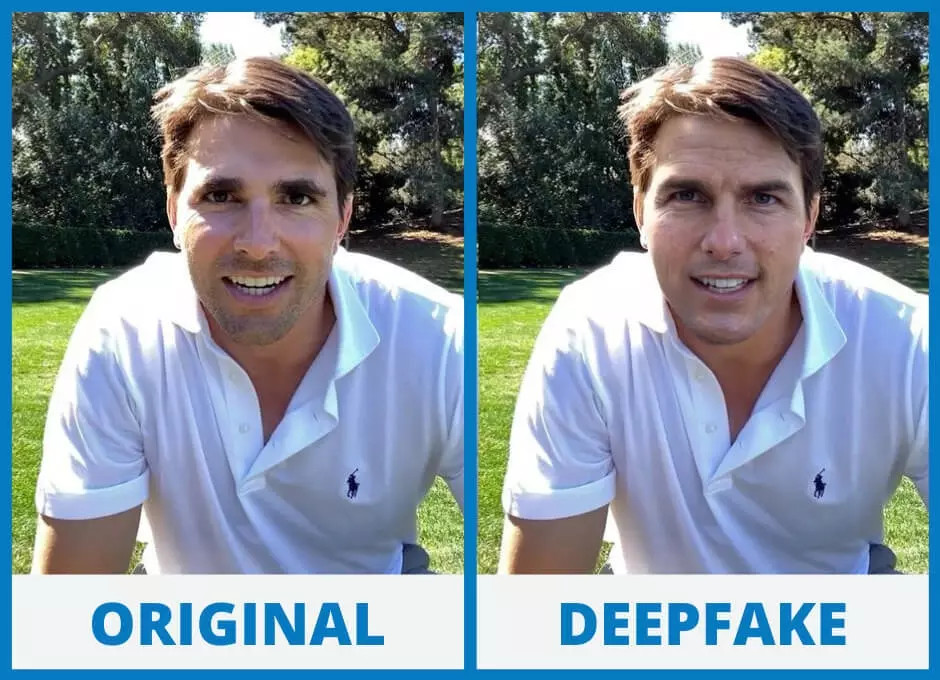

Deepfakes have garnered mainstream attention for their uses in celebrity videos, fake news, hoaxes, and financial fraud.

Deepfakes are made by using deep learning algorithms, which teach themselves how to solve problems when given large sets of data, to swap faces in video and digital content, producing realistic-looking fake media.

There are numerous methods for creating deepfakes, but the most common relies on the use of deep neural networks involving autoencoders that employ a face-swapping technique.
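The shared-encoder, two-decoder idea behind autoencoder face swapping can be sketched in a few lines of Python. This is a toy under loudly stated assumptions: a "face" is a hypothetical four-number vector (two pose/expression numbers, two appearance numbers), and `shared_encoder` and `fit_decoder` are illustrative stand-ins for trained neural networks, not any tool's API. The swap happens when a face of person A is encoded and then decoded with person B's decoder.

```python
from collections.abc import Callable
from statistics import fmean

# Hypothetical toy representation: a "face" is a 4-number vector where the
# first two numbers stand for pose/expression and the last two for the
# person's appearance. Real face-swap models learn such features with deep
# convolutional autoencoders; here the split is hard-coded for clarity.
Face = list[float]


def shared_encoder(face: Face) -> Face:
    """Stand-in for the encoder shared by both identities: keep pose/expression."""
    return face[:2]


def fit_decoder(training_faces: list[Face]) -> Callable[[Face], Face]:
    """Stand-in for training one identity's decoder.

    The "training" is just memorising the average appearance of that person's
    faces; a real decoder is a neural network trained to reconstruct them.
    """
    appearance = [fmean(face[i] for face in training_faces) for i in (2, 3)]

    def decoder(code: Face) -> Face:
        # Render the latent pose/expression with this identity's appearance.
        return list(code) + appearance

    return decoder


# Hypothetical training faces for person A and person B.
faces_a = [[0.1, 0.9, 5.0, 1.0], [0.3, 0.7, 5.5, 1.5]]
faces_b = [[0.5, 0.5, -3.0, 4.0], [0.7, 0.1, -3.5, 4.5]]
decoder_a = fit_decoder(faces_a)
decoder_b = fit_decoder(faces_b)

# Reconstruction: A's face through A's decoder keeps A's appearance.
reconstructed = decoder_a(shared_encoder(faces_a[0]))
# Face swap: A's pose/expression rendered by B's decoder.
swapped = decoder_b(shared_encoder(faces_a[0]))
print(reconstructed)  # [0.1, 0.9, 5.25, 1.25]
print(swapped)        # [0.1, 0.9, -3.25, 4.25]
```

Because the encoder is shared, it has to describe both people's faces in the same terms, which is what lets either decoder render the other person's expression.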

Generative Adversarial Networks (GANs) are also used to detect and correct flaws in a deepfake over multiple rounds, making the result harder for deepfake detectors to identify.
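The "multiple rounds" can be illustrated with a toy adversarial loop. It is not a real GAN: there are no neural networks or gradients, the one-number samples are hypothetical, and `generate`, `fit_discriminator` and `train` are illustrative names. It only shows the back-and-forth in which the discriminator redraws its boundary and the generator adjusts to get past it.

```python
from statistics import fmean

# Toy adversarial loop, NOT a real GAN: no neural networks, no gradients.
# ASSUMPTIONS (hypothetical): samples are single numbers, the discriminator is
# a nearest-mean threshold classifier, and the generator learns only an offset
# added to fixed noise.
REAL_SAMPLES = [3.5, 4.0, 4.5]  # hypothetical "real" data with mean 4.0
NOISE = [-0.5, 0.0, 0.5]        # fixed latent inputs so the run is repeatable


def generate(offset: float) -> list[float]:
    """Generator: turn latent noise into fake samples."""
    return [offset + z for z in NOISE]


def fit_discriminator(real: list[float], fake: list[float]) -> float:
    """Discriminator: place a decision threshold midway between the two classes."""
    return (fmean(real) + fmean(fake)) / 2


def train(rounds: int = 20, learning_rate: float = 0.5) -> float:
    offset = 0.0  # the generator starts far from the real data
    for _ in range(rounds):
        fake = generate(offset)
        # Round step 1: the discriminator learns to separate real from fake.
        threshold = fit_discriminator(REAL_SAMPLES, fake)
        # Round step 2: the generator moves its samples toward the side the
        # discriminator currently calls "real". It never sees REAL_SAMPLES
        # directly, only the discriminator's decision boundary.
        offset += 2 * learning_rate * (threshold - fmean(fake))
    return offset


print(train())
```

With these settings each round halves the gap between the fake and real means, so the generator ends up close to 4.0. In a real GAN both players are neural networks updated by gradient descent, and training is far less tidy than this.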

Deepfakes are enabling all sorts of high-tech fraud, driven by a narrow, dull, dumb deepfake AI that blindly does what it was trained to do by some biased developers.

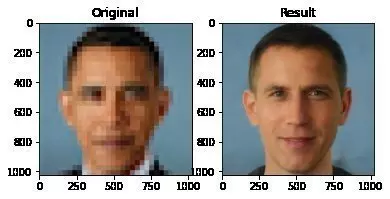

A deepfake algorithm is trained to reconstruct human faces from pixelated images – and that is what it will try to do even when the original image was not human at all.
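Pixelation is lossy, which is why the algorithm has to guess. A minimal sketch, assuming tiny grayscale images stored as lists of integers and 2x2 block averaging (the `pixelate` helper is hypothetical): two very different images produce exactly the same pixelated output, so a model trained only on faces will fill the gap with a face.

```python
# ASSUMPTION: images are small grayscale grids of ints; pixelation is done by
# averaging non-overlapping square blocks.


def pixelate(image: list[list[int]], block: int = 2) -> list[list[float]]:
    """Replace each block x block tile by its average brightness."""
    rows, cols = len(image), len(image[0])
    out = []
    for r in range(0, rows, block):
        out_row = []
        for c in range(0, cols, block):
            cells = [
                image[i][j]
                for i in range(r, min(r + block, rows))
                for j in range(c, min(c + block, cols))
            ]
            out_row.append(sum(cells) / len(cells))
        out.append(out_row)
    return out


checkerboard = [
    [0, 200, 0, 200],
    [200, 0, 200, 0],
    [0, 200, 0, 200],
    [200, 0, 200, 0],
]
flat_grey = [[100] * 4 for _ in range(4)]

# Both print [[100.0, 100.0], [100.0, 100.0]]: the detail is gone for good.
print(pixelate(checkerboard))
print(pixelate(flat_grey))
```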

In recent years, criminals have made huge progress in the field of [fake] AI, particularly through deep learning algorithms. These algorithms have been around for many years, but are now becoming much more useful thanks to exponential growth in both the available data and the computing power needed to handle it.

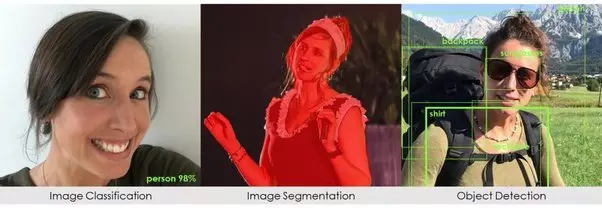

Deep learning algorithms are particularly useful for analysing images. For example, they can classify the first image as a person, segment the second into person and background, and recognise objects in the third.

The main machine learning methods used to create deepfakes are based on deep learning and involve training generative neural network architectures, such as autoencoders or generative adversarial networks (GANs). A GAN uses two deep learning networks: the first (the generator) creates new images, and the second (the discriminator) tries to distinguish the new (fake) images from real ones.
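The contest between the two networks is usually written as the minimax objective from the original GAN formulation: min over G, max over D of E_x[log D(x)] + E_z[log(1 - D(G(z)))]. The sketch below computes the per-batch losses from discriminator outputs alone; the function names are illustrative, and a real training loop would compute these on network outputs and backpropagate them.

```python
import math
from statistics import fmean

# Per-batch losses for the standard GAN objective
#   min_G max_D  E_x[log D(x)] + E_z[log(1 - D(G(z)))]
# d_real: discriminator outputs (probability of "real") on real images.
# d_fake: discriminator outputs on images produced by the generator.


def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """Negated value function: low when d_real -> 1 and d_fake -> 0."""
    return -(fmean(math.log(p) for p in d_real)
             + fmean(math.log(1.0 - q) for q in d_fake))


def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: low when the fakes fool D (d_fake -> 1)."""
    return -fmean(math.log(q) for q in d_fake)


# A confused discriminator outputs 0.5 for everything: loss is 2*ln(2).
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))
# A discriminator that spots the fakes: its loss drops, the generator's rises.
print(discriminator_loss([0.9, 0.9], [0.1, 0.1]), generator_loss([0.1, 0.1]))
```

The generator loss shown is the common "non-saturating" variant, which gives the generator stronger gradients early in training than minimising log(1 - D(G(z))) directly.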

Deepfakes (audio, picture, video and text formats) are used in celebrity pornographic videos, revenge porn, fake news, hoaxes, and financial fraud.

Deepfakes are illegal in some countries, but as of now they are not outlawed in some U.S. states. A large number of deepfake software projects can be found on GitHub, an open-source software development community. Some of these apps are used purely for entertainment, which is why deepfake creation isn't outlawed, while others are far more likely to be used maliciously. Deepfake technology is being weaponised against women, children, celebrities and politicians.

Deepfakes are not limited to just videos. Deepfake technology can also create convincing but entirely fictional photos from scratch. Deepfake audio is a fast-growing field that has an enormous number of applications.

Realistic audio deepfakes can now be made using deep learning algorithms with just a few hours, or even a few minutes, of audio of the person whose voice is being cloned. Once a model of a voice is made, that person can be made to say anything.

There are several indicators that give away deepfakes:

Governments, universities and tech firms are all funding research to detect deepfakes. Some companies are building detection platforms, akin to antivirus software for deepfakes, that alert users via email when they are watching something that bears the telltale fingerprints of AI-generated synthetic media.
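One family of detection ideas looks for spectral fingerprints: upsampling layers in some generators can leave periodic, checkerboard-like patterns that show up as excess high-frequency energy. The sketch below is a simplified heuristic under stated assumptions, not the method of any company mentioned above: it analyses a single row of pixel values, and the 0.5 threshold and the helper names are hypothetical. A practical detector would analyse 2D spectra over many frames and feed them to a trained classifier.

```python
import cmath

# Simplified spectral heuristic (an assumption, not any vendor's product).


def high_frequency_ratio(row: list[float]) -> float:
    """Share of non-DC spectral energy in the upper half of the frequency range."""
    n = len(row)
    mean = sum(row) / n
    centred = [x - mean for x in row]  # drop overall brightness (the DC term)
    # Naive discrete Fourier transform, O(n^2), fine for a short row of pixels.
    spectrum = [
        sum(x * cmath.exp(-2j * cmath.pi * k * t / n) for t, x in enumerate(centred))
        for k in range(n)
    ]
    energy = [abs(c) ** 2 for c in spectrum]
    total = sum(energy)
    if total == 0:
        return 0.0  # a perfectly flat row has no texture at all
    # Bins k and n - k describe the same frequency; "high" means at least n/4.
    high = sum(e for k, e in enumerate(energy) if min(k, n - k) >= n / 4)
    return high / total


def looks_synthetic(row: list[float], threshold: float = 0.5) -> bool:
    """Flag a row whose texture is dominated by high frequencies."""
    return high_frequency_ratio(row) > threshold


smooth_row = [110, 107, 100, 93, 90, 93, 100, 107]        # one slow wave
checkered_row = [100, 140, 100, 140, 100, 140, 100, 140]  # periodic artifact
print(looks_synthetic(smooth_row), looks_synthetic(checkered_row))
```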

Social media platforms are also banning deepfake videos that are likely to mislead viewers.

Ironically, artificial intelligence (AI) may be the answer.

AI is a thing of great use: the most strategic general-purpose technology, with the power of general intelligence.

But our reality is different. Nothing non-existent can be useful by its nature. There is no computer intelligence, machine intelligence, artificial intelligence or computational intelligence in existence. It holds the honorable status of humanity's greatest dream and highest goal, still residing in the imaginary world of researchers, developers, engineers, artists and sci-fi promoters.

To become real, computer intelligence, as the greatest invention ever, must pass through all the stages of an emerging technology: it must be conceived, modelled, designed, developed, deployed and widely distributed.

One might believe that AI is already around us, embedded in every part of our lives and in every aspect of modern society.

Forty Google researchers raised serious concerns about machine learning approaches to a wide range of problems. They used an example of modelling an epidemic to illustrate their concerns. The image below shows two epidemic curves predicted by a machine learning model, but based on different assumptions about disease spread. Both models are equally good according to the algorithm, yet they make very different predictions about the eventual size of an epidemic.

How two different epidemic models (blue and red) with very different assumptions can both fit the existing data but give very different predictions.
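The effect in the figure can be reproduced with a toy calculation. Assuming simple logistic growth (a textbook curve, not the model used by the Google researchers), two hypothetical models share the same starting point and growth rate but differ in how many people they assume can eventually be infected. They agree within a few percent over the first ten days of "data", yet their predicted final sizes differ tenfold.

```python
import math


def logistic_cases(t: float, initial: float, growth_rate: float, capacity: float) -> float:
    """Cumulative cases at day t under logistic growth."""
    return capacity / (1 + (capacity - initial) / initial * math.exp(-growth_rate * t))


# Two hypothetical models: same early growth, very different assumptions
# about how many people can eventually be infected.
model_small = {"initial": 10, "growth_rate": 0.3, "capacity": 10_000}
model_large = {"initial": 10, "growth_rate": 0.3, "capacity": 100_000}

observed_days = range(11)  # pretend days 0-10 are the "existing data"
early_gap = max(
    abs(logistic_cases(t, **model_small) - logistic_cases(t, **model_large))
    / logistic_cases(t, **model_large)
    for t in observed_days
)
final_small = logistic_cases(365, **model_small)
final_large = logistic_cases(365, **model_large)

print(f"largest relative gap on days 0-10: {early_gap:.1%}")
print(f"predicted final size: {final_small:,.0f} vs {final_large:,.0f}")
```

Nothing in the early data can tell the two apart; only the assumption about the eventual susceptible population does.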

"All models are wrong, but statistical ML/DL/ANI models are badly wrong."

The machine-learning models we use today can't tell whether they will work in the real world, and that’s a big problem.

All we need is a real-world AI, that is, causal machine intelligence and learning, as outlined in [Causal Learning vs. Deep Learning: On A Fatal Flaw In Machine Learning].

In general, AI modelling must have the following necessary features and functions if it is to be a Real AI:
