AI is poised to revolutionize UI/UX design by streamlining the design process, enhancing user experiences, and unlocking new creative possibilities.

Artificial intelligence (AI) can analyze vast amounts of user data to identify patterns and preferences, enabling designers to tailor interfaces to specific user needs. Additionally, AI-powered tools can automate repetitive tasks, such as prototyping and testing, allowing designers to focus more on creativity and innovation. With AI's ability to generate personalized recommendations and adapt interfaces in real-time, UI/UX design is entering a new era of efficiency and user-centricity.

Markdown has been taking the world by storm of late, especially because of its use within ChatGPT. The language consists of notational shortcuts that let users reproduce common HTML elements such as <h1> and <h2> headings, lists and list items, and even tables and images. It is also easy to parse into something that can be readily streamed, a major consideration given both how slowly ChatGPT outputs content and its chat-oriented format.

However, this convenience comes at a cost. The move to a chat interface can seem like a return to the bad old days of teletype machines and blinking green displays that seemed to refresh ... one ... character ... at ... a ... ti ... m ......... e. You have little control over imagery, and you can forget about colours, buttons, and other UI elements, which have come a long way in the nearly 30 years since CSS first appeared in the mid-1990s.

I spent some time researching this: ChatGPT does not render CSS, and it may not for a while. This means that if you are going through ChatGPT, you're limited to a chat interface. But what if you weren't going through ChatGPT?

It turns out that you can generate HTML that follows a template, uses CSS, and takes advantage of scripting. The downside is that it is VERY slow: a typical page can take over thirty seconds to generate, most of that time spent waiting on the OpenAI API. I have yet to try this with Copilot or Devin, and while I suspect they may be faster, there's no guarantee they will be MUCH faster.

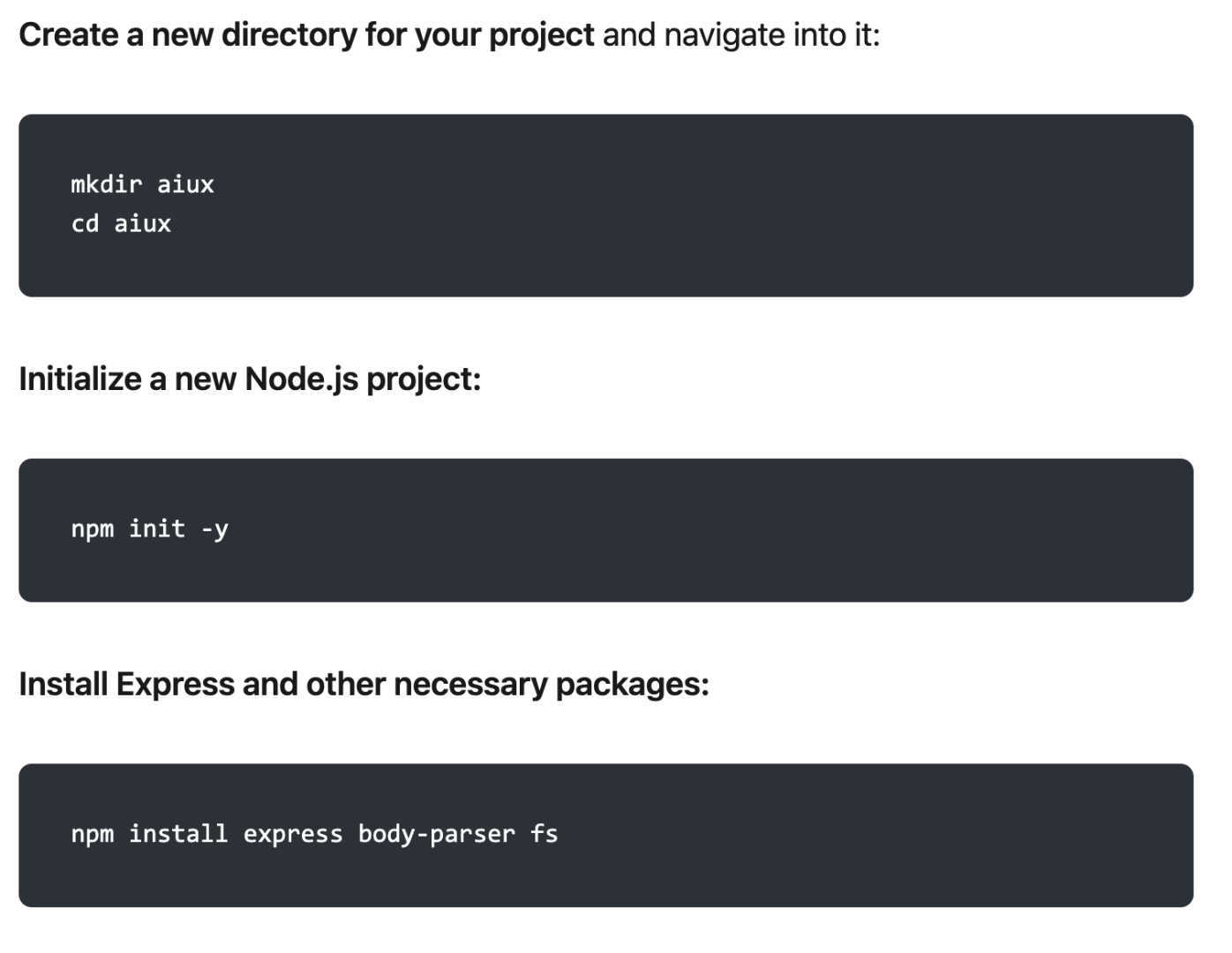

To test this out, I set up an Express server running on Node.js in the folder aiux:

I then asked GPT for an assist in writing the server.js script (this is a very simple version; the final version will be broken into separate modules). It is worth walking through it in chunks, but they are all part of the same file.

This retrieves the various packages, assigns them to variables, and also sets the port and the OpenAI API key. Check the OpenAI docs (or those of whatever platform you're using) for where that is this week.
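The configuration part of that chunk is easy to sketch. The following is a minimal, hypothetical version (not the author's code) that assumes the key lives in an `OPENAI_API_KEY` environment variable and keeps the article's default port of 3000; `loadConfig` is an illustrative name. In server.js it would sit alongside `const express = require("express");`.

```javascript
// Hypothetical sketch: read the port and API key from environment variables
// instead of hard-coding the key in server.js.
function loadConfig(env = process.env) {
  // Default to port 3000, as in the article, unless PORT is set.
  const port = Number.parseInt(env.PORT ?? "3000", 10);
  const apiKey = env.OPENAI_API_KEY;
  if (!apiKey) {
    throw new Error("OPENAI_API_KEY is not set");
  }
  if (!Number.isInteger(port) || port <= 0) {
    throw new Error(`Invalid PORT: ${env.PORT}`);
  }
  return { port, apiKey };
}
```

Keeping the key in the environment also keeps it out of source control.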

The userContent variable holds a single large prompt containing the instructions the LLM uses to build each page.

Several key points are worth mentioning here. The first part (given as an arrow function) defines how the variables passed in relate to the expected HTML output. The expressions ${articleTopic} and ${prevTopic} embed these (pre-calculated) variables into the prompt.

The next section describes a template for the page, consisting of a single article with a headline and multiple sections (note the use of *, which conventionally means zero or more), each with its own subhead. The <article> and <section> elements also include generated IDs (the prompt assumes that the LLM generates these IDs), which can be used to simplify script generation.
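A hypothetical sketch of what such a prompt builder might look like, combining the arrow function and the layout template. The wording of the instructions and placeholders such as `{generated id}` are assumptions; only the overall structure (one article, a headline, sections with subheads and generated IDs, `*` for repetition) comes from the description above.

```javascript
// Hypothetical prompt builder: embeds the current and parent topics and
// spells out the layout template the model must follow.
const userContent = (articleTopic, prevTopic) => `
Generate a complete HTML page about "${articleTopic}".
The parent topic is "${prevTopic}"; include a link back to it.
Use exactly this layout template, where * means zero or more:

<html>
  <head><link rel="stylesheet" href="/styles.css"></head>
  <body>
    <article id="{generated id}">
      <h1>{headline}</h1>
      <section id="{generated id}">
        <h2>{subhead}</h2>
        <p>{body copy}</p>
      </section>*
    </article>
  </body>
</html>

Return only the HTML.`;
```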

Images are problematic because either they have to be generated dynamically (a slow process in and of itself) or they have to reference a pre-existing set from an index. In this case, as a third way, I used the very helpful Font Awesome icon sets, which include glyphs for a wide range of potential cases. An expression in the prompt requests these glyphs, allowing the LLM to choose the appropriate one contextually.

With OpenAI's models, the icon names are effectively stored within the model. For smaller Llama models, they can be referenced via an external file that is incorporated into the model set itself.
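One way to make the icon choice more predictable, particularly with a smaller model, is to list the permitted icon names in the prompt and then check the output against the same list. This sketch assumes Font Awesome 6 class names of the form `fa-solid fa-NAME`; the icon list and the `iconInstruction` and `unknownIcons` helpers are illustrative.

```javascript
// Illustrative subset of Font Awesome icon names; a smaller local model could
// load a longer list like this from an external file.
const ALLOWED_ICONS = ["leaf", "industry", "water", "sun", "globe"];

// Prompt fragment asking the model to pick one icon per section.
const iconInstruction = (icons) =>
  `Before each section subhead, add <i class="fa-solid fa-NAME"></i>, ` +
  `where NAME is the best match from: ${icons.join(", ")}.`;

// Return any fa-solid icon names in the generated HTML that are not allowed.
function unknownIcons(html, icons) {
  const used = [...html.matchAll(/fa-solid fa-([a-z0-9-]+)/g)].map((m) => m[1]);
  return used.filter((name) => !icons.includes(name));
}
```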

The final sections of the prompt indicate how links are formed and how to include a progress bar activated when a link is selected. This helps give the impression that something is happening as new pages are generated by the LLM, though in reality, what it's doing is simply showing a progress bar for 30-40 seconds.
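A sketch of the kind of link format and progress-bar markup the prompt might request. The `prev` query parameter, the `progress` element id, and the `articleLink` helper are assumptions for illustration; `URLSearchParams` is built into Node.js and browsers.

```javascript
// Hypothetical link format: each subtopic points back to the /article route,
// carrying the current topic as the parent ("prev" is an assumed name).
function articleLink(topic, currentTopic) {
  const params = new URLSearchParams({ topic, prev: currentTopic });
  return `/article?${params.toString()}`;
}

// Markup the page could include: an indeterminate progress bar, hidden until
// the reader follows a link to another article.
const progressScript = `
<progress id="progress" hidden></progress>
<script>
  document.addEventListener("click", (event) => {
    const link = event.target.closest('a[href^="/article"]');
    if (link) document.getElementById("progress").hidden = false;
  });
</script>`;
```

A `<progress>` element without a `value` attribute renders as an indeterminate bar, which suits a wait of unknown length.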

In reality, this prompt may be one of several that are defined. OpenAI's chat API accepts system, user, and assistant messages: roughly, system messages set the overall behaviour, user messages carry the request, and assistant messages carry earlier responses that serve as context for the next one.
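A minimal sketch of how the prompt might be packaged as chat messages and sent to OpenAI's Chat Completions endpoint using the `fetch` built into Node.js 18 and later. The system message text and the `gpt-4o` model name are assumptions; the injectable `fetchImpl` parameter exists only so the function can be tested without a network call.

```javascript
// Wrap the page prompt in system and user messages (system text is assumed).
function buildMessages(prompt) {
  return [
    { role: "system", content: "You are a web page generator. Respond with HTML only." },
    { role: "user", content: prompt },
  ];
}

// Call the Chat Completions endpoint and return the generated text.
async function generatePage(prompt, apiKey, fetchImpl = globalThis.fetch) {
  const response = await fetchImpl("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    // The model name is an assumption; use whichever model you have access to.
    body: JSON.stringify({ model: "gpt-4o", messages: buildMessages(prompt) }),
  });
  if (!response.ok) {
    throw new Error(`OpenAI API error: ${response.status}`);
  }
  const data = await response.json();
  // The generated text is in the first choice's message content.
  return data.choices[0].message.content;
}
```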

The middleware is broken into two parts. The first, the app.get('/article') handler, generates the article itself, parses the topic query-string parameter, and determines the parent topic so that readers can climb back up the hierarchy.
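Inside the Express handler, `req.query.topic` already provides the parsed parameter. To keep the sketch runnable without Express, this hypothetical version parses the raw URL with Node's built-in `URL` class; the `prev` parameter name and the `"Home"` defaults are assumptions.

```javascript
// Hypothetical query handling for the /article route.
function parseArticleRequest(requestUrl) {
  // A base URL is needed because request URLs are relative paths.
  const { searchParams } = new URL(requestUrl, "http://localhost");
  const topic = searchParams.get("topic") || "Home";
  // The parent topic lets the generated page link back up the hierarchy.
  const prevTopic = searchParams.get("prev") || "Home";
  return { topic, prevTopic };
}
```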

When OpenAI returns HTML content, it typically wraps it in a fenced block tagged with the language, along the lines of """html <html>...</html>""" (usually a Markdown code fence using backticks rather than quotes). When data is retrieved from the API, you should first test that valid content was returned (I didn't in this example, but the CSS handler below shows how that would look), then extract the relevant HTML, set the output content type to "text/html", and send the content.
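A minimal, hypothetical extraction step: match either a backtick fence or a triple-double-quote fence tagged `html`, take its contents, and reject output that doesn't contain an `<html>` element. The regular expression uses `{3}` quantifiers rather than literal fence characters.

```javascript
// Hypothetical helper: return the HTML inside a fenced reply, or null if the
// reply does not look like a page.
function extractHtml(reply) {
  if (typeof reply !== "string") return null;
  // Matches three backticks or three double quotes, the tag "html", then the body.
  const fenced = reply.match(/(?:`{3}|"{3})html\s*([\s\S]*?)(?:`{3}|"{3})/i);
  const html = (fenced ? fenced[1] : reply).trim();
  // Treat anything without an <html> element as invalid output.
  return /<html[\s>]/i.test(html) ? html : null;
}
```

In the route handler, a `null` result could be answered with an error status (for example `res.status(502)`) instead of the page; otherwise `res.set("Content-Type", "text/html")` followed by `res.send(html)` sends it.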

The CSS file handler shows how you would serve other key resources. Here, the routine reads styles.css from the same directory as server.js and sends it to the client. You should obviously generalize this.
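Generalizing the handler mostly means mapping a requested file name to a file inside a known directory, choosing a content type, and refusing paths that escape that directory. A hypothetical sketch using only `node:path` (the `CONTENT_TYPES` table and `resolveStatic` are illustrative); the resolved path would then be read with `fs.readFile` as in the original CSS handler.

```javascript
const path = require("node:path");

// Illustrative content types for the resources a generated page might request.
const CONTENT_TYPES = { ".css": "text/css", ".js": "text/javascript", ".svg": "image/svg+xml" };

// Map a requested file to a path inside baseDir, or null if it is not allowed.
function resolveStatic(baseDir, requested) {
  const root = path.resolve(baseDir);
  const fullPath = path.resolve(root, requested);
  // Reject paths such as "../server.js" that leave the public directory.
  if (!fullPath.startsWith(root + path.sep)) return null;
  const type = CONTENT_TYPES[path.extname(fullPath)];
  return type ? { fullPath, type } : null;
}
```

In practice, Express's built-in `express.static` middleware already does this, so `app.use(express.static(path.join(__dirname, "public")))` may be all you need.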

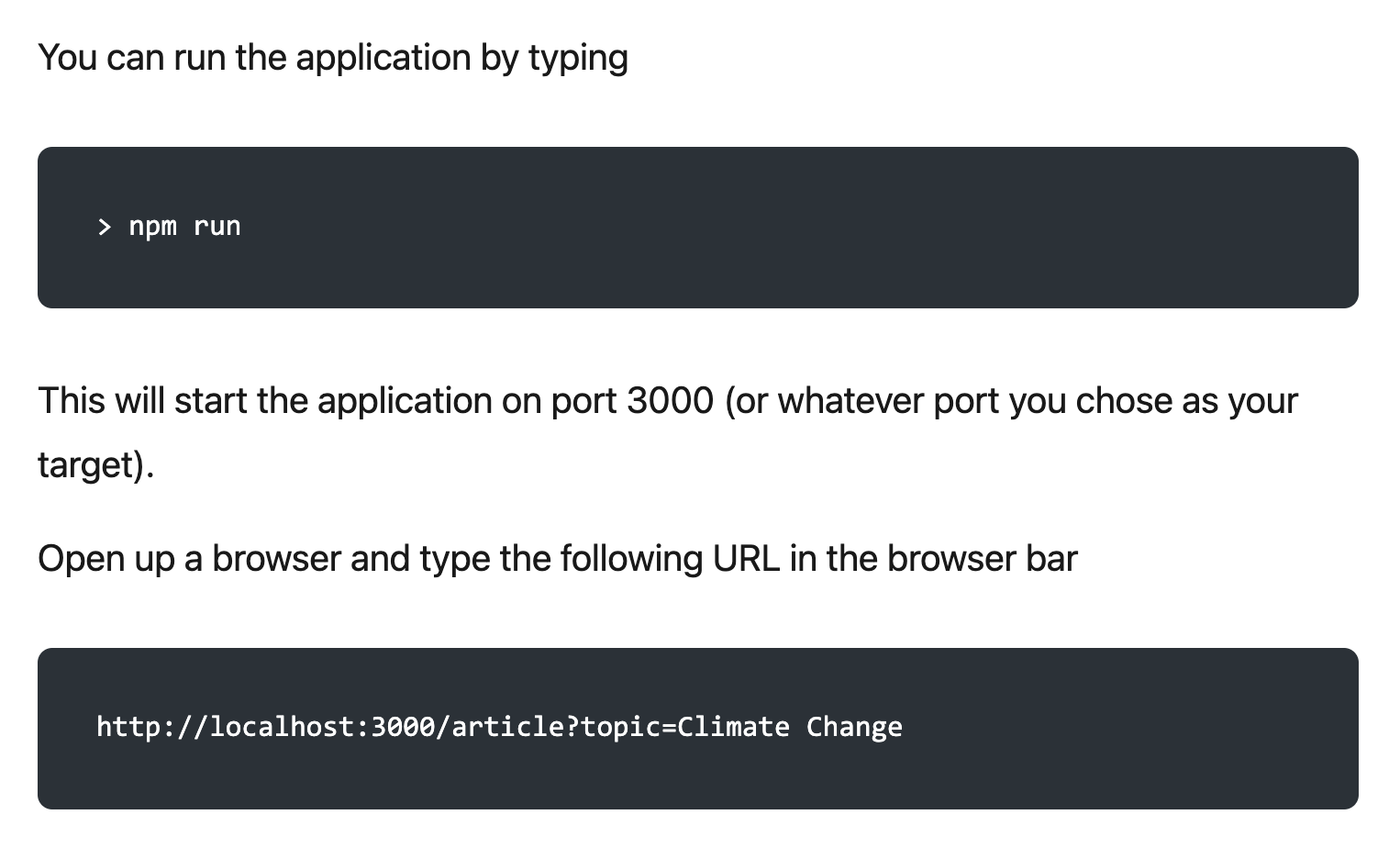

Finally, the Express app is told to listen on the given port (here 3000, which can be changed via the PORT constant), and there you have it: a simple HTML-based AI web app.

The CSS styles.css file itself is very straightforward:

Note that it is only possible to do this consistently because the HTML layout template was specified beforehand. It took me a few tries to realize this: without the template, the chat engine was unpredictable in how it generated HTML, sometimes using articles and sections, sometimes using divs, and so on. Even if the chat engine is generating things like JavaScript code, specifying some underlying structure is VERY important if you want consistency.
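Because the template makes the output predictable, it can also be checked. A hypothetical sketch: a cheap regular-expression test for an `<article>` with an id and at least one `<section>` with an id, plus a retry wrapper. Regular expressions are not an HTML parser, so this is a sanity check rather than validation, and since each retry costs another full generation, the attempt count should stay small. `followsTemplate` and `generateConforming` are illustrative names.

```javascript
// Check that generated HTML roughly follows the requested template.
function followsTemplate(html) {
  const hasArticle = /<article\b[^>]*\bid="[^"]+"/i.test(html);
  const sectionCount = (html.match(/<section\b[^>]*\bid="[^"]+"/gi) || []).length;
  return hasArticle && sectionCount > 0;
}

// Call generate() until it returns conforming HTML or attempts run out.
async function generateConforming(generate, maxAttempts = 3) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const html = await generate();
    if (html && followsTemplate(html)) return html;
  }
  throw new Error(`No conforming page after ${maxAttempts} attempts`);
}
```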

The result is an application that will generate a page on any topic, including links to subordinate topics that you can use to drill down.

Then go get a cup of coffee. Yes, this will take about thirty seconds, which is unacceptable in a modern app, but I expect there are ways to optimize this.

This will create a new web page explicitly devoted to climate change, generated by the AI engine.

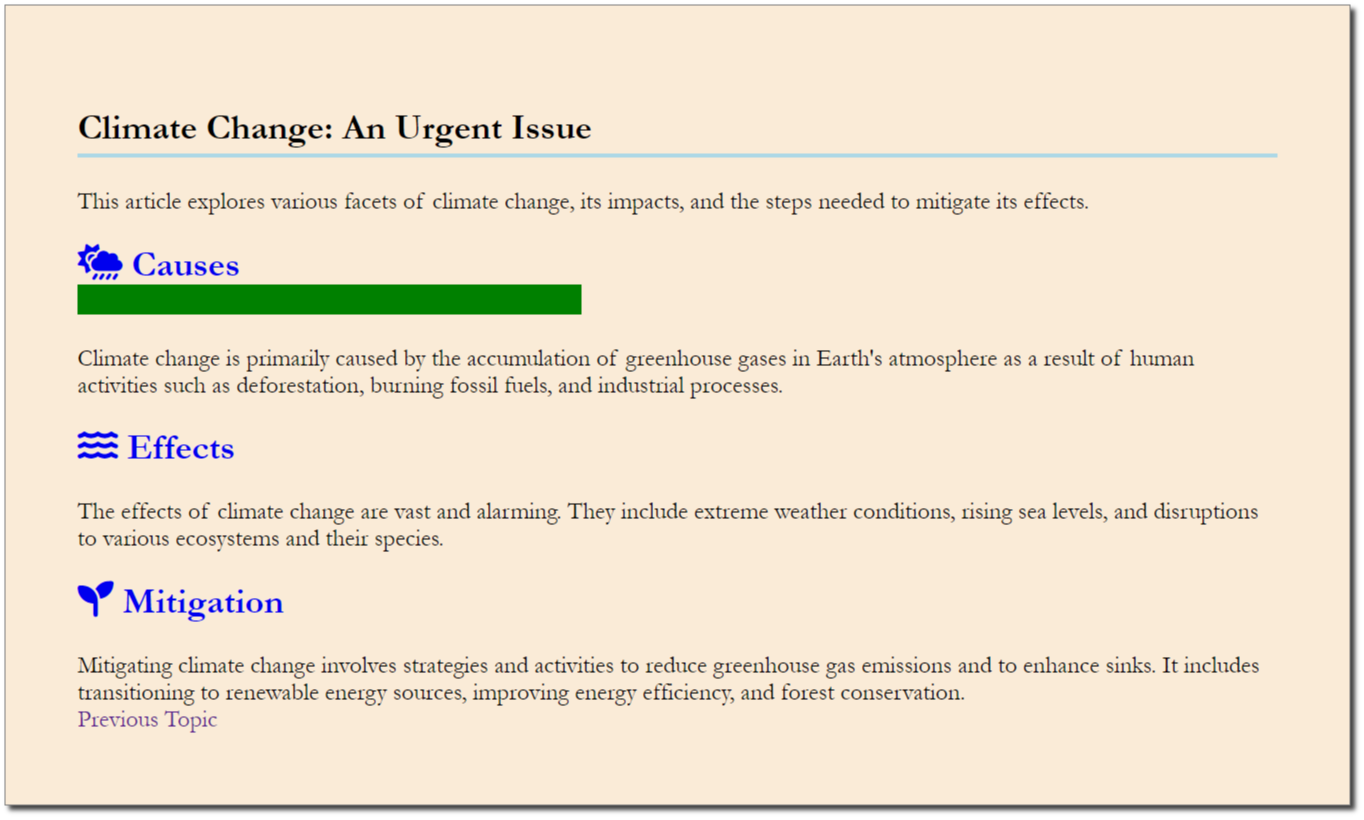

Notice several things. First, CSS is fully functional, allowing for different fonts, rules, drop shadows, and everything else you might expect in a modern web page. Beyond that, the Font Awesome icons are surprisingly spot-on, with a factory, what I think is water, and a leaf being chosen for causes, effects, and mitigation strategies. Finally, you can clearly see links. When you click on the first header (Causes of Climate Change), you will get a progress indicator (the image is slightly different because titles may vary from run to run):

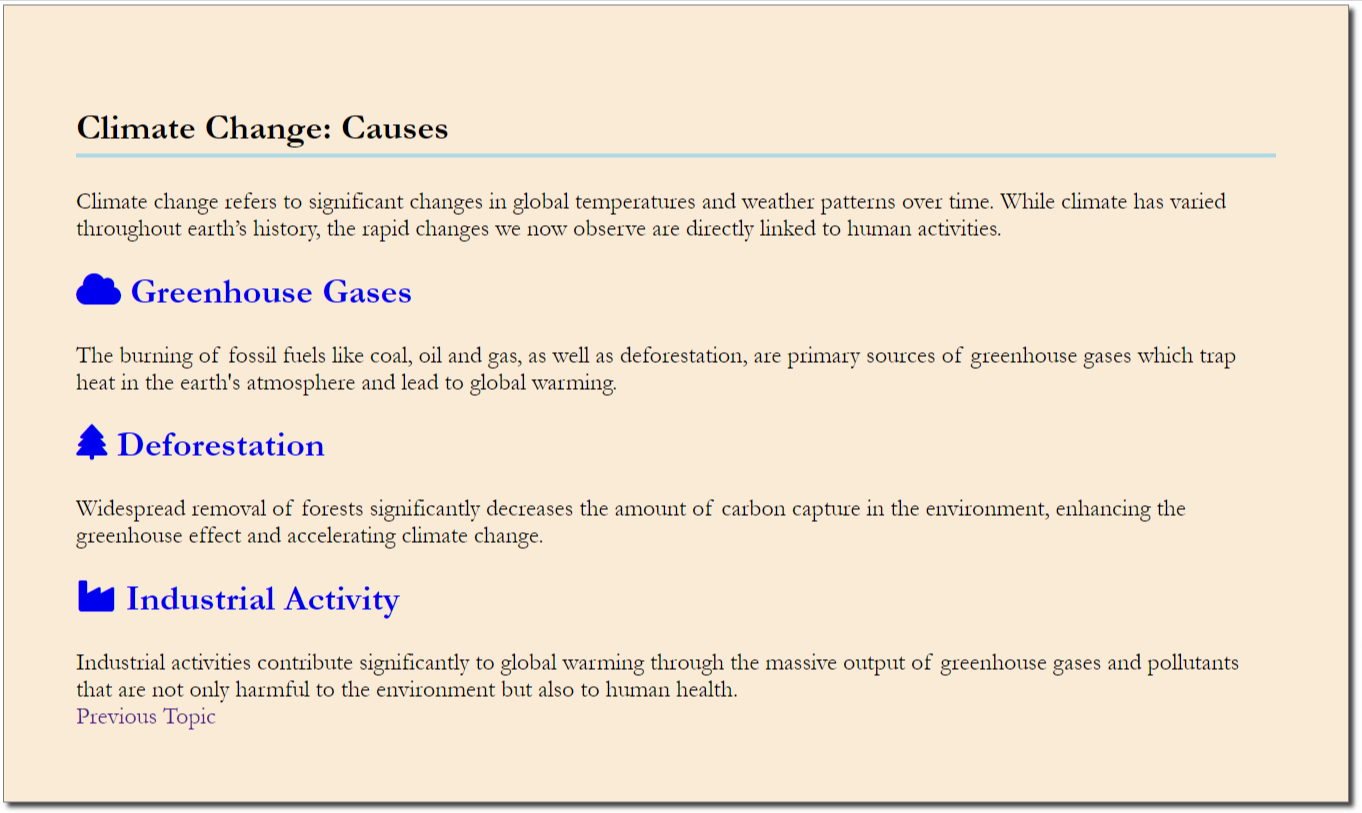

Eventually (maybe after a bio break), a new page will be generated:

You can continue drilling down as far as you want, though because the context is determined primarily by the label (rather than by the label and body copy), the site can realistically only be drilled down about four levels before it becomes repetitive.

The HTML that is generated for this last page is worth showing as well:

The template structure captured here includes generated links, scripts to support interactive elements, and more.

This proof of concept is deliberately kept simple to illustrate the point rather than showcase a commercial application. However, the fact that it can be done at all has some exciting implications for UIUX in the age of AI.

We're about to see a profound shift in how we design and build applications. Until now, most web content has been database-driven: you create a template, then assign values stored in a database to populate specific variables, usually via a server-side framework such as those built on Node.js.

Here, the content is generated, but significantly, so is the underlying framework. You can create prompts that determine, based upon the type of content requested, how to change layouts, which links should be stressed or eliminated (perhaps based upon the role assumed by the AI engine itself or by the user), what images or videos to display (and even generate those images), and so forth, all by providing guidelines rather than complex algorithms.

The Font Awesome examples are illustrative here: the LLM was able to identify appropriate decorations based on the topic at hand without specific human intervention. Microsoft PowerPoint uses a similar technique with its AI Designer capability, which selects and incorporates icons appropriate to the theme and specific bullet points of a presentation. This kind of contextual selection is only really practical with AI.

I especially see a powerful combination emerging between AI-based formatting and the HTMX library, which makes it possible to create dynamic links that update blocks within a page without extensive, specialized coding. In essence, AI + HTMX + CSS can handle much of what has traditionally required extensive JavaScript intermediation.
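As a rough illustration, here is a hypothetical link generator that uses HTMX to replace a single section in place rather than loading a whole new page. `hx-get`, `hx-target`, and `hx-swap` are standard HTMX attributes; the `/section` route and the `htmxSectionLink` helper are assumptions, and the plain `href` remains as a fallback when HTMX is not loaded.

```javascript
// Build a link that swaps one section via HTMX, falling back to a full page.
function htmxSectionLink(label, topic, sectionId) {
  const params = new URLSearchParams({ topic });
  return (
    `<a href="/article?${params}" hx-get="/section?${params}" ` +
    `hx-target="#${sectionId}" hx-swap="outerHTML">${escapeHtml(label)}</a>`
  );
}

// Minimal HTML escaping for the visible link text.
function escapeHtml(text) {
  return String(text).replace(/[&<>"']/g, (c) =>
    ({ "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&#39;" })[c]
  );
}
```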

This will impact how we design applications: fewer static layouts and more journeys of discovery. This, in turn, implies the need for a different methodology for UIUX, especially with generated imagery, audio, and 3D components able to draw not only from static content but also from generated content. Right now, most of the AI in UIUX tends to focus primarily on updating content and preserving the prompt/response pattern of chatbots. I see this changing as we discover, as with any new medium, ways of creating novel interfaces that were impossible before.

The flip side is that, both editorially and design-wise, the new UIUX involves setting constraints and limits on what can be done rather than constructing web content directly. Automatic website generation is nothing new, nor is its use by bad actors. As with any publishing system, you still need to curate your sources (even if they get fed into a company-wide LLM), provide semantic structure, and maintain consistent editorial and design control.

However, what has changed in this kind of development is that you can use your content more flexibly to get reasonable output in a presentation format that moves beyond most simple chatbots. In the previous example, it would be easy enough to add a topic field that could be used to ask any question within the context already discussed. It would be just as easy to pass in a user key that lets the application track and maintain session information for the LLM, so that questions can be much more targeted.
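The chat API itself is stateless, so session tracking would live on the server. A hypothetical sketch: a per-user history keyed by a user key, capped at a fixed number of messages and replayed ahead of each new question. `createSessionStore` and its methods are illustrative names, and an in-memory `Map` would need replacing with real storage in production.

```javascript
// Hypothetical per-user conversation store keyed by a user key.
function createSessionStore(maxMessages = 10) {
  const sessions = new Map(); // userKey -> array of { role, content }
  return {
    // Build the message list for a new question, including prior turns.
    messagesFor(userKey, question) {
      const history = sessions.get(userKey) || [];
      return [...history, { role: "user", content: question }];
    },
    // Record a completed exchange, keeping only the most recent messages.
    record(userKey, question, answer) {
      const history = sessions.get(userKey) || [];
      history.push({ role: "user", content: question }, { role: "assistant", content: answer });
      sessions.set(userKey, history.slice(-maxMessages));
    },
  };
}
```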

Similarly, you can instruct LLMs to generate forms that request only what is needed from the user, rather than designing detailed forms in which many fields are irrelevant. Users can specify whether they want a form or a chat-style interaction for entering content, and you can mix and match statically and dynamically generated imagery and, ultimately, video content without spending a significant amount of time on detailed design work for sections that get very little traffic.
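One cautious way to do this is to have the LLM return a JSON list of the fields it needs and render that list on the server, rather than emitting raw form HTML. The field schema (`name`, `label`, `type`, `required`) and the `renderForm` helper are assumptions for illustration; fields with unexpected types or names are dropped.

```javascript
// Input types the renderer will accept from the model's field list.
const ALLOWED_TYPES = new Set(["text", "email", "number", "date"]);

// Render a hypothetical JSON field list as an HTML form.
function renderForm(fieldsJson, action) {
  const fields = JSON.parse(fieldsJson);
  const rows = fields
    .filter((f) => ALLOWED_TYPES.has(f.type) && /^[a-z][a-z0-9_]*$/i.test(f.name))
    .map(
      (f) =>
        `<label>${escapeHtml(f.label)} <input type="${f.type}" name="${f.name}"` +
        `${f.required ? " required" : ""}></label>`
    );
  return `<form method="post" action="${escapeHtml(action)}">${rows.join("")}<button>Submit</button></form>`;
}

// Minimal HTML escaping for labels and attribute values.
function escapeHtml(text) {
  return String(text).replace(/[&<>"']/g, (c) =>
    ({ "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&#39;" })[c]
  );
}
```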

The most significant limiting factor in all of this right now is performance. There's a reason why ChatGPT's client uses Markdown: the AI interfaces are just too slow if you need to make use of HTML, HTMX or similar output. That will likely change, especially as you get smaller, more targeted LLMs coupled with more powerful GPUs.
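Short of faster models, one partial mitigation is to avoid regenerating pages that have already been produced, for example when a reader climbs back up the hierarchy. A hypothetical in-memory cache keyed by normalized topic, with an expiry time; it stores the pending promise so that concurrent requests for the same topic share one generation. It does nothing for the first visit to a topic, and `createPageCache` is an illustrative name.

```javascript
// Hypothetical page cache: topic -> pending or completed generation.
function createPageCache(ttlMs = 60 * 60 * 1000, now = Date.now) {
  const entries = new Map(); // key -> { promise, createdAt }
  return function getPage(topic, generate) {
    const key = topic.trim().toLowerCase();
    const hit = entries.get(key);
    if (hit && now() - hit.createdAt < ttlMs) return hit.promise;
    // Store the pending promise so concurrent requests share one generation.
    const promise = Promise.resolve().then(() => generate(topic));
    entries.set(key, { promise, createdAt: now() });
    // Drop failed generations so the next request can retry.
    promise.catch(() => {
      if (entries.get(key)?.promise === promise) entries.delete(key);
    });
    return promise;
  };
}
```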

Regardless, the user interface world will likely undergo a profound metamorphosis in the next few years as we move from building interfaces to suggesting them. As data becomes ever more complex, this will be an essential requirement because when you have hundreds or even thousands of different types of objects that you're dealing with, the manual creation of user interfaces just cannot keep up.

Copyright 2024. Kurt Cagle/The Cagle Report

Kurt is the founder and CEO of Semantical, LLC, a consulting company focusing on enterprise data hubs, metadata management, semantics, and NoSQL systems. He has developed large scale information and data governance strategies for Fortune 500 companies in the health care/insurance sector, media and entertainment, publishing, financial services and logistics arenas, as well as for government agencies in the defense and insurance sector (including the Affordable Care Act). Kurt holds a Bachelor of Science in Physics from the University of Illinois at Urbana–Champaign.
