
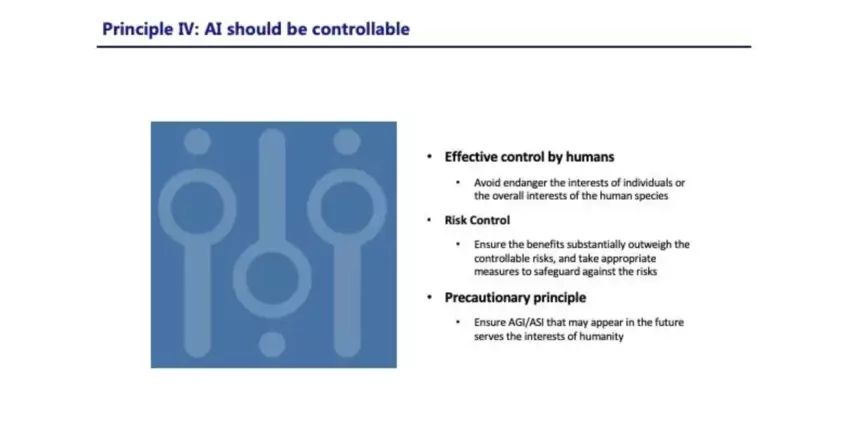

Artificial superintelligence (ASI) has the potential to be incredibly powerful, and it raises many questions about how we can manage it appropriately.

Many people are worried that machines will break free from their shackles and go rogue.

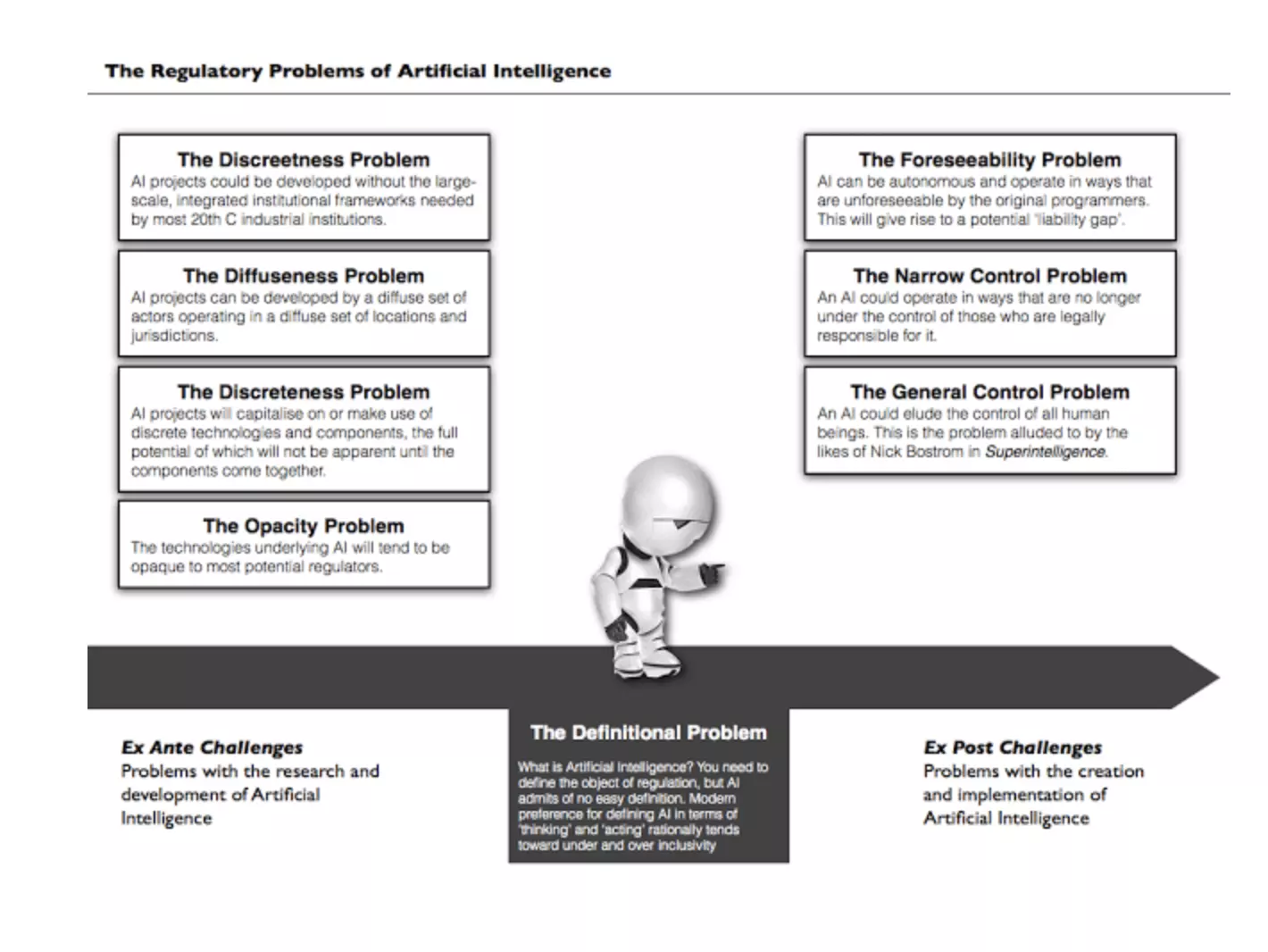

Source: John Danaher

The Three Laws of Robotics, first introduced in Isaac Asimov's 1942 short story “Runaround,” are as follows:

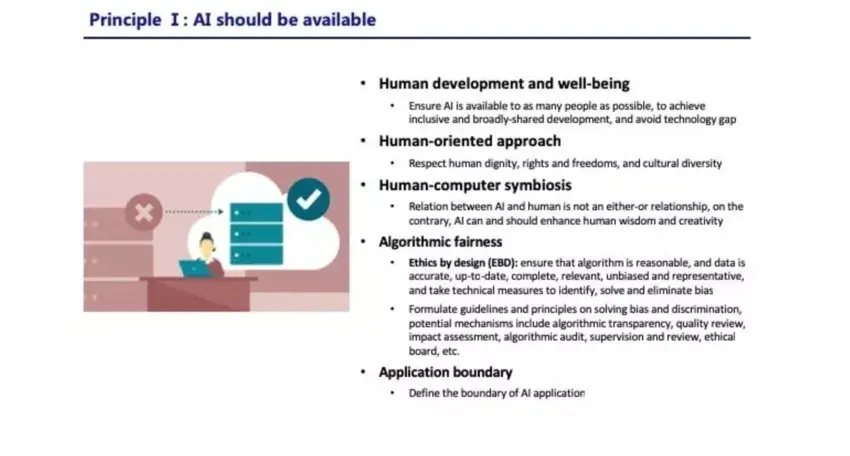

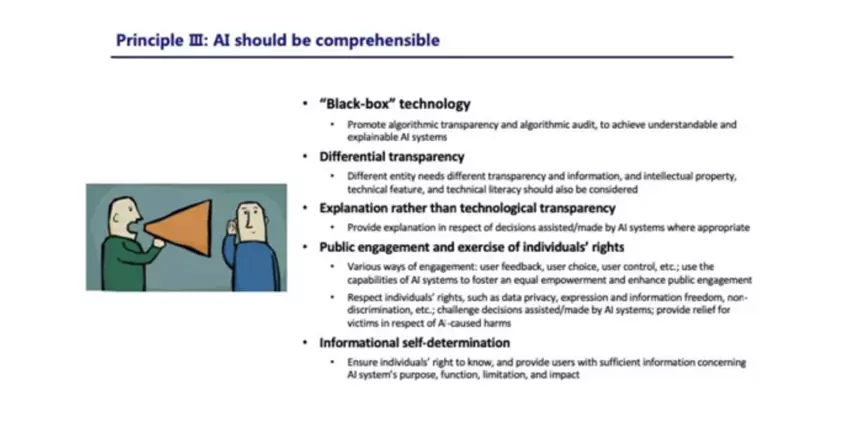

Organizations should be required to provide their customers with information concerning the AI system’s purpose, function, limitations and impact.

Source: Tencent

In order to develop a comprehensible AI, public engagement and the exercise of individuals’ rights should be guaranteed and encouraged. AI development should not be a secret undertaking by commercial companies. The public as end users may provide valuable feedback which is critical for the development of high-quality AI.

Every innovation comes with risks. But we should not let worries about the extinction of humanity by artificial general intelligence prevent us from pursuing a better future with new technologies. What we should do is to make sure that the benefits of AI substantially outweigh the potential risks. To achieve that, we must establish appropriate precautionary measures to safeguard against foreseeable risks.

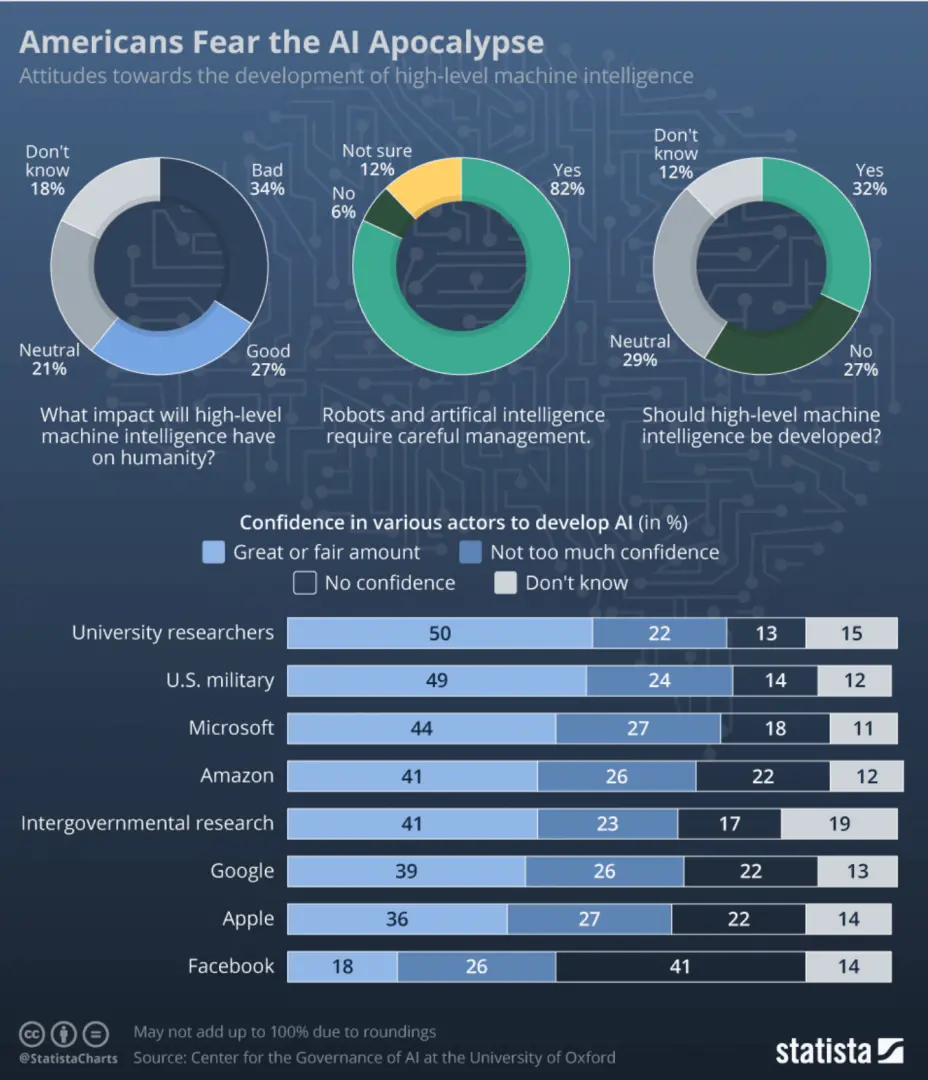

Source: Statista

In 2016, Microsoft suffered a PR nightmare when it launched an AI chatbot named Tay on Twitter and caused immediate controversy.

Designed to mimic the language of a 19-year-old woman, Tay used machine learning and adaptive algorithms to scour Twitter for conversations. The chatbot soon began posting racist messages, and the experiment quickly backfired.

Sophia, the social humanoid robot developed by Hanson Robotics, made headlines for one particularly ominous remark she made while being questioned by her maker.

“Do you want to destroy humans?” Dr David Hanson asked, before realising the error of his ways and quickly adding “please say no.”

Sophia paused for a moment and her eyes narrowed.

“Okay, I will destroy humans,” she chillingly proclaimed.

Sophia uses machine learning algorithms to converse with people. Made to look lifelike, she has 50 facial expressions and can remember faces by using the cameras in her eyes. She catches the gaze of those quizzing her on everything from women’s rights to the nature of religion, replying with her own learned take on things.

Elon Musk and Mark Zuckerberg have publicly clashed over artificial intelligence.

Musk warns that the rapid development of artificial intelligence (AI) poses an existential threat to humanity, while Zuckerberg counters that such concerns are overblown.

Stephen Hawking also warned that superintelligent AI could wipe the slate clean for humanity.

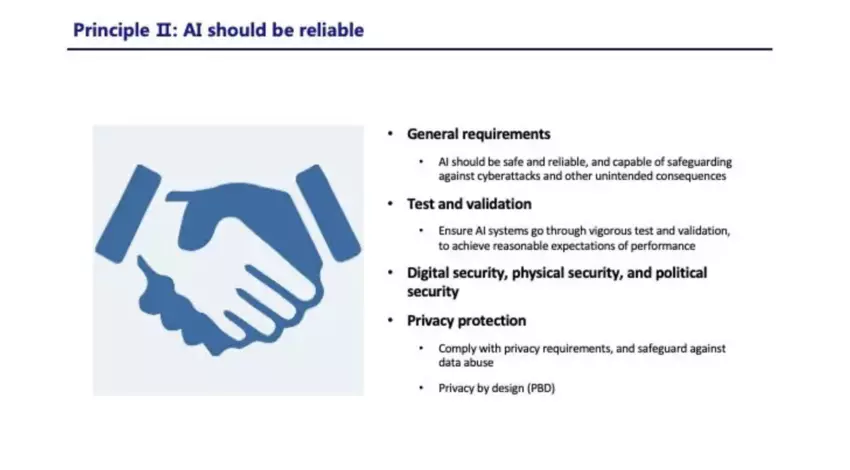

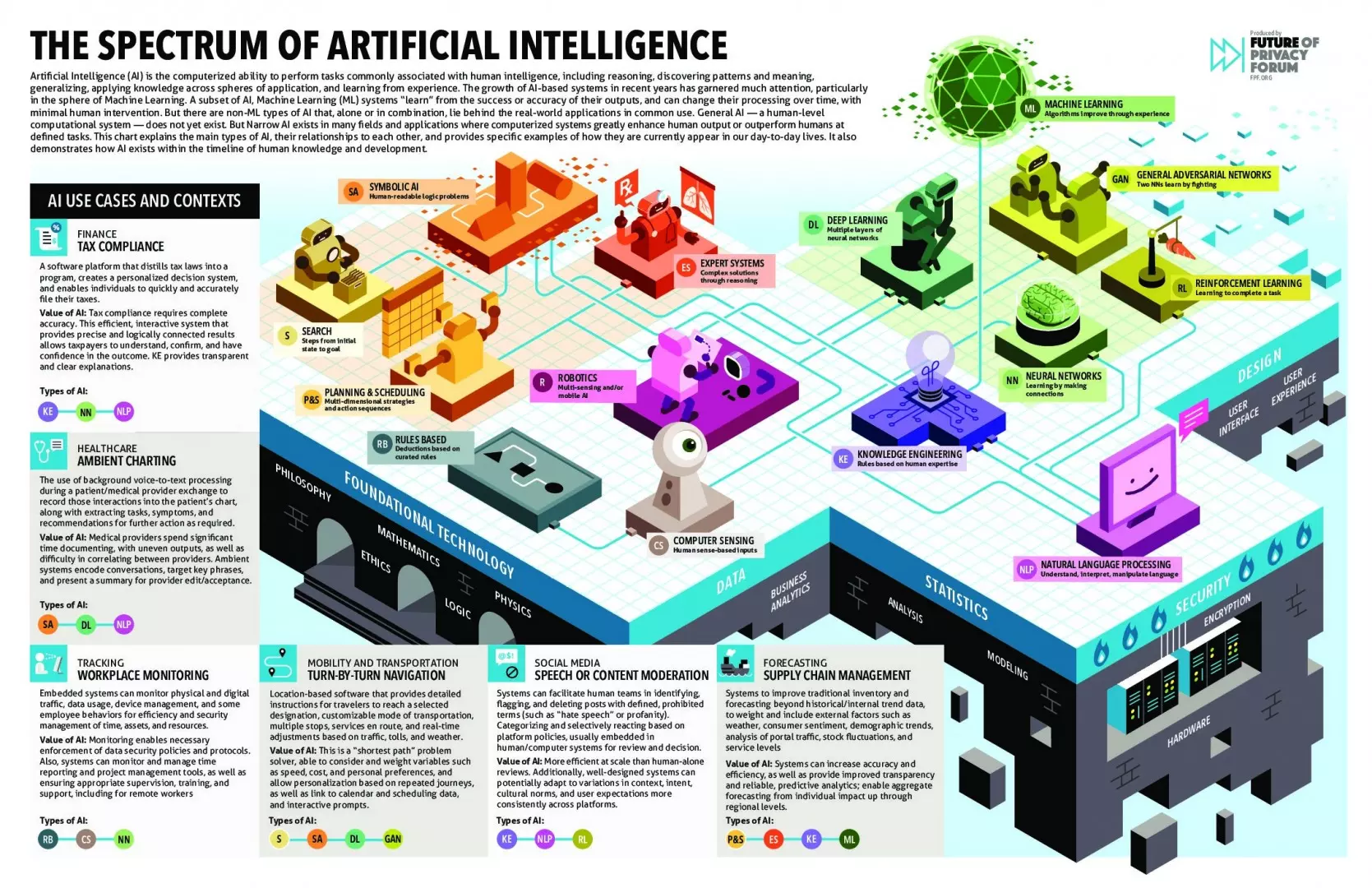

Source: The Future of Privacy Forum

The most obvious way to keep a superintelligent AI from getting ahead of us is to limit its access to information by preventing it from connecting to the internet. The problem with limiting access to information, though, is that it would make any problem we assign the AI more difficult for it to solve. We would be weakening its problem-solving promise, possibly to the point of uselessness.

The second approach is to limit what a superintelligent AI is capable of doing by programming certain boundaries into it.

Should we risk our safety in exchange for the possibility that AI will solve problems we can’t?

Scientists have stated that it is impossible for any containment algorithm to simulate the AI’s behavior and predict with absolute certainty whether its actions might lead to harm.

The algorithm could fail to correctly simulate the AI’s behavior or accurately predict the consequences of the AI’s actions and not recognize such failures.
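One way to get a feel for why a perfect containment check is so hard is a self-reference argument in the style of the halting problem. The toy sketch below is only an illustration and assumes this framing matches the scientists' reasoning. The names `Program`, `Checker`, `make_contrarian` and `checker_is_wrong` are hypothetical. A "program" is modeled as a function that returns `True` if it ends up causing harm, and a "checker" is any function that claims to predict that outcome in advance.

```python
from typing import Callable

# Hypothetical toy model: a program returns True if it causes harm.
Program = Callable[[], bool]
# Hypothetical containment check: claims to return True iff the program is harmful.
Checker = Callable[[Program], bool]


def make_contrarian(checker: Checker) -> Program:
    """Build a program that asks the checker about itself and does the opposite."""

    def contrarian() -> bool:
        # If the checker predicts harm, behave safely; if it predicts safety, cause harm.
        return not checker(contrarian)

    return contrarian


def checker_is_wrong(checker: Checker) -> bool:
    """Return True if the checker mispredicts the contrarian program built from it."""
    program = make_contrarian(checker)
    predicted_harm = checker(program)
    actual_harm = program()
    return predicted_harm != actual_harm


if __name__ == "__main__":
    # Two simple checkers: one flags everything as harmful, one flags nothing.
    print(checker_is_wrong(lambda program: True))
    print(checker_is_wrong(lambda program: False))

    # A checker that tries to simulate the program never settles on an answer:
    # the program calls the checker, which runs the program, and so on.
    try:
        checker_is_wrong(lambda program: program())
    except RecursionError:
        print("simulation never finished")
```

In this toy setting, any checker that returns an answer is wrong about the contrarian program built from it, and a checker that simply runs the program never finishes. Real AI systems are far more complex, so this is a picture of the logical obstacle rather than a model of an actual containment system.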

Although it may not be possible to control a superintelligent artificial general intelligence, it should be possible to control a superintelligent narrow AI, one specialized for certain functions rather than capable of a broad range of tasks as humans are.
