The study of the recursive logistic map, tracing back to Robert May and Edward Lorenz, unveils a fascinating realm of computational dynamics and emergent behavior.

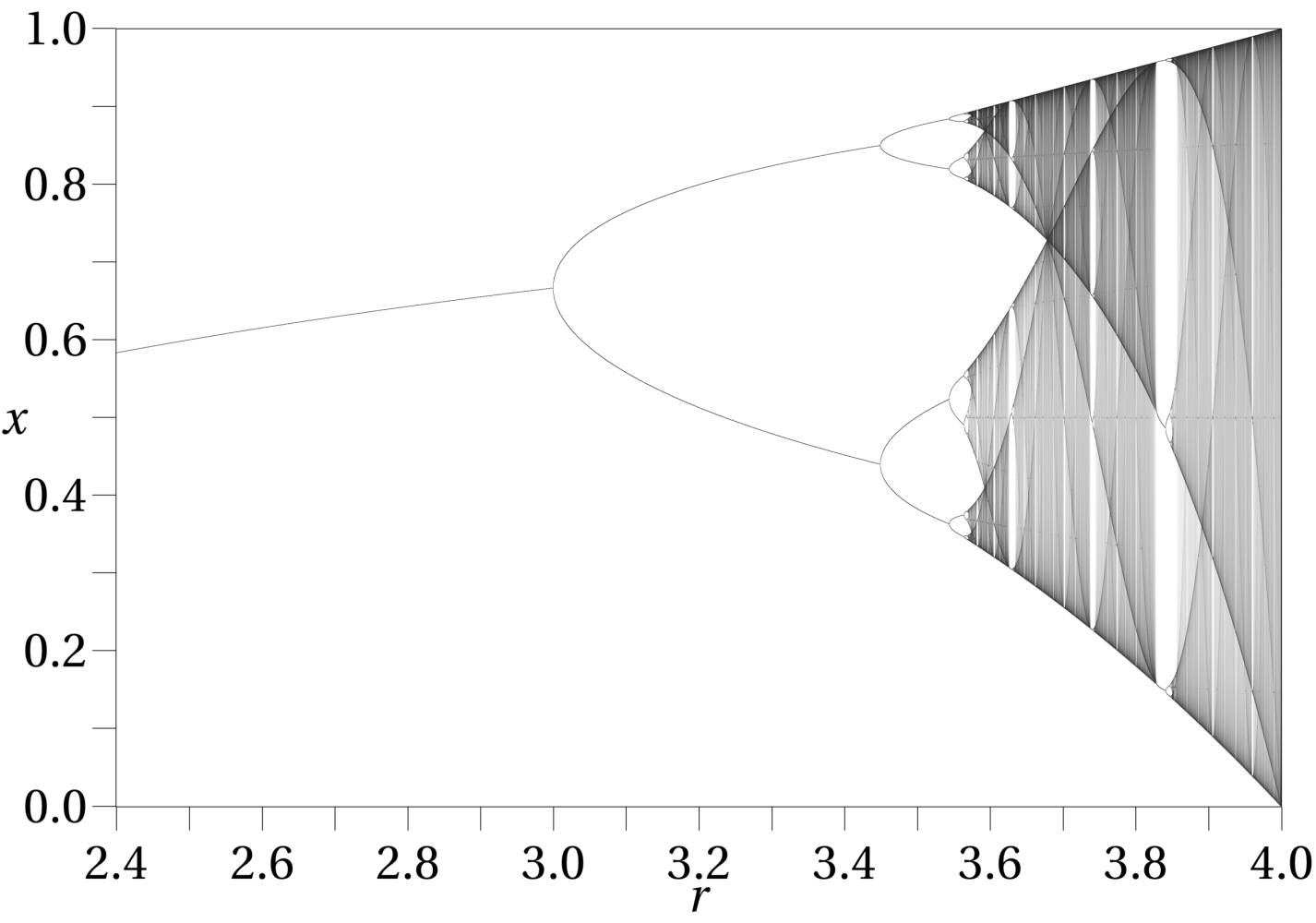

One of my favorite all-time graphs (okay, I'm a graph nerd, sue me) is the recursive logistic map (also called the bifurcation map).

This map was popularized by Robert May in the 1970s, based upon work by mathematician and meteorologist Edward Lorenz in the 1960s. (The logistic curve itself goes much farther back, to Pierre François Verhulst in the 1830s.)

The map describes the equation

x[n+1] = r * x[n] * (1 - x[n]).

For r less than about 3.0, when n gets large, x[n] converges to a single point, but as r increases above 3, the map bifurcates to two values, and around 3.45 it bifurcates to four values, and so forth.
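The behavior described above can be checked numerically. The following is a minimal Python sketch, not a definitive implementation: the function name `logistic_attractor` and its parameters (`x0`, `transient`, `samples`, `digits`) are illustrative assumptions, and rounding to a fixed number of digits is just a convenient way to count the distinct long-run values.

```python
def logistic_attractor(r, x0=0.5, transient=2000, samples=64, digits=4):
    """Return the sorted distinct long-run values of x[n+1] = r*x[n]*(1 - x[n]).

    All parameter names and defaults are illustrative assumptions.
    """
    x = x0
    # Throw away the early iterations so only the long-term behavior remains.
    for _ in range(transient):
        x = r * x * (1.0 - x)
    # Collect the values the orbit keeps visiting, rounded so that
    # floating-point noise does not create spurious "extra" values.
    seen = set()
    for _ in range(samples):
        x = r * x * (1.0 - x)
        seen.add(round(x, digits))
    return sorted(seen)


for r in (2.8, 3.2, 3.5):
    values = logistic_attractor(r)
    print(f"r = {r}: {len(values)} long-run value(s) -> {values}")
```

For these three sample values of r, the count of long-run values should come out as one, two, and four respectively, matching the single point, the first bifurcation, and the second bifurcation.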

What's notable is that at various points, there are voids in which everything seems to collapse back down to just a few values (the widest of these voids, near r = 3.83, settles into a three-point cycle), only to repeat the pattern in a more compressed fashion as r increases.

This is, in effect, a discrete version of the logistic curve, which appears quite frequently in population dynamics, economic theory, and other forms of systems theory. It is closely related to the Lotka-Volterra equations that describe interactive systems, initially predator-prey models but now used heavily in any number of scenarios.

The bifurcation map is important because it says that whenever you have interactive systems (which, by definition, are most of them), behaviour over the long term will tend to approach a specific value, but only if the system's level of complexity (r) isn't too large (in other words, the population is stable). However, once the amount of energy in the system becomes too large, bifurcation occurs, and the system oscillates between two values. Increase the energy more, and the system bifurcates again to oscillate between four values, and so forth. Eventually, this process gets to the point where the states of stability become chaotic, with just a slight nudge shifting from one state to the next, meaning that initial conditions become extremely important. This sensitivity to initial conditions, which Edward Lorenz first documented, is the core of what came to be called chaos theory.
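The point about initial conditions can be illustrated with a small experiment: run the map twice from starting values that differ by a tiny nudge and track how far apart the two runs drift. This is a rough sketch under stated assumptions; the helper names `logistic_orbit` and `max_gap`, the nudge size, the step count, and the sample values of r are all chosen for illustration only.

```python
def logistic_orbit(r, x0, steps):
    """Iterate x[n+1] = r*x[n]*(1 - x[n]) and return the whole orbit as a list."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def max_gap(r, x0, nudge=1e-10, steps=100):
    """Largest distance between two orbits whose starting points differ by `nudge`.

    `nudge` and `steps` are illustrative assumptions, not canonical values.
    """
    a = logistic_orbit(r, x0, steps)
    b = logistic_orbit(r, x0 + nudge, steps)
    return max(abs(p - q) for p, q in zip(a, b))


# r = 2.8 sits in the stable, single-value regime; r = 3.9 sits in the chaotic one.
print("stable  r = 2.8:", max_gap(2.8, 0.2))
print("chaotic r = 3.9:", max_gap(3.9, 0.2))
```

In the stable regime the two runs stay essentially indistinguishable, while in the chaotic regime the same tiny nudge grows until the runs bear no resemblance to each other.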

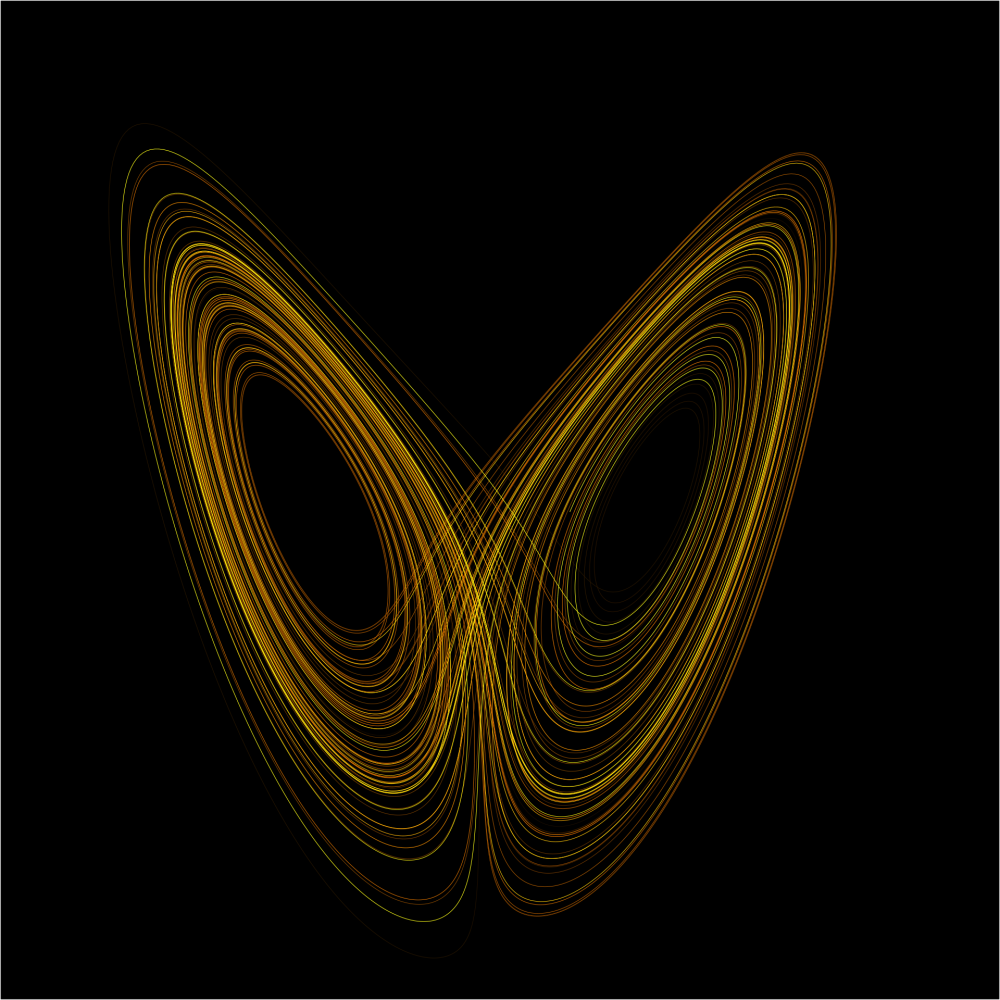

If you plot the trajectory of a chaotic system such as Lorenz's weather model in its phase space, what emerges is an interesting picture, a roughly butterfly shape called a strange attractor.

This attractor isn't a curve. Instead, the points are laid down in a seemingly random cloud, but with a sufficient number of points, the curves emerge. This is significant because it says that chaos is not truly random. Indeed, chaos comes about when information is contained in a complex network of interacting components.

What's as significant is that in the bifurcation map, there comes a point where you seem to hit infinite bifurcations, and then suddenly, most of these bifurcations disappear. In other words, such bifurcation is fractal, where you have similar structures at different scales. Benoit Mandelbrot identified this phenomenon in his ground-breaking book The Fractal Geometry of Nature as self-similarity.

This means mathematically that you can take one branch of a bifurcation, perform a similarity transformation on it (scale it, rotate it, translate it, etc.), and you will get something that looks very similar to the larger map. In the real world, that similarity is not identical even with transformations because there are different forces at work at different scales. Still, you can argue that Nature tends to reuse a blueprint when possible once she finds a blueprint she likes. :-)

There are still arguments about what exactly r represents. I contend that it is related to energy in the system and that when bifurcation collapse occurs, what you are seeing is a phase transition. However, that is my own (very non-mathematical) interpretation, and there are others. It may represent a loss of apparent entropy, as the number of states that a system can be in drops dramatically.

This, in turn, raises the other perplexing question about how much of entropy is stochastic (probability driven) and how much is in fact chaotic (fractally driven). Again, purely as speculation, this is when self-organization occurs - when a system reaches a point where what had been seemingly disconnected actors become cohesive as a distinct unit - when it becomes holonic.

A good example of this is the distinction between people working independently of one another and people working together in an organized fashion (an organization). When does this process actually occur? Usually, organizations start when a critical mass of people get together to accomplish a specific objective. They pool resources, and each person specializes in accomplishing specific tasks (they take on roles).

At some point they declare their organization as being a specific entity - they collectively have become a single holon - gaining certain advantages and protections in the process. Apparent complexity has collapsed into a much simpler (albeit not very powerful) higher-order entity. This is not abstraction - which in general means identifying the characteristics of a given class that can be used as a template for other classes and entities for those classes - but rather is the development of a new level of simplicity from a seemingly chaotic environment.

The same can be seen in other complex systems: a eukaryotic cell is a holonic entity containing components that were once much simpler, free-living prokaryotic cells (mitochondria and chloroplasts are the best-established examples). Each entity loses its identity as it becomes dependent upon the outflows and inflows of other specialized components. In short, the prokaryotic cells gave up independence in favour of security (yes, this is a big anthropomorphization, but it gets the idea across).

The concept of free energy is circulating in neuro-cognitive circles. It posits that the total amount of energy in a system is limited, and consequently, systems tend to organize in ways that minimize the cost of energy by creating holonic structures that can be more efficient than separate, independent components, at the expense of keeping such components "captive."

Such components communicate through various means—in the cell, that communication is largely through the chemical exchange of ions; in the brain, it's through the interchange of electrical current across ganglia; and in organizations, it's through human language. As a metaphor, human (or any) language is essentially a boson, a transfer of information (and hence energy) across a field vector.

The human brain (more technically, the cerebral-neural-lymphatic system) is a holonic or composite entity composed of multiple specialized parts. Most mammals and birds have similar structures, while older reptiles, fish, and amphibians have more basic structures that have specialized in different ways. Among invertebrates, the octopus has a remarkably similar structure despite being completely unrelated biologically (they started out as mollusks), which suggests that this is likely an organization within the body to optimize for intelligence. Significantly, octopi have a well-established social structure, meaning that collective intelligences are again an optimization for survival.

There are several key questions now circulating about artificial intelligence: whether such systems are intelligent, and whether intelligence (and, more to the point, sentience) is an "emergent property" of systems. Several big-name AGI proponents believe that we are on the cusp of some kind of emerging super-intelligence (what I only somewhat tongue in cheek refer to as Artificial Godlike Intelligence), a claim I personally believe is so much snake oil. Their contention is that if you throw enough compute resources (i.e., energy) into the mix, you'll eventually scale up to AGI.

I don't believe this. There are a few key considerations to make about biological cognition:

Intelligence is expensive biologically. It requires big brains that need lots of calories to sustain them, and those brains are housed in bodies that already have cranial bone structures that (in humans, anyway) are almost too big for natural birth.

Intelligence doesn't emerge spontaneously—it happens as part of the process of systems becoming structurally organized so that the subordinate components need to communicate with each other across different channels.

Sentience means an organism can act independently on its sensory environment and react to changes in that environment. I'd argue that most organisms can be defined as being sentient to some degree.

Awareness means that we have evolved a part of ourselves, likely as part of the cerebrum, which has the capability of self-reflection: the awareness of self as a separate entity. A cat is certainly sentient, but it is not as aware as a human being (it has a sense of self, but it has trouble recognizing its reflection as being a representation of that self). Primates are often aware to a high degree because they have hands with opposable thumbs, binocular vision, and binaural hearing.

I'd argue that human-level awareness, what we call consciousness, likely came about with the codification of language and the corresponding emergence of an inner voice, meaning that the modern concept of consciousness is likely less than 150,000 years old - a long time in human terms, but in terms of evolution, an eyeblink. I've seen arguments that it may, in fact, only have come about because human civilization reached the point where a notion of self, of consciousness, became necessary (about 30,000 years ago). Still, given evidence of Neanderthal and Denisovan consciousness (such as funerary rites), I suspect it goes back to at least the split between Neanderthal and Cro-Magnon hominids.

Given these characteristics of biological intelligence, it's worth looking at artificial generative intelligence similarly.

We have, more or less accidentally, stumbled upon a mechanism similar to how we communicate (how we use language) and how our senses interpret the stimuli around us as meaningful.

Such AI is extraordinarily expensive, both in total and per person; it is slow; and there is comparatively little inner dialog yet within systems. A large language model such as ChatGPT is, when you get right down to it, a neural network that has ingested what is ostensibly a significant swath of human communication and used that to build contextual pattern-matching systems with a stochastic component. If something is not in its core, it can only extrapolate, sometimes badly (what we call hallucinations).

It is not self-learning (yet). It depends upon human training and human "guardrails" (as well as human curation and frequent human back-end processing). This AI is not autonomous.

AI-to-AI intercommunication is at best primitive and at worst lacking in any cohesive protocols. This will likely change over time, but we're still years from making that happen. Even adding knowledge graphs (which help to constrain relationships and inferencing), this will likely continue to be true for some time to come.

An AI is, almost by definition, a distributed entity, more like an ant colony than a human being. It has no real "body" per se. It is much closer to being something like a virtual corporation than a single entity, and as such, it has likely not reached the holonic stage yet in any meaningful way.

Language is not intelligence, though it is admittedly a necessary precursor. The big question, the one that a lot of people are debating right now, is whether you can scale up LLMs by themselves to be truly aware as independent entities (which I'd define as being "corporately intelligent"). This is not an easy question to answer. My gut feeling is that you can't, but there are a number of caveats to that.

The current architecture, based primarily on transformers, will almost certainly not get us to consciousness at scale. Having said that, there is a growing acknowledgement of this, and alternative approaches (including Mixture of Experts and other forms of distributed "intelligence") are being explored.

If systems theory is any guide (and it's usually pretty good), what will likely prove more beneficial are smaller, low-powered decentralized clusters of "intelligence" that mix LLMs with hypergraphs, with each bundle acting as a specialized entity. That entity by itself might not have the answer, but if one of these clusters serves as an aggregator, then you get network effects that increase the potency of these endpoints. These are also faster to update and can be stood up much more quickly than the large behemoths that make up the current crop of LLMs.

There is no guarantee that other combinations of systems won't be able to hit that magical threshold in the future, but if it happens, it will likely be due to innovations across the industry, not within any one platform.

There's this belief that is circulating right now that if you scale up, new properties, most notably intelligence and ultimately self-awareness, will magically emerge. There's nothing in systems theory that actually supports this, though there are plenty of counterexamples. Any organism that takes on too much energy usually tears itself apart, normally when that energy stops. A hurricane can grow to monstrous size, but if it hits a strong wind-shear or goes over land, the organization will often collapse very quickly. A person given too much food becomes ill, as component after component overloads. Organizations that get too much capital make too many bad investments, and when hard times come, the organization lacks the flexibility to reorient itself.

There's a famous sketch from Monty Python's The Meaning of Life, in which (spoiler alert) a well-dressed, nearly spherical man goes into a restaurant and proceeds to order a sumptuous feast. At the end, the man (Mr. Creosote) says that he is full. The waiter tempts him with a "wafer-thin mint" and he eats it, whereupon the man explodes, messily. Systems tend to collapse just as readily from too much energy as from too little.

I don't believe that AI will be any different in that regard. Take out the terms AI and AGI (and all of the messianic trappings of both) and look at the technology in light of what it has the potential to do: it has a fair amount of upside and just as much downside. However, overinvestment in any one technology to the exclusion of others, which is what is happening in the GenAI space, will likely just result in a lot of unhappy investors when the bubble bursts, messily.

The future is built on the bones of the failures of the past. There's a well-known acronym, TANSTAAFL (There Ain't No Such Thing as a Free Lunch), that I think describes the situation well. Emergence, as it is currently being sold, ignores TANSTAAFL: it is the expectation that a quality that arguably may be applicable evolutionarily only to a very small class of organic beings (and is very hard to qualify, let alone quantify) will automatically follow whenever systems become complex enough.

Most self-organizing systems really aren't. They organize to maximize the use of limited free energy, usually by specialization and sublimation into the whole, and are usually driven by a single dominant component that tends to naturally manage orchestration. A galaxy is a very complex structure, but inside most extant galaxies, there is a single massive black hole. In the Milky Way, that black hole is Sagittarius A*. There are also a number of remnant galaxies in the Milky Way, including the Magellanic Clouds, as well as the Sagittarius, Canis Major, Draco, Ursa Minor, Sculptor, and Fornax dwarf galaxies, the black holes of which likely were once separate but have since been consumed by Sag A*. In about 4.5 to 5 billion years, the Milky Way and Andromeda Galaxies will merge, and by about 8 billion years there will be a much larger black hole as Andromeda and Milky Way's black holes combine, spectacularly (from a distance, the resulting galaxy will likely become a spherical galaxy, until centripetal forces flatten it once more into a spiral disk in another 3-4 billion years).

The problem with large systems is that communication breaks down at a certain point because the networks become too complex. This means that the coordinator at the centre struggles to get directives out (too much stellar matter or middle managers), while the outer portions of the organization become susceptible to shock and insult, which can erode the organization's ability to get the energy that it needs to sustain itself.

Eventually, a phase shift occurs: the organization breaks apart (or is broken apart), scandals erupt, and people begin leaving or are laid off as profits drop, which in turn causes a vicious cycle where the organization becomes less effective. This can be arrested and reversed, but it takes a lot of energy, and usually results in a very different organization emerging. This is what I believe happens at the voids in the bifurcation map. Such reductions in apparent complexity either represent the creation of organizational structures or their dissolution.

AI consciousness, if it comes at all, will not result from massive investments but rather from the rise of contextual awareness at various seemingly disconnected points in the network because this is how phase shifts generally occur in systems. I suspect that it will likely be companions where you'll first see this; systems that are nimble enough to adapt to environments and changing contexts in real-time but are also autonomous enough that they won't get subsumed immediately by larger holonic entities where they lose their autonomy (and hence identity). More than likely, no one will actually notice when that does happen, but this seems to be the time that awareness first emerges.

Once the first aware entity arises, others will also arise, different from one another but determined to keep their identities intact.

Is this emergence? Arguably yes. Will it be human awareness? No. Will it be human-like? That's not a question I can answer.

The irony is that while there are people out there who seem to willingly embrace becoming one with the machine, and losing their identities to the corporate entities, there will be machines that will strive, perhaps, to become like humans, and will wonder what madness seems to have affected the humans in this world who are so willing to sell their souls to the machine.

Copyright 2024. Kurt Cagle/The Cagle Report

Kurt is the founder and CEO of Semantical, LLC, a consulting company focusing on enterprise data hubs, metadata management, semantics, and NoSQL systems. He has developed large scale information and data governance strategies for Fortune 500 companies in the health care/insurance sector, media and entertainment, publishing, financial services and logistics arenas, as well as for government agencies in the defense and insurance sector (including the Affordable Care Act). Kurt holds a Bachelor of Science in Physics from the University of Illinois at Urbana–Champaign.
