
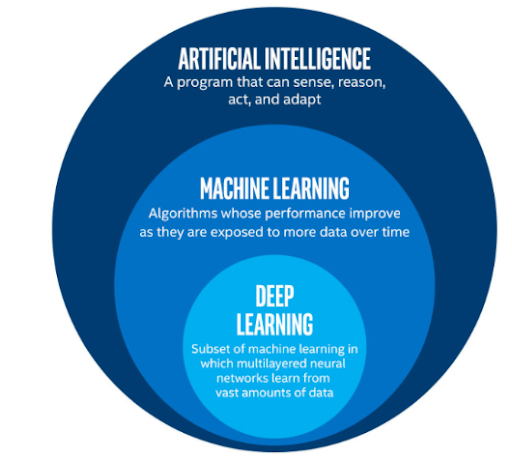

Machine learning, a branch of artificial intelligence (AI), has experienced significant advancements in recent years.

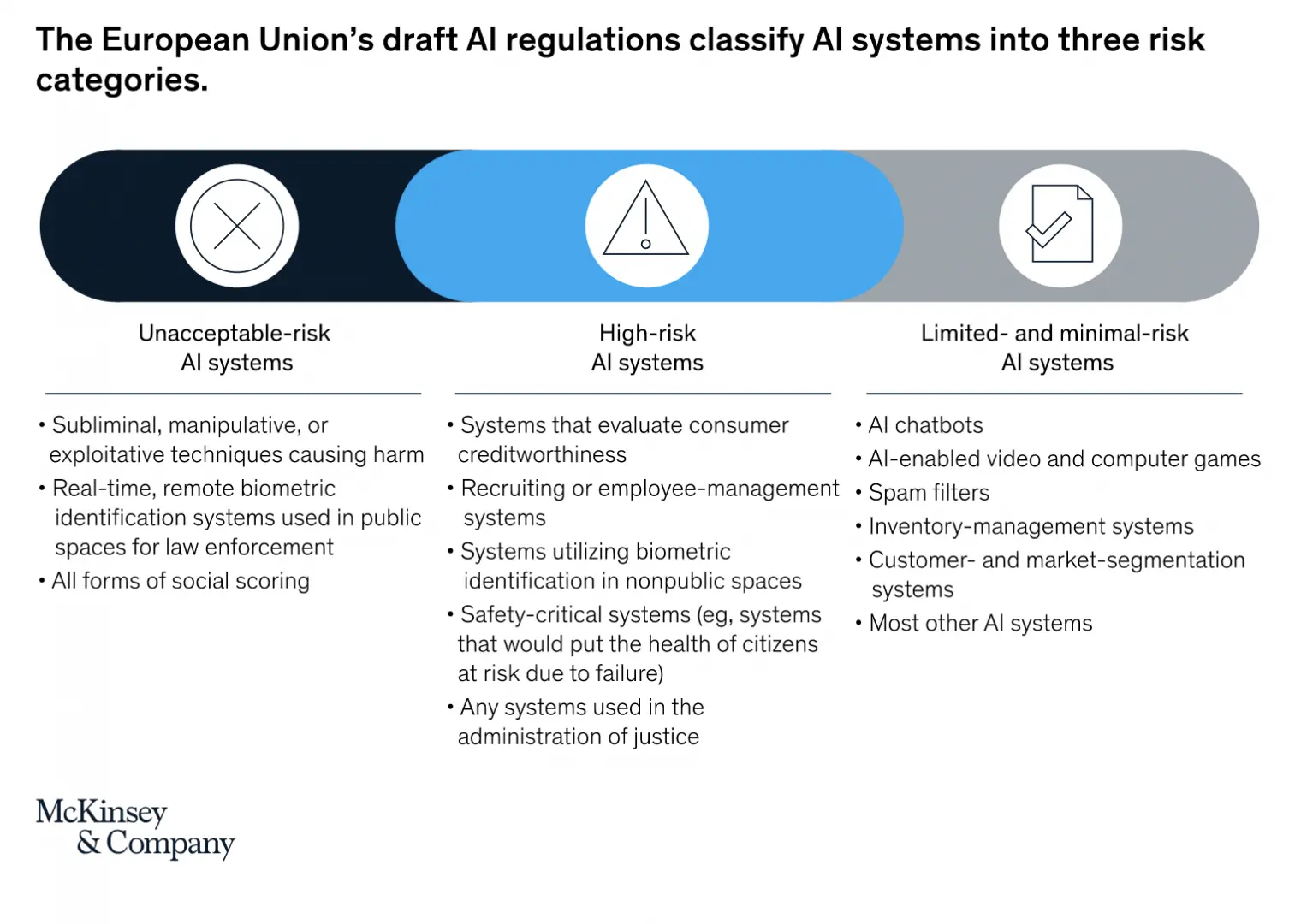

It has transformed industries, reshaped decision-making, and powered innovations that were once hard to imagine. However, the rapid spread of machine learning has also raised concerns about its societal impact. As machine learning systems become more autonomous and influential, regulatory frameworks that govern their deployment and mitigate their risks have become essential. This article examines why machine learning needs regulation and explores the challenges, benefits, and possible approaches to ensuring that this powerful technology is used responsibly and ethically.

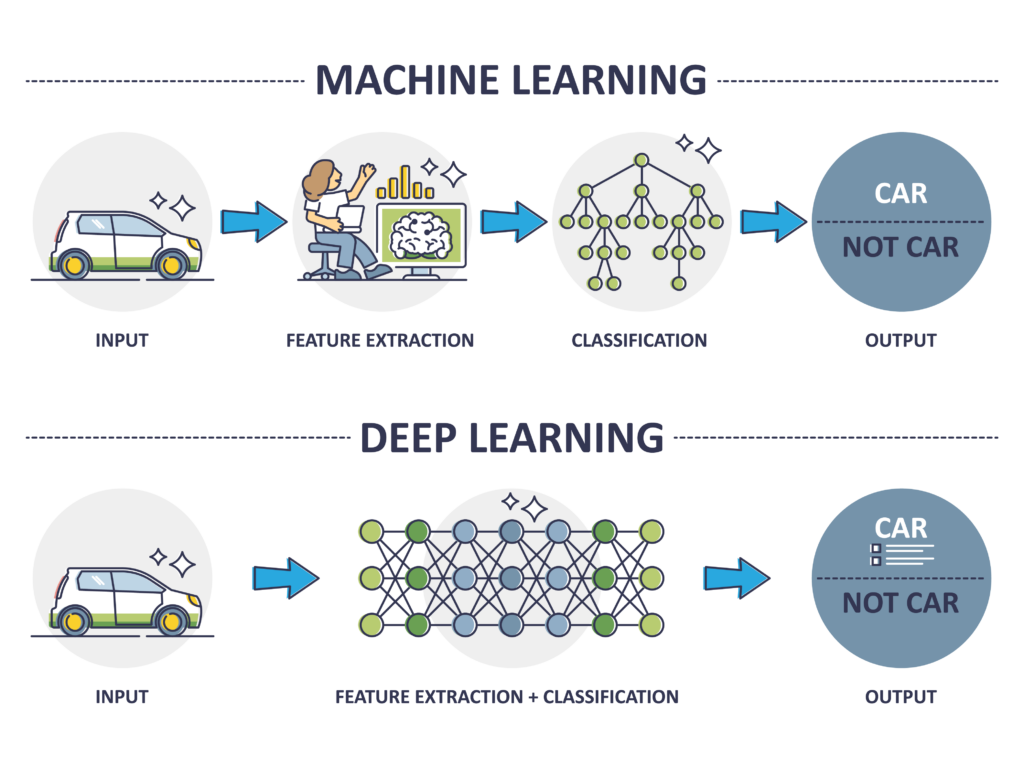

Machine learning algorithms are designed to analyze large amounts of data, detect patterns in it, and use those patterns to make predictions or decisions. They improve their performance iteratively by learning from examples rather than being explicitly programmed for every task. As a result, they are now used across many domains, including finance, healthcare, transportation, and entertainment.
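
To make the idea of iterative learning concrete, here is a minimal sketch, assuming a toy linear model and made-up data: gradient descent repeatedly adjusts two parameters to reduce prediction error until it recovers the rule behind the examples (here y = 2x + 1) without that rule ever being programmed in. The function name `fit_line`, the learning rate, and the epoch count are illustrative choices, not part of any particular library.

```python
def fit_line(xs, ys, lr=0.05, epochs=2000):
    """Fit y ≈ w * x + b by gradient descent on mean squared error (toy sketch)."""
    w, b = 0.0, 0.0  # start with no knowledge of the pattern
    n = len(xs)
    for _ in range(epochs):
        # Error of the current model on every training example
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step each parameter against its gradient to reduce the error
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical training data generated by y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")  # prints: learned w=2.000, b=1.000
```

Many real systems use far larger models and datasets, but they rely on the same basic principle of adjusting parameters to reduce error on past examples, which is also why they inherit whatever patterns, including biases, that data contains.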

While the advancements in machine learning bring numerous benefits, they also present challenges and risks that demand regulatory attention. Some of the key concerns include:

Machine learning algorithms learn from historical data, which can perpetuate biases present in the data. This can lead to discriminatory outcomes, such as biased hiring practices or unfair lending decisions. Without proper regulation, these biases can reinforce existing societal inequalities.

Machine learning relies on vast amounts of data, often personal and sensitive in nature. The unregulated use of such data raises concerns about privacy infringements, data breaches, and the potential misuse of personal information. Clear regulations are needed to safeguard individuals' privacy and ensure responsible data handling practices.

Many machine learning algorithms operate as "black boxes": their decision-making processes are not easily understood or explained. This opacity raises concerns about accountability, because the decisions these algorithms make can have significant real-world consequences. Requiring machine learning systems to be transparent and explainable is therefore crucial for building trust and ensuring ethical decision-making.

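Machine learning models are also vulnerable to adversarial attacks, in which malicious actors deliberately manipulate input data to deceive the system or disrupt its operation. For example, small, carefully crafted changes to an input, which a human would barely notice, can cause a model to misclassify it. Without adequate safeguards, such attacks can have severe consequences, compromising the security, integrity, and reliability of the system.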
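
The sketch below illustrates how an adversarial perturbation can work, assuming a toy logistic-regression classifier with made-up weights. It applies the fast gradient sign method (FGSM), one well-known attack technique, which nudges every input feature by a small amount `eps` in whichever direction increases the model's loss. The names `predict_proba`, `sign`, and `fgsm_perturb` and all the numbers are hypothetical.

```python
import math


def predict_proba(w, b, x):
    """Probability of the positive class under a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return 1, 0, or -1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_perturb(w, b, x, y, eps):
    """Shift each feature by eps in the direction that increases the loss.

    For binary cross-entropy loss, the gradient with respect to the input
    is (p - y) * w, where p is the predicted probability and y the true label.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model and an input whose true label is 1
w, b = [2.0, -1.0, 0.5], -0.5
x = [0.6, 0.2, 0.4]
x_adv = fgsm_perturb(w, b, x, y=1, eps=0.3)

print(round(predict_proba(w, b, x), 3))      # 0.668 -> classified as 1
print(round(predict_proba(w, b, x_adv), 3))  # 0.413 -> now classified as 0
```

No feature moves by more than 0.3, yet the predicted class flips. Attacks on deep networks apply the same gradient idea to much higher-dimensional inputs such as images.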

Regulating machine learning is not solely about curbing potential risks; it also offers several benefits:

Proper regulation can help enforce fairness and prevent discrimination by requiring that algorithms be free from bias or, where bias cannot be eliminated, that it be identified and addressed transparently. This can promote equal opportunity and reduce inequality in domains such as hiring, lending, and criminal justice.

Regulations can require machine learning models to be interpretable and explainable. This enables individuals and organizations to understand and challenge algorithmic decisions, increasing accountability and trust.

Regulatory frameworks can set guidelines and standards for how data is collected, used, and stored in machine learning applications. Strict privacy rules can safeguard individuals' personal information and, in turn, foster trust in machine learning systems.

Regulations can also mandate measures that protect machine learning systems from adversarial attacks. Security standards and best practices can mitigate potential vulnerabilities and help ensure the reliability and integrity of these systems.

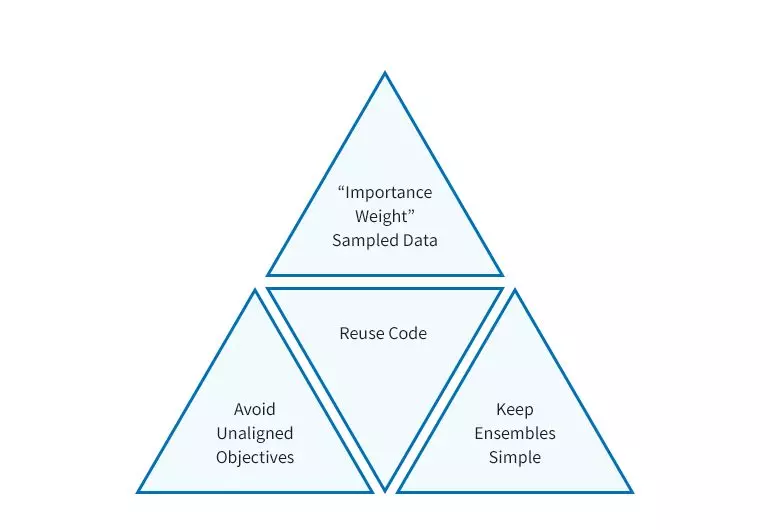

Regulating machine learning requires a nuanced approach that provides adequate oversight without stifling innovation.

Here are some potential approaches to regulating machine learning:

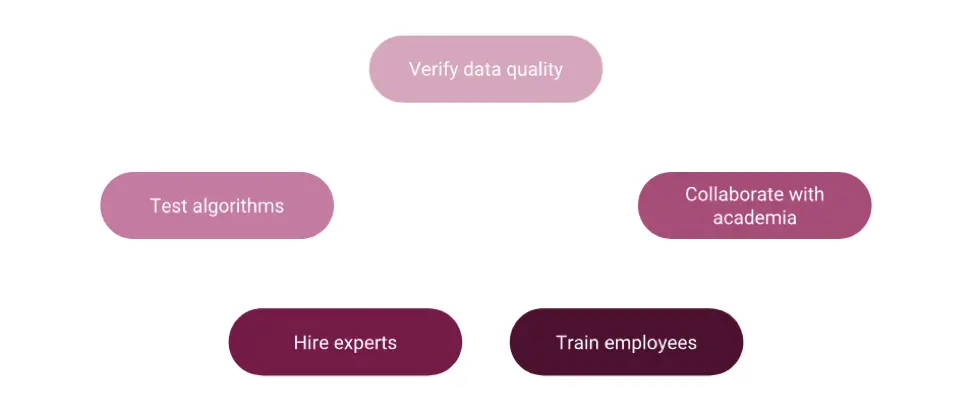

Establishing ethical guidelines and principles for the development and deployment of machine learning systems can provide a foundation for responsible AI practices. These guidelines can outline principles such as fairness, transparency, accountability, and privacy protection. Industry associations and organizations can play a role in developing and promoting these guidelines, while governments can incentivize compliance and provide oversight.

Requiring algorithmic audits and impact assessments can help identify potential biases, risks, and unintended consequences of machine learning algorithms. These assessments can be conducted prior to deployment and periodically thereafter to ensure ongoing compliance. Independent third-party audits and certifications can enhance credibility and trust.
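
As a minimal illustration of one check an algorithmic audit might include, the sketch below computes each group's selection rate and the ratio of the lowest rate to the highest. It flags the system when that ratio falls below 0.8, a threshold borrowed from the "four-fifths rule" used in US employment-discrimination guidance. Treat the threshold, the function names, and the loan-approval data as assumptions for illustration rather than a prescribed audit method.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Share of favorable decisions (1) for each group."""
    totals, favorable = defaultdict(int), defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        favorable[group] += decision
    return {g: favorable[g] / totals[g] for g in totals}


def disparate_impact_audit(groups, decisions, threshold=0.8):
    """Compare the lowest group selection rate with the highest."""
    rates = selection_rates(groups, decisions)
    highest = max(rates.values())
    # If nobody was selected, there is no disparity in selection rates
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return {"rates": rates, "ratio": ratio, "flagged": ratio < threshold}


# Hypothetical loan decisions: group A approved 6/10, group B approved 3/10
groups = ["A"] * 10 + ["B"] * 10
decisions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
report = disparate_impact_audit(groups, decisions)
print(report["ratio"], report["flagged"])  # 0.5 True
```

A low ratio is a signal for further investigation, not proof of discrimination; a fuller audit would also examine error rates across groups, the provenance of the training data, and the context in which decisions are made.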

Regulating how data is collected, used, and stored is central to regulating machine learning. Stricter data governance rules can ensure that personal and sensitive data is handled carefully and used only with individuals' explicit consent. Clear guidelines on data anonymization, retention, and sharing can protect privacy while still allowing data to be used responsibly for machine learning.
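
One concrete anonymization criterion that such guidelines could reference is k-anonymity: every combination of quasi-identifiers (attributes such as age and ZIP code that could be linked to outside data) must be shared by at least k records. The sketch below, with hypothetical records and a hypothetical `generalize` rule, checks that property before and after coarsening ages into decades and ZIP codes into three-digit prefixes. k-anonymity is only one technique and has known weaknesses, for example when everyone in a group shares the same sensitive value.

```python
from collections import Counter


def is_k_anonymous(records, quasi_identifiers, k):
    """True if every quasi-identifier combination occurs in at least k records."""
    counts = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return all(c >= k for c in counts.values())


def generalize(record):
    """Hypothetical rule: 10-year age band and 3-digit ZIP prefix."""
    decade = (record["age"] // 10) * 10
    return {
        "age": f"{decade}-{decade + 9}",
        "zip": record["zip"][:3] + "**",
        "diagnosis": record["diagnosis"],  # sensitive attribute, kept as-is
    }


# Hypothetical health records
records = [
    {"age": 34, "zip": "02139", "diagnosis": "flu"},
    {"age": 37, "zip": "02141", "diagnosis": "asthma"},
    {"age": 52, "zip": "10012", "diagnosis": "flu"},
    {"age": 58, "zip": "10019", "diagnosis": "diabetes"},
]
qi = ["age", "zip"]
print(is_k_anonymous(records, qi, k=2))                           # False
print(is_k_anonymous([generalize(r) for r in records], qi, k=2))  # True
```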

Regulators can require machine learning models to be transparent and explainable to stakeholders, for example through inherently interpretable algorithms, model documentation, or explanations of individual decisions. Enabling stakeholders to understand the reasoning behind machine learning outcomes fosters accountability and trust.
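
For the simplest case, a linear scoring model, an explanation of an individual decision can be computed exactly: each feature's contribution is its weight times how far the applicant's value lies from a reference ("baseline") applicant, and the contributions sum to the difference between the two scores. The sketch below assumes a hypothetical credit-scoring model; the feature names, weights, bias, and baseline are invented. More complex models typically need approximate attribution methods such as SHAP or LIME.

```python
def score(weights, bias, features):
    """Linear score: bias plus weighted sum of features."""
    return bias + sum(weights[name] * features[name] for name in weights)


def explain_linear(weights, baseline, applicant):
    """Per-feature contributions relative to a baseline, largest effect first."""
    contributions = {
        name: weights[name] * (applicant[name] - baseline[name]) for name in weights
    }
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


# Hypothetical credit model and applicants
weights = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
bias = 1.0
baseline = {"income_k": 50, "debt_ratio": 0.3, "late_payments": 1}
applicant = {"income_k": 45, "debt_ratio": 0.6, "late_payments": 3}

for name, contribution in explain_linear(weights, baseline, applicant):
    print(f"{name}: {contribution:+.2f}")
# late_payments: -1.60
# debt_ratio: -0.90
# income_k: -0.20
```

An applicant told that late payments lowered their score the most can then check or contest that specific factor, which is the kind of accountability these transparency requirements aim for.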

Establishing regulatory bodies or expanding the roles of existing bodies to oversee machine learning applications can ensure compliance with ethical standards and regulations. Certification programs can be developed to assess the adherence of machine learning systems to regulatory requirements. These bodies can also handle complaints, conduct investigations, and impose penalties for non-compliance.

Collaboration between governments, industry stakeholders, and research institutions is essential in shaping effective regulations for machine learning. International standards can be developed to provide a common framework for responsible AI practices, enabling cross-border cooperation and harmonization of regulations. Such collaboration can prevent regulatory fragmentation and ensure consistent standards across jurisdictions.

It's important to harness the benefits of machine learning while mitigating potential risks. Striking a balance between innovation and oversight is essential for the responsible and ethical use of this transformative technology.

Clear regulations can address concerns such as bias, privacy, transparency, and security, while fostering trust and accountability. By implementing appropriate frameworks and working collaboratively across sectors and jurisdictions, we can ensure that machine learning remains a powerful tool for societal progress while upholding fundamental values and protecting the welfare of individuals and communities.
