Artificial intelligence (AI) has infiltrated nearly every aspect of our lives, from personalized recommendations to self-driving cars.

As AI's influence grows, a critical question remains: how do these intelligent systems arrive at their decisions? Enter Explainable AI (XAI), a growing field that aims to open the "black box" of AI and foster trust in its outcomes.

This article explores why XAI matters, what "explainability" means for different audiences, the main techniques used to shed light on AI's inner workings, and the challenges that remain.

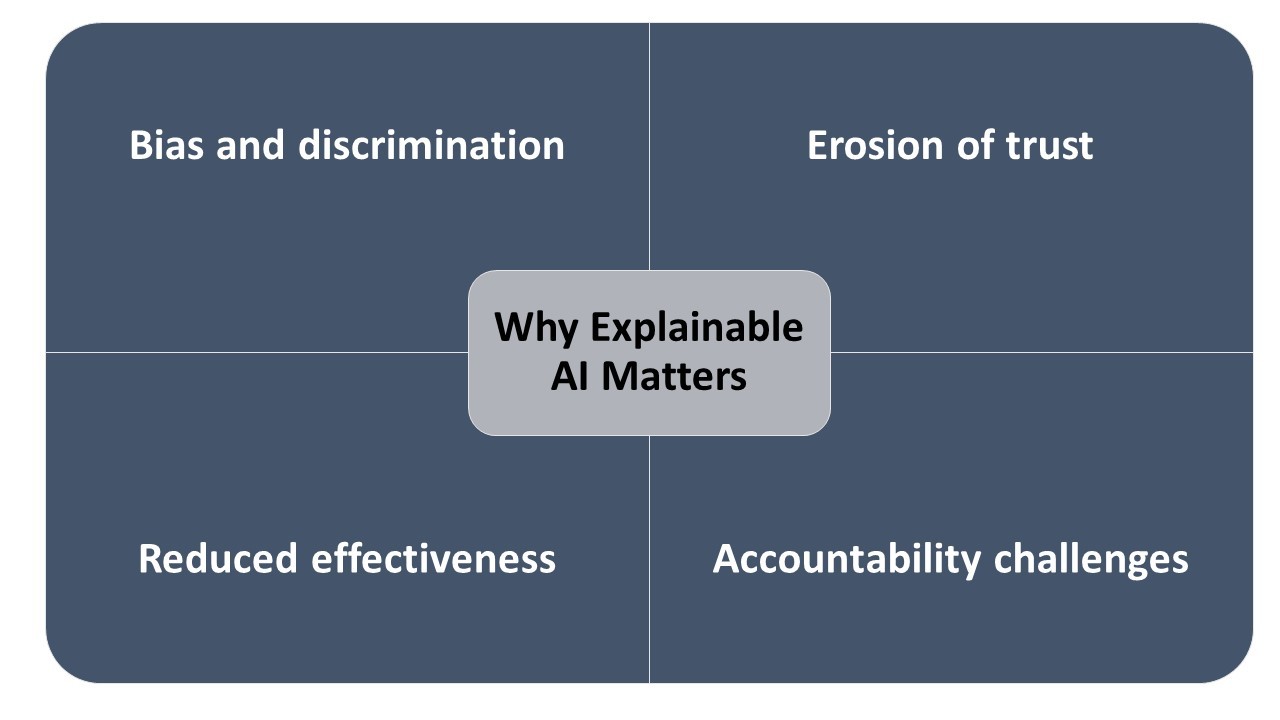

Imagine being denied a loan with no clear explanation, or relying on an autonomous vehicle guided by an impenetrable algorithm. The lack of transparency in AI decisions can lead to:

Erosion of trust: Users struggle to trust opaque systems, which slows adoption and limits their potential benefits.

Bias and discrimination: Unexplained biases embedded in AI models can perpetuate unfairness and reinforce societal inequalities.

Accountability challenges: Without understanding how decisions are made, pinpointing responsibility for errors or harmful outcomes becomes difficult.

Reduced effectiveness: Opaque models are harder to debug and improve, which can hold back their overall performance.

XAI addresses these concerns by bringing transparency and understanding to AI processes. With XAI, we can:

Verify fairness and mitigate bias: Identify and correct biases in training data and models, supporting more equitable outcomes.

Build trust and confidence: Users can better understand how AI systems work, increasing acceptance and willingness to interact with them.

Improve interpretability and debuggability: By understanding the reasoning behind decisions, developers can pinpoint errors and refine models for better performance.

Enhance regulatory compliance: Explainability can help organizations comply with emerging rules on AI and automated decision-making, such as those in the EU's General Data Protection Regulation (GDPR) and AI Act.

"Explainability" in XAI is multifaceted. Different stakeholders have varying needs:

End users: They desire clear, concise explanations of AI outputs, often in natural language or visualizations.

Domain experts: They need deeper insights into the model's internal workings, including feature importance and decision logic.

Developers and auditors: They require access to technical details like feature representations and model parameters for debugging and analysis.

Therefore, a single universal explanation doesn't exist. XAI offers a spectrum of techniques tailored to different audiences and purposes.

The field of XAI is brimming with diverse approaches, each offering unique perspectives on AI's decision-making. Here are some prominent examples:

Local Explanations: These methods explain individual predictions, highlighting features contributing most to the outcome. Techniques like LIME (Local Interpretable Model-Agnostic Explanations) and SHAP (SHapley Additive exPlanations) fall into this category.

Global Explanations: These methods provide insights into the overall behavior of the model, revealing patterns and relationships within its internal workings. Rule extraction and feature importance analysis are examples of global explanation techniques.

Counterfactual Explanations: These methods explore what-if scenarios, showcasing how changing specific features would affect the prediction. This helps users understand the model's sensitivity to different inputs.

Visualizations: Visualizing data and model behavior can be highly effective in conveying information, especially for non-technical audiences. Interactive graphs, decision trees, and attention maps fall under this category.

No single technique addresses all explainability needs. Often, a combination of approaches is used to provide a comprehensive understanding of the AI system.
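To make the local, global, and counterfactual approaches concrete, here is a minimal Python sketch that applies all three to a single toy model. Everything in it is a hypothetical assumption: the loan-scoring function `score`, its feature names, weights, bias and approval threshold, and the "average applicant" baseline. It computes exact Shapley values by enumerating every coalition of features (feasible only for a handful of features; practical tools such as the SHAP library use faster estimators or model-specific algorithms), averages their absolute values into a global importance ranking, and computes the single-feature change that would flip a rejection. It uses only the standard library and is not the LIME or SHAP API.

```python
from itertools import combinations
from math import factorial

# Hypothetical loan-scoring model; names, weights, bias and threshold are illustrative.
FEATURES = ["income", "credit_history", "debt_ratio"]
WEIGHTS = [0.5, 0.3, -0.4]
BIAS = 0.1
THRESHOLD = 0.5  # approve when score >= THRESHOLD


def score(x):
    """Linear score for a feature vector whose values are assumed scaled to [0, 1]."""
    return BIAS + sum(w * v for w, v in zip(WEIGHTS, x))


def shapley_values(f, x, baseline):
    """Local explanation: exact Shapley value of each feature for one prediction.

    Features outside a coalition are replaced by their baseline value.
    Every coalition is visited, so the cost grows as 2**n.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without_i = [x[j] if j in coalition else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]  # add feature i to the coalition
                phi[i] += weight * (f(with_i) - f(without_i))
    return phi


def global_importance(f, data, baseline):
    """Global explanation: mean absolute Shapley value of each feature over a dataset."""
    totals = [0.0] * len(baseline)
    for x in data:
        for i, p in enumerate(shapley_values(f, x, baseline)):
            totals[i] += abs(p)
    return [t / len(data) for t in totals]


def single_feature_counterfactuals(x, low=0.0, high=1.0):
    """Counterfactual explanation: change to one feature that lifts the score to THRESHOLD.

    Closed-form only because the model is linear; changes that would push a
    feature outside [low, high] are treated as unrealistic and dropped.
    """
    gap = THRESHOLD - score(x)
    if gap <= 0:
        return {}  # already approved, nothing to flip
    changes = {}
    for i, (name, w) in enumerate(zip(FEATURES, WEIGHTS)):
        if w == 0:
            continue
        delta = gap / w
        if low <= x[i] + delta <= high:
            changes[name] = delta
    return changes


if __name__ == "__main__":
    baseline = [0.5, 0.5, 0.5]  # hypothetical "average applicant"
    applicant = [0.4, 0.5, 0.6]
    print("approved:", score(applicant) >= THRESHOLD)
    print("local:", dict(zip(FEATURES, shapley_values(score, applicant, baseline))))
    data = [applicant, [0.9, 0.2, 0.1], [0.5, 0.5, 0.5]]
    print("global:", dict(zip(FEATURES, global_importance(score, data, baseline))))
    print("counterfactuals:", single_feature_counterfactuals(applicant))
```

For the hypothetical applicant, the Shapley values sum to the gap between the applicant's score and the baseline score, and only a higher income can flip the rejection without pushing a feature outside the assumed [0, 1] range. Replacing absent features with a single baseline is only one way to define a coalition's value; other choices yield different attributions.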

Despite its significant progress, XAI still faces challenges:

The inherent complexity of AI models: Advanced models such as deep neural networks can have millions or even billions of parameters and highly nonlinear behavior, which makes them difficult to explain.

The trade-off between accuracy and explainability: Making a model more explainable, for example by using a simpler model, can reduce its accuracy, forcing developers to balance the two.

Standardization and evaluation: No universal standards exist for measuring explainability, making it difficult to compare different techniques.

Addressing these challenges is crucial for XAI's continued advancement. Promising research avenues include:

Developing inherently explainable models: Designing models with explainability in mind from the outset, rather than retrofitting it later.

Explainable AI for complex models: Addressing the explainability challenges posed by sophisticated models like deep learning systems.

Standardized metrics and benchmarks: Establishing common metrics and benchmarks for evaluating the effectiveness of different XAI techniques.
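The first research avenue above, models that are explainable by design, can be illustrated with a decision list: an ordered set of if-then rules in which the rule that fires is itself the explanation. The minimal Python sketch below assumes hypothetical rules, feature names, and thresholds for a loan decision; in practice such rule lists are learned from data or written with domain experts, and this is not any particular library's API.

```python
import operator
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Comparison symbols this sketch supports, mapped to Python's operator functions.
OPS = {">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le}


@dataclass(frozen=True)
class Rule:
    """One if-then rule: IF feature <symbol> threshold THEN decision."""
    feature: str
    symbol: str
    threshold: float
    decision: str

    def matches(self, applicant: Dict[str, float]) -> bool:
        return OPS[self.symbol](applicant[self.feature], self.threshold)

    def describe(self) -> str:
        return f"{self.feature} {self.symbol} {self.threshold}"


# Hypothetical rule list; features, thresholds and order are illustrative assumptions.
RULES: List[Rule] = [
    Rule("debt_ratio", ">", 0.6, "reject"),
    Rule("credit_history", "<", 0.3, "reject"),
    Rule("income", ">=", 0.5, "approve"),
]
DEFAULT_DECISION = "reject"


def predict_with_reason(applicant: Dict[str, float]) -> Tuple[str, str]:
    """Return the decision together with the rule that produced it.

    Rules are checked in order and the first match wins, so the explanation
    is exactly the logic the model used, not an after-the-fact approximation.
    """
    for rule in RULES:
        if rule.matches(applicant):
            return rule.decision, rule.describe()
    return DEFAULT_DECISION, "no rule matched (default decision)"


if __name__ == "__main__":
    # prints ('reject', 'debt_ratio > 0.6')
    print(predict_with_reason({"income": 0.8, "credit_history": 0.5, "debt_ratio": 0.7}))
```

The accuracy-explainability trade-off shows up here too: a short rule list is easy to audit, but it may capture less of the structure in the data than a deep neural network.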

XAI is not just a technical challenge; it's a critical step towards responsible and ethical AI development. By demystifying AI's decision-making, we can build trustworthy systems that contribute positively to society.

Ahmed Banafa is an expert in new technologies who has appeared on ABC, NBC, CBS, and FOX TV and radio stations. He has served as a professor, academic advisor, and coordinator at well-known American universities and colleges. His research has been featured in Forbes, MIT Technology Review, ComputerWorld, and Techonomy. He has published over 100 articles about the Internet of Things, blockchain, artificial intelligence, cloud computing, and big data. His research papers have been used in many patents, theses, and conference papers. He is also a guest speaker at international technology conferences. He is the recipient of several awards, including the Distinguished Tenured Staff Award, Instructor of the Year, and a Certificate of Honor from the City and County of San Francisco. Ahmed studied cybersecurity at Harvard University. He is the author of the book Secure and Smart Internet of Things Using Blockchain and AI.
