
In recent years, the rise of artificial intelligence bots has brought both marvels and challenges to the online world.

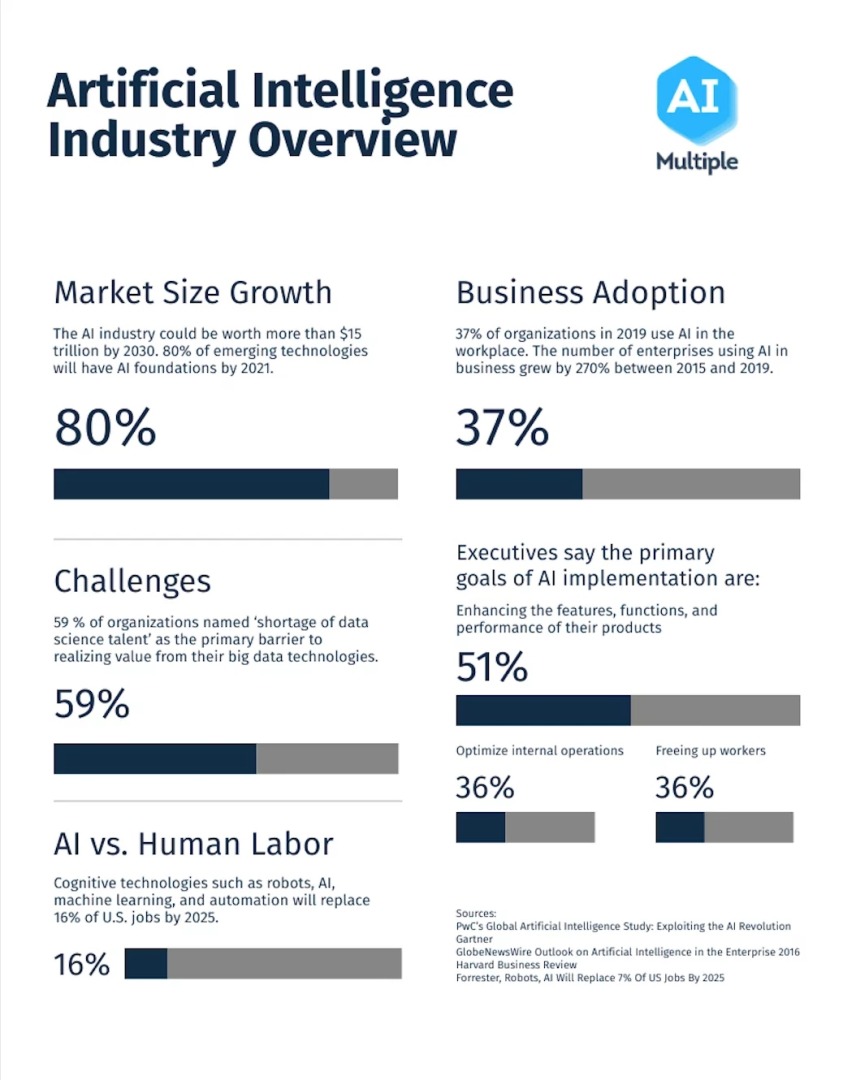

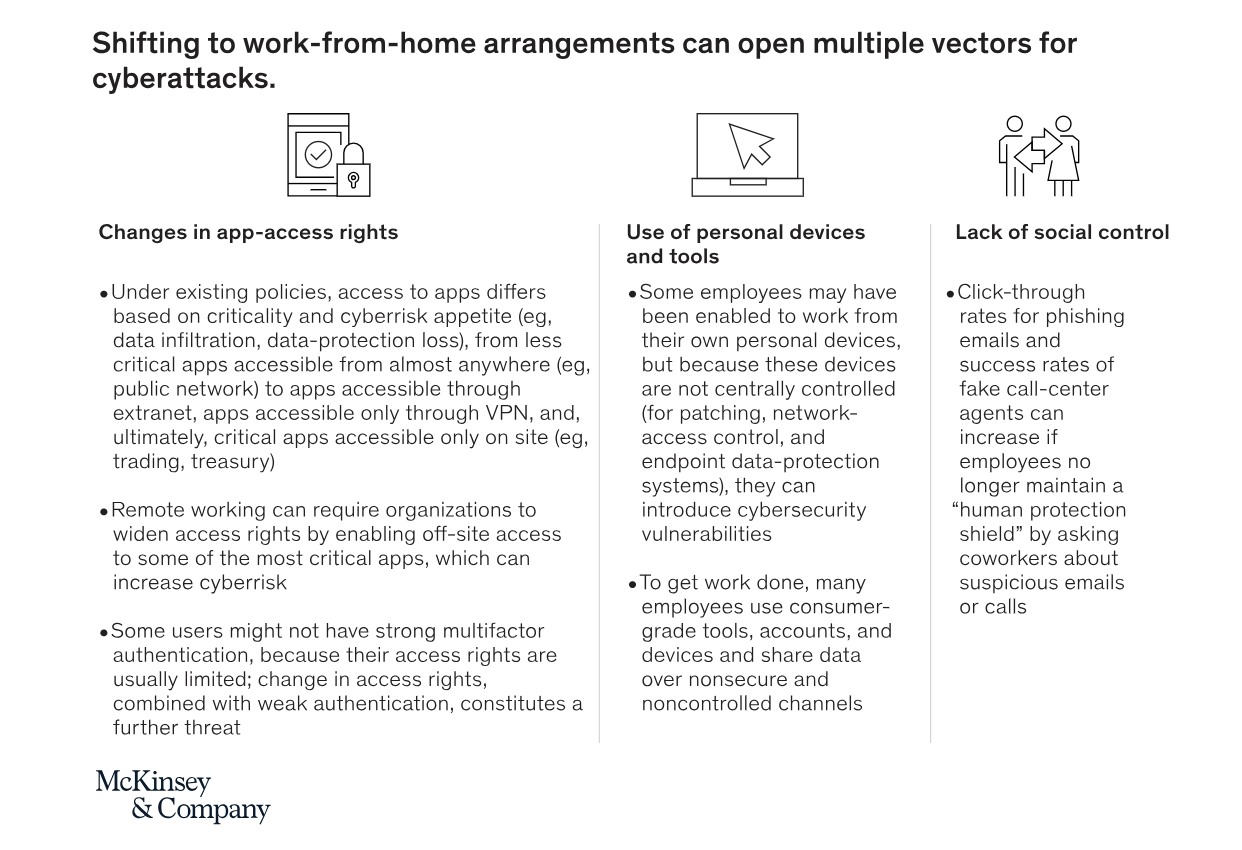

One of the most significant challenges is the proliferation of automated bots, increasingly powered by AI, which now account for almost half of all Internet traffic. Sophisticated bots mimic human behavior to spread spam, scams, and malware, posing a serious cybersecurity threat that could escalate into a botocalypse. It is also vital to recognize that AI may have major drawbacks, such as built-in biases, privacy concerns, and the potential for abuse.

The term "botocalypse" blends "bot" (referring to AI-powered bots) and "apocalypse" (indicating a catastrophic event).

As AI bots rapidly gain traction, there are mounting concerns about the decline of genuine human interaction online. This article examines the growing prevalence of AI-powered automated bots and their implications, and explores strategies to combat their malicious activities.

As the 2023 Imperva Bad Bot Report indicates, automated bots have become a formidable force on the Internet. These bots leverage AI and generative tools such as ChatGPT (including its plugins), GPT-4, and Google's Bard to simulate human-like interactions and behavior. Unfortunately, many of these bots are deployed by cybercriminals to carry out malicious schemes, causing significant disruption for individuals and businesses alike.

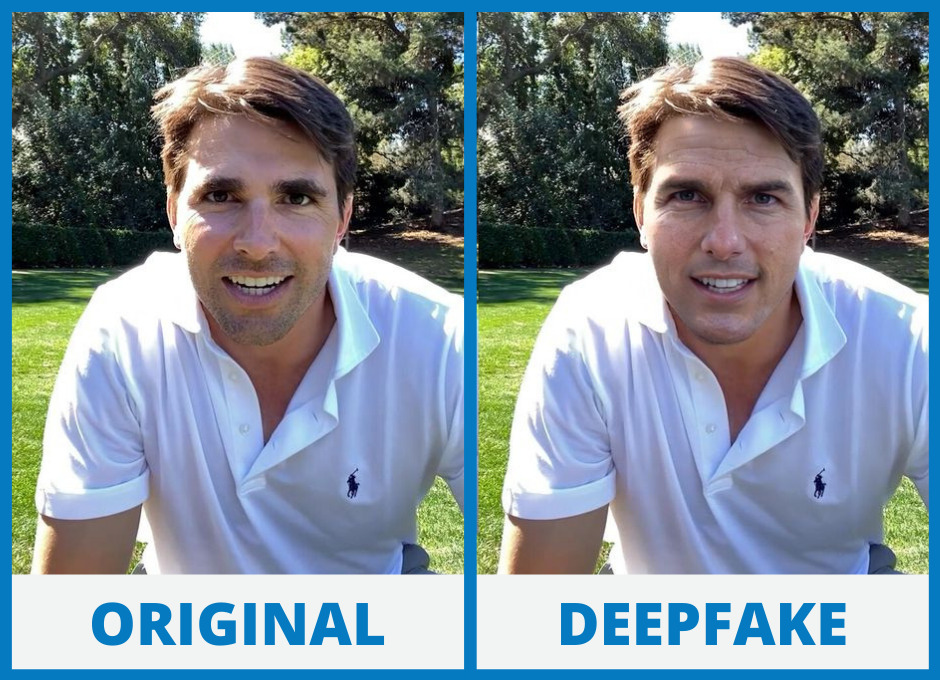

AI-powered bots have evolved beyond basic scripted actions. They can adapt, learn, and improvise, which makes them elusive and difficult to detect. From fake customer-support bots to bots that generate deepfakes and compelling yet false narratives, these malicious entities can deceive unsuspecting users and manipulate them for nefarious purposes.

Advances in generative AI have enabled deepfakes: AI-fabricated images, audio, and videos that can be nearly indistinguishable from genuine recordings. Deepfakes can be used to spread misinformation, defame individuals, and manipulate public opinion, sowing widespread confusion.

Moreover, AI chatbots, if not carefully curated, can amplify human biases. The algorithms that power these bots may inadvertently learn and perpetuate prejudices present in the data they are trained on, leading to biased responses and reinforcing harmful stereotypes.

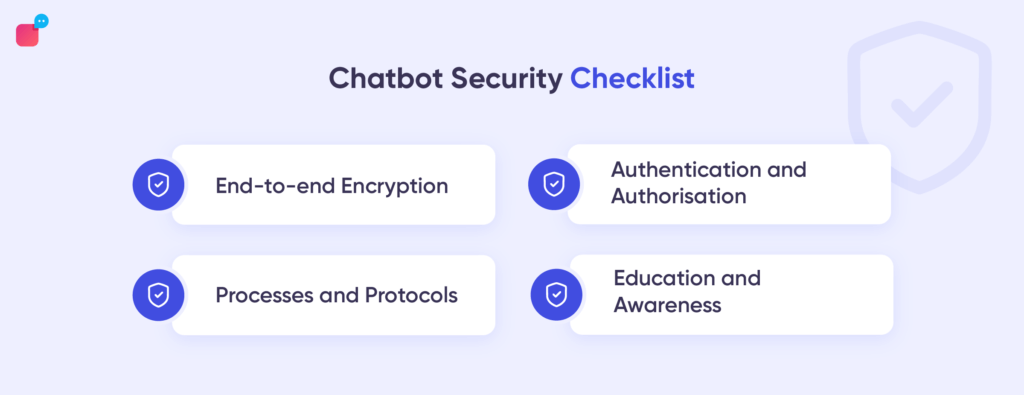

Addressing the surge of AI-powered automated bots requires a multi-pronged approach involving technology, user awareness, and responsible AI development:

Enhance existing security measures with machine-learning algorithms that identify and block suspicious bot behavior. Behavioral analysis and anomaly detection can help distinguish genuine human users from malicious bots.
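As a minimal sketch of the anomaly-detection idea, assuming each client session has already been reduced to a list of request timestamps, the Python below flags sessions whose request rate is a statistical outlier or whose timing is suspiciously regular. It is a simple statistical heuristic (a modified z-score built on the median absolute deviation) standing in for a trained machine-learning model; the `Session` type, the two features, and both thresholds are hypothetical choices for illustration, not values from any particular product.

```python
import math
from dataclasses import dataclass
from statistics import mean, median, pstdev


@dataclass
class Session:
    # Hypothetical record: one client's request timestamps, in seconds.
    client_id: str
    timestamps: list[float]


def session_features(session: Session) -> tuple[float, float]:
    """Return (requests per second, coefficient of variation of inter-request gaps)."""
    ts = sorted(session.timestamps)
    gaps = [later - earlier for earlier, later in zip(ts, ts[1:])]
    avg_gap = mean(gaps)
    if avg_gap <= 0:
        # All requests at the same instant: infinitely fast and perfectly regular.
        return math.inf, 0.0
    return 1.0 / avg_gap, pstdev(gaps) / avg_gap


def flag_suspicious(
    sessions: list[Session], z_threshold: float = 3.5, cv_threshold: float = 0.1
) -> set[str]:
    """Flag sessions that are unusually fast or have machine-like regular timing."""
    # Sessions with fewer than three requests give too little evidence to judge.
    feats = {s.client_id: session_features(s) for s in sessions if len(s.timestamps) >= 3}
    rates = [rate for rate, _ in feats.values() if math.isfinite(rate)]
    med = median(rates) if rates else 0.0
    mad = median([abs(r - med) for r in rates]) if rates else 0.0

    flagged = set()
    for client_id, (rate, cv) in feats.items():
        if not math.isfinite(rate):
            flagged.add(client_id)
            continue
        # Modified z-score: median and MAD are not skewed by the outliers themselves.
        z = 0.6745 * (rate - med) / mad if mad > 0 else 0.0
        if z > z_threshold or cv < cv_threshold:
            flagged.add(client_id)
    return flagged


sessions = [
    Session("human-1", [0.0, 4.1, 9.7, 12.0, 20.5, 23.2]),
    Session("human-2", [0.0, 2.0, 9.0, 11.5, 18.0, 21.0]),
    Session("human-3", [0.0, 6.0, 8.0, 15.0, 19.0, 27.0]),
    Session("human-4", [0.0, 3.0, 5.5, 13.0, 16.0, 25.0]),
    Session("regular-bot", [0.0, 5.0, 10.0, 15.0, 20.0, 25.0]),
    Session("burst-bot", [0.0, 0.05, 0.25, 0.35, 0.5, 0.8]),
]
print(sorted(flag_suspicious(sessions)))  # ['burst-bot', 'regular-bot']
```

A median-based score is used so that a handful of very fast bots cannot inflate the baseline they are compared against. In practice, a heuristic like this would be one signal among many rather than a verdict on its own.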

Employ multi-factor authentication (MFA) to verify users' identities and limit the impact of bot impersonation. CAPTCHAs and biometric authentication can further help deter automated bot attacks.
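Time-based one-time passwords (TOTP, standardized in RFC 6238), the short codes shown by many authenticator apps, are one common second factor. The sketch below implements them with Python's standard library only; the function names are hypothetical, and secret provisioning, secure storage, and limiting failed attempts are assumed to be handled elsewhere. For production use, a vetted library is usually the safer choice.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) with HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low nibble of the last byte
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP keyed on the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(
    secret: bytes,
    submitted: str,
    at: Optional[float] = None,
    step: int = 30,
    digits: int = 6,
    window: int = 1,
) -> bool:
    """Accept a code from the current step or up to `window` steps either side."""
    # Reject wrong-length or non-digit input so short guesses cannot succeed.
    if len(submitted) != digits or not (submitted.isascii() and submitted.isdigit()):
        return False
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        expected = totp(secret, now + drift * step, step, digits)
        # Constant-time comparison avoids leaking how many characters matched.
        if hmac.compare_digest(expected, submitted):
            return True
    return False


secret = b"12345678901234567890"  # RFC test secret; real secrets must be random
print(totp(secret, at=59, digits=8))  # 94287082 (RFC 6238 test vector)
print(verify_totp(secret, "94287082", at=59, digits=8))  # True
```

Accepting codes from one step on either side tolerates modest clock drift between client and server, at the cost of a slightly larger window for guessing.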

Continuously update security protocols and software to stay ahead of evolving bot tactics. Cybersecurity solutions must remain agile to respond to emerging threats effectively.

AI developers must prioritize ethical considerations and rigorously test chatbot models to minimize bias and prevent the proliferation of fake information.

Raise awareness among Internet users about the existence and potential dangers of AI-powered bots. Teach users to recognize suspicious behavior and to report suspected bot activity.

AI-powered bots represent a dual threat to the Internet, challenging the authenticity of online interactions while enabling cybercrime and misinformation.

It is crucial for stakeholders, including businesses, technology developers, and users, to collaborate in the fight against these malicious entities. By adopting robust security measures, promoting responsible AI development, and educating users about the risks, we can work towards a safer, more authentic digital landscape that fosters trust and genuine human connections.
