With all the hype around “AI” and Machine Learning, I thought that I’d dabble in unpacking some of the key concepts. I find that most of my reading time now is spent in this area.

Firstly, Artificial Intelligence isn’t really something brand new. It has been written about for decades, most famously by Alan Turing in 1950. In his paper “Computing Machinery and Intelligence” he described the “imitation game”, which later came to be known as the Turing Test. The 2014 movie “The Imitation Game” (starring the usually excellent Benedict Cumberbatch) covers Turing’s life and how he helped crack the Enigma code in WW2; highly recommended.

The easiest way to actually experience a simple AI is to play a videogame. Even in the 1980s with “Pac-Man”, you as the player were trying to outwit the four enemies on screen, and the algorithms behind those enemies could be considered an early implementation of simple AI. Modern games have more complex implementations of “AI” that may surprise you with their intelligence.

Was Blinky an AI?
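To give a feel for how simple such an enemy algorithm can be, here is a minimal sketch of a greedy "chase" rule: at each step the ghost moves to whichever neighbouring tile is closest to the player. This is my own illustration and not the original arcade code; the function name `chase_step`, the grid coordinates and the wall layout are all assumptions made up for the example.

```python
def chase_step(ghost, target, walls, width, height):
    """Move one tile toward the target, greedily minimizing straight-line distance."""
    gx, gy = ghost
    # Candidate moves: up, left, down, right.
    candidates = [(gx, gy - 1), (gx - 1, gy), (gx, gy + 1), (gx + 1, gy)]
    # Keep only tiles that are on the board and not walls.
    legal = [
        (x, y) for x, y in candidates
        if 0 <= x < width and 0 <= y < height and (x, y) not in walls
    ]
    if not legal:
        return ghost  # boxed in, so stay put
    tx, ty = target
    # Pick the legal tile with the smallest squared distance to the target.
    return min(legal, key=lambda p: (p[0] - tx) ** 2 + (p[1] - ty) ** 2)


# Hypothetical 5x5 board with one wall directly between ghost and player.
walls = {(1, 0)}
print(chase_step((0, 0), (3, 0), walls, 5, 5))  # → (0, 1)
```

There is no learning here at all, just a fixed rule, which is why this kind of game "AI" sits at the very simple end of the spectrum.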

So why is AI becoming more prominent now? Very simply, we have now reached a tipping point where Big Data, software advances and cloud computing can be leveraged together to add value to businesses and society. Even though we are still many years from creating a sentient AI like HAL 9000, we can implement things like Machine Learning today to bring about improvements and efficiencies.

Machine Learning

Machine Learning is a subset of AI, not AI in its totality. Way back in 1959, Arthur Samuel is widely credited with describing Machine Learning as giving computers "the ability to learn without being explicitly programmed". Basically, this field entails creating algorithms that can find patterns or anomalies in data, and make predictions based on what they have learned. Once "trained" on a set of data, these algorithms (if chosen correctly) can very reliably find those patterns and make predictions. Using tools like Azure Machine Learning, you can deploy a trained model as a web service to automate the prediction process, or run the predictions as a batch.
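To make "trained on a set of data" a bit more concrete, here is a minimal sketch using only the Python standard library. It fits a straight line to a handful of points with ordinary least squares and then predicts a value it has never seen. The data is made up for illustration, and the names `train` and `predict` are my own, not part of Azure Machine Learning or any other product.

```python
from statistics import mean


def train(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    covariance = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    variance = sum((x - x_bar) ** 2 for x in xs)
    slope = covariance / variance
    intercept = y_bar - slope * x_bar
    return slope, intercept


def predict(model, x):
    """Apply the learned line to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data: ad spend (x) versus units sold (y).
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
units_sold = [3.1, 4.9, 7.2, 8.8, 11.0]

model = train(ad_spend, units_sold)
print(predict(model, 6.0))  # prediction for an ad spend the model never saw
```

Notice that nobody wrote a rule like "each unit of ad spend is worth roughly two sales". The relationship came out of the data, which is the point of the "without being explicitly programmed" idea.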

I personally became exposed to similar concepts around 2006 when working with SQL Server 2005. That product release included a lot of "data mining" functionality. Data Mining basically involved using algorithms (many built into SQL Server Analysis Services, SSAS 2005) to find patterns in datasets, a precursor to Machine Learning today.

I was really excited by the possibilities of Data Mining, and tried to show it to as many customers as possible. However, the market was just not ready. Many customers told me that they just wanted to run their data infrastructure as cheaply as possible and didn't need any of this "fancy stuff". Of course, the tools today are a lot easier to use and we now include support for R and Python, but I think what was missing back in 2007 was industry hype. Industry hype, coupled with fear of competitors overtaking them, is possibly forcing some of those old IT managers to take a look at Machine Learning now. We also have a new breed of more dynamic IT management (not to mention business users who need intelligent platforms) adopting this technology.

Machine Learning today has evolved a lot from those Data Mining tools, and the cloud actually makes using these tools very feasible. If you feel unsure about the value Machine Learning will bring to your business, you can simply create some test experiments in the cloud to evaluate without making any investment into tools and infrastructure, and I'm seeing customers today embrace this thinking.

Deep Learning

Deep Learning can be considered a particular type of Machine Learning. The difference is that Deep Learning relies on the use of Neural Networks, a construct loosely modeled on the human brain. We sometimes refer to Deep Learning as Deep Neural Networks, i.e. Neural Networks with many, many layers. Deep Learning models typically operate at a much larger scale, in both data and compute, than traditional Machine Learning models.
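As a rough picture of what "many layers" means, here is a small sketch of data flowing through a stack of layers, written with only the Python standard library. Each layer computes a weighted sum per neuron and squashes it with a sigmoid. The weights are random and untrained, so the output is meaningless; the sketch only shows the layered structure. The helper names (`dense`, `make_layer`, `forward`) and the layer sizes are my own assumptions, and a real project would use a proper deep learning framework.

```python
import math
import random


def dense(inputs, weights, biases):
    """One fully connected layer followed by a sigmoid activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-z)))
    return outputs


def make_layer(n_in, n_out, rng):
    """Create random (untrained) weights and zero biases for one layer."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(network, inputs):
    """Feed the inputs through every layer in turn."""
    activations = inputs
    for weights, biases in network:
        activations = dense(activations, weights, biases)
    return activations


rng = random.Random(0)
layer_sizes = [4, 8, 8, 8, 1]  # 4 inputs, three hidden layers, 1 output
network = [make_layer(a, b, rng) for a, b in zip(layer_sizes, layer_sizes[1:])]

output = forward(network, [0.5, -0.2, 0.1, 0.9])
print(len(network), "layers of weights ->", output)
```

Making the network "deeper" is just a matter of adding more entries to `layer_sizes`. Training those weights is where the heavy computation comes in.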

Neural Networks

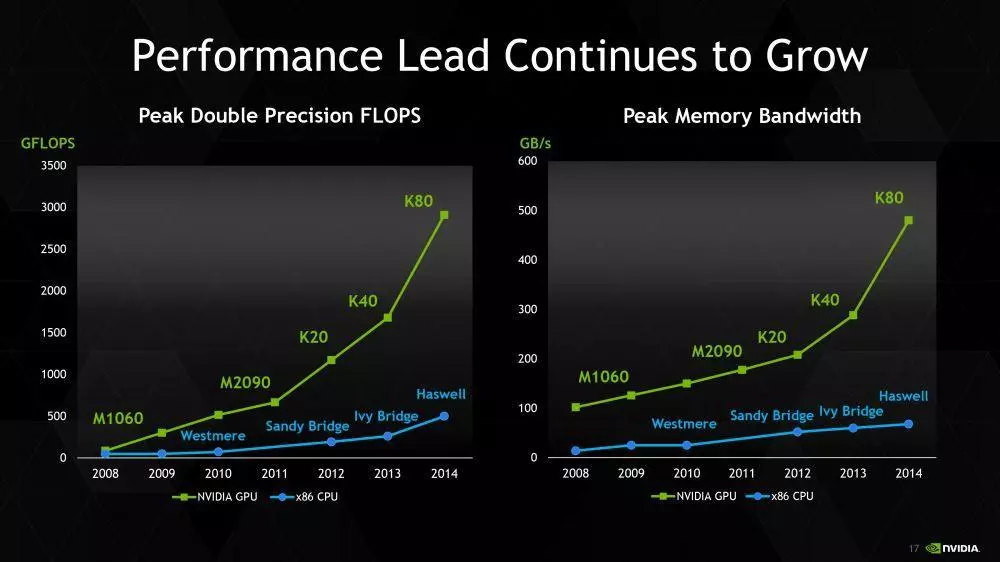

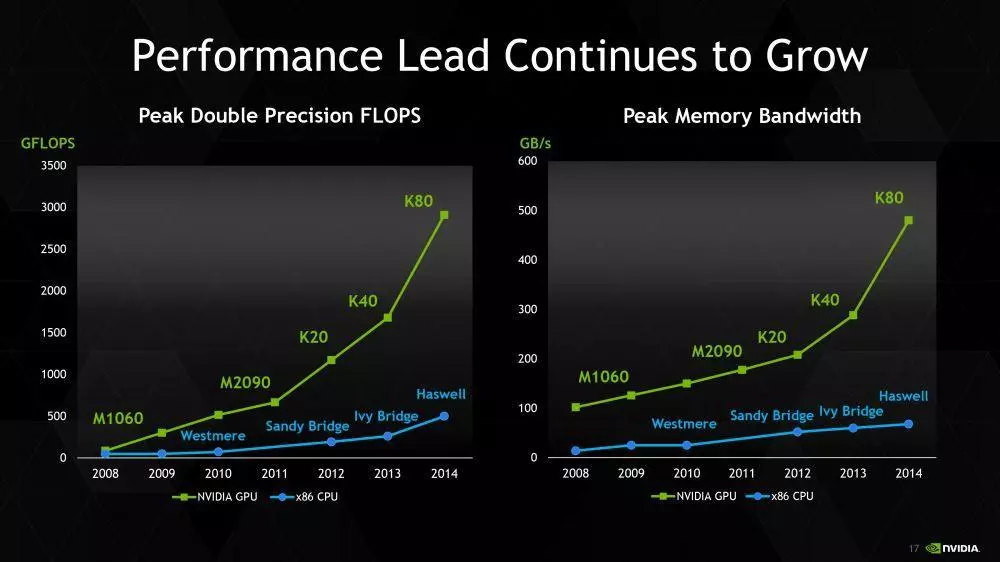

Neural Networks have been around for decades. As mentioned, this is a construct loosely modeled on the human brain. In the past, we could build neural networks but simply didn't have the processing power to get quick results from them. The rise of GPU computing has given Neural Networks and Deep Learning a boost. There is a fast-widening gap between the number of floating-point operations per second (FLOPS) possible with GPUs and with traditional CPUs. In Azure, you can now spin up GPU-based VMs and build neural networks (where you might not have invested in such resources in the old on-premises world).

Image courtesy NVIDIA Corporation

On 24 August 2017, Microsoft announced a Deep Learning acceleration platform built on FPGA (Field Programmable Gate Array) technology.

While traditional Machine Learning works well with repetitive tasks (e.g. finding a pattern in a set of data), a neural network is better at tasks that humans are good at (e.g. recognizing a face within a picture).

Narrow AI vs General AI

All of the above would typically fall under Narrow AI (or Weak AI). Narrow AI refers to non-sentient AI that is designed for a singular purpose (e.g. a Machine Learning model designed to analyze various factors and predict when customers are likely to default on a payment, or when a mechanical failure will occur). Narrow AI can be utilized today in hundreds of scenarios, and with tools like Azure ML, it's very easy to get up and running.
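Here is a minimal sketch of what such a single-purpose model could look like: a tiny logistic regression, trained with plain gradient descent, that estimates the probability of a customer defaulting. Everything here is an assumption for illustration: the two features, the six made-up customers and the function names are mine, and this is not how Azure ML implements it.

```python
import math


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows, labels, lr=0.5, epochs=2000):
    """Fit a logistic regression with simple stochastic gradient descent."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(rows, labels):
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = p - y  # gradient of the log loss with respect to z
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def default_probability(model, x):
    """Estimated probability that a customer with features x defaults."""
    weights, bias = model
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Made-up customers: [fraction of payments missed, credit utilization]
customers = [[0.0, 0.2], [0.1, 0.3], [0.0, 0.5], [0.6, 0.9], [0.5, 0.8], [0.7, 0.7]]
defaulted = [0, 0, 0, 1, 1, 1]

model = train_logistic(customers, defaulted)
print(default_probability(model, [0.65, 0.85]))  # risky-looking customer
print(default_probability(model, [0.05, 0.25]))  # safe-looking customer
```

The model does exactly one thing, scoring default risk, and knows nothing about anything else. That is what makes it "narrow".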

General AI (or Strong AI) refers to a sentient AI that can mimic a human being (like HAL). This is what most people think of when they hear the words "Artificial Intelligence". We are still many years away from this type of AI, although many feel that we could get there by 2030. If I had to predict how we get there, I would say perhaps a very large-scale neural network built on quantum computing, with software breakthroughs being made as well. This is the type of AI that many are fearful of, as it could surpass human intelligence very quickly and there's no telling what the machine will do.

Why would we need a Strong AI? One obvious use case would be putting it onboard a ship for a long space journey: it is essentially a crew member that does not require food or oxygen and can work 24/7. On Earth, the AI would augment our capabilities and be the catalyst for rapid technological advancement. Consider this: we may not know where the AI will add the most value, but once we build it, it will tell us where.

The good news is that you don't need General AI (a HAL 9000) to improve businesses in the world today. We are currently under-utilizing Narrow AI, and there is tremendous opportunity in this space. I encourage you to investigate what's out there today and you will be amazed at the possibilities.
