To counter the spread of disinformation built on AI-generated images, Google is turning to digital watermarks.

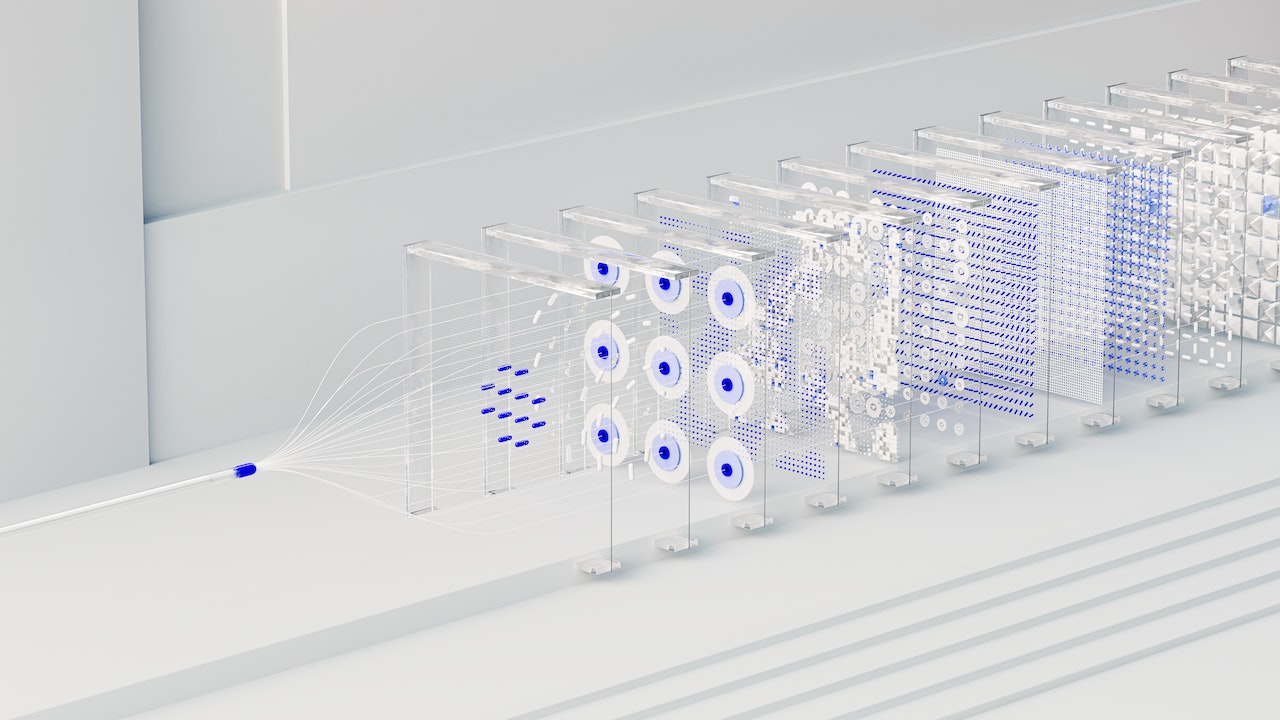

DeepMind, Google's AI arm, has developed SynthID, a tool for identifying images created by artificial intelligence. It targets a growing problem: telling authentic images apart from those produced by AI models.

SynthID works by embedding changes into individual pixels that are too small for the human eye to notice but that software can detect. The goal is to make machine-generated images more reliably identifiable. SynthID is not immune to extreme image manipulation, but it is a promising step toward verifying the authenticity of visual content.
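The article does not describe SynthID's actual algorithm, and the sketch below is not it. To illustrate the general idea of a pixel-level mark that people cannot see but software can detect, here is a classic correlation-based ("spread-spectrum") toy watermark, assuming a grayscale image stored as nested lists of 0–255 values. The names `make_pattern`, `embed`, and `detect`, the `strength` parameter, and the secret `key` are all hypothetical: the key seeds a pseudo-random pattern of +1/−1 values, every pixel is nudged up or down by a couple of brightness levels, and detection measures how strongly the image correlates with that pattern.

```python
import random

# Hypothetical toy scheme, NOT SynthID. Grayscale pixels 0-255, indexed [row][column].
Image = list[list[int]]


def make_pattern(key: int, height: int, width: int) -> list[list[int]]:
    """Derive a secret pseudo-random +1/-1 pattern from a key."""
    rng = random.Random(key)
    return [[rng.choice((-1, 1)) for _ in range(width)] for _ in range(height)]


def embed(image: Image, key: int, strength: int = 2) -> Image:
    """Shift each pixel by +/-strength following the pattern, clamped to 0-255."""
    height, width = len(image), len(image[0])
    pattern = make_pattern(key, height, width)
    return [
        [min(255, max(0, image[y][x] + strength * pattern[y][x])) for x in range(width)]
        for y in range(height)
    ]


def detect(image: Image, key: int) -> float:
    """Average correlation between the mean-removed image and the secret pattern.

    Close to `strength` for an image marked with this key, close to 0 otherwise.
    """
    height, width = len(image), len(image[0])
    pattern = make_pattern(key, height, width)
    n = height * width
    mean = sum(map(sum, image)) / n
    total = sum(
        (image[y][x] - mean) * pattern[y][x]
        for y in range(height)
        for x in range(width)
    )
    return total / n


if __name__ == "__main__":
    # A smooth synthetic 256x256 gradient stands in for a real photo.
    original = [[64 + (x + y) // 4 for x in range(256)] for y in range(256)]
    marked = embed(original, key=1234)
    print(f"original:  {detect(original, key=1234):.2f}")
    print(f"marked:    {detect(marked, key=1234):.2f}")
    print(f"wrong key: {detect(marked, key=9999):.2f}")
```

A change of two brightness levels out of 255 is hard to see, yet summed over tens of thousands of pixels it stands out clearly to the detector: the marked copy scores near `strength`, while the original image, or the right image checked with the wrong key, scores near zero. Unlike what DeepMind claims for SynthID, this toy does not survive cropping or resizing, because those operations shift the pixels out of alignment with the secret pattern.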

The rapid spread of AI image generators has made it harder to tell real images from synthetic ones. Popular tools such as Midjourney, which has more than 14.5 million users, let anyone create an image from a simple text prompt. The trend has also raised concerns about copyright and ownership, prompting a search for new safeguards.

For now, SynthID applies only to images produced by Imagen, Google's proprietary image generator. By limiting the watermark to its own platform, Google aims to simplify SynthID's rollout and make its effectiveness easier to assess.

Traditional watermarks, such as logos or text, identify an image's owner and deter unauthorized use. However, they can easily be altered or removed, which makes them unreliable for labeling AI-generated images. SynthID instead produces watermarks that are practically imperceptible to people but are designed to remain detectable by DeepMind's software even after an image is cropped, edited, or resized.

Pushmeet Kohli, head of research at DeepMind, describes SynthID as an "experimental launch" and says feedback from users will be needed to assess how robust it is. According to Kohli, although the embedded changes are subtle, they can withstand a variety of manipulations, which makes identifying AI-generated images more practical.

Google has joined six other leading AI companies in a voluntary agreement to implement watermarks, part of a collective push for safe AI development. Industry experts such as Claire Leibowicz of the Partnership on AI, however, stress the need for standards shared across companies: coordinated methods, reporting, and transparency would make the identification of AI-generated content more reliable.

China has already banned AI-generated images that lack watermarks, underscoring that this is a global problem. Microsoft, Amazon, and Meta have joined Google in pledging to adopt watermarking. Meta, for instance, has extended the commitment to its unreleased video generator, carrying its transparency efforts beyond still images.

Google's SynthID aims to change how AI-generated images are identified and to help curb the spread of disinformation. By making watermarks invisible to people yet detectable by software, it uses machine analysis to catch what human perception cannot. As the online world grapples with the challenges of AI-generated content, SynthID's experimental release marks an early step toward greater image authenticity and accountability.