
Scientists are working hard to enable artificial intelligence (AI) to identify and reduce the impact of human biases.

Artificial intelligence algorithms are meant to mimic the way the human brain works, and organizations use them to optimize their activities.

Unfortunately, while we have come closer to recreating human intelligence artificially, AI also displays another distinctively human trait: prejudice against people based on their race, ethnicity or gender. Bias in AI is not a novel concept. Biased algorithms have been uncovered in healthcare, law enforcement and recruitment, both recently and in the past. As a result, the intolerance and discrimination of centuries gone by keep re-emerging in one form or another, even as the world seems to be moving towards inclusivity for everyone. To understand how AI reinforces long-held human prejudices, we need to examine the ways in which bias creeps into AI models and neural networks.

An AI-powered system's decisions reflect the data used to train it. If the datasets an AI model ingests are discriminatory, its recommendations and decisions will follow the same pattern. In the initial machine learning phase, biased AI models can arise in two ways. First, the training data as a whole may be narrow and biased. Second, a dataset may contain biased samples that skew the resulting algorithm. The input data may be narrow through negligence, or because the data scientists running the training process are themselves conservative, narrow-minded and, yes, prejudiced.
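To make the point concrete, here is a deliberately naive sketch, assuming a hypothetical "model" that simply learns each group's historical approval rate and approves anyone whose group was approved at least half the time. The groups, records and the functions `fit_group_rates` and `predict` are all invented for illustration. Real models are far more complex, but the principle is the same: a model fitted to skewed historical decisions reproduces the skew.

```python
from collections import defaultdict


def fit_group_rates(records):
    """Learn the historical approval rate for each group.

    records: iterable of (group, approved) pairs, where approved is a bool.
    """
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}


def predict(rates, group, threshold=0.5):
    """Approve if the group's historical approval rate meets the threshold."""
    return rates[group] >= threshold


# Hypothetical historical decisions in which group "B" was rarely approved.
history = (
    [("A", True)] * 80 + [("A", False)] * 20
    + [("B", True)] * 30 + [("B", False)] * 70
)
rates = fit_group_rates(history)
print(rates)  # {'A': 0.8, 'B': 0.3}
print(predict(rates, "A"), predict(rates, "B"))  # True False
```

Nothing in the code is explicitly prejudiced; the disparity comes entirely from the historical data it was given.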

One of the best-known examples of discriminatory AI is the infamous COMPAS system, used in several US states. The AI-powered system used historical penitentiary databases and a regression model to predict whether defendants were likely to re-offend in the future. ProPublica's 2016 analysis of its predictions found that African American defendants who did not go on to re-offend were nearly twice as likely as white defendants to be wrongly labeled high risk. One of the primary reasons for this bias was that the system's operators never checked its predictions for discriminatory undertones. The victims of AI bias are often women, members of racial or ethnic minorities in a given area, or people with an immigrant background. As the saying goes, AI models do not introduce new biases; they simply reflect the ones already present in society.
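Checking predictions for discriminatory patterns can start with a simple per-group error comparison. The sketch below, assuming audit data of the form (group, predicted high risk, actually re-offended), computes each group's false positive rate: the share of people who did not re-offend but were still flagged as high risk. The group labels, the data and the function `false_positive_rates` are hypothetical; this illustrates the kind of metric an audit might use, not how COMPAS itself was evaluated.

```python
from collections import defaultdict


def false_positive_rates(rows):
    """Per-group false positive rate of a risk-prediction tool.

    rows: iterable of (group, predicted_high_risk, reoffended) tuples.
    A false positive is a person flagged as high risk who did not re-offend.
    """
    flagged = defaultdict(int)
    did_not_reoffend = defaultdict(int)
    for group, predicted_high_risk, reoffended in rows:
        if not reoffended:
            did_not_reoffend[group] += 1
            if predicted_high_risk:
                flagged[group] += 1
    return {g: flagged[g] / did_not_reoffend[g] for g in did_not_reoffend}


# Hypothetical audit data: 10 people per group who did not re-offend,
# plus 5 per group who did (those rows do not affect the false positive rate).
rows = (
    [("X", True, False)] * 4 + [("X", False, False)] * 6 + [("X", True, True)] * 5
    + [("Y", True, False)] * 2 + [("Y", False, False)] * 8 + [("Y", True, True)] * 5
)
rates = false_positive_rates(rows)
print(rates)  # {'X': 0.4, 'Y': 0.2}
```

In this made-up data, people in group X who never re-offend are flagged at twice the rate of their counterparts in group Y, which is exactly the kind of gap an audit should surface.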

The data collection process for machine training can be biased as well. In this case, designated AI governance officials may be aware of the bias in the collected data yet choose to ignore it. For instance, when collecting data on student backgrounds for admissions, a school might admit only white candidates and simply refuse learning opportunities to other children. An AI model trained on those admission records would then learn the school's practice of selecting only white students and carry the racist tradition forward, because every pattern in its training data points to that being the right admission decision. The result is a cycle in which racism is reinforced several times over, despite the cutting-edge technology handling the process.

Apart from race- or gender-based discrimination, bias in AI can also take the form of preferential treatment for the wealthy, since poorer people may be under-represented in AI datasets. A hypothetical example can be found in the ongoing COVID-19 era. Several countries have developed mobile applications that track people infected with the virus and alert others in a given area to keep their distance from them. While the initiative serves a noble purpose, people without smartphones are simply invisible to such applications. This type of bias is nobody's fault in particular, but it undermines the very purpose of building such applications.

To sum up, discriminatory training data and operational practices can directly lead to bias in AI systems and models.

Bias can also seep into AI models through proxies. Some of the details used for machine training may correlate with protected characteristics such as race or gender. Bias of this kind is often unintentional: data that seems a rational basis for calibrated decisions can end up serving as a proxy for membership of a protected group.

For example, imagine a financial institution that uses an AI-powered system to predict which loan applicants may struggle with their repayments. The training data contains historical information spanning more than three decades, but no details of the applicants' skin color or gender. Now suppose the system predicts that people living in a particular locality (identified by its postal code) are likely to default on their loan repayments, purely on the basis of historical records. If that locality is home mainly to one ethnic group, the postal code effectively stands in for ethnicity, even though ethnicity was never part of the input. Residents of that area may rightly feel discriminated against when the bank refuses their loan requests because of where they live. This type of bias can be mitigated by involving human officials who can override the system's decisions based on each applicant's actual circumstances rather than historical records alone.
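One rough way to check whether a feature such as postal code acts as a proxy is to ask how well it alone predicts the protected attribute. The sketch below, assuming the institution holds group labels for auditing purposes only (not as a model input), guesses the most common group for each postal code and compares that accuracy with a baseline that always guesses the overall majority group. The postal codes, group labels and the functions `proxy_accuracy` and `baseline_accuracy` are hypothetical.

```python
from collections import Counter, defaultdict


def proxy_accuracy(rows):
    """How well a single feature predicts a protected attribute.

    rows: list of (feature_value, protected_group) pairs. For each feature
    value we guess its most common group and count how many rows that
    guess gets right.
    """
    by_value = defaultdict(Counter)
    for value, group in rows:
        by_value[value][group] += 1
    correct = sum(counts.most_common(1)[0][1] for counts in by_value.values())
    total = sum(sum(counts.values()) for counts in by_value.values())
    return correct / total


def baseline_accuracy(rows):
    """Accuracy of always guessing the overall majority group."""
    groups = Counter(group for _, group in rows)
    return groups.most_common(1)[0][1] / sum(groups.values())


# Hypothetical applicants: postal code "1000" is mostly group A, "2000" mostly B.
rows = (
    [("1000", "A")] * 45 + [("1000", "B")] * 5
    + [("2000", "A")] * 10 + [("2000", "B")] * 40
)
print(proxy_accuracy(rows), baseline_accuracy(rows))  # 0.85 0.55
```

A large gap between the two numbers (0.85 against 0.55 here) suggests the feature carries much of the information in the protected attribute, so decisions based on it deserve closer human review.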

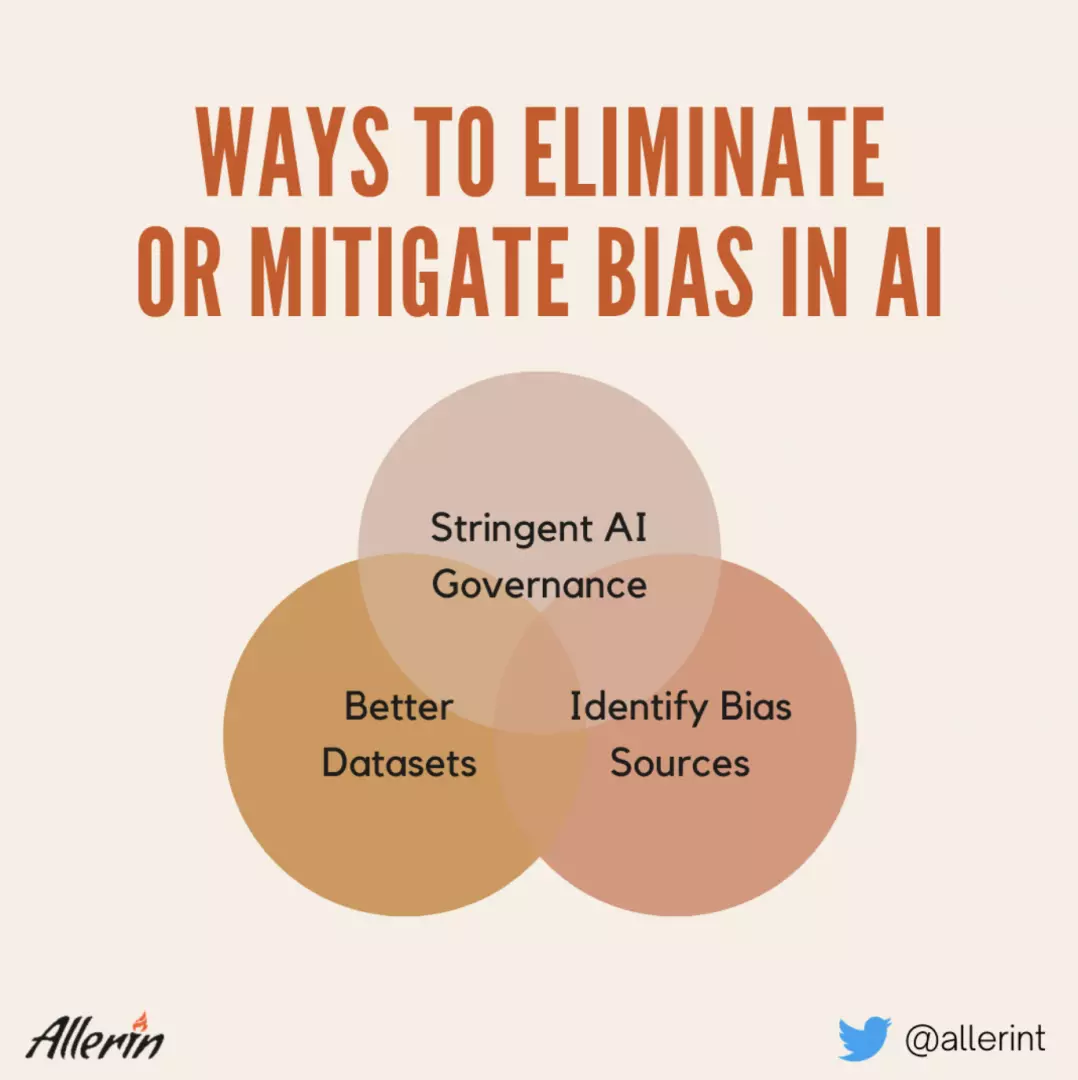

Biased AI can arise in several other ways as well, each of which carries age-old prejudices into the present day. Fortunately, there are also ways to eliminate bias in AI for good, or at least reduce it to a great extent.

Every individual in an organization needs to work at reducing the likelihood of its AI systems being biased. As we have seen, bias in AI stems from the data a system receives, whether for machine training or for day-to-day operations. Data scientists and other experts who gather large volumes of training and operational data need to use diverse data that includes people of all ethnicities, including racial minorities. Women must be represented as much as men in such datasets. In addition, an AI model should be segmented only if the input data the experts provide to it is segmented in the same way.
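A first step towards diverse data is simply measuring who is in it. The sketch below, assuming reference population shares are available from some trusted source, compares each group's share of a dataset with its expected share and flags groups that fall short by more than a tolerance. The counts, the 50/50 reference split, the 0.05 tolerance and the function `under_represented` are hypothetical choices for illustration.

```python
def under_represented(sample_counts, reference_shares, tolerance=0.05):
    """Flag groups whose share of the dataset falls short of a reference share.

    sample_counts: dict mapping group -> number of records in the dataset.
    reference_shares: dict mapping group -> expected share (0..1).
    Returns a dict of flagged groups and their observed share (rounded).
    """
    total = sum(sample_counts.values())
    flagged = {}
    for group, expected in reference_shares.items():
        # Groups missing from the dataset entirely count as a share of 0.
        observed = sample_counts.get(group, 0) / total
        if expected - observed > tolerance:
            flagged[group] = round(observed, 3)
    return flagged


counts = {"women": 300, "men": 700}
reference = {"women": 0.5, "men": 0.5}
print(under_represented(counts, reference))  # {'women': 0.3}
```

Only shortfalls are flagged; an over-represented group (men, in this example) is the mirror image of the same problem and shows up as another group's gap.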

Organizations using AI-powered applications should also avoid using different models for different racial and ethnic groups. If there is too little data for one group of people, they can use techniques such as weighting to balance that group's influence relative to the others. Bias can creep into an AI model whenever some groups of data are not handled with the same care and weight as the rest.
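A common form of weighting gives every record a weight inversely proportional to its group's size, so that each group contributes the same total weight during training. The sketch below uses the formula n / (k × n_g), where n is the number of records, k the number of groups and n_g the size of the record's group; this is the same heuristic scikit-learn uses for `class_weight="balanced"`, applied here to groups rather than classes. The group labels and the function `balanced_weights` are hypothetical.

```python
from collections import Counter


def balanced_weights(groups):
    """Return one weight per record so every group has equal total weight.

    groups: list of group labels, one per training record.
    Weight for a record in group g = n / (k * n_g).
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]


# Hypothetical dataset: group "B" has a third as many records as group "A".
groups = ["A"] * 6 + ["B"] * 2
weights = balanced_weights(groups)
print(weights[0], weights[-1])  # 0.6666666666666666 2.0
```

Each group then carries the same total weight (4 in this example). Many training APIs, such as the `sample_weight` argument accepted by `fit` on many scikit-learn estimators, can consume weights like these, so a single model serves every group instead of separate per-group models.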

Identifying the areas and operations where certain types of bias may enter an AI system is the primary responsibility of AI governance teams and executives in any organization. Ideally, this should happen before AI is introduced into the organization. Organizations can mitigate bias by examining datasets and checking whether they will give AI models a narrow 'point of view'. After a thorough examination, an organization should conduct trial runs to see whether its AI systems show signs of bias in their working. Most importantly, organizations should draw up a list of questions covering every area where AI bias could arise, and then work through them one by one until each question is answered.
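One widely used check during such trial runs is the disparate impact ratio: each group's selection rate divided by the highest group's rate. US employment guidelines use a "four-fifths rule", treating a ratio below 0.8 as a sign of possible adverse impact. The sketch below, assuming trial outcomes are recorded as (group, selected) pairs, computes that ratio; the trial data and the function `disparate_impact` are hypothetical, and a low ratio is a prompt for investigation rather than proof of bias.

```python
from collections import Counter


def disparate_impact(outcomes, threshold=0.8):
    """Selection rate per group, lowest/highest rate ratio, and a flag.

    outcomes: iterable of (group, selected) pairs, where selected is a bool.
    Returns (rates, ratio, flagged) with flagged True if ratio < threshold.
    """
    totals, selected = Counter(), Counter()
    for group, chosen in outcomes:
        totals[group] += 1
        selected[group] += int(chosen)
    rates = {g: selected[g] / totals[g] for g in totals}
    highest = max(rates.values())
    if highest == 0:
        # Nobody was selected, so there is no disparity between groups.
        return rates, 1.0, False
    ratio = min(rates.values()) / highest
    return rates, ratio, ratio < threshold


# Hypothetical trial run: 50% of group A selected versus 30% of group B.
trial = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)
rates, ratio, flagged = disparate_impact(trial)
print(rates, round(ratio, 2), flagged)  # {'A': 0.5, 'B': 0.3} 0.6 True
```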

The AI governance team plays a key role in preventing AI systems from becoming discriminatory over time. To keep bias out of the equation, the governance team must regularly update the AI models. More importantly, the team should set non-negotiable rules and guidelines for detecting and eliminating, or at least mitigating, bias in the input datasets used for machine training. Clear communication channels must also be established so that employees at any level can alert the governance team when they receive customer complaints about AI discrimination. The same channels can be used when employees themselves suspect that their organization's AI is biased.

Bias in AI can be extremely distressing for the individuals or groups who fall prey to its decisions. Worse, biased AI systems become a new-generation embodiment of the centuries of marginalization and discrimination that victims have faced throughout human history. We must therefore nip algorithmic bias in the bud through diverse input datasets for model training and competent AI governance. Discriminatory AI is everyone's problem, so all parties involved in designing, implementing and maintaining AI models in organizations should work together to resolve it.

Naveen is the Founder and CEO of Allerin, a software solutions provider that delivers innovative and agile solutions that enable organizations to automate, inspire and impress. He is a seasoned professional with more than 20 years of experience, including extensive work customizing open source products for cost optimization of large-scale IT deployments. He is currently working on Internet of Things solutions with Big Data Analytics. Naveen completed his programming qualifications at various Indian institutes.
