With the help of gradient descent algorithms, developers can minimize a model's cost function and thereby optimize its parameters.

Machine learning (ML) algorithms and deep learning neural networks depend on a set of parameters, such as weights and biases, and on a cost function that measures how good a given set of parameters is. The lower the cost, the more closely the model's outputs match the training data. Minimizing this cost is therefore one of the key aspects of optimizing AI models. Many optimization algorithms can be used for this, and gradient descent is one of the most popular.
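To make the idea of a cost function concrete, here is a minimal sketch. It assumes a simple linear model with one weight `w` and one bias `b`, scored with mean squared error (MSE). The model, the choice of MSE, the function name `mse_cost`, and the toy data are illustrative assumptions; real networks have far more parameters and may use other cost functions.

```python
# Illustrative toy data (an assumption): targets follow y = 2x + 1 exactly.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

def mse_cost(w: float, b: float, xs: list[float], ys: list[float]) -> float:
    """Mean squared error of the hypothetical linear model y_hat = w * x + b."""
    n = len(xs)
    # Average of the squared differences between predictions and targets.
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n

print(mse_cost(0.0, 0.0, xs, ys))  # poor parameters, high cost: 21.0
print(mse_cost(2.0, 1.0, xs, ys))  # parameters that fit the data: 0.0
```

Better parameters give a lower cost, and that number is what an optimizer such as gradient descent tries to drive down.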

How a Gradient Descent Algorithm Works

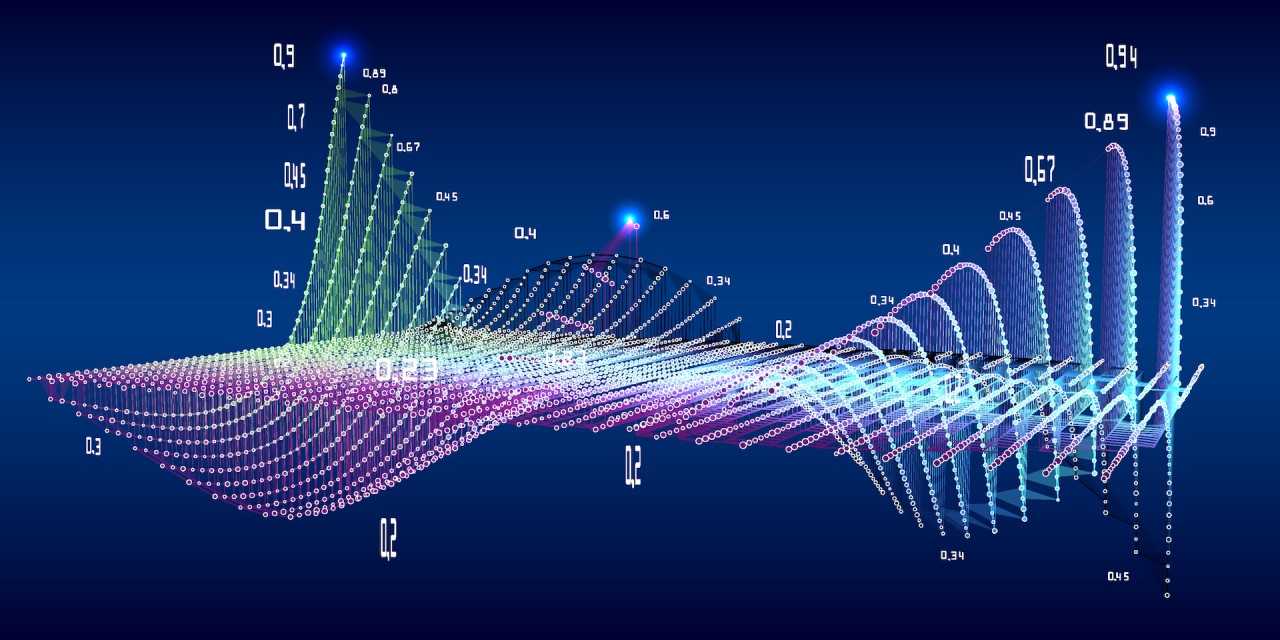

Suppose you place a ball on an inclined surface. Gravity pulls it downhill, and it keeps rolling until it reaches a flat spot, where it comes to rest. Gradient descent works in a similar way. Think of the surface as the cost function plotted over all possible parameter values, and the ball's position as the current set of parameters. Gradient descent plays the role of gravity, moving the parameters downhill until the cost reaches a minimum, the flat spot where the ball stops.

The algorithm starts with an initial set of parameters and improves them step by step. At each step, it calculates the gradient (the derivative) of the cost function with respect to the parameters, which gives the slope of the cost surface at the current point. Because the gradient points in the direction of steepest increase, the algorithm moves the parameters a small step in the opposite direction to reduce the cost. It repeats this until the cost stops decreasing meaningfully, which means it has reached a minimum; if the cost function is not convex, this may be a local rather than a global minimum. The different types of gradient descent algorithms differ mainly in how much of the training data they use to calculate this gradient at each step.
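The downhill loop described above can be sketched in Python. This is a minimal sketch under stated assumptions: a one-feature linear model `y_hat = w * x + b` with an MSE cost, and a hypothetical function `gradient_descent` whose learning rate (0.05), step count (2000), and toy data are arbitrary illustrative choices. Each step applies the standard update θ ← θ − η · ∂J/∂θ to both `w` and `b`, where η is the learning rate and J is the cost.

```python
def gradient_descent(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y_hat = w * x + b by gradient descent on the MSE cost (illustrative)."""
    w, b = 0.0, 0.0  # arbitrary starting point: the ball's initial position
    n = len(xs)
    for _ in range(steps):
        # Prediction error for every training example.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of J = (1/n) * sum(error**2) with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Move opposite to the gradient, i.e. downhill on the cost surface.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy data (an assumption) generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))  # prints 2.0 1.0
```

The learning rate controls the step size: too large a step can overshoot the minimum and diverge, while too small a step makes progress slow. A fixed step count keeps the sketch simple; practical implementations usually also stop once the cost or the gradient stops changing meaningfully.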

What are the Different Types of Gradient Descent Algorithms?

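Depending on how much of the training data a gradient descent algorithm uses to calculate each gradient, it can be classified into three types: batch gradient descent, which uses the entire training set; stochastic gradient descent, which uses a single example; and mini-batch gradient descent, which uses a small subset of examples.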
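Batch, stochastic, and mini-batch gradient descent differ only in how many examples feed each gradient estimate, so one routine with a batch-size parameter can express all three. The following is a minimal sketch, assuming a one-feature linear model with an MSE cost; the function name `minibatch_gradient_descent`, its parameters and defaults, the per-epoch shuffling, and the toy data are all illustrative assumptions rather than a reference implementation.

```python
import random

def minibatch_gradient_descent(xs, ys, batch_size, learning_rate=0.05, epochs=1000, seed=0):
    """Fit y_hat = w * x + b, estimating each gradient from batch_size examples.

    batch_size == len(xs) -> batch gradient descent
    batch_size == 1       -> stochastic gradient descent
    anything in between   -> mini-batch gradient descent
    """
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    indices = list(range(len(xs)))
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the examples in a new random order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            m = len(batch)  # the last batch may be smaller than batch_size
            # (error, input) pairs for the examples in this batch only.
            pairs = [(w * xs[i] + b - ys[i], xs[i]) for i in batch]
            grad_w = 2.0 / m * sum(e * x for e, x in pairs)
            grad_b = 2.0 / m * sum(e for e, _ in pairs)
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b

# Toy data (an assumption) generated from y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
for size in (len(xs), 1, 2):  # batch, stochastic, mini-batch
    w, b = minibatch_gradient_descent(xs, ys, batch_size=size)
    print(size, round(w, 3), round(b, 3))
```

Smaller batches make each update cheaper to compute but the gradient estimate noisier, while larger batches give smoother but more expensive steps; mini-batch gradient descent trades off between the two.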
