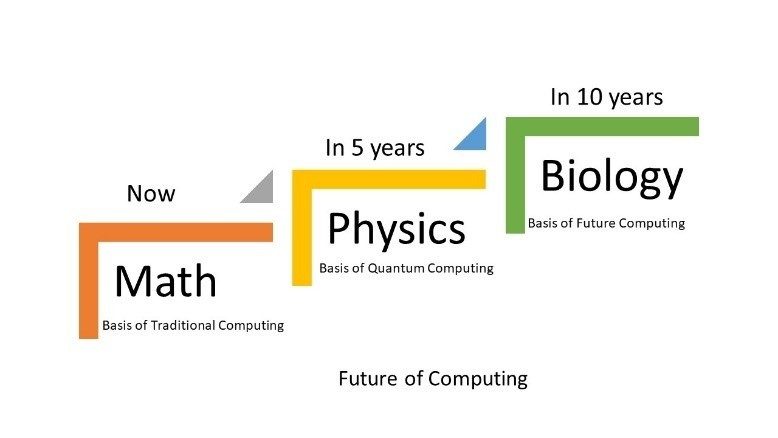

Quantum computing is the area of study focused on developing computer technology based on the principles of quantum theory.

Tens of billions of dollars in public and private capital are being invested in quantum technologies. Countries across the world have realized that quantum technologies can be a major disruptor of existing businesses, and they collectively invested $24 billion in quantum research and applications in 2021.

Classical computing relies, at its most fundamental level, on principles expressed by Boolean algebra. Data must be processed in an exclusive binary state at any point in time, in units we call bits. While the time that each transistor or capacitor needs to be in either 0 or 1 before switching states is now measurable in billionths of a second, there is still a limit as to how quickly these devices can be made to switch state.

As we progress to smaller and faster circuits, we begin to reach the physical limits of materials and the threshold for classical laws of physics to apply. Beyond this, the quantum world takes over. In a quantum computer, a number of elemental particles such as electrons or photons can be used, with either their charge or polarization acting as a representation of 0 and/or 1. Each of these particles is known as a quantum bit, or qubit, and the nature and behavior of these particles form the basis of quantum computing. Classical computers use transistors as the physical building blocks of logic, while quantum computers may use trapped ions, superconducting loops, quantum dots or vacancies in a diamond.

Physical vs Logical Qubits

When discussing quantum computers with error correction, we talk about physical and logical qubits. Physical qubits are the individual hardware qubits in a quantum computer, whereas logical qubits are groups of physical qubits that we use as a single qubit in our computation in order to fight noise through error correction.

To illustrate this, let’s consider an example of a quantum computer with 100 qubits. Let’s say this computer is prone to noise. To remedy this, we can use multiple qubits to form a single, more stable qubit. We might decide that we need 10 physical qubits to form one acceptable logical qubit. In this case we would say our quantum computer has 100 physical qubits, which we use as 10 logical qubits.
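The arithmetic in this example can be sketched in a few lines of Python. The function names are hypothetical, and the ratio of 10 physical qubits per logical qubit is simply the figure used above, not a property of any real error-correcting code.

```python
def logical_qubits(physical_qubits: int, physical_per_logical: int) -> int:
    """Whole logical qubits that fit into a given budget of physical qubits."""
    if physical_per_logical <= 0:
        raise ValueError("physical_per_logical must be positive")
    return physical_qubits // physical_per_logical


def physical_qubits_needed(logical_wanted: int, physical_per_logical: int) -> int:
    """Physical qubits required to build the requested number of logical qubits."""
    return logical_wanted * physical_per_logical


# The example from the text: 100 physical qubits, 10 per logical qubit.
print(logical_qubits(100, 10))          # 10
print(physical_qubits_needed(10, 10))   # 100
```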

Distinguishing between physical and logical qubits is important. There are many estimates as to how many qubits we will need to perform certain calculations, but some of these estimates talk about logical qubits and others talk about physical qubits. For example: To break RSA cryptography we would need thousands of logical qubits but millions of physical qubits.

Another thing to keep in mind: in a classical computer, compute power increases roughly linearly with the number of transistors and clock speed, while in a quantum computer the number of states the machine can hold in superposition doubles with each added logical qubit, so its potential compute power grows exponentially.
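One way to get a feel for this exponential growth is to count how many numbers a classical machine would need just to describe the state of n qubits. The sketch below assumes one complex amplitude per basis state and 16 bytes per amplitude (two 64-bit floats); both the function names and the byte size are illustrative assumptions, and the figures describe the size of the description, not a guaranteed speedup.

```python
def amplitudes_needed(num_qubits: int) -> int:
    """Number of complex amplitudes describing a general n-qubit state."""
    return 2 ** num_qubits


def statevector_bytes(num_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory needed to hold every amplitude (16 bytes each is an assumption)."""
    return amplitudes_needed(num_qubits) * bytes_per_amplitude


for n in (10, 20, 30, 40, 50):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n:>2} qubits: {amplitudes_needed(n):>20,d} amplitudes, {gib:,.3f} GiB")
```

Each additional qubit doubles both columns, which is why even a few dozen qubits quickly outgrow the memory of ordinary hardware.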

The two most relevant aspects of quantum physics are the principles of superposition and entanglement.

Superposition: Think of a qubit as an electron in a magnetic field. The electron’s spin may be either in alignment with the field, which is known as a spin-up state, or opposite to the field, which is known as a spin-down state. According to quantum law, the particle can enter a superposition of states, in which it behaves as if it were in both states simultaneously. Each qubit can therefore take a superposition of both 0 and 1. Where a 2-bit register in an ordinary computer can store only one of four binary configurations (00, 01, 10, or 11) at any given time, a 2-qubit register in a quantum computer can be in a superposition of all four configurations simultaneously, because each qubit can represent two values at once, although measuring the register yields only one of them. As more qubits are added, this capacity grows exponentially.
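As a minimal sketch of the 2-qubit register described here, the Python below represents the register as a list of four amplitudes, one per configuration, and samples a measurement. The equal-weight state and the helper names are assumptions chosen for illustration; a real register can hold any normalized set of amplitudes.

```python
import math
import random


def uniform_superposition(num_qubits: int) -> list:
    """Equal-amplitude superposition over all 2**n basis states."""
    dim = 2 ** num_qubits
    return [1 / math.sqrt(dim)] * dim


def probabilities(state: list) -> list:
    """Measurement probability of each basis state (squared magnitude)."""
    return [abs(amplitude) ** 2 for amplitude in state]


def measure(state: list, rng: random.Random) -> str:
    """Sample one basis state; a measurement returns a single configuration."""
    index = rng.choices(range(len(state)), weights=probabilities(state), k=1)[0]
    num_qubits = len(state).bit_length() - 1
    return format(index, f"0{num_qubits}b")


state = uniform_superposition(2)
for index, p in enumerate(probabilities(state)):
    print(format(index, "02b"), round(p, 3))   # each configuration has probability 0.25

rng = random.Random(0)
print("measured:", measure(state, rng))        # one of 00, 01, 10, 11
```

The register carries all four amplitudes at once, but reading it out still produces just one of the four bit strings.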

Entanglement: Particles that have interacted at some point retain a type of connection and can be entangled with each other in pairs, so that their states are correlated. Knowing the spin state of one entangled particle (up or down) allows one to know that the spin of its mate is in the opposite direction. This correlation holds instantaneously even when the qubits are separated by incredible distances, although it cannot be used to send information faster than the speed of light. No matter how great the distance between the correlated particles, they will remain entangled as long as they are isolated. Taken together, quantum superposition and entanglement create enormously enhanced computing power.
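The "opposite spin" behavior can be illustrated with a small sampling sketch. It assumes the pair is in the state (|01> + |10>)/sqrt(2), writes 0 for spin-up and 1 for spin-down, and only models measurement of both particles along the same axis; the names are hypothetical.

```python
import math
import random

# Amplitudes in the order |00>, |01>, |10>, |11>; only the "opposite" outcomes are present.
ENTANGLED_PAIR = [0.0, 1 / math.sqrt(2), 1 / math.sqrt(2), 0.0]


def measure_pair(state: list, rng: random.Random) -> tuple:
    """Measure both particles; returns (first, second) as 0/1 values."""
    weights = [abs(amplitude) ** 2 for amplitude in state]
    index = rng.choices(range(4), weights=weights, k=1)[0]
    return index >> 1, index & 1


rng = random.Random(42)
results = [measure_pair(ENTANGLED_PAIR, rng) for _ in range(1000)]

always_opposite = all(first != second for first, second in results)
first_is_down = sum(first for first, _ in results)

print(always_opposite)                    # True
print(first_is_down, "of 1000 runs had the first particle spin-down")
```

Each particle on its own looks like a fair coin flip, yet the two results always disagree. That pattern is the correlation the paragraph describes.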

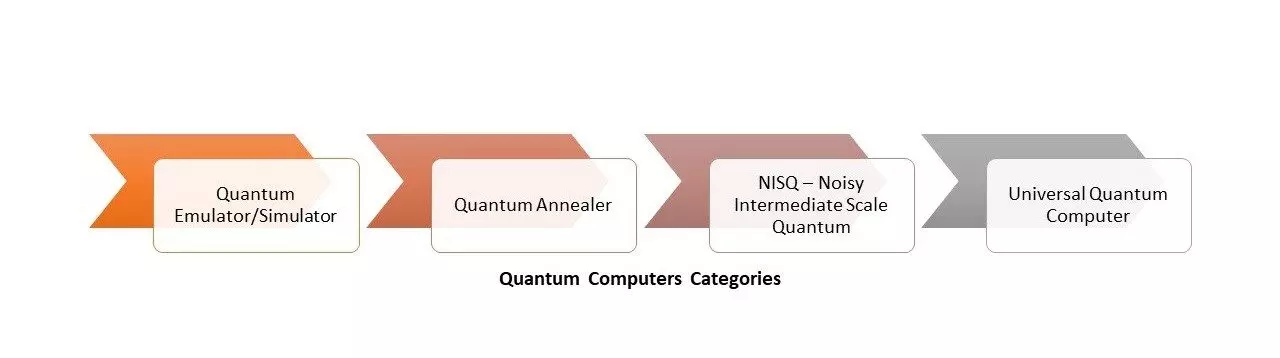

Quantum computers fall into four categories:

Quantum Emulator/Simulator

These are classical computers that you can buy today that simulate quantum algorithms. They make it easy to test and debug a quantum algorithm that someday may be able to run on a Universal Quantum Computer (UQC). Since they don’t use any quantum hardware, they are no faster than standard computers.
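To make the idea concrete, here is a toy state-vector simulator in plain Python. It is a sketch under simplifying assumptions (no noise, qubit 0 stored as the least significant bit, helper names invented for this example) and is not how any particular commercial simulator is implemented.

```python
import math


def apply_single_qubit_gate(state: list, gate: list, target: int) -> list:
    """Apply a 2x2 gate to qubit `target` (qubit 0 is the least significant bit)."""
    new_state = [0j] * len(state)
    mask = 1 << target
    for index, amplitude in enumerate(state):
        if amplitude == 0:
            continue
        bit = (index >> target) & 1
        base = index & ~mask
        for out_bit in (0, 1):
            new_state[base | (out_bit << target)] += gate[out_bit][bit] * amplitude
    return new_state


def apply_cnot(state: list, control: int, target: int) -> list:
    """Flip `target` in every basis state where `control` is 1."""
    new_state = [0j] * len(state)
    for index, amplitude in enumerate(state):
        if (index >> control) & 1:
            new_state[index ^ (1 << target)] += amplitude
        else:
            new_state[index] += amplitude
    return new_state


R = 1 / math.sqrt(2)
HADAMARD = [[R, R], [R, -R]]

state = [0j] * 4
state[0] = 1 + 0j                                   # start in |00>
state = apply_single_qubit_gate(state, HADAMARD, target=0)
state = apply_cnot(state, control=0, target=1)

print([round(abs(a) ** 2, 3) for a in state])       # [0.5, 0.0, 0.0, 0.5]
```

The two-gate circuit leaves the register in an entangled state where only 00 and 11 can be observed. Because the list doubles in length with every added qubit, this approach runs out of classical memory long before it reaches the size of a useful quantum computer.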

Quantum Annealer

A special-purpose quantum computer designed to run only combinatorial optimization problems, not general-purpose computing or cryptography problems. While annealers have more physical qubits than any other current system, those qubits are not organized as gate-based logical qubits. Currently this is a commercial technology in search of a future viable market.
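For a sense of what a combinatorial optimization problem looks like, the sketch below writes a tiny graph-partitioning problem as an energy function over +1/-1 variables and finds the lowest-energy assignment by classical brute force. The graph, the energy function and the names are illustrative assumptions; an annealer would search for a low-energy assignment physically instead of enumerating every option.

```python
from itertools import product

# A hypothetical 4-node graph: a square with one diagonal.
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
NUM_NODES = 4


def energy(spins: tuple, edges: list) -> int:
    """Sum of spin products over edges; lower energy means more edges are cut."""
    return sum(spins[i] * spins[j] for i, j in edges)


def brute_force_minimum(num_nodes: int, edges: list) -> tuple:
    """Try all 2**n assignments of +1/-1 and keep one with the lowest energy."""
    best = min(product((-1, 1), repeat=num_nodes), key=lambda s: energy(s, edges))
    return best, energy(best, edges)


best_spins, best_energy = brute_force_minimum(NUM_NODES, EDGES)
cut_size = sum(1 for i, j in EDGES if best_spins[i] != best_spins[j])

print("best assignment:", best_spins)
print("energy:", best_energy, "edges cut:", cut_size)
```

Brute force checks 2**n assignments, so it stops being practical after a few dozen variables. Problems of this shape are the ones annealers are built to attack.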

Noisy Intermediate-Scale Quantum (NISQ) Computers

Think of these as prototypes of a Universal Quantum Computer, with several orders of magnitude fewer qubits. They currently have 50-100 qubits, limited gate depths, and short coherence times. Because they are several orders of magnitude short of the qubits required, NISQ computers cannot yet perform any useful computation; however, they are a necessary phase in the learning, especially to drive total system and software learning in parallel with the hardware development. Think of them as the training wheels for future universal quantum computers.

Universal Quantum Computers / Cryptographically Relevant Quantum Computers (CRQC)

This is the ultimate goal. If you could build a universal quantum computer with fault tolerance (i.e., millions of error-corrected physical qubits resulting in thousands of logical qubits), you could run quantum algorithms in cryptography, search and optimization, quantum systems simulation, and linear equation solvers.

Post-Quantum / Quantum-Resistant Codes

New cryptographic systems would be secure against both quantum and conventional computers and could interoperate with existing communication protocols and networks. The symmetric key algorithms of the Commercial National Security Algorithm (CNSA) Suite were selected to be secure for national security systems usage even if a CRQC is developed. Cryptographic schemes that commercial industry believes are quantum-safe include lattice-based cryptography, hash trees, multivariate equations, and supersingular isogeny elliptic curves.
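To give a flavor of the hash-based family, here is a minimal sketch of a Lamport one-time signature, the kind of building block that hash-tree schemes combine under a single public root. It is a teaching example only: the helper names are invented here, each key pair must sign exactly one message, and nothing about it should be treated as a production or standardized scheme.

```python
import hashlib
import secrets

HASH_BITS = 256


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def generate_keypair() -> tuple:
    """Private key: 256 pairs of random secrets. Public key: their hashes."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(HASH_BITS)]
    public = [(_h(zero), _h(one)) for zero, one in private]
    return private, public


def _message_bits(message: bytes) -> list:
    digest = int.from_bytes(_h(message), "big")
    return [(digest >> i) & 1 for i in range(HASH_BITS)]


def sign(message: bytes, private: list) -> list:
    """Reveal one secret per digest bit. A key must never sign twice."""
    return [private[i][bit] for i, bit in enumerate(_message_bits(message))]


def verify(message: bytes, signature: list, public: list) -> bool:
    """Check that each revealed secret hashes to the matching public value."""
    bits = _message_bits(message)
    if len(signature) != HASH_BITS:
        return False
    return all(_h(sig) == public[i][bit] for i, (sig, bit) in enumerate(zip(signature, bits)))


private_key, public_key = generate_keypair()
signature = sign(b"hello quantum world", private_key)
print(verify(b"hello quantum world", signature, public_key))   # True
print(verify(b"tampered message", signature, public_key))      # False
```

The security rests only on the hash function being hard to invert, which is why schemes in this family are considered candidates for a post-quantum world.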

• Interference – During the computation phase of a quantum calculation, the slightest disturbance in a quantum system (say a stray photon or wave of EM radiation) causes the quantum computation to collapse, a process known as decoherence. A quantum computer must be totally isolated from all external interference during the computation phase.

• Error correction – Given the nature of quantum computing, error correction is ultra-critical – even a single error in a calculation can cause the validity of the entire computation to collapse.

• Output observance – Closely related to the above two, retrieving output data after a quantum calculation is complete risks corrupting the data.
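The error-correction point can be illustrated with a classical analogy: store each bit three times and take a majority vote. This is only a sketch with hypothetical names. Real quantum error correction cannot copy qubits and must also handle phase errors, so actual codes are considerably more involved.

```python
def encode(bit: int) -> list:
    """Repeat one logical bit across three physical bits."""
    return [bit] * 3


def decode(bits: list) -> int:
    """Majority vote: recovers the logical bit if at most one copy flipped."""
    return 1 if sum(bits) >= 2 else 0


def logical_error_rate(p: float) -> float:
    """Chance that two or three of the copies flip, given flip probability p."""
    return 3 * p ** 2 * (1 - p) + p ** 3


noisy = encode(1)
noisy[0] ^= 1                       # a single physical error
print(decode(noisy))                # 1, the error is corrected

for p in (0.1, 0.01, 0.001):
    print(f"physical error {p}: logical error {logical_error_rate(p):.6f}")
```

As long as the physical error rate is small, the encoded bit fails far less often than any single copy, which is the same trade of many physical qubits for one more reliable logical qubit.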

Ahmed Banafa is an expert in new tech with appearances on ABC, NBC, CBS, FOX TV and radio stations. He served as a professor, academic advisor and coordinator at well-known American universities and colleges. His research is featured in Forbes, MIT Technology Review, ComputerWorld and Techonomy. He has published over 100 articles about the internet of things, blockchain, artificial intelligence, cloud computing and big data. His research papers are used in many patents, numerous theses and conferences. He is also a guest speaker at international technology conferences. He is the recipient of several awards, including Distinguished Tenured Staff Award, Instructor of the Year and Certificate of Honor from the City and County of San Francisco. Ahmed studied cyber security at Harvard University. He is the author of the book: Secure and Smart Internet of Things Using Blockchain and AI.
