Setting up your hypothesis test for success as a data scientist is critical. I want to go deep with you on exactly how I work with stakeholders ahead of launching a test. This step is crucial to make sure that once a test is done running, we’ll actually be able to analyze it. This includes:

- A well defined hypothesis

- A solid test design

- Knowing your sample size

- Understanding potential conflicts

- Population criteria (who are we testing)

- Test duration (it’s like the cousin of sample size)

- Success metrics

- Decisions that will be made based on results

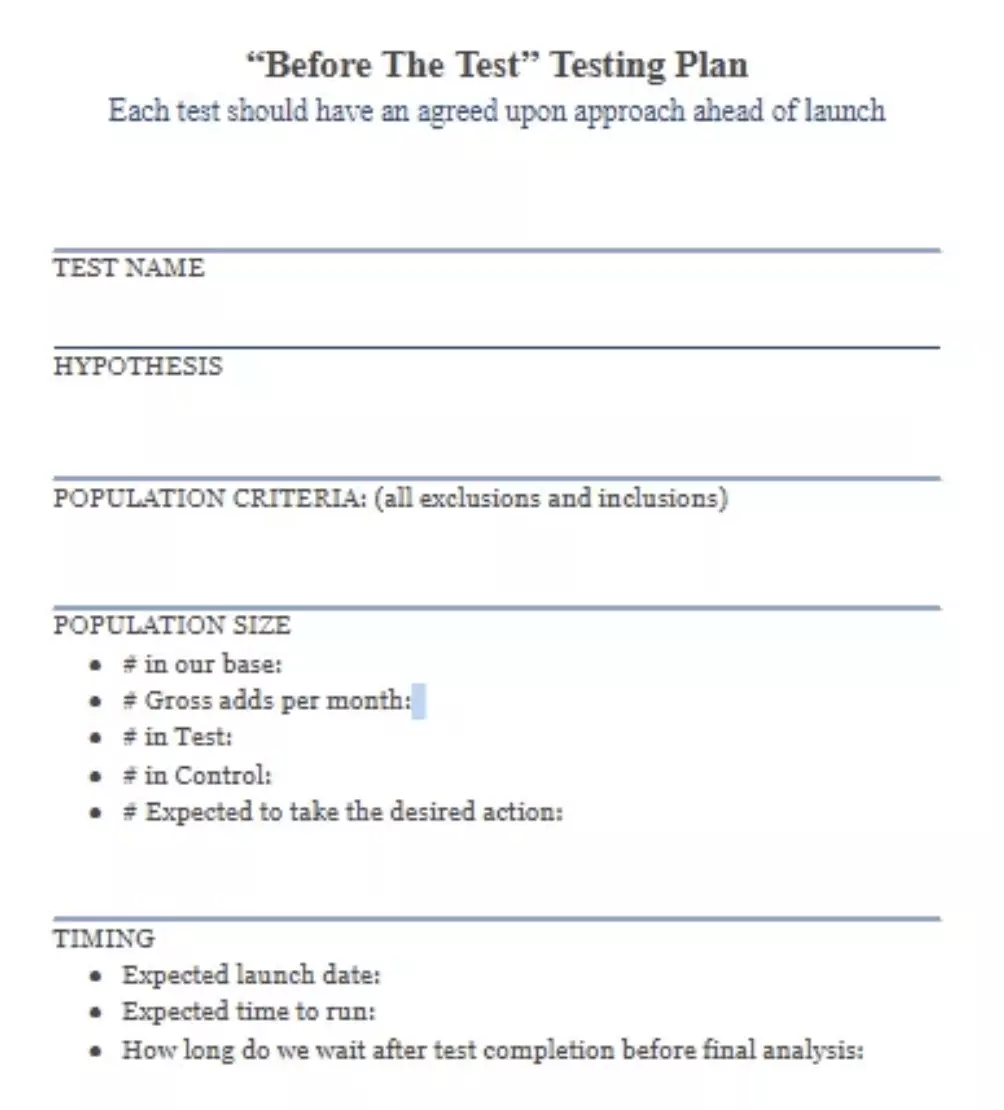

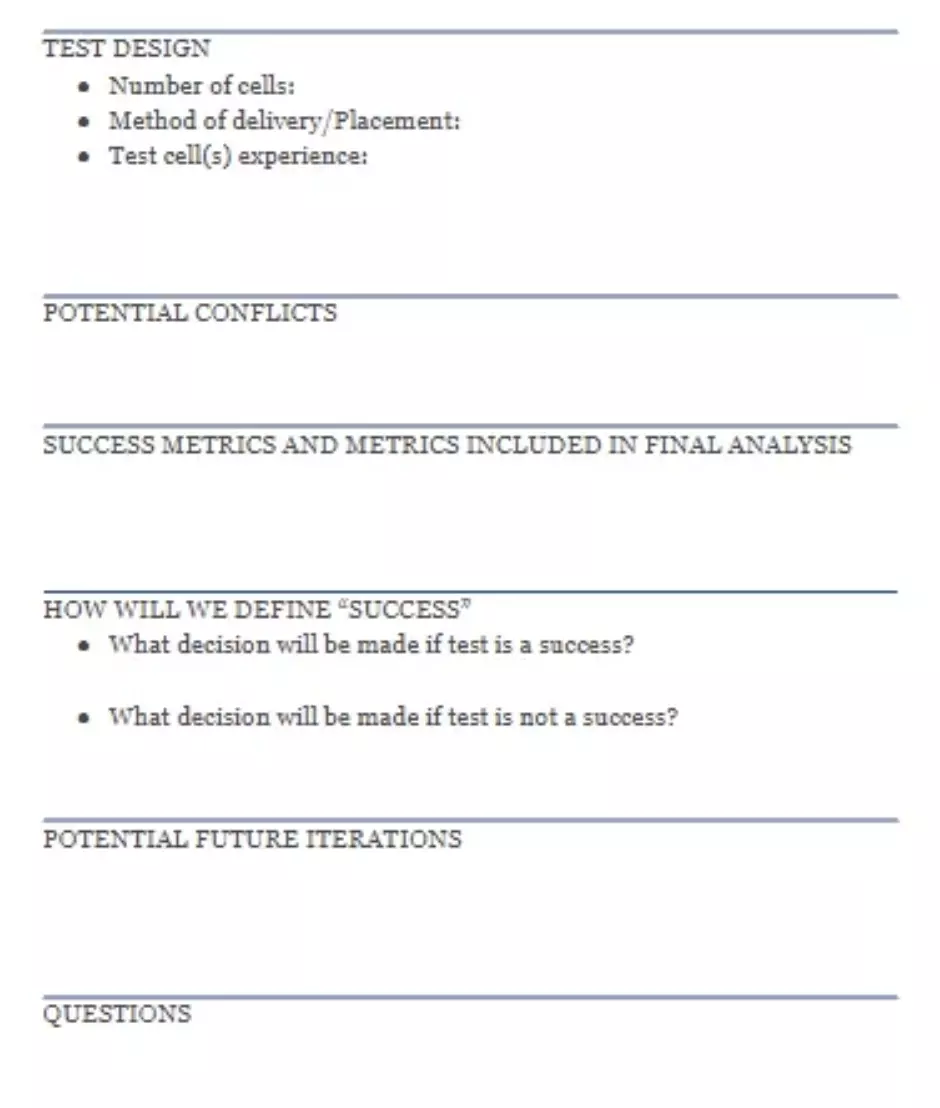

This is obviously a lot of information. Before we jump in, here is how I keep it all organized:

I recently created a google doc at work so that stakeholders and analytics could align on all the information to fully scope a test upfront. This also gives you (the analyst/data scientist) a bit of an insurance policy. It’s possible the business decides to go with a design or a sample size that wasn’t your recommendation. If things end up working out less than stellar (not enough data, design that is basically impossible to analyze), you have your original suggestions documented.

In a previous article I wrote:

“Sit down with marketing and other stakeholders before the launch of the A/B test to understand the business implications, what they’re hoping to learn, who they’re testing, and how they’re testing. In my experience, everyone is set up for success when you’re viewed as a thought partner in helping to construct the test design, and have agreed upon the scope of the analysis ahead of launch.”

Well, this is literally what I’m talking about:

This document was born of things that we often see in industry:

Hypothesis

I’ve seen scenarios that look like “we’re going to make this change, and then we’d like you to read out on the results”. So, your hypothesis is what? You’re going to make this change, and what do you expect to happen? Why are we doing this? A hypothesis clearly states the change that is being made, the impact you expect it to have, and why you think it will have that impact. It’s not an open-ended statement. You are testing a measurable response to a change. It’s ok to be a stickler, this is your foundation.

Test Design

The test design needs to be solid, so you’ll want to have an understanding of exactly what change is being made between test and control. If you’re approached by a stakeholder with a design that won’t allow you to accurately measure criteria, you’ll want to coach them on how they could design the test more effectively to read out on the results. I cover test design a bit in my article here.

Sample Size

You need to understand the sample size ahead of launch, and your expected effect size. If you run with a small sample and need an unreasonable effect size for it to be significant, it’s most likely not worth running. Time to rethink your sample and your design. Sarah Nooravi recently wrote a great article on determining sample size for a test.

- An example might be that you want to test the effect of offering a service credit to select customers. You have a certain budget worth of credits you’re allowed to give out. So you’re hoping you can have 1,500 in test and 1,500 in control (this is small). The test experience sees the service along with a coupon, and the control experience sees content advertising the service but does not see any mention of the credit. If the average purchase rate is 13.3% you would need a 2.6 point increase (15.9%) in the control to see significance at 0.95 confidence. This is a large effect size that we probably won’t achieve (unless the credit is AMAZING). It’s good to know these things upfront so that you can make changes (for instance, reduce the amount of the credit to allow for additional sample size, ask for extra budget, etc).

Potential Conflicts

It’s possible that 2 different groups in your organization could be running tests at the same time that conflict with each other, resulting in data that is junk for potentially both cases. (I actually used to run a “testing governance” meeting at my previous job to proactively identify these cases, this might be something you want to consider).

- An example of a conflict might be that the acquisition team is running an ad in Google advertising 500 business cards for $10. But if at the same time this test was running another team was running a pricing test on the business card product page that doesn’t respect the ad that is driving traffic, the acquisition team’s test is not getting the experience they thought they were! Customers will see a different price than what is advertised, and this has negative implications all around.

- It is so important in a large analytics organization to be collaborating across teams and have an understanding of the tests in flight and how they could impact your test.

Population Criteria

Obviously you want to target the correct people.

But often I’ve seen criteria so specific that the results of the test need to be caveated with “These results are not representative of our customer base, this effect is for people who [[lists criteria here]].” If your test targeted super performers, you know that it doesn’t apply to everyone in the base, but you want to make sure it is spelled out or doesn’t get miscommunicated to a more broad audience.

Test Duration

This is often directly related to sample size.

You’ll want to estimate how long you’ll need to run the test to achieve the required sample size. Maybe you’re randomly sampling from the base and already have sufficient population to choose from. But often we’re testing an experience for new customers, or we’re testing a change on the website and we need to wait for traffic to visit the site and view the change. If it’s going to take 6 months of running to get the required sample size, you probably want to rethink your population criteria or what you’re testing. And better to know that upfront.

Success Metrics

This is an important one to talk through. If you’ve been running tests previously, I’m sure you’ve had stakeholders ask you for the kitchen sink in terms of analysis.

If your hypothesis is that a message about a new feature on the website will drive people to go see that feature; it is reasonable to check how many people visited that page and whether or not people downloaded/used that feature. This would probably be too benign to cause cancellations, or effect upsell/cross-sell metrics, so make sure you’re clear about what the analysis will and will not include. And try not to make a mountain out of a molehill unless you’re testing something that is a dramatic change and has large implications for the business.

Decisions!

Getting agreement ahead of time on what decisions will be made based on the results of the test is imperative.

Have you ever been in a situation where the business tests something, it’s not significant, and then they roll it out anyways? Well then that really didn’t need to be a test, they could have just rolled it out. There are endless opportunities for tests that will guide the direction of the business, don’t get caught up in a test that isn’t actually a test.

Conclusion

Of course, each of these areas could have been explained in much more depth. But the main point is that there are a number of items that you want to have a discussion about before a test launches. Especially if you’re on the hook for doing the analysis, you want to have the complete picture and context so that you can analyze the test appropriately.

I hope this helps you to be more collaborative with your business partners and potentially be more “proactive” rather than “reactive”. No one has any fun when you run a test and then later find out it should have been scoped differently. Adding a little extra work and clarification upfront can save you some heartache later on. Consider creating a document like the one I have pictured above for scoping your future tests, and you’ll have a full understanding of the goals and implications of your next test ahead of launch.

A version of this article first appeared here.

Leave your comments

Post comment as a guest