Comments (2)

David Poole

Enlightening read

Michelle Sanders

Good post, that was highly interesting.

There’s a little secret that ontologists know that should make insurance companies, and that makes quite a few other people sweat more than a little bit. That secret is simple — insurance is not really all that complicated if you get all the quasi-standards out of the way.

Insurance companies, in general, have been fighting standardization in the industry for a while now, but they have also been losing. Classifications have existed for various medical procedures for quite some time — ICD-9-CM or ICD-10 for diagnostics, ICMP or ICHI for medical procedures, ATC or NDC for pharmaceutical designations, IDC-O or SNO-MED for anatomical information, with new ones coming along all the time. Most of these tend to be very precise, and the codes themselves are usually presented either as linear lists (because these are easy to represent in traditional databases) or as hierarchical trees. Many of these are somewhat ad-hoc — they are managed by industry consortia — and more than a few of these are also licensed at a hefty enough rate to put them outside the availability of ordinary programmers.

Insurance works largely by playing these codes and their definitions because fee schedules are invariably worked out on the basis of which code is used. If ICMP, for instance, has two or more codes for thoracic surgery, insurance companies will generally opt for the code which generates the most money for them, even if the intent of the surgery falls into a different code. This reduction approach drives up costs, and makes it difficult to compare different insurance companies … which of course is seen as a benefit by those companies.

However, smart data (semantics, if you want to get into the scary lexical term) is beginning to change that. Most codes systems represent taxonomies of information, which in turn tends to stress certain types of relationships preferentially to others. Moreover, while codes may be common, how those codes are stored are still all too often stored in different fields in different data systems, which means that unless two organizations happen to have the same storage formats and agree to a common interlingua for communication, most health care records and artifacts that are “exchangeable” are typically PDFs or images.

Smart data differs from relational data in that it is generally much easier to encode complex structural content, and is easier, given that content, to use patterns in the source data to determine content that’s encoded in different ways, dynamically. This process is called inferencing, and is one form of AI. As a simple example, let’s say that a report includes a field called BP given as 159 over 82. Now, a medical professional who saw this would make a number of inferences — BP is probably blood pressure, and by convention the first (159) represents the systolic pressure, the second (82) the diastolic pressure.

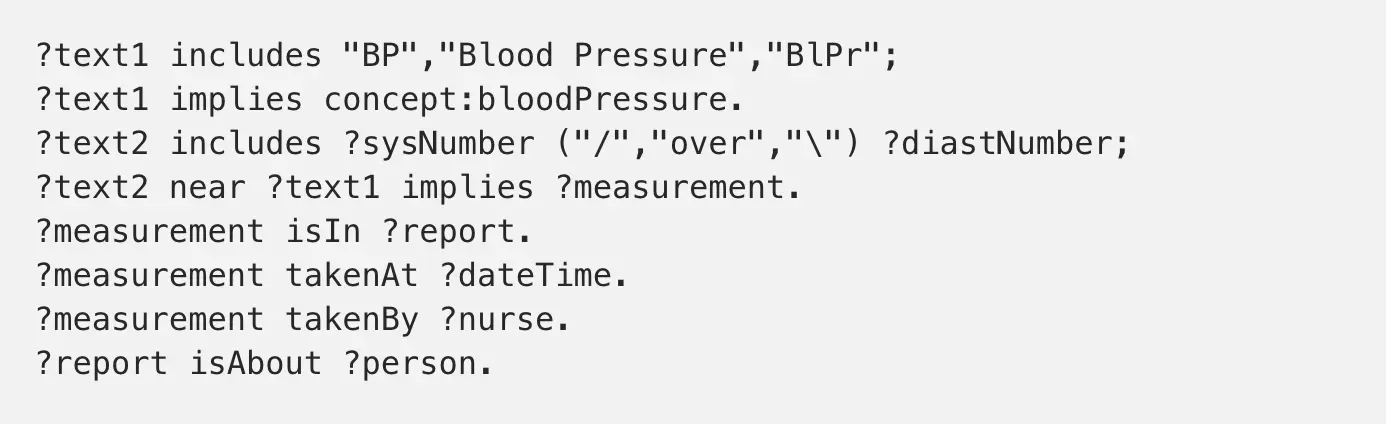

Now, in a relational system, someone has to read that report, and make all of these inferences then encode this information in a special box that represents the two values of the blood pressure. In a smart data system, however, much of this can be inferred based upon contextual information using more or less the following inferences:

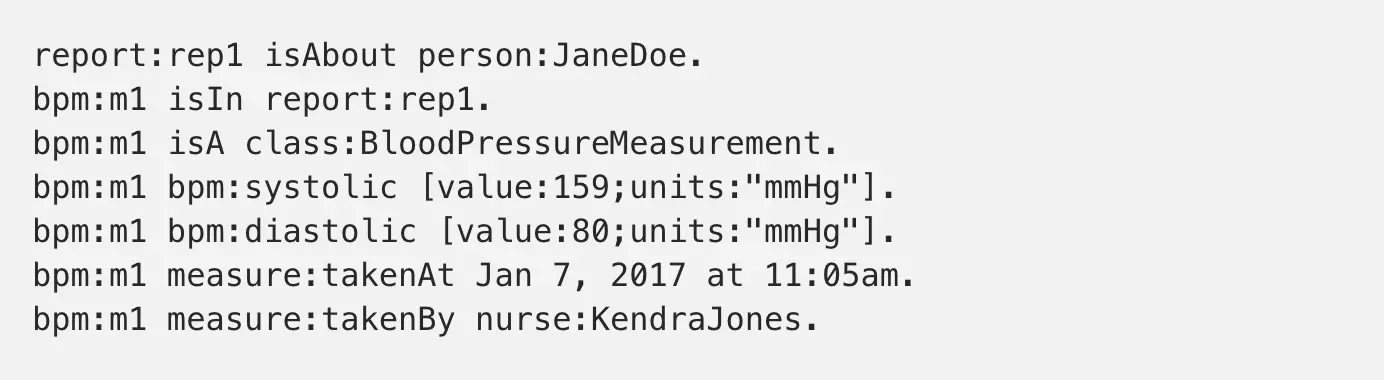

This process can consequently determine assertions:

A similar analysis could be done with different representations. Some may contain all of this information, some may be only be partial, but the idea is that you should be able over time to get a BP history for an individual that can then be correlated over different forms over time — and that only needs the intervention of someone to write the initial inference rules once.

What makes this powerful is that such systems begin to break down the requirements of separate encodings based upon different hospitals or doctors having their own forms or formats. You may have several different ways of representing blood pressure, but the conceptual information is going to be the same regardless of which representation is used, which is generally not true even when dealing with various services representations.

Such inferences can also be much more diagnostic in nature as well, and this is where smart data moves beyond saving keystrokes and into saving lives. An electrocardiogram converts the electrical discharges in the heart into an analog intensity signal, which can in turn be digitized. This digitized data can be saved, but it is possible to also capture inflection points and timing, compressing this and representing this data in the same smart data fashion. By doing this, it becomes possible using inferencing to detective patterns and to determine whether they are from known artifacts (arhythmia due to heart attack damage) or represent potential new problems). This can be a signal to a doctor for followup interviews, and can also serve with other types of inferences to say that when certain diagnostics show up together, they are often indicative of specific conditions, and respond with certain treatments.

Yet this also has implications for insurance companies, both immediate and longer term. The body is a system — high blood pressure may be associated with diabetes, which in turn may result in liver damage, higher risk of strokes and heart attacks, the potential for poor circulation and the development of necrotic gangrene in the extremities, eyesight degradation and other symptoms. By providing a holistic, time-oriented view of information from several sources in a way that can be universally shared, insurance companies can also ascertain future treatment costs.

Currently, this is done only very minimally. Indeed, the problem is that most people do not visit a doctor for a checkup more than maybe a couple of times a year, which means that the frequency of gathering data points about people is minimal and is largely descriptive. As cell phones, fitbits and other personal computational devices become more common, however, the potential of gathering diagnostic information jumps dramatically — though it also means that that volume of such data also jumps dramatically. And this is where companies such as Google or Amazon may very well displace insurance companies and even much of the more mundane care that doctors provide.

These companies are already beginning to establish electronic health records of their own, records that can be shared between different hospitals and care providers — regardless of whether those care providers are part of a given network or not. This means that they are establishing service APIs and formats for the transmission of common metrics, and can send information either about specific individuals or, scrubbed, about large numbers of people. With inferential tools, they can establish baselines, and from there can start offering much the same insurance services (whether in or out of network) that insurance providers do now.

Moreover, given the likely changes in the regulatory space with the Trump administration, it is very likely that Google or Amazon will start partnering with states directly to create single payer networks that would bypass the insurance companies altogether. They would be able to do this, ironically, because they have no incentive to keep record formats proprietary. They would benefit then primarily by being paid directly by the states rather than by employers or the individuals themselves through premiums, which would instead be subsumed under marginally higher taxes. I fully expect that California will lead the way there, with New York, Washington State, Massachusetts and Colorado probably close behind them.

Whether this will happen will largely be a matter of politics (ironically, by pushing so hard to see ObamaCare fail, the insurance companies may very well be hurting their own futures as state alternatives become commonplace). However, it is important to understand that the technology that makes this possible is here now — not some distant future — and that the appearance of smart data hubs in the health care and insurance fields is pretty much inevitable.

Enlightening read

Good post, that was highly interesting.

Kurt is the founder and CEO of Semantical, LLC, a consulting company focusing on enterprise data hubs, metadata management, semantics, and NoSQL systems. He has developed large scale information and data governance strategies for Fortune 500 companies in the health care/insurance sector, media and entertainment, publishing, financial services and logistics arenas, as well as for government agencies in the defense and insurance sector (including the Affordable Care Act). Kurt holds a Bachelor of Science in Physics from the University of Illinois at Urbana–Champaign.

Leave your comments

Post comment as a guest