
Today’s artificial intelligence (AI) is limited. It still has a long way to go.

Some AI researchers have come to argue that machine learning algorithms, in which computers learn through trial and error, have become a form of "alchemy."

AI researchers allege that machine learning is alchemy

In fact, it is largely about statistical inductive-inference machines that rely on big-data computing, algorithmic innovations, statistical learning theory, and connectionist philosophy.

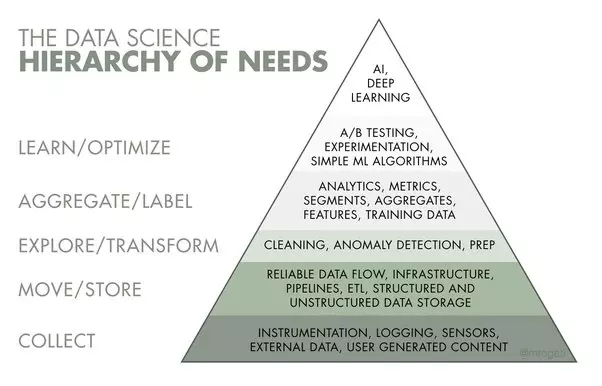

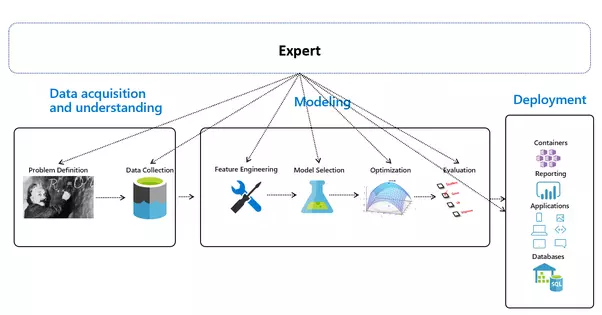

For most people, it merely means building a machine learning (ML) model along a well-trodden path: data collection, curation, exploration, feature engineering, model training, evaluation, and finally deployment.
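The stages in that journey can be sketched end to end in a few dozen lines. This is a minimal sketch on synthetic data, assuming a one-feature linear model; every function name (`collect`, `curate`, `explore`, `train`, `evaluate`, `deploy`, `run_pipeline`) is hypothetical, feature engineering is skipped because the raw `x` is used as the only feature, and each function stands in for a far heavier real-world step.

```python
import random
import statistics

def collect(n=200, seed=0):
    """Data collection: synthetic (x, y) pairs where y is roughly 3x + 2."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = rng.uniform(0, 10)
        rows.append((x, 3.0 * x + 2.0 + rng.gauss(0, 0.5)))
    rows.append((5.0, None))  # one deliberately broken record
    return rows

def curate(rows):
    """Curation: drop records whose target is missing."""
    return [(x, y) for x, y in rows if y is not None]

def explore(rows):
    """Exploration: summary statistics a person would eyeball first."""
    return {"n": len(rows),
            "x_mean": statistics.mean([x for x, _ in rows]),
            "y_mean": statistics.mean([y for _, y in rows])}

def train(rows):
    """Training: closed-form least squares for y = a * x + b."""
    mx = statistics.mean([x for x, _ in rows])
    my = statistics.mean([y for _, y in rows])
    a = (sum((x - mx) * (y - my) for x, y in rows)
         / sum((x - mx) ** 2 for x, _ in rows))
    return a, my - a * mx

def evaluate(model, rows):
    """Evaluation: mean squared error on held-out rows."""
    a, b = model
    return statistics.mean([(a * x + b - y) ** 2 for x, y in rows])

def deploy(model):
    """Deployment: here, just a callable that serves predictions."""
    a, b = model
    return lambda x: a * x + b

def run_pipeline():
    rows = curate(collect())
    summary = explore(rows)
    split = int(0.75 * len(rows))  # 150 rows to train, 50 to test
    model = train(rows[:split])
    mse = evaluate(model, rows[split:])
    return model, mse, summary, deploy(model)
```

Calling `run_pipeline()` returns the fitted slope and intercept, the held-out error, the exploration summary, and a prediction function. Nothing in it knows what `x` or `y` mean in the world.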

EDA: Exploratory Data Analysis

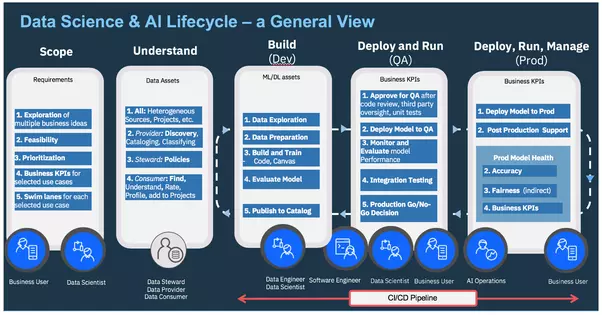

AI Ops — Managing the End-to-End Lifecycle of AI

Today's AI alchemy comes from machine learning, whose algorithms need to be configured and tuned for every different real-world scenario. This makes it manually intensive and demands a huge amount of time from the humans supervising its development. The manual process is also error-prone, inefficient, and difficult to manage, not to mention the scarcity of experts able to configure and tune the different types of algorithms.

Configuration, tuning, and model selection are increasingly automated, and the big-tech companies, such as Google, Microsoft, Amazon, and IBM, are all coming out with similar AutoML platforms that automate the machine learning model-building process.

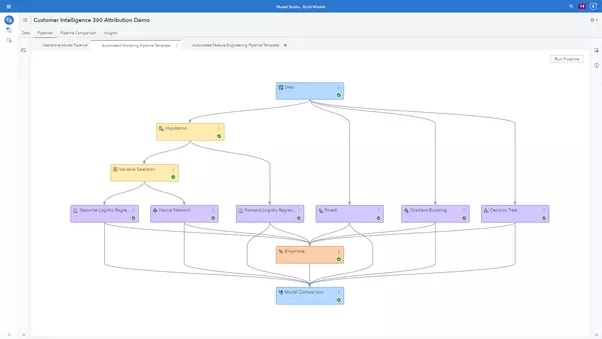

AutoML involves automating the tasks that are required for building a predictive model based on machine learning algorithms. These tasks include data cleansing and preprocessing, feature engineering, feature selection, model selection, and hyperparameter tuning, which can be tedious to perform manually.
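To make one of these tasks concrete, here is a minimal sketch of automated hyperparameter tuning only: a grid search that picks the `k` of a k-nearest-neighbours classifier by holdout validation accuracy. The data is synthetic, and `make_data`, `knn_predict`, `accuracy`, and `auto_tune` are hypothetical names invented for this illustration; commercial AutoML platforms search far larger spaces with more elaborate strategies.

```python
import random

def make_data(n=300, seed=1):
    """Two noisy 2-D clusters labelled 0 and 1 (synthetic, for illustration)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)
        centre = 2.0 * label  # class 0 around (0, 0), class 1 around (2, 2)
        data.append(((rng.gauss(centre, 1.0), rng.gauss(centre, 1.0)), label))
    return data

def knn_predict(train, point, k):
    """Majority vote among the k nearest training points."""
    nearest = sorted(
        train,
        key=lambda row: (row[0][0] - point[0]) ** 2 + (row[0][1] - point[1]) ** 2,
    )[:k]
    votes = sum(label for _, label in nearest)
    return 1 if 2 * votes > k else 0

def accuracy(train, valid, k):
    """Fraction of validation points classified correctly."""
    hits = sum(knn_predict(train, point, k) == label for point, label in valid)
    return hits / len(valid)

def auto_tune(data, grid=(1, 3, 5, 9, 15)):
    """Try every k in the grid and keep the best validation score."""
    split = int(0.7 * len(data))
    train, valid = data[:split], data[split:]
    scores = {k: accuracy(train, valid, k) for k in grid}
    best_k = max(scores, key=scores.get)
    return best_k, scores
```

`auto_tune(make_data())` returns the winning `k` together with the score of every candidate. The "automation" is an exhaustive loop over a list a human wrote down.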

The end-to-end ML pipeline as presented consists of three key stages, while missing the source of all data, the world itself:

Automated Machine Learning — An Overview

The key secret of the big-tech AI alchemists is skin-deep machine learning dressed up as dark deep neural networks, whose models demand training on large sets of labeled data and network architectures with as many layers as possible.

Each task needs its special network architecture:

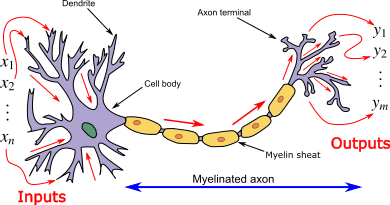

Artificial neural networks (ANNs) were introduced as an information-processing paradigm said to be inspired by the way biological nervous systems, such as the brain, process information. Such ANNs are presented as “universal function approximators”: given enough hidden units, they can approximate any continuous function on a bounded domain to arbitrary accuracy.
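The approximation claim can be illustrated in one dimension. The sketch below builds a one-hidden-layer ReLU network whose weights are set by hand, not learned, so that it interpolates a target function at evenly spaced knots; the names `build_relu_net` and `max_error` are hypothetical. It shows only that more hidden units give a closer fit, and says nothing about whether training would find such weights.

```python
import math

def relu(z):
    return max(0.0, z)

def build_relu_net(f, lo, hi, hidden):
    """One-hidden-layer ReLU net that interpolates f at hidden + 1 knots."""
    step = (hi - lo) / hidden
    knots = [lo + i * step for i in range(hidden + 1)]
    slopes = [(f(knots[i + 1]) - f(knots[i])) / step for i in range(hidden)]
    # Output weight of unit i is the change in slope at knot i.
    weights = [slopes[0]] + [slopes[i] - slopes[i - 1] for i in range(1, hidden)]
    bias = f(lo)

    def net(x):
        # zip stops after `hidden` units, so the last knot carries no unit.
        return bias + sum(w * relu(x - k) for w, k in zip(weights, knots))

    return net

def max_error(net, f, lo, hi, samples=1000):
    """Largest absolute gap between net and f on an even grid."""
    xs = [lo + (hi - lo) * i / samples for i in range(samples + 1)]
    return max(abs(net(x) - f(x)) for x in xs)

coarse = build_relu_net(math.sin, 0.0, math.pi, hidden=4)
fine = build_relu_net(math.sin, 0.0, math.pi, hidden=32)
```

Comparing `max_error(coarse, math.sin, 0.0, math.pi)` with `max_error(fine, math.sin, 0.0, math.pi)` shows the error shrinking as hidden units are added. The network fits the curve; it has no notion of what a sine is.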

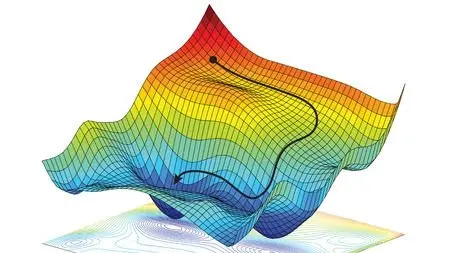

Neural networks learn through a specific mechanism: backpropagation, which corrects the weights according to the output error during the training phase.
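A minimal sketch of that mechanism, assuming a tiny network with two inputs, three sigmoid hidden units, and one sigmoid output, trained on XOR with mean squared error and plain gradient descent. All names (`init_params`, `forward`, `loss`, `gradients`, `train`) and all settings (seed, learning rate, epoch count) are choices made for this illustration.

```python
import math
import random

XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
       ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_params(hidden=3, seed=0):
    rng = random.Random(seed)
    return {
        "w1": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)],
        "b1": [0.0] * hidden,
        "w2": [rng.uniform(-1, 1) for _ in range(hidden)],
        "b2": 0.0,
    }

def forward(p, x):
    """Return hidden activations and the network output for input x."""
    h = [sigmoid(w[0] * x[0] + w[1] * x[1] + b)
         for w, b in zip(p["w1"], p["b1"])]
    y = sigmoid(sum(w * hj for w, hj in zip(p["w2"], h)) + p["b2"])
    return h, y

def loss(p, data):
    """Mean squared error over the data set."""
    return sum((forward(p, x)[1] - t) ** 2 for x, t in data) / len(data)

def gradients(p, data):
    """Backpropagation: push the output error back through each layer."""
    hidden = len(p["w2"])
    g = {"w1": [[0.0, 0.0] for _ in range(hidden)], "b1": [0.0] * hidden,
         "w2": [0.0] * hidden, "b2": 0.0}
    for x, t in data:
        h, y = forward(p, x)
        # Chain rule through the squared error and the output sigmoid.
        delta_out = 2.0 * (y - t) * y * (1.0 - y) / len(data)
        g["b2"] += delta_out
        for j in range(hidden):
            g["w2"][j] += delta_out * h[j]
            # Error signal passed back to hidden unit j.
            delta_h = delta_out * p["w2"][j] * h[j] * (1.0 - h[j])
            g["b1"][j] += delta_h
            g["w1"][j][0] += delta_h * x[0]
            g["w1"][j][1] += delta_h * x[1]
    return g

def train(p, data, lr=0.5, epochs=2000):
    """Gradient descent: step every weight against its gradient."""
    for _ in range(epochs):
        g = gradients(p, data)
        p["b2"] -= lr * g["b2"]
        for j in range(len(p["w2"])):
            p["w2"][j] -= lr * g["w2"][j]
            p["b1"][j] -= lr * g["b1"][j]
            p["w1"][j][0] -= lr * g["w1"][j][0]
            p["w1"][j][1] -= lr * g["w1"][j][1]
    return p
```

Running `train(init_params(), XOR)` and comparing `loss` before and after shows the error going down. That number is the only thing the procedure ever optimizes.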

Just imagine that by minimizing the error, these multi-layered systems are expected one day to learn and conceptualize ideas by themselves.

Introduction to Artificial Neural Networks (ANN)

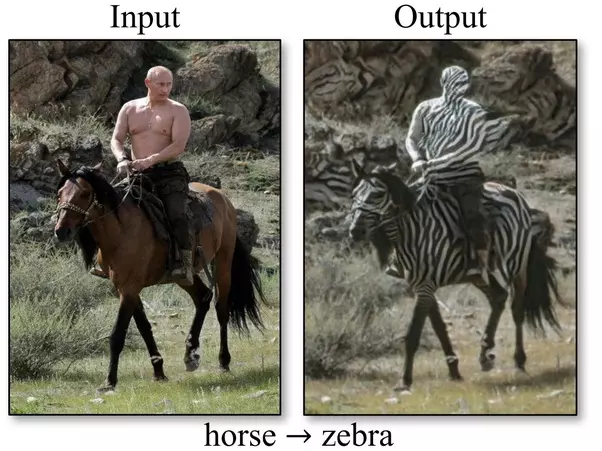

In all, a few lines of R or Python code will suffice for a piece of machine intelligence, and there’s a plethora of online resources and tutorials for training your quasi-neural networks. These include all sorts of deepfake networks that manipulate images, video, audio, and text with zero knowledge of the world: Generative Adversarial Networks such as BigGAN, CycleGAN, StyleGAN, and GauGAN, and tools such as Artbreeder and DeOldify.

They create and modify faces, landscapes, and images of all kinds with zero understanding of what any of it is about.

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

14 Deep and Machine Learning Uses That Made 2019 a New AI Age.

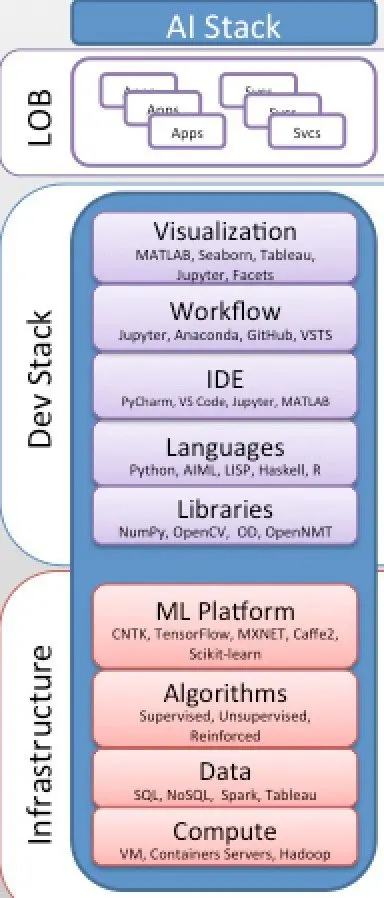

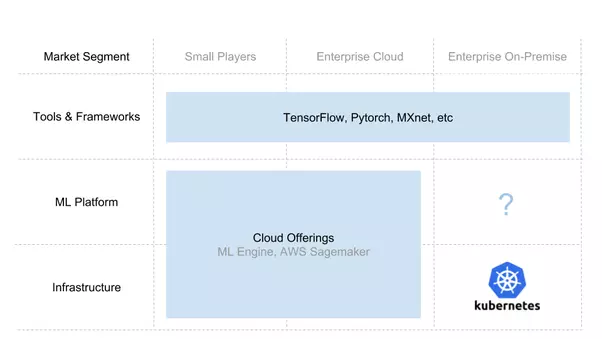

There is a myriad of digital alchemy tools and frameworks, each going its own way:

As a sad result, a data scientist’s working environment (scikit-learn, R, SparkML, Jupyter, Python, XGBoost, Hadoop, Spark, TensorFlow, Keras, PyTorch, Docker, Plumbr, and the list goes on and on) provides the whole "Fake AI Stack" for your alchemy of AI.

Modern AI Stack & AI as a Service Consumption Models

What is passed off as AI is, in fact, a false and counterfeit AI. At the very best, it is a set of automatic learning technologies, ML/DL/NN pattern recognizers, which are essentially mathematical and statistical in nature and unable to act intuitively or model their environment, with zero intelligence, nil learning, and NO understanding.

That a totally fake AI should somehow be rectified is becoming plain even to the Turing Award fakers, Hinton, LeCun and Bengio. All three Turing Award winners agree on how critical Deep Learning’s problems are.

Yann LeCun presented the top 3 challenges to Deep Learning:

- Learning with fewer labeled samples
- Learning to reason
- Learning to plan complex action sequences

Geoff Hinton, “It’s about the problems with CNNs and why they’re rubbish”:

- CNNs are not good at dealing with rotation or scaling
- CNNs do not understand images in terms of objects and their parts
- CNNs are brittle against adversarial examples
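The first of these points can be illustrated with a toy example. The sketch below applies one fixed vertical-edge filter, the basic operation of a convolutional layer, to a small image and to the same image rotated by 90 degrees. The image, the filter, and the helper names (`convolve2d`, `rotate90`, `peak_response`) are all made up for this illustration; real CNNs learn many filters and are often trained with rotated copies of the data, so this shows only why a single filter is not rotation invariant.

```python
def convolve2d(image, kernel):
    """Valid-mode 2-D cross-correlation, the operation used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(len(image) - kh + 1):
        row = []
        for c in range(len(image[0]) - kw + 1):
            row.append(sum(image[r + i][c + j] * kernel[i][j]
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out

def rotate90(image):
    """Rotate a 2-D list 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]

# Filter that responds to vertical edges (dark on the left, bright on the right).
VERTICAL_EDGE = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

# 5x5 image containing one vertical edge.
IMAGE = [[0, 0, 1, 1, 1] for _ in range(5)]

def peak_response(image):
    """Strongest absolute filter response anywhere in the image."""
    return max(abs(v) for row in convolve2d(image, VERTICAL_EDGE) for v in row)

print(peak_response(IMAGE))            # 3: the edge is detected
print(peak_response(rotate90(IMAGE)))  # 0: same edge, rotated, goes unseen
```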

Yoshua Bengio, “Neural Networks need to develop consciousness”:

- Should generalize faster from fewer examples
- Learn better models from the world, like common sense
- Get better at “System 2” thinking (slower, methodical thinking as opposed to fast recognition)

The world faces an unprecedented case of totally fake artificial intelligence (FAI) led by the US G-MAFIA + China's BAT-Triada.

These kinds of fakes are typically punished under fraud and trademark laws.

Penalties are increased where the crime resulted in financial gain or in another party suffering a financial loss (Counterfeiting, FindLaw).

Google (Alphabet) has joined the trillion-dollar market-cap club, a group that has included Apple, Amazon and Microsoft. The biggest tech companies, the four above plus Facebook, are collectively worth $5 trillion.

Meanwhile, 45 million Americans collectively owe $1.5 trillion in student loans (6.9% of GDP) and are struggling to pay it back, dreaming of a $100,000–500,000 fake AI/ML/DL/NN placement at any G-MAFIA or BAT-Triada company.
