
The AI industry is experiencing a revolution, but not necessarily in the way people expect.

While attention has been focused on advanced language-generating systems and chatbots, private AI companies have quietly entrenched their power. A recent study by researchers from the Massachusetts Institute of Technology found that a handful of individuals and corporations control a large share of the resources and knowledge in the AI sector, a phenomenon referred to as “industrial capture”. The study calls on policymakers to pay closer attention to this trend, as it could ultimately shape the impact of AI on our collective future.

Source: Deloitte & Wall Street Journal

Quality data is increasingly crucial as generative AI, the technology underlying the likes of ChatGPT, is embedded into software used by billions of people, such as Microsoft Office, Google Docs and Gmail. Its introduction is also upending organisations ranging from law firms and the media to educational institutions.

The MIT study found that almost 70 per cent of AI PhDs went to work for companies in 2020, compared with 21 per cent in 2004. Similarly, the number of faculty members hired into AI companies has increased eightfold since 2006, far faster than the overall growth in computer science research faculty. As a result, many academic researchers feel they cannot compete with industry, because they lack the computing power and engineering talent that companies can command.

In particular, academics were unable to build large language models such as GPT-4. These models are a type of AI software that generates plausible, detailed text by predicting the next word in a sentence with high accuracy. Building them requires enormous amounts of data and computing power, which, for the most part, only large technology companies such as Google, Microsoft and Amazon can access.

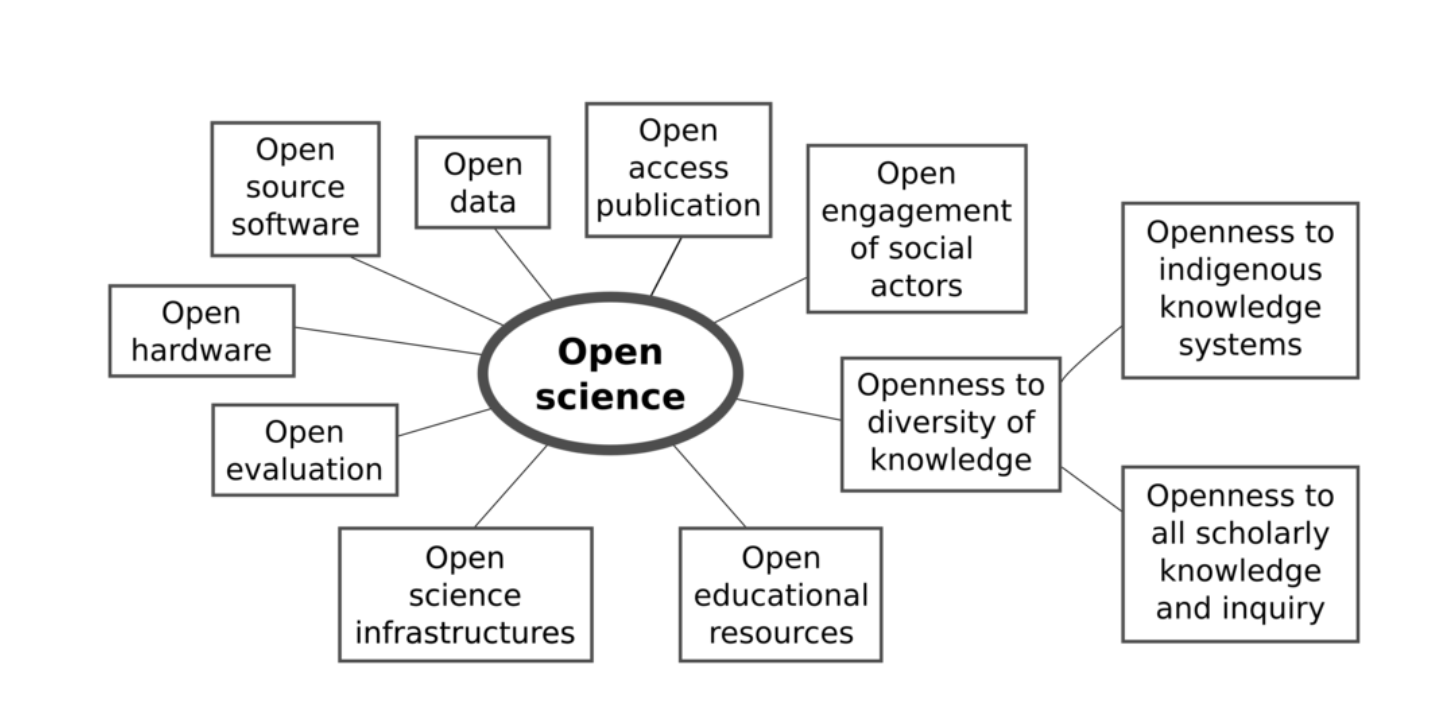

Without access to large language models, researchers cannot replicate the models built in corporate labs, which makes it difficult to probe or audit them for potential harms and biases. This poses a significant risk, because models built in corporate labs do not necessarily prioritise the public interest.

The consequences of this shift are manifold. Public alternatives to corporate AI technology, such as models and data sets, are becoming increasingly scarce, and new applications are likely to be commercially driven rather than designed to serve the broader public interest.

Even if big tech companies have good intentions, some autocratic stakeholders do not want the inner workings of large language models to be revealed.

Researchers and policy experts agree that policymakers cannot afford to turn a blind eye to this issue. Some experts suggest that governments should set up academia-only data centres to allow researchers to run experiments, while others believe this would further concentrate power among those who own infrastructure such as cloud services. Nevertheless, all agree that broadening access to the resources needed for AI research is crucial to prevent industrial capture from becoming a major threat to academic freedom and accountability.

The availability of data that the research community can easily access and analyse is critical for the development of effective AI. According to a study conducted by the McKinsey Global Institute, countries that promote open data sources and data sharing are more likely to make significant progress in AI research.

One worryingly consistent trend is how little organisations have done to mitigate risk and boost digital trust: while the use of AI has increased, there has been no significant increase in the reported mitigation of any AI-related risks.

Policymakers must ensure that access to AI research and development is not restricted to a small group of tech companies but is open to anyone with the skills and expertise to contribute. This will enable us to create a future in which the benefits of AI are shared widely and equitably, and in which the technology is used to advance the public interest rather than serve the interests of a privileged few.
