“In order to make AI more ethically sound and practical, it’s crucial to enhance explainability in deep neural networks,” says Yoshihide Sawada, principal investigator at Aisin.

The lack of transparency in how AI works can be a headache for organizations that incorporate the technology in their daily operations. So, what can they do to put their concerns surrounding explainable AI requirements to rest?

AI’s far-reaching advantages in any industry are pretty well-known by now. We are aware of how the technology helps thousands of companies around the world by speeding up their operations and allowing them to use their personnel more imaginatively. Additionally, the long-term cost and data security benefits of AI incorporation have been documented many times by tech columnists and bloggers. AI does have its fair share of problems, though. One such problem is the technology’s sometimes-questionable decision-making. The bigger issue, though, is the lack of explainability whenever an AI-powered system makes embarrassing or catastrophic errors.

Human beings make mistakes on a daily basis. In most cases, though, we can work out how a mistake was made, and there is a clear sequence of corrective actions that can be taken to avoid making a similar one in the future. However, some of AI's errors are not explainable because data experts do not know how an algorithm came to a specific conclusion during an operation. Therefore, explainable AI should be a top priority for organizations planning to implement the technology into their daily work as well as the ones that have incorporated it already.

One of the common fallacies regarding AI is that it is completely immune to making errors. Neural networks, especially in their early stages, can be fallible. At the same time, these networks execute their commands in a non-transparent manner. As mentioned earlier, the route taken by an AI model to arrive at a particular conclusion is not made clear at any point during an operation. Therefore, interpreting such an error becomes nearly impossible even for accomplished data experts.

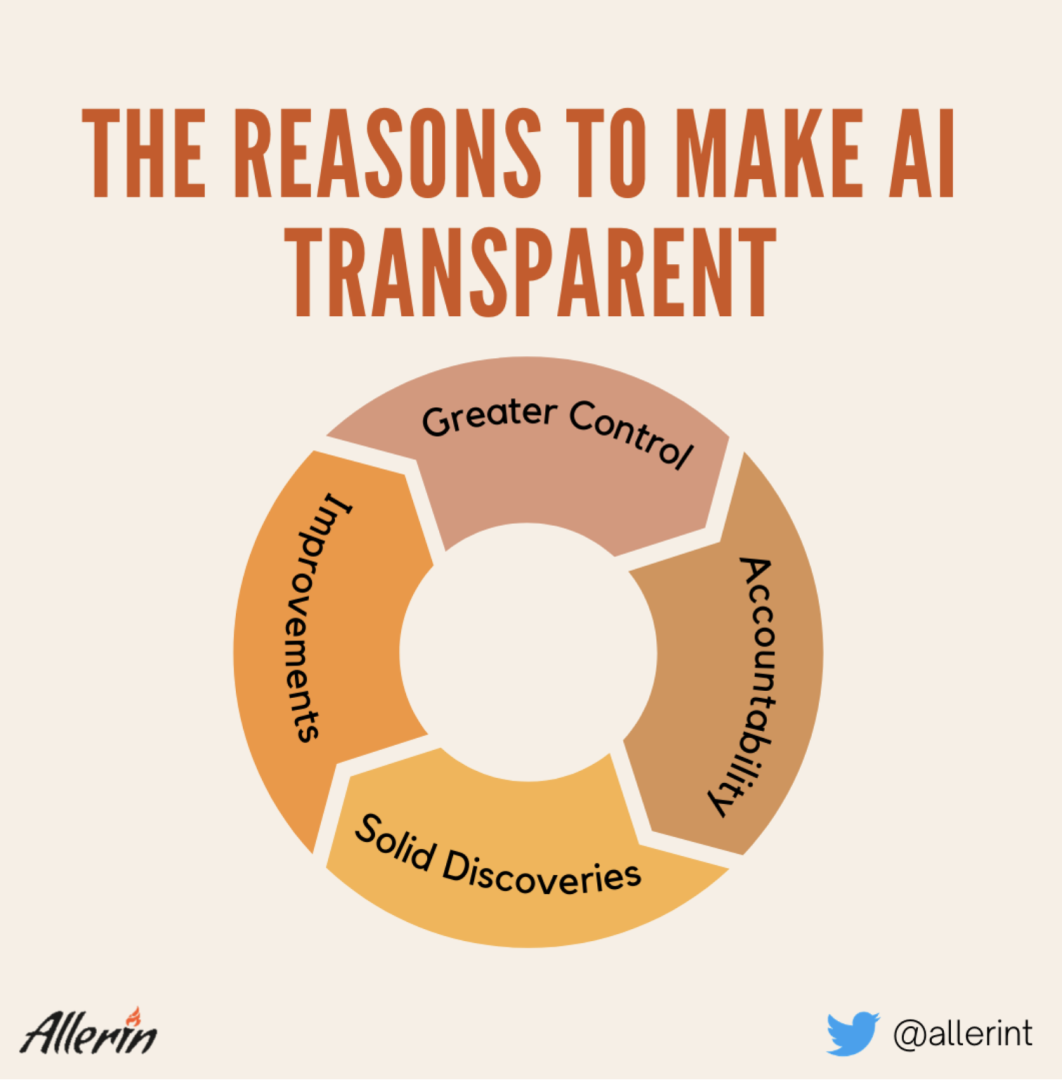

AI's transparency problem stands out in the healthcare industry. Consider this example: A hospital has a neural network or a black box AI model in place to diagnose a brain ailment in a patient. The intelligent system is trained to find data patterns from past records and the patient's existing medical papers. If, using predictive analysis, the model forecasts that the subject is vulnerable to brain-related diseases in the future, the reasons behind the prediction are often not fully clear. For private and public organizations, here are the four main reasons to make AI's working more transparent:

As specified earlier, stakeholders need to know the inner workings and reasoning logic behind an AI model's decision-making process, especially for unexpected recommendations and decisions. An explainable AI system helps ensure that algorithms make fair and ethical recommendations and decisions in the future. This can boost compliance and trust in AI’s neural networks within organizations.

Explainable AI helps prevent system errors from taking place in work operations. The increased knowledge regarding an AI model's existing weaknesses can be used to eliminate them. As a result, organizations have greater control over the outputs provided by AI systems.

As we know, AI models and systems need to undergo continuous improvement. Because an explainable system shows how it arrived at a result, its algorithms can keep getting better over the course of regular system updates.

New threads of information will enable humankind to discover solutions for big problems of the current age (such as medicines or treatments to cure HIV/AIDS, or methods to handle attention deficit disorders). More importantly, these discoveries will be backed by conclusive evidence and justifications for universal verification.

Transparency within an AI-powered system can be displayed in the form of analytic statements in a natural language that humans can comprehend, visualizations that highlight the data used to formulate an output decision, cases that display points supporting a given decision or statements that highlight why alternative decisions were rejected by the system.
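One of the forms described above, a plain-language statement that highlights the data behind a decision, can be sketched with a simple perturbation approach: replace one input at a time with a reference value and see how much the output moves. The sketch below is only an illustration under stated assumptions. The `risk_model` function, its coefficients, the feature names and the baseline values are all hypothetical stand-ins for a real black box model, and real explainability tools use more careful methods.

```python
def occlusion_attributions(model, features, baseline):
    """Score each feature by how much the model output changes
    when that one feature is replaced by its baseline value."""
    full_output = model(features)
    scores = {}
    for name in features:
        perturbed = dict(features)
        perturbed[name] = baseline[name]
        scores[name] = full_output - model(perturbed)
    return scores


def explain(scores, top_k=2):
    """Turn the largest attributions into a short natural-language statement."""
    ranked = sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_k]
    parts = [
        f"{name} ({'raised' if score > 0 else 'lowered'} the score by {abs(score):.2f})"
        for name, score in ranked
    ]
    return "Main factors: " + "; ".join(parts)


# Hypothetical stand-in for a black box model (assumption, not a real clinical model).
def risk_model(f):
    return 0.03 * f["age"] + 0.5 * f["family_history"] - 0.02 * f["exercise_hours"]


# Hypothetical patient record and reference ("baseline") record.
patient = {"age": 60, "family_history": 1, "exercise_hours": 5}
baseline = {"age": 40, "family_history": 0, "exercise_hours": 5}

scores = occlusion_attributions(risk_model, patient, baseline)
print(explain(scores))
```

The explanation only needs to call the model, not look inside it, which is why this style of check can be applied to a system whose internals are not available.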

In recent years, the field of explainable AI has developed and expanded. Crucially, if this trend continues in the future, then organizations will be able to use explainable AI to boost their output while knowing the rationale behind every key AI-backed decision.

While these are the reasons why AI needs to be more transparent, there are several barriers that prevent this from happening. Some of these roadblocks include:

As we know, explainable AI can improve fairness, trust and legal compliance in artificial intelligence systems. However, several organizations may be less enthusiastic about increasing the accountability of their intelligent systems because explainable AI may come with its own set of issues. Some of these issues are:

- The theft of vital details that outline an AI model’s working.
- The threat of cyber-attacks by external entities due to the increased knowledge of a system’s vulnerabilities.

On top of these, many people believe that uncovering and disclosing confidential decision-making data in an AI system can leave organizations susceptible to lawsuits or regulatory action.

To emerge unscathed from this ‘transparency paradox,’ organizations will have to think about the risks related to explainable AI compared to its obvious benefits. They will have to manage these risks efficiently while ensuring that the information generated from an explainable AI system is not watered down.

Additionally, organizations must understand two things. Firstly, the costs associated with making AI transparent should not discourage them from incorporating such systems. Organizations must create a risk management plan to accommodate explainable models in such a way that the key information provided by them remains confidential. Secondly, organizations must improve their cybersecurity frameworks to detect and eliminate the bugs and network threats that could result in data breaches.

Deep learning is an integral part of AI. Deep learning models can be trained in a supervised, unsupervised or self-supervised manner, depending on the task. Deep neural networks are used in image recognition and processing, advanced speech recognition, natural language processing and machine translation. Unfortunately, while deep learning can handle much more complex tasks than conventional machine learning models, it also introduces the black box problem into daily operations and tasks.

As you know, artificial neural networks are loosely modeled on the working of the human brain. A network is built from layers of interconnected nodes: an input layer, one or more ‘hidden’ layers and an output layer. Each node carries out a basic mathematical operation, typically a weighted sum of its inputs followed by a simple nonlinear function, and the weights are learned from historical data. Really complex operations involve many layers and billions of such learned parameters. As a result, the output generated from such systems has little chance of being comprehensively verified and validated by AI experts in organizations.
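To make the layered structure concrete, here is a minimal sketch of a tiny fully connected network written in plain Python. The layer sizes, the random weights and the sigmoid activation are illustrative assumptions rather than a description of any particular production system. Even this toy has over a hundred numeric parameters, none of which carries an obvious human meaning, which hints at why networks with billions of them are so hard to inspect.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Create one fully connected layer with random (untrained) weights and biases."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-1, 1) for _ in range(n_out)]
    return weights, biases


def forward(layers, inputs):
    """Pass the inputs through each layer: weighted sum plus bias, then a sigmoid."""
    activations = inputs
    for weights, biases in layers:
        activations = [
            1.0 / (1.0 + math.exp(-(sum(w * a for w, a in zip(row, activations)) + b)))
            for row, b in zip(weights, biases)
        ]
    return activations


def count_parameters(layers):
    """Count every weight and bias in the network."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


rng = random.Random(0)
sizes = [4, 8, 8, 1]  # input layer, two hidden layers, output layer (assumed sizes)
layers = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]

output = forward(layers, [0.5, -1.2, 3.0, 0.0])
print("output:", output)
print("parameters:", count_parameters(layers))
```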

Organizations such as Deloitte and Google are working on the creation of tools and digital applications that can blast their way past the black box walls and uncover the data used to make key AI decisions in order to boost the transparency of intelligent systems.

To make AI more accountable, organizations have to reimagine their existing AI governance strategies. Here are some of the key areas wherein better governance can reduce transparency-based AI issues.

During the initial stages, organizations can prioritize trust and transparency while building their AI systems and training their neural networks. Keeping an eye on how AI service providers and vendors design the AI networks can alert the key decision-makers in organizations about the early issues related to an AI model’s competence and accuracy. In this way, having a hands-on approach during the system design phase can shed light on some of AI’s transparency-based issues for organizations to observe.

As regulations all over the world become more and more stringent regarding AI accountability, organizations can really benefit from making their AI models and systems compliant with such norms and standards. Organizations must push their AI vendors to create explainable AI systems. To reduce bias in AI’s algorithms, organizations can approach cloud-based service providers instead of recruiting expensive data experts and teams. Organizations can ease their compliance burden by clearly directing the cloud service providers to tick all the compliance-related boxes during the installation and implementation of AI systems in their workplaces. Apart from these points, organizations can also cover areas such as privacy and data security in their AI governance plan.

Artificial intelligence and deep learning are responsible for some of the most astounding technological advances we have made since the turn of the millennium. Fortunately, while 100% explainable AI does not exist yet, the concept of AI-powered systems being transparent is not an unachievable dream. It is up to the organizations implementing such systems to improve their AI governance and take risks in order to make it happen.

Naveen is the Founder and CEO of Allerin, a software solutions provider that delivers innovative and agile solutions to automate, inspire and impress. He is a seasoned professional with more than 20 years of experience, including extensive work in customizing open source products for cost optimization of large-scale IT deployments. He is currently working on Internet of Things solutions with Big Data Analytics. Naveen completed his programming qualifications in various Indian institutes.
