
The rapid advances in artificial intelligence (AI) as demonstrated by the recent launch of GPT-4 and previously by ChatGPT are generating a great deal of excitement.

Artificial intelligence continues to evolve, offering new possibilities across industries and many aspects of human existence and prompting numerous debates about its potential impact on our everyday lives and across the global economy.

The C-Suite of large organisations in different sectors is actively discussing whether and how such models may be deployed within their organisations, whilst at the same time end users have been adopting the models rapidly. However, Large Language Models (LLMs) built on Transformers with the self-attention mechanism are not the only area of AI that is advancing rapidly. Alongside the vast potential of LLMs and the Transformer-based approach that underlies them comes the rise of AI on the Edge (of the network), across the devices that we interact with in our daily lives.

The convergence of artificial intelligence with 5G and the Edge is set to revolutionise our world over the next few years. 5G networks have been growing rapidly (5G subscriptions already exceed 1 billion), and the pace of change at the Edge will only accelerate as Standalone (SA) 5G networks emerge, resulting in change at a faster pace than at any other point in human history. Every sector of the economy is set to be impacted.

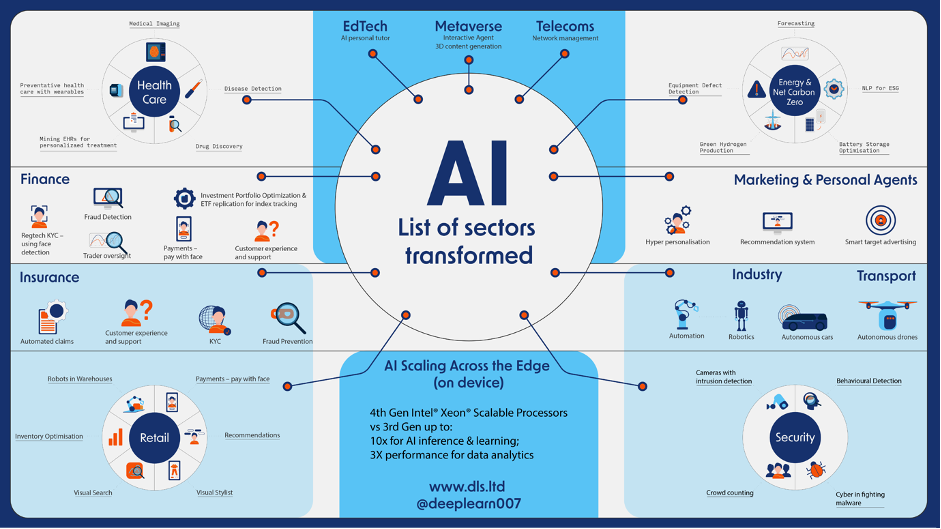

AI is set to transform every sector of the economy.

I am delighted to announce that I am working with Intel as an Intel Ambassador in relation to Intel® Xeon® Scalable processors. However, for the avoidance of doubt, the thoughts and opinions expressed within this article are my own. This is a product that I genuinely believe has the potential to deliver significant benefits in terms of both sustainability and the operational performance of AI models, particularly in the era of the Edge (on device) or the hybrid Cloud / Edge model (see below for more details).

At the heart of such advances will be the hardware that amounts to the brain of the computing system: the Central Processing Unit (CPU). A CPU undertakes critical tasks such as executing and processing instructions, and it plays a major role in the response time and speed of a computing system (or device).

The 4th Gen Intel® Xeon® Scalable processors use built-in accelerators, together with software optimizations over the previous generation, as an alternative and more efficient way to achieve higher performance than growing the CPU core count.

This results in more efficient CPU utilization, lower electricity consumption, and higher ROI, while helping businesses achieve their sustainability goals. The enhanced ROI comes from lower utility costs and from not needing to purchase additional accelerators, because Intel has integrated them into the CPU. Offloading work to the integrated accelerators also frees the CPU cores, which can then be saturated with additional workloads, further improving CPU utilization.

A case study of NEC's collaboration with Intel to achieve market-leading low power consumption in the 5G core network UPF, in support of green networks, illustrates this. The case study focuses on the User Plane Function (UPF) for 4G/5G networks, where CPU energy consumption was reduced by more than 30%. NEC noted that this was achieved “by using the Intel Xeon Scalable processor telemetry for the UPF’s load determination logic, NEC has enabled the dynamic control of hardware resources in real time for optimal power consumption.”
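To make telemetry-driven power control more concrete, here is a minimal Python sketch of a load-based controller. It is not NEC's load determination logic, which the case study does not publish. The class name `PowerController`, the 75% and 40% thresholds and the one-core-at-a-time step are all hypothetical choices for illustration. Each telemetry sample (utilisation between 0.0 and 1.0) either adds a core, parks a core or leaves the configuration unchanged. The gap between the two thresholds (hysteresis) stops the controller from flapping around a single boundary.

```python
from dataclasses import dataclass, field


@dataclass
class PowerController:
    """Hypothetical load-driven controller (not NEC's logic): maps each
    utilisation sample to a number of active cores, with hysteresis."""

    total_cores: int
    min_cores: int = 2
    scale_up_at: float = 0.75    # assumed: add a core above 75% utilisation
    scale_down_at: float = 0.40  # assumed: park a core below 40% utilisation
    active: int = field(init=False)

    def __post_init__(self) -> None:
        self.active = self.total_cores  # start with every core active

    def update(self, utilisation: float) -> int:
        """Apply one telemetry sample (0.0 to 1.0) and return the active-core count."""
        if utilisation > self.scale_up_at and self.active < self.total_cores:
            self.active += 1
        elif utilisation < self.scale_down_at and self.active > self.min_cores:
            self.active -= 1
        return self.active


controller = PowerController(total_cores=8)
for load in [0.2, 0.2, 0.3, 0.9]:
    print(load, controller.update(load))
```

With eight cores, the samples 0.2, 0.2 and 0.3 step the controller down to 7, 6 and then 5 active cores. The spike to 0.9 brings it back up to 6. A real system would act on richer telemetry and on frequency or idle states as well as core counts.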

The WEF published a survey at Davos 2023 in which 98% of CEOs stated that they needed to change their business models within the next 3 years. Technological advancement and the challenges of climate change were cited as major factors in business models becoming redundant.

Our business and policy leaders are facing the challenges of combating climate change and the energy cost crisis (which in turn require energy efficiency and a reduced carbon footprint), whilst also providing a business platform and economic model that allows for growth and the technological competitiveness needed to respond to changing consumer needs.

In order to achieve sustainability goals alongside growing revenues, we need greater efficiency in computational costs, whilst also enabling firms to respond with strategic agility to their changing business environment and to extract the greatest competitive advantage from their technological resources.

Examples will include the ability to leverage:

Voice technology: customer call centre assistants to help direct the customer to the relevant service, personalised assistants that may enable a user to adjust their home settings;

Natural Language: more advanced personal assistants and chatbots, sentiment evaluation, document classification and summarisation;

Computer Vision: traffic flow management systems that may reduce congestion, thereby reducing journey times and pollution; product defect detection in manufacturing facilities by scanning product components at scale; and medical imaging in healthcare;

Time Series: forecasting renewable energy supply and power demand, helping to match supply with demand;

Anomaly detection: preventing fraud and enhancing cybersecurity;

Analytics: helping firms better understand their customers and their internal operating efficiencies.

In the era of AI scaling across the Edge and ultra-low latency SA 5G networks, business leaders are likely to need AI capabilities that respond in near real time to customers’ needs and user requests. This is where Intel® Advanced Matrix Extensions (Intel® AMX) provides a strategic advantage.

AI capabilities may be significantly accelerated on the CPU with Intel® Advanced Matrix Extensions (Intel® AMX). Intel AMX is a built-in accelerator that improves the performance of Deep Learning training and inference on 4th Gen Intel® Xeon® Scalable processors. This makes it well suited to workloads such as natural language processing (NLP), recommendation systems, and image recognition.
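Intel AMX speeds up matrix multiplication by operating on two-dimensional tiles held in dedicated tile registers, each up to 16 rows of 64 bytes. For example, it multiplies INT8 inputs and accumulates into INT32, or multiplies BF16 inputs and accumulates into FP32. The plain-Python sketch below only illustrates that tiling pattern for INT8 values. It does not use AMX instructions and is far slower than a real kernel; in practice, frameworks reach AMX through optimized libraries such as oneDNN. The function name and the default tile sizes are assumptions chosen for illustration. The defaults are 16 x 16 output tiles with a depth of 64, mirroring one INT8 tile multiply.

```python
def tiled_matmul_int8(a, b, tile_m=16, tile_n=16, tile_k=64):
    """Multiply INT8-valued matrices (lists of lists) tile by tile.

    Illustrates the blocking pattern only: Python ints stand in for the
    INT32 accumulators, and no AMX instructions are used.
    """
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    for row in a + b:
        if any(not -128 <= v <= 127 for v in row):
            raise ValueError("inputs must fit in INT8 (-128..127)")

    c = [[0] * n for _ in range(m)]
    # Walk the output in tile_m x tile_n blocks and the shared dimension in
    # chunks of tile_k: each innermost block is one tile multiply-accumulate.
    for i0 in range(0, m, tile_m):
        for j0 in range(0, n, tile_n):
            for k0 in range(0, k, tile_k):
                for i in range(i0, min(i0 + tile_m, m)):
                    for j in range(j0, min(j0 + tile_n, n)):
                        acc = c[i][j]
                        for kk in range(k0, min(k0 + tile_k, k)):
                            acc += a[i][kk] * b[kk][j]
                        c[i][j] = acc
    return c


print(tiled_matmul_int8([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

The result is identical to an untiled multiply. Tiling only changes the order in which partial products are accumulated, which is what lets hardware keep a whole block of operands in tile registers at once.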

Unplanned outages in the manufacturing sector provide an example. They are very costly: the auto manufacturing sector reports that every minute of unplanned outage costs USD 22,000. The combined total cost of unplanned outages to the manufacturing sector is estimated at a vast $50 billion a year.
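The scale of these figures is easy to check. Taking the quoted USD 22,000 per minute at face value, this small Python helper converts minutes of downtime into cost. The 8-hour shift is an assumed example duration, and the function name is illustrative.

```python
COST_PER_MINUTE_USD = 22_000  # auto-sector figure quoted above


def downtime_cost_usd(minutes: float, cost_per_minute: float = COST_PER_MINUTE_USD) -> float:
    """Cost of an unplanned outage lasting `minutes`, at a flat per-minute rate."""
    return minutes * cost_per_minute


print(downtime_cost_usd(60))      # one hour: 1320000
print(downtime_cost_usd(8 * 60))  # assumed 8-hour shift: 10560000
```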

Machine Learning techniques may enable advance detection of such outages based upon historical data from the machines and local sensors. This allows timely interventions that limit outages and reduce machine degradation, extending valuable asset life, whilst also reducing revenue lost from missed production time. This would require capable CPUs, and in some cases GPUs, on the local devices and sensors that monitor the machinery.
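As a simple illustration of detecting unusual machine behaviour from sensor history, the sketch below flags readings that sit more than a chosen number of standard deviations from the mean of a trailing window (a rolling z-score). This is a deliberately basic statistical baseline rather than the Machine Learning models the paragraph refers to. The function name, the 20-sample window, the threshold of 3 and the vibration data are all assumptions.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_zscore_alerts(readings, window=20, threshold=3.0):
    """Yield (index, value) for readings more than `threshold` standard
    deviations from the mean of the previous `window` readings."""
    history = deque(maxlen=window)
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield i, value
        history.append(value)


# Hypothetical vibration readings: a steady pattern followed by a spike.
vibration = [10.0 if i % 2 == 0 else 10.2 for i in range(40)] + [15.0]
print(list(rolling_zscore_alerts(vibration)))  # [(40, 15.0)]
```

Flagged readings are still appended to the window, so a sustained fault gradually becomes the new baseline. A production system would typically need to handle that, for instance by excluding alerted values from the history.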

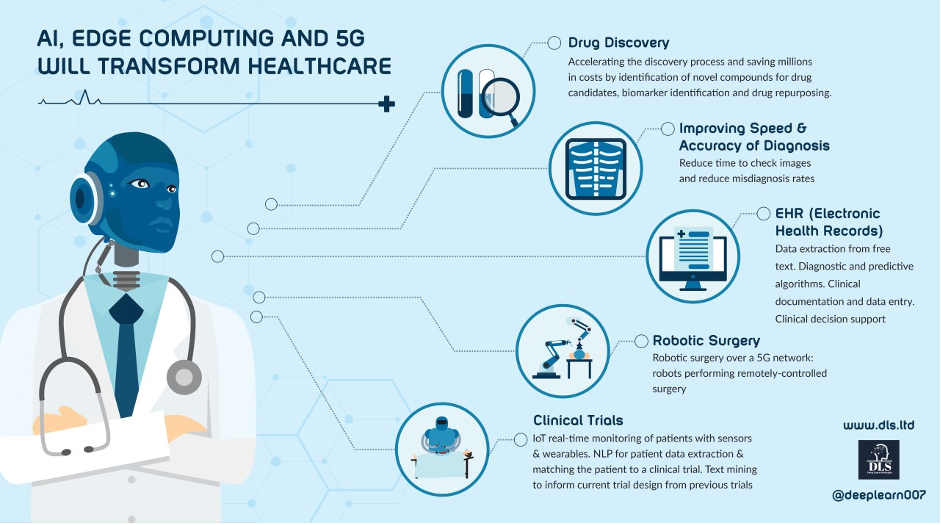

Furthermore, advances in healthcare with AI and remote devices are set to transform how patient needs are met. Devices can monitor vital bio readings in near real time and detect whether an older person has fallen over and is unable to get up, alerting their carers. They can also warn a physician to call the patient in for a check-up if vital bio readings pass a threshold. We may move into a world of preventative medicine, improving patient outcomes and reducing costs for both the patient and the overall economy.
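A minimal sketch of the alerting step might look like the following. It assumes the device already produces named vital-sign readings. Each reading is compared against a configured range, and any out-of-range value produces an alert message that could be forwarded to a carer or physician. The names `check_vitals`, `Range` and `LIMITS`, and the numeric ranges, are hypothetical placeholders for illustration only. They are not clinical guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Range:
    low: float
    high: float


# Hypothetical, illustrative limits only; not clinical guidance.
LIMITS = {
    "heart_rate_bpm": Range(50, 110),
    "spo2_percent": Range(92, 100),
}


def check_vitals(reading, limits=LIMITS):
    """Return one alert message per vital sign outside its configured range."""
    alerts = []
    for name, value in reading.items():
        allowed = limits.get(name)
        if allowed is not None and not allowed.low <= value <= allowed.high:
            alerts.append(f"{name}={value} outside [{allowed.low}, {allowed.high}]")
    return alerts


print(check_vitals({"heart_rate_bpm": 72, "spo2_percent": 97}))  # []
print(check_vitals({"heart_rate_bpm": 130, "spo2_percent": 89}))
```

Readings without a configured range are ignored rather than rejected. This keeps the check tolerant of new sensor types being added to a device.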

Indeed, there is vast potential to scale AI in healthcare, from speeding up drug discovery to applying NLP to electronic health records for predictive healthcare, so that a diagnosis and intervention can be made before too much damage occurs. Furthermore, AI on the edge has vast potential to impact areas such as clinical trials as well as robotic procedures.

Edge SKUs of the 4th Gen Intel Xeon Scalable platform provide long-life manufacturing availability to help customers extend the life of their systems and provide replacements throughout the equipment life cycle.

4th Gen Intel® Xeon® Scalable processors have the most built-in accelerators of any CPU on the market, delivering performance and power efficiency advantages across the fastest-growing workload types in AI, analytics, networking, storage, and HPC. The 4th Gen Xeon SP has extensive hardware and software optimizations that support matrix multiply instructions across market-relevant frameworks, toolkits, and libraries, enhancing AI training and inference performance. The built-in accelerators provide an advantage for IoT devices with AI embedded into the devices and sensors for near real-time engagement with users (be they human customers, or machines in machine-to-machine engagement).

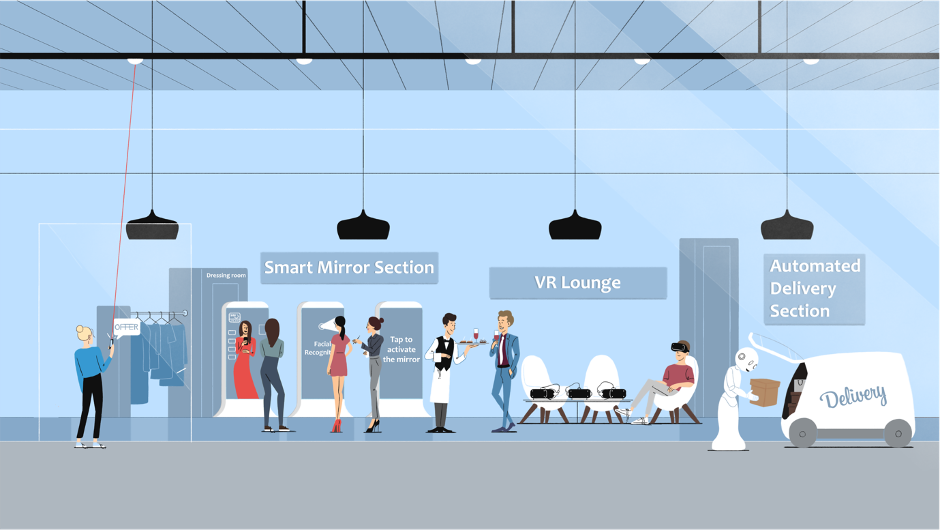

Consider a use case. In a world where AI meets the IoT (referred to as the AIoT), firms will be able to respond in near real time to customers’ needs. Imagine a customer walking down the mall or high street seeking the latest products or bespoke offerings. Perhaps the customer has been making queries on their mobile device, and the stores in the vicinity respond dynamically with targeted promotions, generated on the fly, that are relevant to the customer’s interests.

4th Gen Intel® Xeon® Scalable processors have seamlessly integrated accelerators to speed up data movement and compression for faster networking, boost query throughput for more responsive analytics, and offload scheduling and queue management to dynamically balance loads across multiple cores. To enable new built-in accelerator features, Intel supports the ecosystem with OS level software, libraries, and APIs. Such features are key to enabling a good user experience in ultra-low latency environments and meeting customer needs in near real-time whatever the sector and use case (dynamic retail brand promotions on the fly, autonomous robotics, healthcare analytics, IoT device responses).

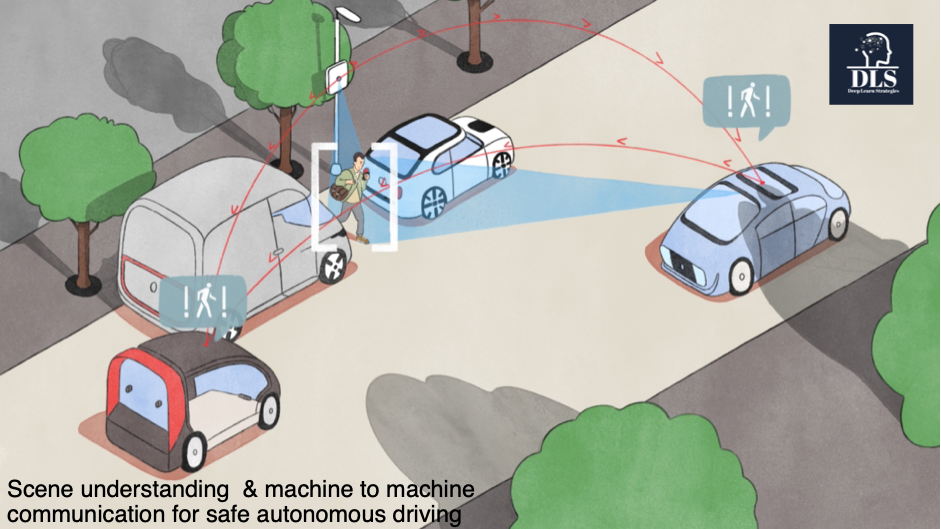

Consider a use case that may arise once autonomous systems arrive at scale. It would not be a sensible approach to have an autonomous vehicle (AV) wait for a traffic light to change and then wait again for a remote server to receive its request and reply with what the AV should do next. Rather, the AV will make decisions autonomously on the device itself (at the edge of the network), because the delay could cause an accident. For example, if a car ahead signals and brakes before turning right, the AV must understand and respond dynamically in real time. Autonomous systems (robots, vehicles, drones), and indeed devices and sensors that run AI within the device, will need energy-efficient and powerful CPUs and GPUs to undertake their tasks efficiently.
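The cost of waiting for a remote decision can be expressed as distance travelled. The helper below converts a vehicle speed and a decision latency into the metres covered before the vehicle can act. The function name is illustrative, and the speed and latency values are assumptions, since real network round trips vary widely.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Metres a vehicle travels while waiting `latency_ms` for a decision."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


# Assumed example: 36 km/h (10 m/s) with a hypothetical 100 ms remote round trip.
print(distance_during_latency_m(36, 100))  # 1.0
```

At 36 km/h, a 100 ms round trip to a remote server corresponds to 1 m of travel before any instruction arrives. A decision made on the device avoids the network portion of that delay entirely.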

This is the world that we are entering, and that will scale across the rest of this decade: real-time, dynamic responses, machine-to-machine communication, and AI on the edge of the network (on the devices and sensors around us).

The built-in accelerators of the 4th Gen Intel® Xeon® Scalable processors enhance efficiency in two ways:

- Up to 1.8x higher throughput for packet processing on Open vSwitch (OVS) with Intel® Data Streaming Accelerator (Intel® DSA), compared to software on cores without acceleration.
- Up to 3.27x higher performance on packet forwarding with Intel® Dynamic Load Balancer (Intel® DLB), compared to software queue management on cores without acceleration.

We are likely to experience the following:

Continued growth in the cloud as data volumes grow, with AI models residing in the cloud. Such data may be used for training AI algorithms, data lakes and analytics. Powerful Central Processing Units and Graphics Processing Units will be key to analysing this data and finding meaningful insights. They will also be key to training new Machine Learning algorithms that improve customer service or drive internal business process efficiencies. It will be essential to ensure that such data centres are energy efficient and contribute to reducing carbon footprint whilst also enabling high performance. 4th Gen Intel® Xeon® Scalable processors have a range of features for managing power and performance and are manufactured with 90%+ renewable electricity.

The hybrid Cloud / Edge model, whereby AI is trained in the cloud and performs inference on the edge of the network, closer to where the data is generated (often on the device). Powerful CPUs and GPUs with built-in accelerators will help deliver better-performing algorithms efficiently;

AI on the edge itself (on the device), allowing near-instant responses to users (customers / clients), with historical data transferred to the cloud. As AI R&D and neural compression technology advance, we may attain a level of AI that goes beyond inference and actually learns continuously from new data, responding dynamically to unseen environments with causal reasoning. Efficiency will be key to the successful deployment of AI onto the Edge, across devices and sensors. These will often operate in relatively low-power (constrained) environments where battery life may be of key importance. This is why the power efficiency of the 4th Gen Intel® Xeon® Scalable processors is an advantage for deployment across the edge of the network.

4th Gen Intel Xeon Scalable processors also have power management tools to enable more control and greater operational savings. For example, telemetry tools are available to help intelligently monitor and manage CPU resources, build models that help predict peak loads on the data center or network, and tune CPU frequencies to reduce electricity use when demand is lower. The new Optimized Power Mode in the platform BIOS can deliver up to 20% socket power savings with a less than 5% performance impact for selected workloads.
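The Optimized Power Mode figures quoted above are up to 20% socket power savings for less than a 5% performance impact on selected workloads. Taking both at face value, the effect on performance per watt of socket power follows from a simple ratio. This sketch assumes both figures apply to the same workload at the same time, which the source does not state explicitly. The function name is illustrative.

```python
def perf_per_watt_ratio(power_saving: float, perf_loss: float) -> float:
    """New/old performance per watt, given a fractional power saving and a
    fractional performance loss (e.g. 0.20 and 0.05)."""
    if not 0 <= power_saving < 1:
        raise ValueError("power_saving must be in [0, 1)")
    return (1 - perf_loss) / (1 - power_saving)


print(round(perf_per_watt_ratio(0.20, 0.05), 4))  # 1.1875
```

Under those assumptions, the socket does roughly 19% more work per unit of energy. The calculation ignores power drawn outside the socket, such as memory, storage and cooling.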

The reasons for this radical technological change are as follows:

The rapid advances of AI in areas such as natural language and computer vision, with Transformers with Self-Attention and Generative AI being key examples.

Standalone (SA) 5G networks will enable not just substantially greater speed but also ultra-low latency, estimated at between 1 ms and 4 ms. Latency refers to the time it takes to send a signal from a server to a client device. Ultra-low latency is essential in a world where devices communicate with each other, and it enables near real-time responses in dynamic environments. It will also help Augmented and Virtual Reality perform as intended by overcoming latency issues.
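A back-of-the-envelope calculation shows why ultra-low latency pushes computation towards the Edge. Light travels through optical fibre at roughly 200 km per millisecond, about two-thirds of its speed in a vacuum. When latency is measured as a round trip, the budget therefore caps how far away the responding server can physically be. The sketch below ignores radio access, switching and queuing delays, apart from an optional processing allowance, so it gives an upper bound only. The function name and the rounded constant are assumptions for illustration.

```python
SPEED_IN_FIBRE_KM_PER_MS = 200.0  # approximation: c / refractive index of ~1.5


def max_server_distance_km(round_trip_budget_ms: float, processing_ms: float = 0.0) -> float:
    """Upper bound on one-way fibre distance to a server for a round-trip budget.

    Ignores radio, switching and queuing delays other than `processing_ms`.
    """
    propagation_ms = max(round_trip_budget_ms - processing_ms, 0.0)
    return propagation_ms / 2 * SPEED_IN_FIBRE_KM_PER_MS


for budget_ms in (1.0, 4.0):
    print(budget_ms, max_server_distance_km(budget_ms))  # prints 1.0 100.0, then 4.0 400.0
```

With a 1 ms round-trip budget, the server can be at most about 100 km of fibre away before any processing time is spent. Any real processing shrinks that radius further, which favours placing compute at the Edge.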

Furthermore, SA 5G networks will allow for a substantial increase in network capacity relative to 4G networks. This in turn will enable a massive increase in device connectivity.

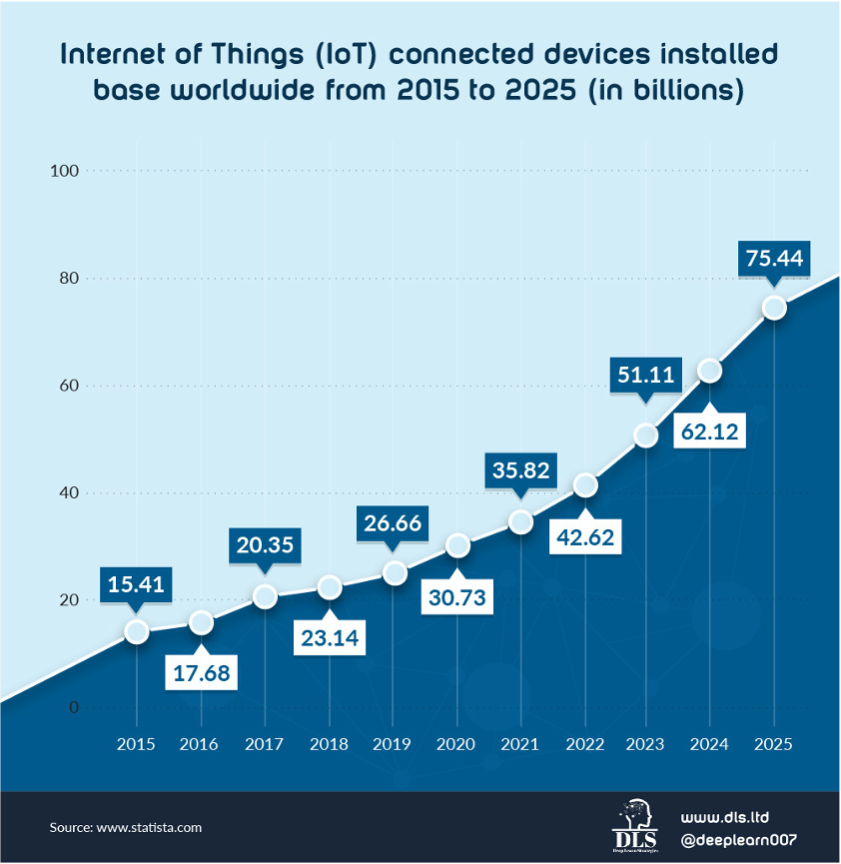

This in turn will enable the scaling of the IoT. Statista have forecast that by 2025 there will be 75 billion internet-connected devices. This equates to a staggering 9+ devices per person on the planet!

This dramatic increase in IoT devices will in turn lead to a vast increase in data. IDC and Seagate forecast that by 2025 there will be 175 zettabytes of data generated, with one third of that data being generated and consumed in real time (such as video or extended reality). For comparison, IDC states that the amount of data generated in 2020 amounted to 64 zettabytes.
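The headline ratios behind these forecasts can be reproduced directly. The world population figure of roughly 8.1 billion is an assumption added here. The other inputs are the forecasts quoted above.

```python
DEVICES_2025 = 75e9        # Statista forecast quoted above
WORLD_POPULATION = 8.1e9   # assumption: approximate world population in 2025
DATA_2025_ZB = 175         # IDC/Seagate forecast quoted above
DATA_2020_ZB = 64          # IDC figure for 2020 quoted above

devices_per_person = DEVICES_2025 / WORLD_POPULATION
data_growth = DATA_2025_ZB / DATA_2020_ZB
real_time_zb = DATA_2025_ZB / 3  # the one-third real-time share

print(f"{devices_per_person:.1f} devices per person")                # 9.3
print(f"{data_growth:.2f}x more data than in 2020")                  # 2.73
print(f"~{real_time_zb:.0f} ZB generated and consumed in real time")  # 58
```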

This in turn will lead to the need for greater use of Machine Learning and Data Science to manage the explosion of data and to gain actionable insights.

We’ll need AI chips (CPUs and GPUs) that are energy- and compute-efficient enough to last in the low-power, battery-constrained environments in which many IoT devices and sensors will operate.

Furthermore, reducing traffic back and forth between server and client will reduce computational costs. However, it will require local devices to have sufficient computational capability whilst also being resource efficient (constrained power and carbon footprint).

Performance gains from 4th Gen Intel® Xeon® Scalable include the following:

Accelerate AI workloads 3x to 5x for Deep Learning inference on SSD-ResNet34 and up to 2x for training on ResNet50 v1.5 with Intel® Advanced Matrix Extensions (Intel® AMX), compared with the previous generation;

Run cloud and networking workloads using fewer cores with faster cryptography. Increase client density by up to 4.35x on an open-source NGINX web server with Intel® QuickAssist Technology (Intel® QAT) using RSA4K, compared to software running on CPU cores without acceleration;

More networking compute at lower latency while helping preserve data integrity. Achieve up to 79% higher storage I/O per second (IOPS) with as much as 45% lower latency when using NVMe over TCP, accelerating CRC32C error checking with Intel® Data Streaming Accelerator (Intel® DSA), compared to software error checking without acceleration;

Improve database and analytics performance with 1.91x higher throughput for data decompression in the open-source RocksDB engine, using Intel® In-Memory Analytics Accelerator (Intel® IAA), compared to software compression on cores without acceleration solutions. Also achieve 8.9x increased memory-to-memory transfer using Intel® Data Streaming Accelerator (Intel® DSA), versus previous-generation direct memory access;

For 5G vRAN deployments, increase network capacity by up to 2x with the new Intel AVX for vRAN instruction set acceleration, compared to the previous generation.
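CRC32C (the Castagnoli CRC, also used for the optional header and data digests in NVMe over TCP) is the error check referred to in the NVMe over TCP figure above. For reference, a plain bit-by-bit software implementation looks like the sketch below. This is the kind of per-byte work that, according to those figures, Intel DSA can take off the CPU cores. It is written for clarity rather than speed; production software uses lookup tables or dedicated CRC instructions. The function name is an assumption.

```python
CRC32C_POLY_REFLECTED = 0x82F63B78  # Castagnoli polynomial 0x1EDC6F41, bit-reversed


def crc32c(data: bytes, crc: int = 0) -> int:
    """Bitwise CRC-32C. Pass a previous result as `crc` to continue a running checksum."""
    crc ^= 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            # Shift right one bit; if the bit shifted out was 1, fold in the polynomial.
            crc = (crc >> 1) ^ (CRC32C_POLY_REFLECTED if crc & 1 else 0)
    return crc ^ 0xFFFFFFFF


print(hex(crc32c(b"123456789")))  # 0xe3069283 (the standard CRC-32C check value)
```

Because the running value can be passed back in, a checksum can be computed incrementally as data arrives. This matches how streaming storage and network paths process payloads.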

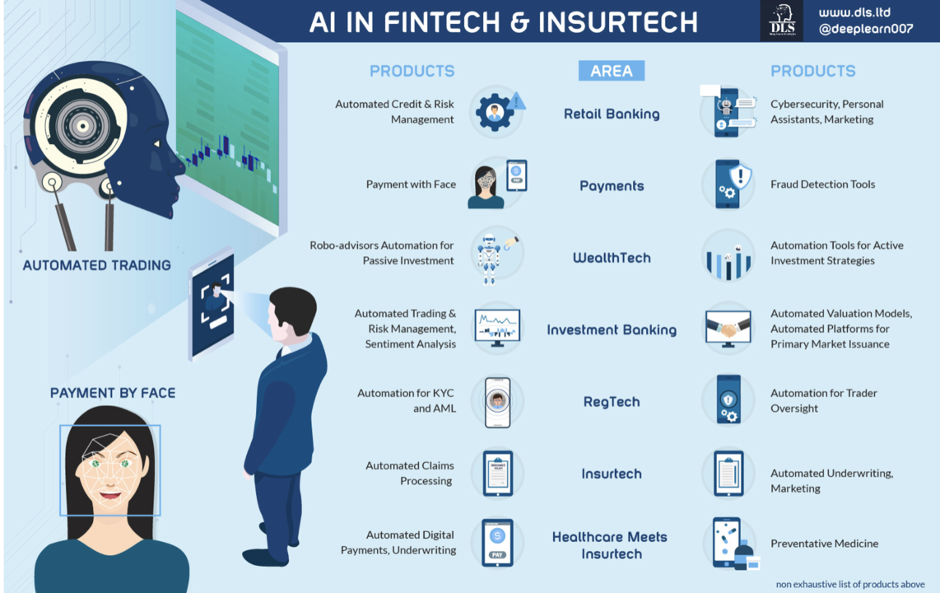

The Financial Services sector including banking, insurance and the emerging FinTech and InsurTech sectors will all be impacted by the scaling of data, the rise of the edge and AIoT.

Use cases will range from biometric ID using Computer Vision for face recognition, to Computer Vision that lets insurers automate claims payments (for example, by verifying the insured car and assessing the damage), to natural language and voice applications for customer service.

Security is likely to be a key issue in the era of the AIoT. As SA 5G networks expand and scale, device security will be critical in all areas, whether Financial Services, healthcare, manufacturing, smart cities or our homes.

Businesses need to protect data and remain compliant with privacy regulations whether deploying on premises or in the cloud. 4th Gen Intel® Xeon® Scalable processors help bring a zero-trust security strategy to life while unlocking new opportunities for business collaboration and insights even with sensitive or regulated data. Intel® Software Guard Extensions (Intel® SGX) is the most researched, updated, and deployed confidential computing technology in data centers on the market today, with the smallest trust boundary of any confidential computing technology in the data center today. Built-in accelerators for encryption help free up CPU cores while improving performance.

The rise of the AIoT enabled by SA 5G networks, and the forecast dramatic increase in data created by IoT devices, present both opportunities and threats for firms. Preparing in advance and investing in suitable hardware, such as 4th Gen Intel® Xeon® Scalable processors, will enable business leaders to prepare for this exciting new world and to be positioned for the opportunities rather than drown in the threats.

It also enables firms to scale AI and data analytics capabilities whilst retaining a focus on sustainability, given the efficiency gains it provides.

The opportunities for firms positioned to take advantage may be substantial. For example, a report commissioned by Intel and conducted by Ovum forecast that the media and entertainment sector may generate $1.3 trillion in revenues by 2028.

There has been a great deal of excitement recently about Large Language Models (LLMs) for NLP, such as GPT-3 and ChatGPT. However, over the next few years, neural compression will increasingly allow Deep Learning models to run on mobile devices and sensors. These include Computer Vision models, Transformer models with Self-Attention for language, and potentially even multimodal models. The devices, sensors and local data centres will need powerful CPUs and GPUs for AI models to operate efficiently and as intended. With this in mind, the 4th Gen Intel Xeon Scalable processors with built-in accelerators are a great match for the era of the AIoT, whilst also helping to meet sustainability obligations.
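Neural compression covers several techniques, including pruning, distillation and quantization. As a minimal sketch of one of them, the Python below applies symmetric per-tensor INT8 quantization to a list of weights. Each weight is stored as an 8-bit integer plus one shared scale factor, a quarter of the storage of 32-bit floats. The function names and sample weights are hypothetical. Real toolchains add calibration, per-channel scales and quantized kernels on top of this idea.

```python
def quantize_int8(weights):
    """Symmetric per-tensor INT8 quantization: w ~= scale * q, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from INT8 codes and the shared scale."""
    return [scale * v for v in q]


weights = [0.5, -1.0, 0.25, 0.0]  # hypothetical layer weights
q, scale = quantize_int8(weights)
print(q, scale)
print(dequantize(q, scale))
```

Each reconstructed weight is within half a quantization step (`scale / 2`) of the original. That bounded error is what lets many models tolerate INT8 inference with little loss of accuracy.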

For the purposes of this article Artificial Intelligence is defined as the area of developing computing systems which are capable of performing tasks that humans are very good at, for example recognising objects, recognising and making sense of speech, and decision making in a constrained environment.

Machine Learning is defined as the field of AI that applies statistical methods to enable computer systems to learn from the data towards an end goal. The term was introduced by Arthur Samuel in 1959.

Neural Networks are biologically inspired networks that extract abstract features from the data in a hierarchical fashion. Deep Learning refers to the field of neural networks with several hidden layers. Such a neural network is often referred to as a deep neural network.

Intel® QuickAssist Technology (Intel® QAT)

Help reduce system resource consumption by providing accelerated cryptography, key protection, and data compression with Intel® QuickAssist Technology (Intel® QAT). By offloading encryption and decryption, this built-in accelerator helps free up processor cores and helps systems serve a larger number of clients.

Intel® Data Streaming Accelerator (Intel® DSA)

Drive high performance for storage, networking, and data-intensive workloads by improving streaming data movement and transformation operations. Intel® Data Streaming Accelerator (Intel® DSA) is designed to offload the most common data movement tasks that cause overhead in data-centre-scale deployments. Intel DSA helps speed up data movement across the CPU, memory, and caches, as well as all attached memory, storage, and network devices.

Intel® Dynamic Load Balancer (Intel® DLB)

Improve system performance when handling network data on multicore Intel® Xeon® Scalable processors. Intel® Dynamic Load Balancer (Intel® DLB) efficiently distributes network processing across multiple CPU cores/threads, dynamically redistributing network data as the system load varies. Intel DLB also restores the order of networking data packets that are processed simultaneously on different CPU cores.
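Intel DLB performs two jobs: load-aware distribution and order restoration. The small Python model below illustrates both in software. It is not how Intel DLB is programmed. It assigns each packet to whichever simulated worker core becomes free first, then uses a reorder buffer so packets are released strictly in sequence order even when they finish out of order. The function names, the cost model, and the assumption that all packets are available at time zero and numbered from 0 are hypothetical simplifications.

```python
import heapq


def balance_and_reorder(packets, num_workers, cost):
    """Software model (not the Intel DLB interface) of load balancing plus reordering.

    packets: list of (seq, payload), seq numbered 0, 1, 2, ... in arrival order,
             all assumed to be available at time zero.
    cost:    function giving the processing time of a payload (arbitrary units).
    Returns (assignments, released): seq -> worker id, and payloads in seq order.
    """
    # Min-heap of (time the worker becomes free, worker id): least-loaded first.
    free_at = [(0.0, w) for w in range(num_workers)]
    heapq.heapify(free_at)
    assignments, finished = {}, []
    for seq, payload in packets:
        start, worker = heapq.heappop(free_at)
        done = start + cost(payload)
        assignments[seq] = worker
        finished.append((done, seq, payload))
        heapq.heappush(free_at, (done, worker))

    # Packets complete out of order; a reorder buffer releases a packet only
    # once every lower sequence number has already been released.
    finished.sort()
    pending, released, next_seq = [], [], 0
    for _, seq, payload in finished:
        heapq.heappush(pending, (seq, payload))
        while pending and pending[0][0] == next_seq:
            released.append(heapq.heappop(pending)[1])
            next_seq += 1
    return assignments, released


def slow_first(payload):
    """Hypothetical cost model: packet p0 is expensive, the rest are cheap."""
    return 10 if payload == "p0" else 1


packets = [(0, "p0"), (1, "p1"), (2, "p2"), (3, "p3")]
print(balance_and_reorder(packets, num_workers=2, cost=slow_first))
```

Here p0 occupies one worker while p1 to p3 all finish on the other, so completion order is p1, p2, p3, p0. The reorder buffer still releases p0 to p3 in sequence, which is the ordering guarantee the paragraph describes.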

Intel® In-Memory Analytics Accelerator

Run database and analytics workloads faster, with potentially greater power efficiency. Intel® In-Memory Analytics Accelerator (Intel® IAA) increases query throughput and decreases the memory footprint for in-memory database and big data analytics workloads. Intel IAA is ideal for in-memory databases, open-source databases and data stores like RocksDB, Redis, Cassandra, and MySQL.

Compute Express Link (CXL)

This is an exciting new development. Sapphire Rapids (SPR), the codename for the 4th Gen Intel® Xeon® Scalable processors, is the first product that supports CXL-attached devices for the AIoT across the Edge. It delivers reduced compute latency in the data centre and lower TCO with Compute Express Link (CXL) 1.1 for next-generation workloads. CXL is an alternate protocol that runs across the standard PCIe physical layer and can support both standard PCIe devices and CXL devices on the same link. CXL provides a critical capability to create a unified, coherent memory space between CPUs and accelerators, and it will revolutionise how data centre server architectures are built for years to come.

Imtiaz Adam is a Hybrid Strategist and Data Scientist. He is focussed on the latest developments in artificial intelligence and machine learning techniques, with a particular focus on deep learning. Imtiaz holds an MSc in Computer Science with research in AI (Distinction) from the University of London and an MBA (Distinction). He is a Sloan Fellow in Strategy at London Business School and holds an MSc in Finance with Quantitative Econometric Modelling (Distinction) from Cass Business School. He is the Founder of Deep Learn Strategies Limited, and served as Director & Global Head of a business he founded at Morgan Stanley in Climate Finance & ESG Strategic Advisory. He has strong expertise in enterprise sales and marketing, data science, and corporate and business strategy.
