In an era dominated by artificial intelligence (AI), the quest for transparency and accountability has emerged as a critical concern.

IBM's Watsonx stands at the forefront of efforts to address this challenge, offering solutions aimed at bridging the gap between AI transparency and accountability.

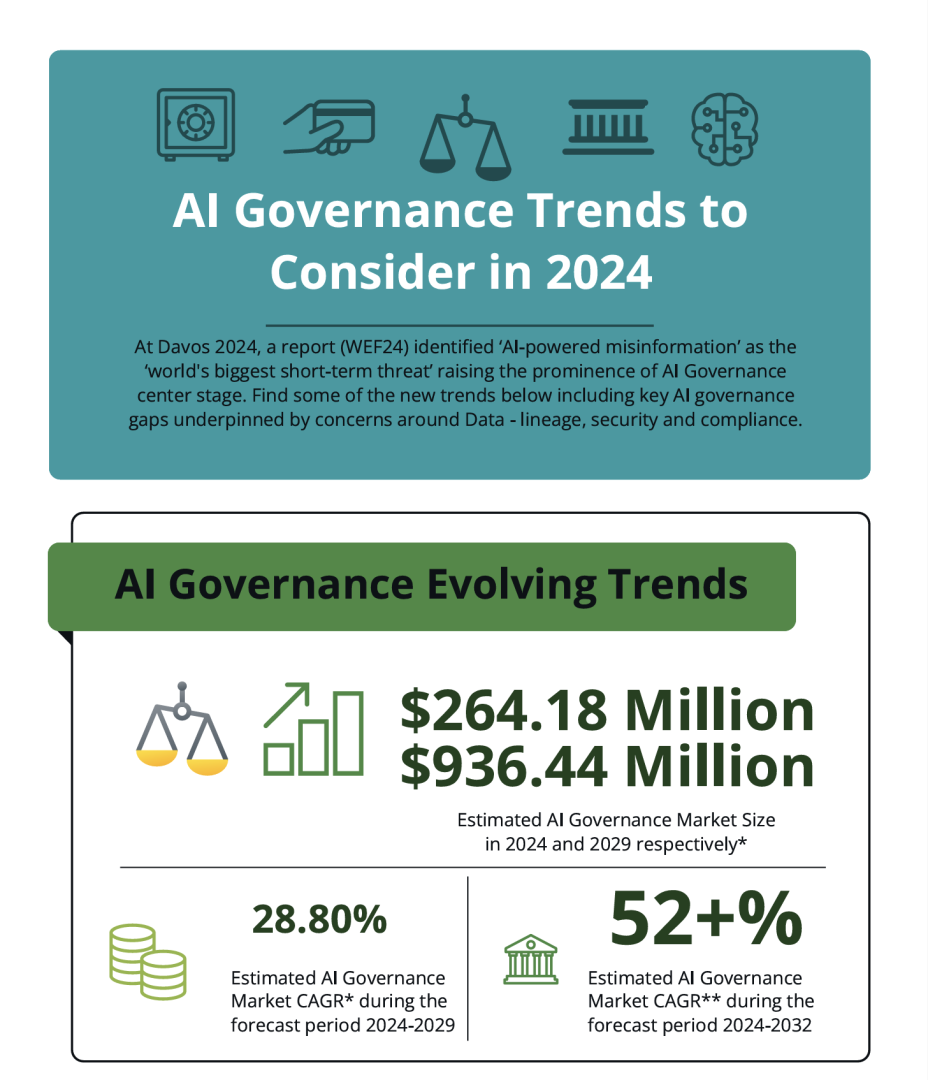

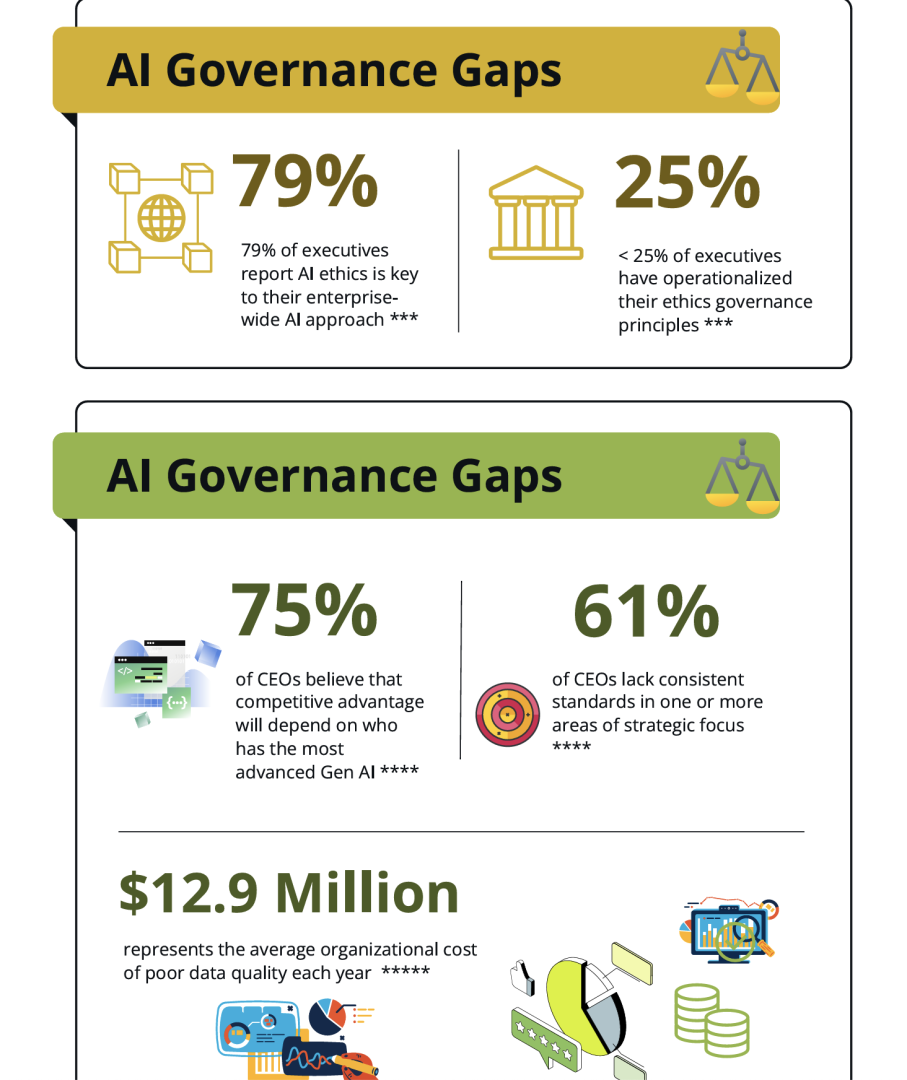

At Davos, a new report identified ‘AI-powered misinformation’ as the ‘world's biggest short-term threat’, bringing AI governance fully to centre stage. For while not every AI model is created equal, every model needs to be governed responsibly. AI models can also attract a juxtaposition of confidence and concern, with the opaque nature of input data and the resulting model outputs as just one example. In practice, organizations of all sizes are often left struggling to explain how the AI tools they use are working and how results have been determined, and find it difficult to highlight the risks involved to stakeholders too, impacting buy-in and optimal outcomes alike.

Indeed, recent studies reflect this juxtaposition of opportunity and concern. On the one hand, IBM Institute for Business Value (IBV) research finds that 75% of CEOs believe competitive advantage will depend on who has the most advanced Generative AI, supported by work by EY that finds 70% of CEOs are already accelerating their GenAI investments to maintain competitive advantage. On the other hand, and concerningly, the IBV study also finds that 61% of CEOs report lacking consistent standards in one or more areas of their strategic focus, whilst EY’s research finds some 68% of CEOs reporting that GenAI uncertainty is creating challenges for adoption.

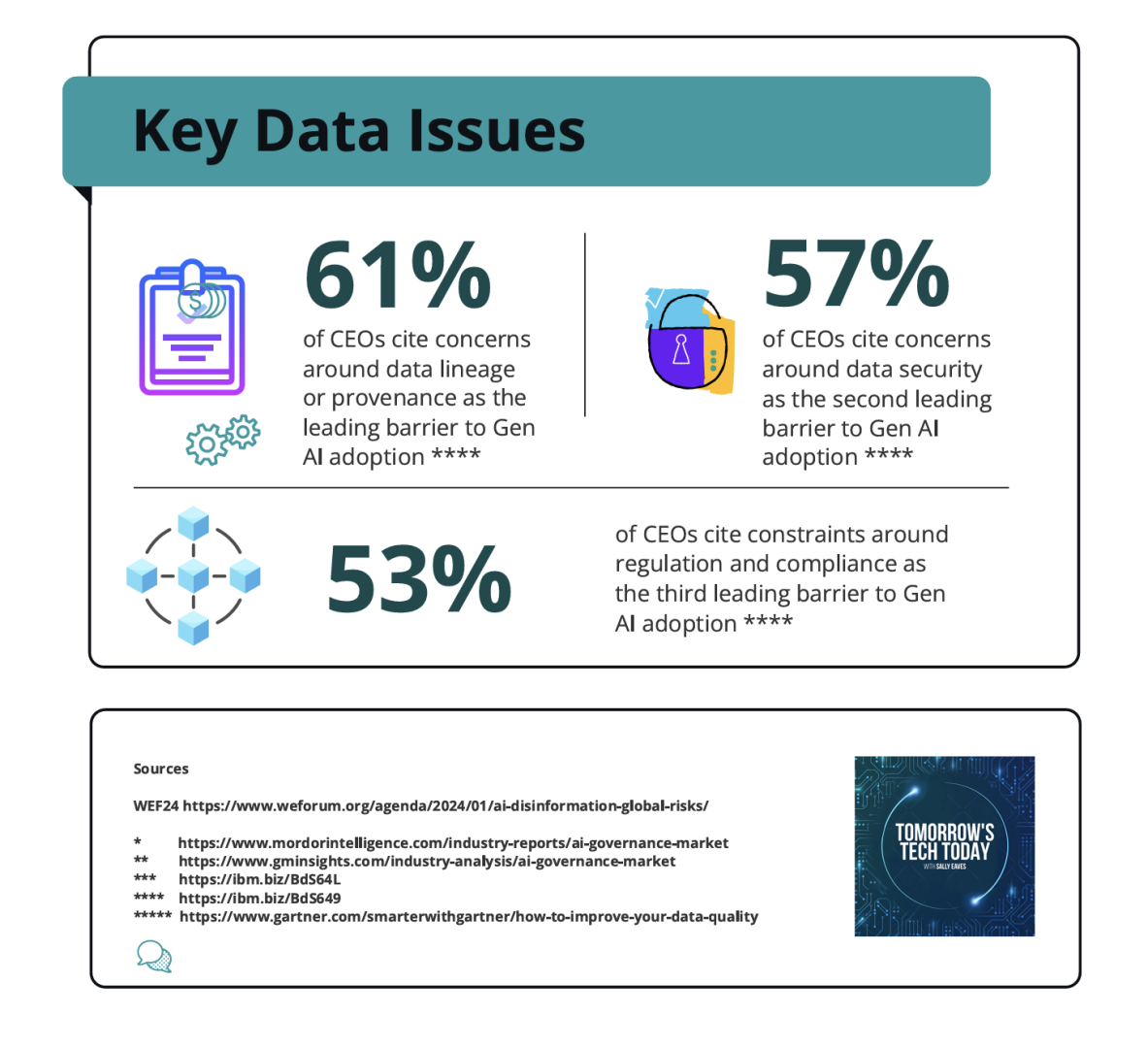

Additionally, an IBM Institute for Business Value 2023 study on CEO decision-making in the Age of AI identified concerns about the lineage or provenance of data as the number one barrier to generative AI adoption (61%), with fears, concerns and complexity around security and compliance continually raised across research and in-practice dialogue and experience too. More insights on these important juxtapositions between intention, awareness and action regarding AI adoption and its governance can be explored in this original infographic.

Businesses are seeking ways to better understand how their AI models are making decisions. And this is where IBM watsonx.governance fits in! It is the latest release within watsonx, a comprehensive AI and data platform that also includes watsonx.ai, a studio for foundation models, generative AI and machine learning, and watsonx.data, a fit-for-purpose data store built on an open data lakehouse architecture. So what is watsonx.governance?

Read on to see how this development helps organizations make sure that their AI model results are accurate, transparent, fair and explainable, and that AI use is governed for the broader good, hosted on one unified platform governing both Generative AI and Predictive Machine Learning (ML) across the entire AI lifecycle.

AI governance refers to the defined set of policies, practices, processes and rules established to set guardrails to govern the use, development, deployment and management of AI tools and capabilities. The focus on organizational AI governance is fast moving to centre stage as large AI models are becoming a de facto business tool and a catalyst for responsible innovation too.

Governance is critical to ensure that AI adoption is done responsibly with transparency and trust, mitigating bias and providing safe, secure, private and accountable outcomes. This is particularly important for Large Language Models (LLMs), which can generate content that is difficult to distinguish from human-authored content, and which are prone to hallucinations and capable of producing erroneous content. LLMs lack ‘true’ comprehension and fact-checking capabilities, so governance is needed to provide the accountability required to ‘catch’ such errors in the generated content.

Generative AI requires high-quality data to train and generate accurate, actionable insights. Risks associated with the data used include limited knowledge of the origin, variability, verifiability and quality of the training data. A lack of quality data can perpetuate bias, discrimination or misinformation, and a lack of transparency into model development and deployment hinders the explainability of model outcomes.

Additionally, and especially in today’s dynamic exploratory phase, firms are deploying a combination of LLMs from various sources, including the open source community, and individuals and organizations alike are increasingly experimenting with the use of prompts. This is also a matter of privacy. LLMs rely on extensive datasets for training and governance, making it imperative to address concerns regarding the collection, storage and retention of this data.

In combination, this context creates a dynamic landscape of new opportunities alongside an expanded threat surface for risks. And this is set against a backdrop of growing and changing regulations and industry standards, where non-compliance can result in audits, fines and reputational damage. Relevant examples of legislation and policy include the recent SEC Rulings, the White House AI Executive Order, findings from the recent UK AI Safety Summit, and the recently released EU AI Act. More of my thoughts on this are available in depth here.

Clearly, AI governance is an interrelated and global challenge, especially as organizations of all sizes increasingly adopt generative AI for an expanded range of applications. Organizations across industries need a toolset to proactively establish internal policies and guardrails for the use of AI to help embed responsibility, transparency and explainability into AI workflows.

It is critical then to anchor the significant Generative AI value proposition with a robust infrastructure purpose-built for directing, managing and monitoring AI across the model lifecycle. This can also help ensure that those who want to experiment with generative AI models are able to, but in a way that does not place the organization at risk.

To ‘get governance right’, the detail really matters! And IBM’s recent general availability announcement of watsonx.governance does exactly that - giving you the capacity to create your own guardrails for using AI and managing the AI lifecycle in a way that works for your organization. This strengthens the ability to proactively detect and mitigate risk, manage regulatory requirements and address ethical concerns.

Watsonx.governance is designed to be a holistic one-stop-shop for businesses navigating how to deploy and manage both generative AI and ML models. Underpinned by a strong foundation of IBM’s AI governance technologies, watsonx.governance can help you operationalise AI with confidence, with 3 pillars of innovation embedded within the platform by design.

✅ Lifecycle governance: use automated tools and processes to monitor and manage across the AI lifecycle for both generative AI and ML models.

· Govern large language models (LLMs) built in watsonx.ai and 3rd party LLMs built on 3rd party platforms including Amazon Bedrock, Microsoft Azure and OpenAI. Deploy on cloud and on-premises.

· Govern ML models built in 3rd party tools including AWS, Microsoft and Google, on cloud or on-premises.

🔒 Risk management: proactively monitor, detect and alert for risk based on pre-set thresholds. Monitor for fairness, bias, drift, and new generative AI metrics.

🔄 Compliance: facilitate compliance with internal policies, industry standards and AI regulations worldwide. Use automated factsheets - a “nutrition label” for AI - to collect and document model metadata in support of audits and inquiries.
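To make the risk management pillar above more concrete, here is a minimal Python sketch of alerting against pre-set thresholds. It is not the watsonx.governance API: the `Threshold` class, the function names, the metric names and the threshold values are all hypothetical, chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Threshold:
    """A pre-set bound for one monitored metric (hypothetical structure)."""
    metric: str
    minimum: Optional[float] = None  # alert when the value drops below this
    maximum: Optional[float] = None  # alert when the value rises above this


def disparate_impact(favourable_monitored: int, total_monitored: int,
                     favourable_reference: int, total_reference: int) -> float:
    """Ratio of favourable-outcome rates: monitored group / reference group."""
    monitored_rate = favourable_monitored / total_monitored
    reference_rate = favourable_reference / total_reference
    return monitored_rate / reference_rate


def check_thresholds(metrics: dict, thresholds: list) -> list:
    """Return one alert message per metric that breaches its threshold."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            continue  # metric not reported in this evaluation window
        if t.minimum is not None and value < t.minimum:
            alerts.append(f"{t.metric}={value:.2f} is below minimum {t.minimum:.2f}")
        if t.maximum is not None and value > t.maximum:
            alerts.append(f"{t.metric}={value:.2f} is above maximum {t.maximum:.2f}")
    return alerts


# Illustrative values only; real thresholds are an organisational policy choice.
thresholds = [
    Threshold("disparate_impact", minimum=0.80),
    Threshold("accuracy_drift", maximum=0.05),
]
metrics = {
    "disparate_impact": disparate_impact(30, 100, 50, 100),  # 0.30 / 0.50
    "accuracy_drift": 0.02,
}
alerts = check_thresholds(metrics, thresholds)
for message in alerts:
    print("ALERT:", message)
```

In this made-up example the fairness metric falls below its minimum and raises an alert, while the drift metric stays within bounds. The point is simply that thresholds are declared up front and checked automatically on every evaluation.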

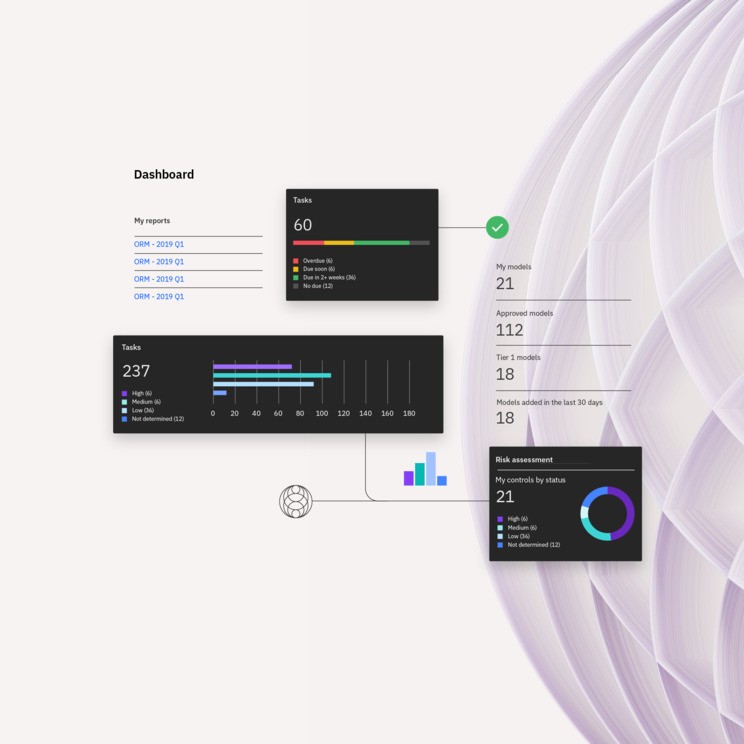

Amongst many stand-out features, I have picked out the model inventory functionality as a highlight. This can be compared to your organisation’s 🧭 ‘compass or map’ 🧭 for Artificial Intelligence – organising models by stage and use case, identifying visually where they are within the AI lifecycle. This directly supports prompt engineers and data scientists, including by enhancing collaboration and highlighting where investigative action is needed, such as when a model threshold metric has been breached. This provides an ⚓ anchor ⚓ for the AI lifecycle across both Predictive ML and LLMs and helps bake in AI process efficiency, repeatability and reliability – by design.
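As a rough illustration of the inventory idea, the Python sketch below organises models by use case and lifecycle stage and surfaces those needing investigation. The stage names, the `ModelEntry` fields and the example models are my own assumptions for illustration and are not taken from the product.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    # Illustrative lifecycle stages; a real platform may define others.
    DEVELOP = "develop"
    VALIDATE = "validate"
    DEPLOY = "deploy"
    MONITOR = "monitor"


@dataclass
class ModelEntry:
    """One row in a hypothetical model inventory."""
    name: str
    use_case: str
    stage: Stage
    kind: str  # e.g. "LLM" or "predictive ML"
    threshold_breached: bool = False


def group_by_use_case_and_stage(entries):
    """Index entries as inventory[use_case][stage] -> list of model names."""
    inventory = defaultdict(lambda: defaultdict(list))
    for entry in entries:
        inventory[entry.use_case][entry.stage].append(entry.name)
    return inventory


def needs_investigation(entries):
    """Names of models whose monitored metric has breached a threshold."""
    return [entry.name for entry in entries if entry.threshold_breached]


# Made-up example models covering both predictive ML and LLMs.
entries = [
    ModelEntry("credit-risk-v3", "credit decisions", Stage.MONITOR,
               "predictive ML", threshold_breached=True),
    ModelEntry("support-summariser", "customer support", Stage.VALIDATE, "LLM"),
    ModelEntry("credit-risk-v4", "credit decisions", Stage.DEVELOP, "predictive ML"),
]
inventory = group_by_use_case_and_stage(entries)
flagged = needs_investigation(entries)

for use_case, stages in inventory.items():
    for stage, names in stages.items():
        print(f"{use_case} / {stage.value}: {', '.join(names)}")
print("Needs investigation:", flagged)
```

Keeping both model types in one structure is the design choice that matters here: the same view answers "where is this model in its lifecycle?" and "which models need attention?" regardless of whether the model is an LLM or a predictive one.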

And reflecting on all the developments further, I personally believe there is a very strong reason for IBM having watsonx.governance as a distinct pillar within the watsonx platform – this is so much more than a dashboarding need or offering. As AI and its Generative AI variants become ever more core to intelligent, active and increasingly automated decision-making, especially at enterprise level, aspects such as ensuring AI explainability will be instrumental to driving optimal decisions, compliance, trust, value, and ultimately results. Ensuring adequate – but not over-complex – layers of governance and feedback loops built in by design is key. Think for example of the role of today’s modern Workforce/People Analyst Data Expert – ensuring deeper insights beyond standard reporting to the data points used and transformer layer decisions is a difference-maker!

Watsonx.governance provides organizations with a comprehensive toolkit to manage risk, enhance transparency, and prepare for compliance with future AI-related regulations. It allows businesses to automate AI governance processes, monitor models, and take corrective actions, ultimately providing increased visibility AND actionability. The first release includes 3 key features:

1. AI Transparency - Embedded model inventory and AI Factsheet capabilities

2. Monitoring and Alerts - Settable thresholds to provide auto-monitoring

3. The ability to govern both LLMs & Predictive ML – at the same time
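The factsheet capability in the first feature can be pictured as a structured metadata record per model. The Python sketch below is one hypothetical way to represent such a "nutrition label"; the field names are assumptions for illustration and do not reflect the actual AI Factsheet schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Factsheet:
    """Hypothetical 'nutrition label' for one model; fields are illustrative."""
    model_name: str
    model_type: str  # e.g. "LLM" or "predictive ML"
    intended_use: str
    training_data_sources: list
    owner: str
    evaluation_metrics: dict = field(default_factory=dict)

    def missing_fields(self) -> list:
        """Names of fields left empty, which an audit would likely question."""
        return [name for name, value in asdict(self).items()
                if value in ("", [], {})]

    def to_json(self) -> str:
        """Serialise the factsheet so it can be stored with audit records."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Made-up example: data sources and metrics have not been documented yet.
sheet = Factsheet(
    model_name="credit-risk-v3",
    model_type="predictive ML",
    intended_use="Pre-screening of credit applications",
    training_data_sources=[],
    owner="risk-analytics",
)
gaps = sheet.missing_fields()
print("Undocumented fields:", gaps)
print(sheet.to_json())
```

Capturing this metadata automatically, rather than by hand after the fact, is what makes a factsheet useful in support of audits: gaps such as undocumented data lineage become visible early.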

Key features of watsonx.governance include automated risk assessment and mitigation, continuous monitoring and alerting, an audit trail for demonstrating compliance with regulations, and integration with a wide range of IBM and third-party AI tools. This helps organisations to implement processes, including approval sequences such as credit decisions, that mitigate the potential for bias. More broadly still, the improved governance also supports more accurate demand forecasting and improved process efficiency and, by consequence, improved productivity and satisfaction.

It does so by increasing visibility into AI models, leading to explainable AI outcomes. Watsonx.governance also automates the governance process, saving time and enabling teams to attain realistic goals in reducing operational risk and addressing policy adherence and compliance challenges. Using the tool, teams can actively identify and reduce risks associated with models and convert AI regulations into actionable policies for automated implementation.

So, for any organisation on the spectrum from evaluating right through to implementing AI (and who isn’t!?), I believe watsonx.governance is addressing escalating compliance needs, as organisations, consumers, governments and ecosystem partners alike are increasingly seeking safety, security, explainability, transparency and accountability in their AI models.

Supporting this further still, IBM Consulting, drawing on the combined resources of IBM and partners, has also expanded its strategic expertise offerings to assist customers with navigating responsible AI scaling. Embedded in human-centric design principles, this holistic support covers organizational governance across people, processes and technology, and automated model governance too. This encompasses areas such as cybersecurity threat mitigation, organizational culture, AI ethics board development, training and accountability.

And there’s more! Just available, you can now govern generative AI models built both within watsonx.ai and on third-party platforms, including Amazon Bedrock, Microsoft Azure and OpenAI. More on this here!

From tremendous productivity gains right through to advancing business outcomes, Generative AI can enable significant value – but that value must be unlocked. In today’s AI Age, data and its protection remain your differentiator! Organisations of all sizes need the capacity to monitor, manage and govern from anywhere, making AI governance a business-critical skill. I believe this is exemplified by the launch of IBM’s watsonx.governance.

And as a final reflection today, I think this development is a tangible example of IBM’s long-standing commitment to trust, open innovation and ecosystem partnership, something also demonstrated in the recent acquisition of Manta Software Limited, a world-leading data lineage platform, to further advance and complement capabilities within the trifecta of watsonx.ai, watsonx.data and watsonx.governance. This also marks IBM’s eighth acquisition up to the end of 2023. And further still, this ethos is embodied in the announcement that IBM has partnered with Meta and over 50 founding members worldwide to form the AI Alliance, another milestone in advancing the safe and responsible use of AI. Looking ahead, this ability to responsibly govern AI at scale will only become more essential for enterprises as new AI regulation takes hold across the world. More on this soon and meanwhile, please take a look at watsonx.governance for yourself - all feedback most welcome! 🗨️

Warmest wishes, Sally

A highly experienced chief technology officer, professor in advanced technologies, and a global strategic advisor on digital transformation, Sally Eaves specialises in the application of emergent technologies, notably AI, 5G, cloud, security, and IoT disciplines, for business and IT transformation, alongside social impact at scale, especially from sustainability and DEI perspectives.

An international keynote speaker and author, Sally was an inaugural recipient of the Frontier Technology and Social Impact award, presented at the United Nations, and has been described as the "torchbearer for ethical tech", founding Aspirational Futures to enhance inclusion, diversity, and belonging in the technology space and beyond. Sally is also the chair for the Global Cyber Trust at GFCYBER.

Dr. Sally Eaves is a highly experienced Chief Technology Officer, Professor in Advanced Technologies and a Global Strategic Advisor on Digital Transformation specialising in the application of emergent technologies, notably AI, FinTech, Blockchain & 5G disciplines, for business transformation and social impact at scale. An international Keynote Speaker and Author, Sally was an inaugural recipient of the Frontier Technology and Social Impact award, presented at the United Nations in 2018 and has been described as the ‘torchbearer for ethical tech’ founding Aspirational Futures to enhance inclusion, diversity and belonging in the technology space and beyond.
