
The tragedy of lost lives and economic recession caused by the Covid-19 crisis is likely to result in an acceleration of digital transformation and adoption of AI technology.

A number of articles and leading firms have also forecast accelerated transformation, and I refer to them with hyperlinks in the section below:

Source for image above Practical Ecommerce, Twilio Covid-19 Accelerates Retail’s Digital Transformation;

Summary observations are as follows:

Setting the Scene: The State of AI Today

It was reported that venture capital investment in AI-related startups increased significantly in 2018, jumping by 72% compared to 2017, although the number of startups funded fell to 466 from 533 in 2017.

The PwC MoneyTree report stated that seed-stage deal activity in the US among AI-related companies rose to 28% in the fourth quarter of 2018, compared to 24% in the three months prior, while expansion-stage deal activity jumped to 32%, from 23%.

There will be an increasing international rivalry over the global leadership of AI. President Putin of Russia was quoted as saying that "the nation that leads in AI will be the ruler of the world". Billionaire Mark Cuban was reported in CNBC as stating that "the world's first trillionaire would be an AI entrepreneur".

Tim Dutton provided an overview of National AI strategies:

CBInsights reported that "The Chinese government is promoting a futuristic artificial intelligence plan that encompasses everything from smart agriculture and intelligent logistics to military applications and new employment opportunities growing out of AI."

"The US still dominates globally in terms of the number of AI startups and total equity deals. But it is gradually losing its global deal share to new hubs emerging outside the US"

Source for the 2 Images above: CBInsights Feb 2019 China Is Starting To Edge Out The US In AI Investment

Stanford Engineering reported in January 2019 that venture capitalist Kai-Fu Lee stated, "If data is the new oil, China is the new OPEC." The article also noted the greater amount of government support and the favourable regulatory environment towards AI.

"China’s advances in AI haven’t gone unnoticed by investors. Last year, 48 percent of the venture capital money directed to AI went to China, compared to 38 percent invested in U.S. ventures, Lee said."

In spite of the reports about the rise of China, I personally believe that the US and indeed Canada will remain important centres for R&D in AI, with the likes of MIT (and the Boston area), Stanford (and Silicon Valley), the University of Toronto (alongside the Toronto AI startup scene) and many others continuing to play a key role as AI hubs that generate innovative new products. However, national governments and business leaders need to understand the scale of change that is on its way. A great example that I recall is my Strategy professor at Business School explaining how the slide rule manufacturers were obsessed with each other and with their current product portfolios, with each one making slide rules with ever more polished chrome surfaces. As they focussed on the narrow vision within their frames (the analogy here is vision being restricted to the frame of a pair of spectacles), they failed to observe the arrival of the calculator at the edge of their field of vision. The arrival of the calculator marked the end of a 350-year dominance of the slide rule, as noted by Stanford University.

Another example that I recall is the personal story of a friend of mine, Scott Cohen, co-founder of the Orchard, now part of the senior management team at Warner Music Group, and a tech evangelist. He explained at a talk how the high street music stores that sold CDs were not interested in digital music and the arrival of the internet until it was too late and their market collapsed. I believe that a similar fate will await those firms that fail to understand the impact that 5G and AI will have upon their sectors over the next five years.

Once upon a time the CD was considered the industry standard across the music industry.

Today the mobile app with Machine Learning dominates.

The CD stores in the shopping mall and high street are gone and today Spotify and other digital music providers dominate. Bernard Marr explained in an article in Forbes "The Amazing Ways Spotify Uses Big Data, AI And Machine Learning To Drive Business Success."

The reason why there is a global race for AI can be seen in the market forecasts for the size of the AI market by 2025. For example, Tractica's forecast, "Artificial Intelligence Software Market to Reach $118.6 Billion in Annual Worldwide Revenue by 2025", states that "More than 300 AI Use Cases Will Contribute to Market Growth Across 30 Industries with the Strongest Opportunity in the Telecommunications, Consumer, Automotive, Business Services, Advertising, and Healthcare Sectors".

Source for Image above: Tractica

Change on the way in Healthcare

There are forecasts that AI in Healthcare will undergo a dramatic shift. For example, MarketsAndMarkets forecast that the market size of AI in Healthcare will jump from $2Bn in 2018 to $36.1Bn in 2025. Healthcare is likely to be a major issue in the US Presidential elections of 2020, and a matter of increasing importance across all OECD and emerging market nations through the next decade, as challenges with funding, resourcing and timely delivery are set to increase. The need to make sense of data, alongside cost pressures, will mean that Machine Learning makes big inroads across medical imaging, drug discovery, robotic surgery and remote medical services.

Source for the images above: MarketsAndMarkets Artificial Intelligence in Healthcare – Global Forecast to 2025.
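To put the MarketsAndMarkets figures in perspective, the growth rate they imply can be worked out with the standard compound annual growth rate (CAGR) formula. The short Python sketch below is my own illustration rather than anything taken from the report; the function name `cagr` is hypothetical, and the only inputs are the two endpoint values quoted above.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $2Bn in 2018 rising to $36.1Bn in 2025 (7 years).
healthcare_ai_cagr = cagr(2.0, 36.1, 2025 - 2018)
print(f"Implied CAGR for AI in Healthcare: {healthcare_ai_cagr:.1%}")
```

On these endpoints the implied growth works out at a little over 50% a year, which gives a sense of how steep the forecast curve is.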

It was reported in an article written by Angelica Mari, in relation to the Healthcare service in England, that the NHS would provide funds rewarding the usage of AI, with incentives to be given from April 2020.

Setting the Goals and Understanding the Machine Learning Process

Organisations are asking themselves about the goals and expected Return on Investment (ROI) from AI. Daniel Faggella in Emerj Artificial Intelligence Research sets out the business goals of organisations in "Machine Learning Marketing – Expert Consensus of 51 Executives and Startups".

Moreover, Daniel Faggella in Emerj Artificial Intelligence Research "The ROI of Machine Learning in Business: Expert Consensus" asked the question "What are the criterion needed for a company to derive maximal value from the application of Machine Learning in a business problem?" and set out the following three key points:

Sufficient data along with the necessity to pre-process and clean the data are the foundations for a successful Machine Learning project and need to be addressed before a Machine Learning project is embarked upon.

In addition, picking the correct problem to address is important, else the organisation might lose a year or more tackling an issue that is not of much use to the business team nor to the customers of the organisation. Greater collaboration and understanding between the commercial teams and the Data Science teams will be needed in order to ensure that the organisation is selecting the correct problem to address with Machine Learning.

Furthermore, finding and nurturing Data Science talent will be a key differentiator for organisations that succeed over the next five years. Stacy Stanford in "Good News for Job Seekers With Machine Learning Skills: There is a Shortage of Talent" observed that "The expanding applications for AI have also created a shortage of qualified workers in the field. Although schools across the country are adding classes, increasing enrollment and developing new programs to accommodate student demand, there are too few potential employees with training or experience in AI."

The significance of the shortage of talent in a world that will be increasingly data driven is that governments, universities, schools and companies across the world need to reconsider the education system, including retraining opportunities for experienced staff.

A research report from McKinsey, "Artificial Intelligence: The time to act is now", set out the potential for AI to disrupt particular industry sectors, observing "...the potential for disruption within an industry, which we estimated by looking at the number of AI use cases, start-up equity funding, and the total economic impact of AI, defined as the extent to which solutions reduced costs, increased productivity, or otherwise benefited the bottom line in a retrospective analysis of various applications. The greater the economic benefit, the more likely that customers will pay for an AI solution. Exhibit 2 shows the data that we compiled for 17 industries for AI-related metrics."

Edge Computing

I believe the organisations that work out how to leverage Edge Computing and the rollout of 5G over the next few years will emerge as the next wave of global superstars.

Edge Computing is a distributed computing paradigm which brings computer data storage closer to the location where it is needed. Eric Hamilton explains "Edge Computing is still considered a new paradigm, despite its history. That said, it continues to address the same problem: proximity. Moving the computer workload closer to the consumer reduces latency, bandwidth and overhead for the centralized data center, which is why it is a growing trend in big data"

Rob van der Meulen observes that "Edge Computing promises near real-time insights and facilitates localized actions."

"Around 10% of enterprise-generated data is created and processed outside a traditional centralized data center or cloud. By 2025, Gartner predicts this figure will reach 75%. For example, in the context of the Internet of Things (IoT), the sources of data generation are usually things with sensors or embedded devices."

Gartner's Top 10 Trends Impacting Infrastructure & Operations for 2019 features both AI and Edge Computing.

"According to Gartner, global AI derived business value will reach nearly $3.9 trillion by 2022." Gartner also observed that one may wonder:

Edge Computing enables closer proximity, in terms of where workloads are based relative to a customer, for solving a particular business issue. The laws of physics and matters of economics and land all bear on the specific circumstances in which to use Edge Computing. The extract from Gartner below provides a good summary:

Edge computing can be used to reduce latency and will experience increasing growth to satisfy the demand for high-quality digital experiences moving forward

Gartner also noted that websites with 2 seconds of delayed loading will lose customers, and emphasised the role that reduced latency will play in enhanced digital experiences, with the trend to occur from 2020 to 2023. An article by Yoav Einav observed that Amazon found that every 100ms of latency cost them 1% in sales.
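The physics behind the proximity argument can be illustrated with a rough calculation of signal propagation delay. The Python sketch below is my own, not Gartner's: it assumes signals travel through optical fibre at about two thirds of the speed of light, and the three distances are hypothetical values chosen only to contrast a nearby edge node with a far-away data centre. It ignores routing, queuing and processing time, so the results are lower bounds rather than measured latencies.

```python
SPEED_OF_LIGHT_KM_PER_S = 299_792.458  # speed of light in a vacuum
FIBRE_VELOCITY_FACTOR = 2 / 3          # assumption: rough figure for optical fibre


def round_trip_ms(distance_km: float,
                  velocity_factor: float = FIBRE_VELOCITY_FACTOR) -> float:
    """Lower bound on round-trip propagation delay in milliseconds."""
    one_way_s = distance_km / (SPEED_OF_LIGHT_KM_PER_S * velocity_factor)
    return 2 * one_way_s * 1000


# Hypothetical distances, for illustration only.
for label, km in [("edge node", 10),
                  ("regional data centre", 500),
                  ("distant cloud region", 3000)]:
    print(f"{label:>22}: {round_trip_ms(km):6.2f} ms round trip")
```

Under these assumptions a workload 3,000 km away cannot respond in less than about 30ms however fast the servers are, whereas one 10 km away has a propagation floor of roughly a tenth of a millisecond.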

The 5th generation of mobile networks is referred to as 5G. It represents a major step forwards from the 4G LTE networks of today with the intention of enabling the connectivity between billions of connected devices and massive data that the Internet of Things (IoT) will entail. I prefer to refer to this era as an Intelligent Internet of Things, once we deploy AI onto the devices around us.

Source for image above: 5G use case families (source: ITU-R, 2015)

The performance levels for 5G will be focused on ultra-low latency, lower energy consumption, high data rates, and massive device connectivity. The era of 5G will be a world where cloud servers continue to be used, and also one in which we witness the rise in prominence of AI on the edge (on device), where the data is generated, enabling real-time (or very near real-time) responses from intelligent devices. 5G and Edge Computing with machine to machine communication will be of great importance for autonomous systems with AI such as self-driving cars, drones, autonomous robots and intelligent sensors within the context of IoT.

In the initial stages 5G will operate alongside current 4G networks before shifting to dedicated networks in the future.

A number of market analysts predict that it will take until 2022 or later before we see the real scaling and more exciting developments with 5G. IDC's inaugural forecast for the worldwide 5G network infrastructure market shows total 5G and 5G-related network infrastructure spending growing from approximately $528 million in 2018 to $26 billion in 2022.

Matthew Bohlson in InvestforIntel observed that "By 2021, the number of 5G connections is forecast to reach a figure of between 20 million and 100 million. Some estimates put the figure at 200 million."

Global 5G smartphone shipments are forecast to grow from 2 million units in 2019 to an impressive 1.5 billion in 2025. Source for image below: Intel

It is not expected that Apple will launch the first 5G enabled iPhone until 2020, with Jacob Siegel stating "By the time the 2020 iPhone models land, presumably in September of that year, 5G will still be in its infancy."

5G is expected to achieve speeds 100 times faster on average than 4G, with latencies as low as 1ms. To illustrate what this means, Patrik Kulp in Adweek observes that "With 5G wireless service operating at peak speeds, you could theoretically download a full-length HD movie in the time it takes you to read this sentence."

Park May in EDN explains "To reduce response time, 5G uses a scalable orthogonal frequency-division multiplexing (OFDM) framework with different numerologies. Within a 1ms time duration, six separate slot configurations are available, e.g. 1, 2, 4, 8, 16, and 32 slots. The minimum size of a transport block could be reduced to a minimum of 0.03125ms based on the new configuration as shown in "
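The arithmetic in the quote above can be checked in a few lines. The Python sketch below simply divides the 1ms duration by each of the six slot counts listed; the variable and function names are my own, and the index `mu` is just a label for each configuration, used on the assumption that the slot counts are successive powers of two.

```python
SUBFRAME_MS = 1.0  # the 1ms time duration referred to in the quote


def slot_duration_ms(slots_per_subframe: int) -> float:
    """Length of one slot when the 1ms period is split into equal slots."""
    return SUBFRAME_MS / slots_per_subframe


# The six configurations from the quote: 1, 2, 4, 8, 16 and 32 slots.
slot_configs = [2 ** mu for mu in range(6)]

for mu, slots in enumerate(slot_configs):
    print(f"mu={mu}: {slots:2d} slots per ms -> {slot_duration_ms(slots):.5f} ms per slot")
```

The last configuration, 32 slots, gives 1/32 of a millisecond per slot, which is the 0.03125ms minimum mentioned in the quote.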

By comparison, the current 4G networks across cities in the US experience latencies of between 40.2ms and 60.5ms.

A report from Accenture forecast that the economic impact of 5G would amount to 3 million jobs, $275 billion of investment and $500 billion of annual GDP growth.

The report from Accenture also forecast that the economic benefits of 5G with smart cities would be substantial. For example, a city around the same size as Chicago could expect to gain 90,000 jobs and $14Bn of GDP.

Source for image above: Accenture strategy How 5G can help municipalities become smart cities.

The report from Accenture stated "Based on research into the benefits of adopting the next generation of wireless technology, we expect 5G could help create 2.2 million jobs, and approximately $420 billion in annual GDP, spread across small, medium and large communities in the US."

"Another of 5G’s contributions to generating jobs and economic growth will be providing the benefits of high-speed broadband to the 5% of Americans who currently do not have access. Because faster Internet connections allow users to utilize video applications for telecommuting, or participate in e-learning courses that give them additional skill sets or certifications, their employability and earning power increases, thus creating a more competitive workforce in different localities – which would, in turn, attract higher-paying jobs to these communities. If localities embrace 5G, and citizens who are not already online become adopters, we could see an additional $90 billion in GDP, and 870,000 in job growth."

An article published by Ericsson Transforming Healthcare with 5G notes that "Proponents also imagine 5G streamlining and expanding Healthcare services. Faster connections will make it easier to reach far-flung patients with limited hospital access through telehealth video services and remote-controlled equipment. Ambulances will be able to transmit real-time information on patients’ vital signs so hospitals can prepare for their arrival."

Source for the image above: Ericsson A healthy obsession with innovation

The article further notes that the well-being of the patient can be further enhanced by the deployment of live-in robots, enabled by the enhanced connectivity of 5G, which in turn enable telemedicine to provide continuous critical care to the patient. Filippo Cavallo, Assistant Professor at the BioRobotics Institute, is quoted: “5G can enable us to implement complex Healthcare services and improve the capability of robots to learn to recognize new objects and perform complex tasks. We can have robots to support in assisting elderly people, for example.”

The faster speeds of 5G will enable technologies such as AR and VR that struggle with latency to work alongside new standards such as JPEG XS, and will bring autonomous vehicles and robots a step closer to entering the real world.

Leading Fintech influencer, Jim Marous (@JimMarous ) asked the question about how 5G will impact banking with Brett King (@BrettKing ) CEO of Moven responding that "5G will increase the expectation for low latency, real time engagement."

Another leading Fintech influencer, Spiros Margaris (@SpirosMargaris) of Margaris Ventures, stated, "For me, it is perfectly clear that many promising business cases in the Fintech and Insurtech industry will be driven to a large degree by 5G, AI/ML and edge computing."

This view was echoed by another Fintech influencer, Xavier Gomez (@Xbond49) co-founder of invyo.io who explained that "AI and 5G technologies will enhance and augment people so as to enable gains in efficiency and the emergence of new opportunities across the financial sector for those who learn how to leverage these technologies in the near future"

In February 2019 it was reported that Ericsson, Australian service provider Telstra, and Commonwealth Bank of Australia are teaming up to explore 5G edge computing use cases and network capabilities for the financial sector by testing end-to-end banking solutions over 5G.

Penny Crossman in the American Banker reported on how 5G could shape the future of banking with the following observations: “5G will begin to unlock some interesting things that we've dreamt about or imagined for some time,” said Venturo, the chief innovation officer at U.S. Bank. “5G is exponentially more powerful than 4G; it has such low latency and such high bandwidth that for a lot of applications, it will make a lot of sense to use 5G instead of Wi-Fi.”

"And 5G could support the use of virtual reality in branches, Venturo suggested." The use cases here would be for using visual aids to assist in explaining complex subjects and financial products to customers. Furthermore, the article also noted the potential for 5G mobile to enable pop-up branches, with Abhi Ingle, senior vice president of digital, distribution and channel marketing at AT&T, stating “Think of taking the physical branch to where the crowds are, featuring all the services that a brick-and-mortar store could...Yet they could be turned on and turned off in a simple manner.”

The payments sector will be hugely transformed for a frictionless experience without the need to carry cash or even plastic. For example it has been reported by Shannon Liao in the Verge in March 2019 that using one's face to enter a subway may become the norm in the future with a local subway operator in Shenzhen (China’s tech capital) testing facial recognition subway access, powered by a 5G network with the fare automatically deducted from a linked payment method.

It is submitted that payment by face will become an increasingly common payment method over the course of the next five years. For example, it was reported in January 2019 that China's first facial recognition payment-based shopping street opened in Wenzhou, with Yang Peng, VP of Ant Financial, stating that "Alipay has upgraded the payment system using a 3D structured light camera and can ensure accuracy of 99.99 percent."

Image above: "A customer has her face scanned by a facial recognition system to pay for her purchase at a store in the century-old neighboring streets, Wuma Street and Chan Street, in Wenzhou city, East China's Zhejiang province, on Jan 16, 2019. [Photo/IC]"

In terms of the insurance sector, there is likely to be a major impact driven by the arrival of autonomous cars (reduced accidents meaning fewer claims), enhanced security at homes and commercial properties (reduced theft and other losses) due to security cameras that can detect intruders with Deep Learning on the camera, and smarter home systems and predictive analytics on commercial premises that reduce incidents of faulty appliances and equipment.

Robotic Surgery and 5G

France24 reported in February 2019 that a "Doctor performs first 5G surgery in step towards robotics dream". The article noted that a doctor in Spain conducted the world's first 5G-powered telementored operation. "During the operation the 5G connection had a lag time of just 0.01 seconds, compared to the 0.27-second latency period with the 4G wireless networks..."

In March 2019 Geek.com reported on the World’s First 5G-Powered Remote Brain Surgery Performed in China whereby the surgeon, Dr. Ling Zhipei, manipulated the instruments in Beijing from Sanya City, in Hainan, located about 1,864 miles (3,000 kilometers) away, with a computer using a 5G network powered by China Mobile and Huawei.

CNN reported in April 2019 that Verizon launches first 5G phone you can use on a 5G network in US. LightReading observed on the 14th May that "Verizon has announced it will launch at least 20 more 5G cities in 2019, while Sprint says it will start its first 5G markets in the coming weeks."

"Twenty cities are announced now for 2019, with more to come. Markets announced include Atlanta, Boston, Charlotte, Cincinnati, Cleveland, Columbus, Dallas, Des Moines, Denver, Detroit, Houston, Indianapolis, Kansas City, Little Rock, Memphis, Phoenix, Providence, San Diego, Salt Lake City and Washington DC."

"Sprint, meanwhile, is readying to launch its first 5G markets in the coming weeks. Atlanta, Chicago, Dallas and Kansas City are supposed to be the first 5G markets, while Houston, Los Angeles, New York, Phoenix and Washington DC will follow by the end of June."

On the 30th May 2019 The Financial Times reported that EE will be the first to launch a 5G network in the UK on 30 May. Vodafone is following on 3 July. O2 and Three expect to launch their networks later this year.

"The UK is one of the first countries in Europe to start rolling out 5G but Switzerland is the furthest along with 227 areas, according to the tracking firm Ookla. There are also limited areas in Finland, Italy, Poland and Spain."

"5G networks are also up and running in... Argentina, South Africa, Qatar, United Arab Emirates, China, South Korea and Australia."

Dr Anna Becker, CEO of Endotech.io and PhD in AI explained that "5G is a necessary step though it is still in very early stages. This also applies to Machine Learning for IoT as we still need to build the infrastructure to enable efficient machine to machine communication. There are hundreds of tasks that we can optimize, personalize and eliminate thanks to embedded Machine Learning using machine to machine communication and intelligent sensors and whilst I am an optimist, it will take time for the adoption. Insurance and Finance sectors will definitely be affected but it will also take time due to attempts to digest the technological upgrades needed. In areas that entail daily routines, B2C will start adopting it and it will flourish much quicker."

5G & Autonomous Vehicles

Image Above Editorial Credit Steve Lagreca / Shutterstock.com, Mercedes F 015 Concept car at the North American International Auto Show (NAIAS)

Autonomous cars are expected to start making their presence felt in the mid 2020s, with Grand View Research predicting that "The global self‑driving cars and trucks market size is expected to be approximately 6.7 thousand units in 2020 and is anticipated to expand at a CAGR of 63.1% from 2021 to 2030."

Source for Image Above Grand View Research
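To see what a 63.1% compound annual growth rate means in practice, the Grand View Research figures can be projected forward year by year. The Python sketch below is my own illustration and not part of the report. It assumes the 6.7 thousand units in 2020 is the base and that the growth rate applies to each of the ten years from 2021 to 2030; the function name `project` is hypothetical.

```python
def project(base_units: float, annual_growth: float, periods: int) -> float:
    """Compound a base figure forward by a fixed annual growth rate."""
    return base_units * (1 + annual_growth) ** periods


BASE_2020_UNITS = 6_700   # "approximately 6.7 thousand units in 2020"
CAGR_2021_2030 = 0.631    # "a CAGR of 63.1% from 2021 to 2030"

# Assumption: 2020 is year zero and growth compounds once per year to 2030.
projection = {2020 + n: project(BASE_2020_UNITS, CAGR_2021_2030, n)
              for n in range(11)}

for year in (2020, 2025, 2030):
    print(f"{year}: ~{projection[year]:,.0f} units")
```

On these assumptions the market would reach just under 900,000 units by 2030, which shows how quickly a small base grows at that rate.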

Bijan Khosravi writes in the Forbes article "Autonomous Cars won't work until we have 5G" that "Autonomous cars will become a reality, but it won’t happen until 5G data networks are ubiquitous. The current 4G network is fast enough for us to share status updates or request rides, but it doesn’t have the capability to give cars the human-like reflexes that might have prevented the Uber accident."

"Self-driving vehicles are just one of the incredible technologies that will be unlocked by 5G. Virtual reality, smart cities and Artificial Intelligence all sit on the cusp of major breakthroughs—they just need the data network to catch up."

The data processing requirements for autonomous vehicles to replicate human reflexes are substantial, with Dr Joy Laskar, the CTO of Maja Systems, quoted as stating that "with an advanced Wi-Fi connection, it will take 230 days to transfer a weeks-worth of data from a self-driving car."

Patrik Nelson in Network World stated that "Intel forecast that just one autonomous car will use 4,000 GB of data/day and that self-driving cars will soon create significantly more data than people—3 billion people’s worth of data"

Image above Network World, Intel

The article explained that the deluge of data is due to the number of sensors on the car, with the radar alone expected to generate 10 to 100 KB per second and the cameras forecast to generate between 20 and 40 MB per second. Intel therefore forecast that each autonomous car will generate the same amount of data as 3,000 people, and that 1 million autonomous cars will generate the same amount of data as 3 billion people.
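The Intel figures above are easy to sanity check. The Python sketch below is my own arithmetic on the numbers quoted from the article (4,000 GB per car per day, one car equal to 3,000 people, and a fleet of 1 million cars); the variable names are hypothetical and decimal units are assumed, so that 1 exabyte equals one billion gigabytes.

```python
GB_PER_CAR_PER_DAY = 4_000          # Intel forecast quoted above
PEOPLE_EQUIVALENT_PER_CAR = 3_000   # one car generates as much data as 3,000 people
CARS = 1_000_000                    # fleet size used in the article

# Daily data per person implied by the two Intel figures.
gb_per_person_per_day = GB_PER_CAR_PER_DAY / PEOPLE_EQUIVALENT_PER_CAR

# Number of people whose data output matches the fleet.
people_equivalent = CARS * PEOPLE_EQUIVALENT_PER_CAR

# Total fleet output, assuming decimal units (1 EB = 1e9 GB).
fleet_exabytes_per_day = CARS * GB_PER_CAR_PER_DAY / 1e9

print(f"Implied data per person: {gb_per_person_per_day:.2f} GB/day")
print(f"People equivalent of the fleet: {people_equivalent:,}")
print(f"Fleet total: {fleet_exabytes_per_day:.1f} EB/day")
```

The figures are consistent with one another: a million cars correspond to 3 billion people, and the fleet would produce around 4 exabytes of data a day.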

The use cases of autonomous cars will include helping the elderly maintain mobility. Theodora Lau (@psb_dc ) explained that "AI combined with IoT will play critical roles in enhancing the lives of older adults as more chose to live independently at home through their later years in life. Such emerging technologies can help assist their wellbeing and reduce their isolation."

An article in Automotive News Europe observed that Renault unveiled an autonomous EZ-Pod concept intended for use as transportation for elderly shoppers or for dropping off children at school.

Image above Renault EZ-Pod, Source Automotive News Europe

Machine Learning is also being applied to 3D printing. Researchers at Purdue University are increasing the precision and consistency of 3D printing with a new AI-powered software tool. An ongoing issue with 3D printing has always been accuracy, especially when it comes to parts that need to fit together with extreme precision. The new technology addresses this shortcoming.

“This has applications for many industries, such as aerospace, where exact geometric dimensions are crucial to ensure reliability and safety,” said Sabbaghi, assistant professor of statistics at Purdue University.

It was reported in April 2019 that Intellegens, a spin out from the University of Cambridge, developed a Machine Learning algorithm for 3D printing new alloys. Business Weekly reported in May 2019 that "In a research collaboration between several commercial partners and the Stone Group at the University of Cambridge, the Alchemite™ Deep Learning algorithm was used to design a new nickel-based alloy for direct laser deposition, without the need for expensive, speculative experiments – saving the team involved an estimated 15 years of research and in the region of $10m in R&D expenditure."

The vision is for end-to-end production that comprises AI with 3D printing alongside current manufacturing methods resulting in exciting opportunities to obtain gains in efficiency and customer satisfaction.

Machine Learning will play a key role in reducing the risk of errors across industrial-scale 3D printing. An article in MIT by Zach Win, "A 3-D printer powered by machine vision and Artificial Intelligence", observed that "Objects made with 3-D printing can be lighter, stronger, and more complex than those produced through traditional manufacturing methods. But several technical challenges must be overcome before 3-D printing transforms the production of most devices."

"Commercially available printers generally offer only high speed, high precision, or high-quality materials. Rarely do they offer all three, limiting their usefulness as a manufacturing tool. Today, 3D printing is used mainly for prototyping and low-volume production of specialized parts."

"Now Inkbit, a startup out of MIT, is working to bring all of the benefits of 3D printing to a slew of products that have never been printed before — and it’s aiming to do so at volumes that would radically disrupt production processes in a variety of industries."

"The company is accomplishing this by pairing its multimaterial inkjet 3-D printer with machine-vision and machine-learning systems. The vision system comprehensively scans each layer of the object as it’s being printed to correct errors in real-time, while the machine-learning system uses that information to predict the warping behavior of materials and make more accurate final products."

It is expected that 3D printing combined with Machine Learning will massively disrupt the manufacturing and retail sectors over the course of the next five years. One example is in relation to spare parts where PWC expect that Machine Learning and 3D printing will play a dominant role over the next few years.

The Future of Retail

The retail sector is one that is set to be transformed completely in the period to 2025. Bricks & Mortar retailers are suffering heavily in the USA, UK and many other parts of the world. In the US there has been the Chapter 11 filing for Sears, and it has recently been reported that JC Penney faces a challenging outlook in 2019. The FT reported that "Falling sales at big US department stores spark sell-off" with the likes of Nordstrom and Kohl's facing challenges. In the UK many large retailers have fallen into severe trouble or disappeared, for example House of Fraser, Debenhams and Select Fashion.

The danger for the retail sector is that the outlook continues to look challenging, with many retailers locked into legacy systems (including inventory management systems) and cultural practices that are less relevant to the consumers of today, who are used to the convenience and efficiency of online and mobile. Those retailers that do survive and flourish into the 2020s will be those that adopt AI and use edge computing and 5G in an intelligent manner to enhance the customer journey and personalise the experience. Amazon is making major inroads and is even now moving into bricks and mortar stores. The Amazon Go stores that opened in the US utilised Deep Learning and allowed for a shopping experience that is free from queuing. It was announced on the 4th June 2019 that Amazon was opening 10 Clicks & Mortar stores in the UK.

Moreover, Amazon has understood the importance of being data driven and has been able to leverage AI and recommendation systems to grow. Blake Morgan reported on "How Amazon Has Reorganized Around AI And Machine Learning" with "AI also playing a huge role in Amazon’s recommendation engine, which generates 35% of the company’s revenue."

Jim Marous expressed a similar vision in the Financial Brand for physical banking to adopt advanced technology alongside the physical network known as bricks & clicks to increase digital integration.

The bricks & mortar retail stores that survive the next 5 years will be those that adopt digital technology, including AI, to become bricks & clicks stores whereby the store is communicating with and responding to the needs of the customer. AI combined with mobile and AR will enable mass personalisation at scale.

A press release entitled "Gartner Says 100 Million Consumers Will Shop in Augmented Reality Online and In-Store by 2020" observed how AR and VR will rely upon 5G to change the customer experience inside and outside retail stores. The 5G mobile network technology represents an opportunity to accelerate the adoption of AR and VR inside stores.

“Gartner expects that the implementation of 5G and AR/VR in stores will transform not only customer engagement but also the entire product management cycle of brands,” said Sylvain Fabre, senior research director at Gartner. “5G can optimize warehouse resources, enhance store traffic analytics and enable beacons that communicate with shoppers’ smartphones.”

I believe that the future retail experience by the mid 2020s will be less about walking around shelves and railings, and more about bricks & mortar stores adopting advanced digital technologies, with stores holding less physical inventory within the store itself. The retailers who will continue to exist five years from now will be the ones that transition towards a best in class, frictionless customer experience (whilst keeping internal costs down) through the application of AR and VR combined with Machine Learning, for example with smart mirrors and in store entertainment. The ability to understand the customer and respond to their needs in terms of product offerings and combinations of products will become the norm.

For example, imagine a future store built around digital technology, where a shopper can have their individual measurements taken, select an item, and then sit in a digital lounge area enjoying a cup of coffee and viewing entertainment on a VR headset whilst the item is produced for them in their particular size.

In May 2019 CBInsights published an incisive report, "The Future Of Fashion: From Design To Merchandising, How Tech Is Reshaping The Industry", and explained the potential impact of technologies such as AR, VR, 3D printing and 3D design alongside AI to disrupt the sector.

Source for Image Above: CBInsights, The Future Of Fashion: From Design To Merchandising, How Tech Is Reshaping The Industry

The wider adoption of AI will assist retailers in understanding the needs of their customers and in providing the level of customer experience that is paramount to their future success. Changes in practices that enhance the customer experience, alongside the adoption of intelligent technology in store, will be key for retailers such as the John Lewis group, which owns the Waitrose supermarket chain, to rebound from their recent 99% fall in profits.

AI will increasingly be on the edge, with intelligent devices around us, and will actively participate in the global economy beyond the social media giants. It is highly unlikely that the world in 2025 will be one of Terminator machines and Skynet, as we will not have reached AGI by then. For example, the VentureBeat article entitled "Geoffrey Hinton and Demis Hassabis: AGI is nowhere close to being a reality" observes that "At the current pace of change, analysts at the McKinsey Global Institute predict that, in the US alone, AI will help to capture 20-25 percent in net economic benefits (equating to $13 trillion globally) in the next 12 years."

This will be the era where Machine Learning will enable us to make sense of the big data that is being generated. It will be a data driven world in which autonomous systems such as self-driving cars, autonomous drones and robots will have emerged into the world around us, and in which AR and VR combined with Machine Learning will have become a common User Interface across multiple industries. It will also be a world where the organisations that succeed will be the ones that understand the importance of capturing and cleaning data, of Data Science capabilities, and of developing the skills and talents of their staff to understand the commercial applications of Machine Learning. That in turn will enable enhanced customer experiences and the efficient automation of repetitive tasks, whilst developing the new products that the era of 5G and AI will make possible.

Breakthroughs in research will occur whereby Deep Neural Networks will require less data for training than they have done in the past. For example, as noted in an earlier article that I published, Jonathan Frankle and Michael Carbin of MIT CSAIL published The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks. The summary by Adam Conner-Simons in Smarter training of neural networks describes the MIT CSAIL project as showing that neural nets contain "subnetworks" 10x smaller that can learn just as well, and often faster.

The team’s approach isn’t particularly efficient now - they must train and “prune” the full network several times before finding the successful subnetwork. However, MIT professor Michael Carbin says that his team’s findings suggest that, if we can determine precisely which part of the original network is relevant to the final prediction, scientists might one day be able to skip this expensive process altogether. Such a revelation has the potential to save hours of work and make it easier for meaningful models to be created by individual programmers and not just huge tech companies.
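The train, prune and repeat loop described above can be sketched in a few lines. This is only an illustrative sketch and not the MIT team's code: `train_stub` is a hypothetical placeholder that stands in for real training, and the three rounds and 50% pruning fraction are assumptions chosen to keep the example small.

```python
import numpy as np

def magnitude_mask(weights, mask, prune_fraction):
    """Return a new mask that drops the smallest-magnitude surviving weights."""
    surviving = np.abs(weights[mask])
    n_prune = int(prune_fraction * surviving.size)
    if n_prune == 0:
        return mask.copy()
    # Magnitude of the n_prune-th smallest surviving weight.
    threshold = np.sort(surviving)[n_prune - 1]
    return mask & (np.abs(weights) > threshold)

def train_stub(weights, mask, rng):
    """Hypothetical stand-in for training: perturbs surviving weights only."""
    return (weights + 0.1 * rng.standard_normal(weights.shape)) * mask

rng = np.random.default_rng(0)
initial_weights = rng.standard_normal((8, 8))   # the original initialisation
mask = np.ones(initial_weights.shape, dtype=bool)

for _ in range(3):  # assumed number of prune rounds
    # Rewind to the original initialisation, keeping only unpruned weights.
    trained = train_stub(initial_weights * mask, mask, rng)
    mask = magnitude_mask(trained, mask, prune_fraction=0.5)

sparsity = 1.0 - mask.mean()
# Candidate subnetwork: the original initial values of the surviving weights.
winning_ticket = initial_weights * mask
print(f"weights kept: {mask.sum()} of {mask.size} (sparsity {sparsity:.3f})")
```

The point of the sketch is the rewind step: after each round the surviving weights are reset to their original initial values rather than kept at their trained values, which is why the full network has to be trained several times before the subnetwork is found.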

Furthermore, in another development, Josh Tenenbaum of MIT led a team to create a program known as the neuro-symbolic concept learner (NS-CL) that learned about the world in a similar manner to how a child may learn through talking and looking (although in a more simplistic manner).

The paper, entitled The Neuro-Symbolic Concept Learner: Interpreting Scenes, Words, and Sentences from Natural Supervision, is a joint paper between MIT CSAIL, MIT Brain Computer Science, MIT-IBM Watson AI Lab and Google DeepMind.

Will Knight in the Technology Review observed that:

"More practically, it could also unlock new applications of AI because the new technology requires far less training data. Robot systems, for example, could finally learn on the fly, rather than spend significant time training for each unique environment they’re in."

“This is really exciting because it’s going to get us past this dependency on huge amounts of labeled data,” says David Cox, the scientist who leads the MIT-IBM Watson AI lab.

Geoff Hinton published a research paper entitled "Dynamic Routing Between Capsules" in 2017 and this is an area of ongoing research. Aryan Misra provides a useful overview in an article published in Jan 2019 in TowardsDataScience, "Capsule Networks: The New Deep Learning Network", with the following summary: "Capsule nets are still in a research and development phase and not reliable enough to be used in commercial tasks as there are very few proven results with them. However, the concept is sound and more progress in this area could lead to the standardization of Capsule Nets for Deep Learning image recognition."

I restate the opinion that I set out in an earlier article about how AI will impact your daily life in the 2020s.

Every single sector of the economy will be transformed by AI and 5G in the next few years. Autonomous vehicles may result in reduced demand for cars, and car parking spaces within towns and cities will be freed up for other usage. It may be that people will not own a car and will rather opt to pay a fee for a car pooling or ride share option, whereby an autonomous vehicle will pick them up and take them to work or shopping and then, rather than remaining stationary in a car park, the same vehicle will move on to its next customer journey. The Financial Times reported that Credit Suisse forecast that new car sales would be materially impacted by autonomous cars by the end of the next decade (just slightly beyond the focus of this blog but worth noting).

Hanish Batia in an article entitled "Connected Car Opportunity Propels Multi-Billion-Dollar Turf War" in Counterpoint in 2018 noted that "Over the next five years, number of cars equipped with embedded connectivity will rise by 300%."

Source for Image above: Counterpoint

The interior of the car will use AR with Holographic technologies to provide an immersive and personalised experience, using AI to provide targeted and location-based marketing to support local stores and restaurants. Machine to machine communication will be a reality, with computers on board vehicles exchanging braking, speed, location and other relevant road data with each other, and techniques such as multi-agent Deep Reinforcement Learning may be used to optimise the decision making by the autonomous vehicles. Deep Reinforcement Learning refers to the combination of Deep Learning and Reinforcement Learning (RL). This area of research has potential applications in Fintech, Healthcare, IoT and autonomous systems such as robotics, and has shown promise in solving complicated tasks that require decision making and that in the past had been considered too complex for a machine. Multi-agent reinforcement learning seeks to give agents that interact with each other the ability to learn collaboratively as they adapt to the behaviour of other agents. Furthermore, object detection using CNNs will also occur on the edge in cameras (in autonomous systems and also in security cameras for intruder detection).

Edge Computing hardware will continue to grow, with the likes of Nvidia offering the Jetson TX2 and Xavier and more recently announcing the Jetson Nano. In March 2019 it was announced that Google’s Edge TPU Debuts in New Development Board. Furthermore, software frameworks for Edge Computing will also continue to grow; for example, in March 2019 it was announced that Google launched TensorFlow Lite 1.0 for mobile and embedded devices. These trends will continue in both hardware and software frameworks, thereby continuing the deployment of AI at the Edge (on device).

I agree with Andrew Ng (@AndrewYNg) that we are not on the way to a new AI winter, in particular with the amount of data being generated only set to increase as we move to a world of Edge Computing and 5G with many connected devices requiring Machine Learning to make sense of the deluge of data.

Andrew Ng stated on 17th August 2018 that "We need a Goldilocks Rule for AI:"

"- Too optimistic: Deep learning gives us a clear path to AGI!"

"- Too pessimistic: DL has limitations, thus here's the AI winter!"

"- Just right: DL can’t do everything, but will improve countless lives & create massive economic growth."

This video by NVIDIA demonstrating the Jetson TX1 on a drone and allowing it to navigate through a forest without GPS is an example of Deep Learning on the Edge and what we will become accustomed to during the course of the next 5 years.

Source for the video: https://www.youtube.com/watch?v=4_TmPA-qw9U

Vodafone reported in 2018 that it had conducted the first holographic phone call in the UK and their graphics shown below provide a good example about the potential of 5G to transform the customer experience and the Healthcare sector.

The video below shows the holographic phone call with 5G, see: Vodafone making the UK’s first holographic phone call over 5G

Source for the video: https://youtu.be/Ilq2qtFHTf8

For more see the Vodafone media centre website.

AI Research

For those interested in research papers that provide insights into the areas of Machine Learning that I believe will be important over the next few years, I suggest looking at the following:

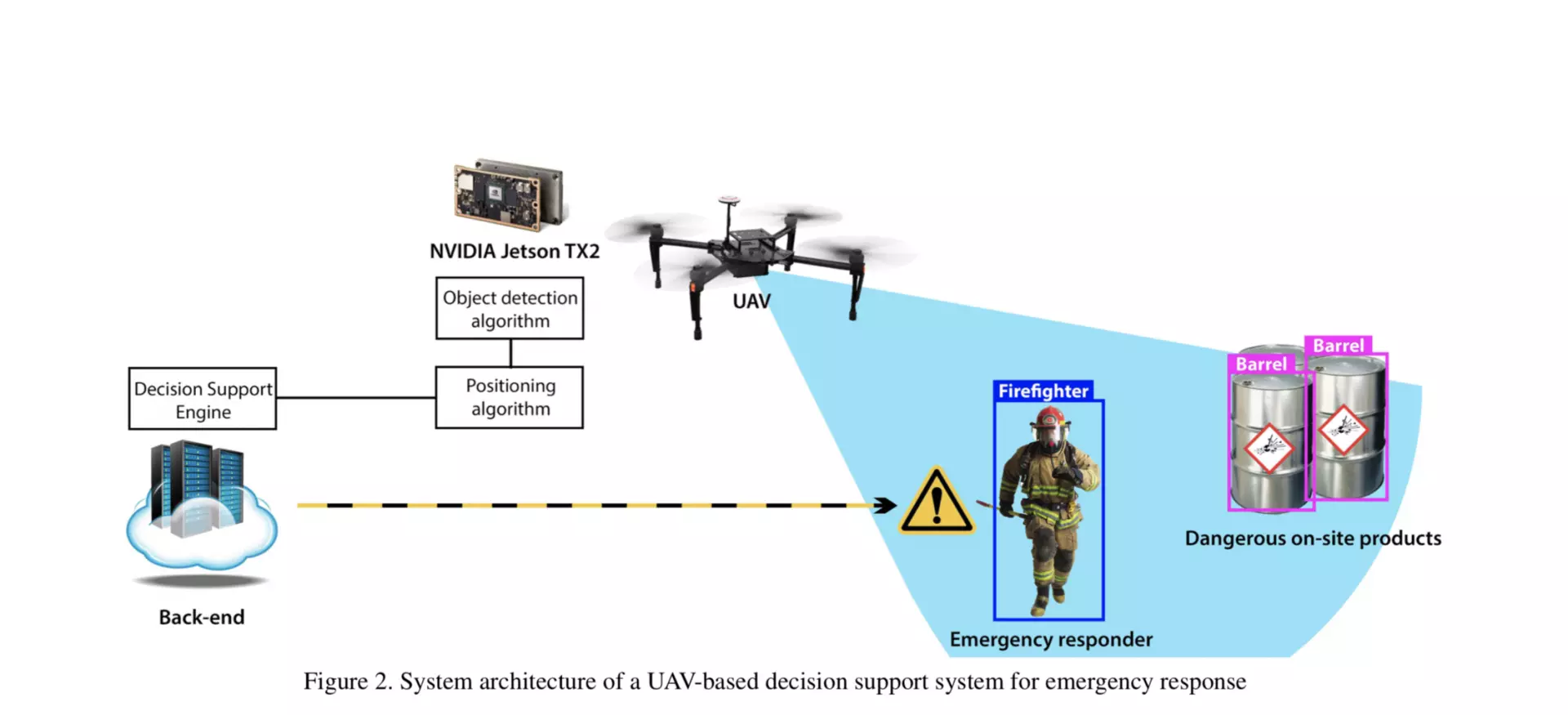

An example of Deep Learning on the Edge, using Convolutional Neural Networks (CNNs) on a drone, is provided by Tijtgat et al. in Embedded Real-Time Object Detection for a UAV Warning System, which uses the YOLOv2 object detection algorithm running on an NVIDIA Jetson TX2. Tijtgat et al. explain that "An autonomous UAV captures video data that the on-board hardware processes. In the example, a ’Firefighter’ and two ’Barrel’ instances are detected and their position is calculated. This information is relayed to the decision support system, that generates an alert if the firefighter gets too close to the dangerous products." A CNN is a type of Deep Neural Network that uses convolutions to extract patterns from the input data in a hierarchical manner. It is mainly used on data that has spatial relationships, such as images.

Source for image above: Tijtgat et al. Embedded Real-Time Object Detection for a UAV Warning System
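To make the convolution idea mentioned above more concrete, the following minimal sketch slides a small filter over a toy image. It is not taken from Tijtgat et al. or from YOLOv2: the 5x5 image and the hand-picked vertical edge filter are assumptions for illustration, whereas a real CNN learns its filter values during training and stacks many such layers.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image with no padding and a stride of 1.

    As in most Deep Learning libraries, the kernel is not flipped, so this
    is strictly a cross-correlation.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    output = np.zeros((out_h, out_w))
    for row in range(out_h):
        for col in range(out_w):
            patch = image[row:row + kh, col:col + kw]
            output[row, col] = np.sum(patch * kernel)
    return output

# Toy 5x5 image: dark on the left, bright on the right.
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)

# Hand-picked filter that responds to dark-to-bright vertical edges.
vertical_edge_kernel = np.array([[-1, 0, 1],
                                 [-1, 0, 1],
                                 [-1, 0, 1]], dtype=float)

feature_map = conv2d_valid(image, vertical_edge_kernel)
print(feature_map)
```

The feature map is large where the filter window straddles the edge and zero where the window covers only the uniform bright region, which is the sense in which a convolution extracts a local spatial pattern.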

Further developments with Deep Reinforcement Learning

As noted above, autonomous vehicles will need to make decisions on the vehicle itself as the danger of latency whilst waiting for replies from a remote cloud server would not allow for safe real-time decision making by the vehicle. Techniques such as Deep Reinforcement Learning on the vehicle will play a key role.

Tampuu et al. "Multi agent cooperation and competition with Deep Reinforcement Learning" observed that "In the ever-changing world biological and engineered agents need to cope with unpredictability. By learning from trial-and-error an animal, or a robot, can adapt its behavior in a novel or changing environment. This is the main intuition behind reinforcement learning. A Reinforcement Learning agent modifies its behavior based on the rewards it collects while interacting with the environment. By trying to maximize these rewards during the interaction an agent can learn to implement complex long-term strategies."
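The trial-and-error loop described in that quotation can be illustrated with tabular Q-learning on a toy problem. This is a deliberately simplified sketch and not the method of Tampuu et al.: the five cell corridor, the reward of 1.0 at the goal and the hyperparameters are all assumptions, and in Deep Reinforcement Learning the table below would be replaced by a neural network.

```python
import numpy as np

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # action index 0 moves left, index 1 moves right

def step(state, action_index):
    """Move along the corridor; reward 1.0 only when the goal is reached."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def train(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Learn action values by interacting with the corridor environment."""
    rng = np.random.default_rng(seed)
    q = np.zeros((N_STATES, len(ACTIONS)))
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                # Explore: pick a random action.
                action = int(rng.integers(len(ACTIONS)))
            else:
                # Exploit: pick the best known action, breaking ties at random.
                best = np.flatnonzero(q[state] == q[state].max())
                action = int(rng.choice(best))
            next_state, reward, done = step(state, action)
            # The goal is terminal, so it contributes no future value.
            future = 0.0 if done else gamma * q[next_state].max()
            q[state, action] += alpha * (reward + future - q[state, action])
            state = next_state
    return q

q_table = train()
# Greedy action in each non-goal cell (1 means "move right").
greedy_policy = q_table[:-1].argmax(axis=1)
print(greedy_policy)
```

The agent is never told that moving right is correct. It only receives a reward on reaching the goal, and the update rule propagates that reward backwards through the earlier cells over repeated episodes.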

Kendall et al. (researchers at wayve.ai) published research in 2018, Learning to drive in a day, stating "We demonstrate the first application of Deep Reinforcement Learning to autonomous driving. From randomly initialised parameters, our model is able to learn a policy for lane following in a handful of training episodes using a single monocular image as input. We provide a general and easy to obtain reward: the distance travelled by the vehicle without the safety driver taking control. We use a continuous, model-free Deep Reinforcement Learning algorithm, with all exploration and optimisation performed on-vehicle."

Source for image above: Kendall et al. team from wayve.ai, Learning to Drive in a Day

Talpaert et al. 2019 "Exploring applications of Deep Reinforcement Learning for real-world autonomous driving systems" provide a useful overview of the tasks in autonomous driving systems, reinforcement learning algorithms and applications of DRL to AD systems.

Source for the image above: Talpaert et al. 2019 "Exploring applications of Deep Reinforcement Learning for real-world autonomous driving systems"

Machine to machine communication will be of key importance for self-driving cars, as will the ability to co-ordinate and collaborate, and a great deal of research is being undertaken on applications of Deep Reinforcement Learning and Edge Computing in relation to self-driving cars. The pathway to getting autonomous vehicles to coexist with human drivers is an area of active research.

Bacchiani et al. in 2019 published fascinating research "Microscopic Traffic Simulation by Cooperative Multi-agent Deep Reinforcement Learning" whereby they observed that "An interesting challenge is teaching the autonomous car to interact and thus implicitly communicate with human drivers about the execution of particular maneuvers, such as entering a roundabout or an intersection. This is a mandatory request since the introduction of self-driving cars onto public roads is going to be gradual, hence human and self-driving vehicles have to cohabit the same spaces." Their results were encouraging and also they set out suggestions for future work towards designing a more efficient network architecture and research in this field will be important for enabling autonomous vehicles to hit our roads and safely coexist with human drivers in the 2020s.

Multi-Agent Deep Reinforcement Learning will also play a role in the way that our cities will be run. For example Chu et al. in 2019 propose "Multi-Agent Deep Reinforcement Learning for Large-scale Traffic Signal Control" with research based upon the large real-world traffic network of Monaco city, under simulated peak-hour traffic dynamics.

GANs

In 2014 Ian Goodfellow et al. introduced the name GAN in a paper that popularized the concept and influenced subsequent work. Examples of the achievements of GANs include the generation of faces in 2017, as demonstrated in a paper entitled "This Person Does Not Exist: Neither Will Anything Eventually with AI."

GANs are part of the Neural Network family and entail unsupervised learning. They consist of two Neural Networks, a Generator and a Discriminator, that compete with one another in a zero-sum game.

The training involves an iterative approach whereby the Generator seeks to generate samples that may trick the Discriminator into believing that they are genuine, whilst the Discriminator seeks to distinguish the real samples from those that are not genuine. The end result is a Generator that is capable of producing samples that closely resemble the target ones. The method is used to generate visual images, such as photos, that may appear on the surface to be genuine to the human observer.
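The competition described above can be expressed as two loss functions, one per network. The sketch below assumes the binary cross-entropy form of the Discriminator loss and the commonly used non-saturating form of the Generator loss. The Discriminator scores are made-up numbers for illustration, since no networks are trained here.

```python
import numpy as np

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples should score 1, generated samples 0."""
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1.0 - d_fake)))

def generator_loss(d_fake):
    """Non-saturating loss: low when the Discriminator scores fakes as genuine."""
    return float(-np.mean(np.log(d_fake)))

# Hypothetical Discriminator outputs: probability that a sample is genuine.
d_real = np.array([0.90, 0.80, 0.95])   # scores for real samples
fooled = np.array([0.70, 0.60, 0.80])   # scores for convincing fakes
caught = np.array([0.10, 0.20, 0.05])   # scores for unconvincing fakes

print("Generator loss when fooling D:     ", generator_loss(fooled))
print("Generator loss when caught by D:   ", generator_loss(caught))
print("Discriminator loss when fooled:    ", discriminator_loss(d_real, fooled))
print("Discriminator loss when catching G:", discriminator_loss(d_real, caught))
```

In a full training loop the two networks would be updated alternately, each by gradient descent on its own loss, which is what makes the process adversarial: an improvement for one network shows up as a higher loss for the other.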

An example of how GANs will help with AI on the device in Healthcare is provided by Zhou et al. in a paper entitled "Image Quality Improvement of Hand-held Ultrasound Devices with a Two-stage Generative Adversarial Network". The authors note that the development of portable ultrasound devices has become a popular area of research. However, the limited size of portable ultrasound devices usually degrades the imaging quality, which reduces the diagnostic reliability. Zhou et al. explained "To overcome hardware limitations and improve the image quality of portable ultrasound devices, we propose a novel Generative Adversarial Network (GAN) model to achieve mapping between low-quality ultrasound images and corresponding high-quality images. The results confirm that the proposed approach obtains the optimum solution for improving quality and offering useful diagnostic information for portable ultrasound images. This technology is of great significance for providing universal medical care."

One key challenge for wider adoption of AR is content generation. For example, within the retail sector a particular challenge is to reconstruct images from 2D to 3D. Accurately automating the reconstruction of 3D representations from 2D images for areas such as apparel, where the item is deformable rather than solid, will take additional research and time.

However, there is progress in the research area of 3D reconstruction that may prove helpful for AR across various sectors and also assist with 3D reconstruction for autonomous robots and cars, for example Michalkiewicz et al. presented Deep Level Sets: Implicit Surface Representations for 3D Shape Inference noting "Existing 3D surface representation approaches are unable to accurately classify pixels and their orientation lying on the boundary of an object. Thus resulting in coarse representations which usually require post-processing steps to extract 3D surface meshes. To overcome this limitation, we propose an end-to-end trainable model that directly predicts implicit surface representations of arbitrary topology by optimising a novel geometric loss function."

Source for the image above: Michalkiewicz et al. Deep Level Sets: Implicit Surface Representations for 3D Shape Inference

Pontes et al. published a novel approach in Image2Mesh: A Learning Framework for Single Image 3D Reconstruction and noted "A challenge that remains open in 3D Deep Learning is how to efficiently represent 3D data to feed deep neural networks...Such high quality 3D representation and reconstruction as proposed in our work is extremely important, especially to unlock Virtual and Augmented Reality applications."

It is suggested that future developments in GANs alongside other Deep Learning methods will play an important role in Computer Vision and AR in the period to 2025.

Imtiaz Adam is a Hybrid Strategist and Data Scientist. He is focussed on the latest developments in artificial intelligence and machine learning techniques with a particular focus on deep learning. Imtiaz holds an MSc in Computer Science with research in AI (Distinction) University of London, MBA (Distinction), Sloan in Strategy Fellow London Business School, MSc Finance with Quantitative Econometric Modelling (Distinction) at Cass Business School. He is the Founder of Deep Learn Strategies Limited, and served as Director & Global Head of a business he founded at Morgan Stanley in Climate Finance & ESG Strategic Advisory. He has strong expertise in enterprise sales & marketing, data science, and corporate & business strategy.
