Artificial intelligence (AI) is disrupting a multitude of industries.

This article is a response to an article arguing that an AI Winter may be inevitable.

However, I believe that there are fundamental differences between what happened in the 1970s (the first AI winter) and the late 1980s (the second AI winter, with the fall of Expert Systems) and the situation today. The arrival and growth of the internet, smart mobiles and social media has resulted in the volume and velocity of data being generated constantly increasing, and Machine Learning and Deep Learning are required to make sense of the Big Data that we generate.

For those wishing to see details about what AI is, I suggest reading an Intro to AI. For the purposes of this article I will assume Machine Learning and Deep Learning to be subsets of Artificial Intelligence (AI).

AI deals with the area of developing computing systems that are capable of performing tasks that humans are very good at, for example recognising objects, recognising and making sense of speech, and decision making in a constrained environment.

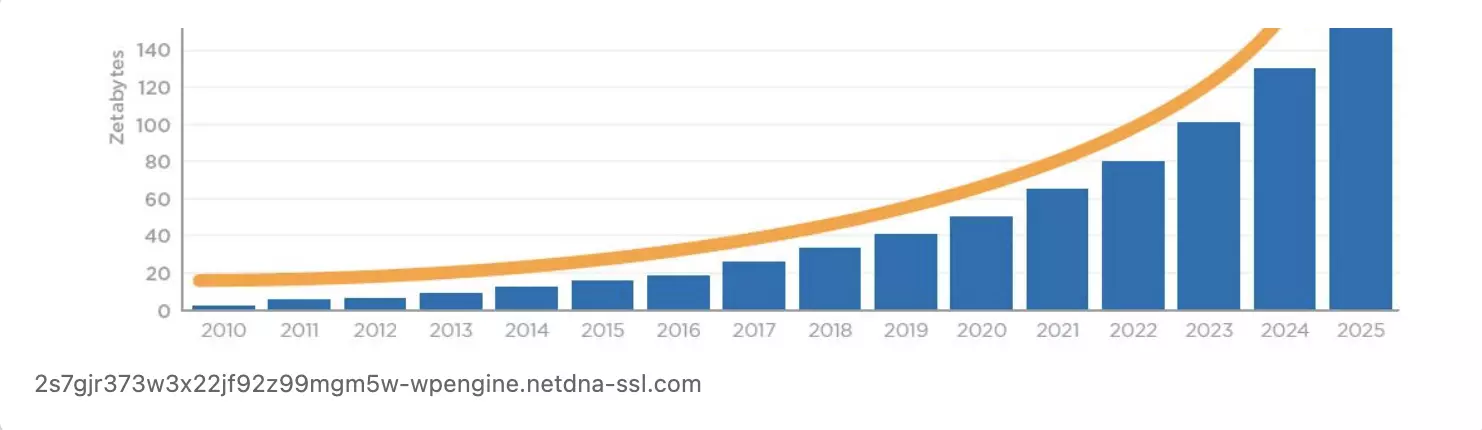

The rapid growth in Big Data has driven much of the growth in AI, alongside the reduced cost of data storage (Cloud Servers) and Graphics Processing Units (GPUs) making Deep Learning more scalable. Data will continue to drive much of the future growth of AI; however, the nature of the data and the location of its interaction with AI will change. This article will set out how the future of AI will increasingly be alongside data generated at the edge of the network (on device), closer to the user. This has the advantage of lower latency, and 5G networks will enable a dramatic increase in device connectivity, with much greater capacity to connect IoT devices relative to 4G networks.

The 8th edition of Data Never Sleeps by Domo illustrates how "data is constantly being generated in ad clicks, reactions on social media, shares, rides, transactions, streaming content, and so much more. When examined, this data can help you better understand a world that is moving at increasing speeds."

AI has been applied effectively in the Digital Marketing space in particular, where digital footprints have been created that allow Machine Learning algorithms to learn from historical data to make predictions about the future.

The growth in data has been a key factor in the growth of AI (Machine Learning and Deep Learning) over the past decade and will be an ongoing theme in the 2020s, as one consequence of the Covid crisis has been to accelerate digital transformation, and hence digital data will continue to grow.

Much of the data being generated today comes via the social media giants, tech majors and commerce giants, and Machine Learning or Deep Learning algorithms are used to generate personalised recommendations and content for the user. If the recommendations contain an error then there is no injury or death and no complex issues about liabilities and damages. However, when we seek to scale Deep Learning into areas such as healthcare and autonomous systems such as autonomous cars, drones and robots, then the real-world issues become more complex and the issues set out above have to be addressed.

If AI is set to enter an increasing number of sectors of the economy and our homes and places of work (be that home, the office, a healthcare facility or a factory, for example) then we'll need to develop AI with the capabilities of causality and transparency too, whilst ensuring data security and privacy safeguards.

Moreover, I believe that we are on the verge of experiencing technology convergence between AI, 5G and the IoT with AI increasingly on the Edge of the network (on the device). This in turn is creating demand for AI research with innovation for areas that will extract more from less such as Neural Compression (see below) and also innovation with hybrid AI techniques that will lead to Broad AI, an area in between Narrow AI (ANI) and Artificial General Intelligence (AGI).

Instead of Zoom and other 2D video calls that we've become accustomed to during the Covid crisis in 2020, we'll be using Holographic 3D calls with 5G enabled Glasses in a few years.

Furthermore, going forwards a great deal of the data generated will be from Internet of Things (IoT) connected devices on the Edge of the network, with real-time data and analytics being increasingly important.

This will lead to demand for innovation and new techniques in AI and Deep Learning as we increasingly work with data that is closer to where it is being generated, nearer to the user, and also as autonomous systems will need to deal with dynamic environments in real time.

This need to enable near real-time analytics and responses will lead to AI Deep Learning techniques that are more efficient with smaller data sets. Furthermore, there will also be benefits from a climate sustainability perspective.

The area of Neural Network Compression, including Pruning techniques such as the one highlighted by the Lottery Ticket Hypothesis, will be increasingly important.

An example is shown in the video hosted by Clarifai featuring a Facebook AI Research engineer, Dr Michela Paganini.

Increasingly we will find ways to squeeze more out of Neural Networks by making them more efficient and hence enable them to work efficiently in IoT and Edge use case environments.
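To make the pruning idea concrete, here is a minimal Python sketch of magnitude pruning followed by the weight "rewind" step associated with the Lottery Ticket Hypothesis. It is an illustration under simplifying assumptions, not the procedure from any specific paper or video referenced here: the weights are a flat list of made-up numbers, and the function names `magnitude_prune` and `rewind` are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude weights.

    weights:  flat list of floats (a hypothetical layer's weights)
    sparsity: fraction of weights to remove, in [0, 1)
    Returns (pruned_weights, mask) where mask[i] is 1 for kept weights.
    """
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    mask = [0 if i in pruned else 1 for i in range(len(weights))]
    return [w * m for w, m in zip(weights, mask)], mask


def rewind(initial_weights, mask):
    """Reset surviving weights to their initial values (the candidate "ticket")."""
    return [w0 * m for w0, m in zip(initial_weights, mask)]


# Made-up numbers purely for illustration.
initial = [0.5, -0.1, 0.3, 0.05, -0.8, 0.2]
trained = [0.9, -0.02, 0.4, 0.01, -1.1, 0.1]

pruned_weights, mask = magnitude_prune(trained, sparsity=0.5)
ticket = rewind(initial, mask)  # sparse subnetwork to retrain from scratch
```

In practice the prune, rewind and retrain cycle is usually repeated over several rounds, and the sparse network only saves memory or compute if the runtime on the device can exploit the zeros.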

Decentralised Data: Federated Learning and Differential Privacy

Much of the data that we will be working with in the era of Edge Computing and 5G networks will be decentralised and distributed data. Decentralised data has also been a challenge in healthcare today, with many hospitals and other healthcare providers holding data in local silos and concerned about data sharing due to privacy regulations such as GDPR or HIPAA. Indeed data security is a key area of corporate governance and an issue that needs careful consideration when seeking to apply AI technology to certain sectors.

Federated Learning with Differential Privacy will be key to expanding Machine Learning into areas such as Healthcare and Financial Services, including banking and insurance.
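As a rough illustration of how these two ideas fit together, the sketch below shows one round of federated averaging in which each participant (say, a hospital) sends only a model update, the update is clipped to a maximum norm, and Gaussian noise is added to the average. The function names, the flat-list representation of a model and the `noise_std` value are all assumptions made for this example; calibrating the noise to a formal privacy budget is deliberately left out.

```python
import math
import random


def clip_update(update, max_norm):
    """Scale an update so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]


def federated_round(global_weights, client_updates, max_norm, noise_std, rng):
    """One round: clip each client's update, average them, add Gaussian noise.

    Raw data never leaves the clients; only their updates are shared.
    noise_std is a placeholder, not derived from an (epsilon, delta) budget.
    """
    clipped = [clip_update(u, max_norm) for u in client_updates]
    n = len(clipped)
    averaged = [sum(column) / n for column in zip(*clipped)]
    noisy = [a + rng.gauss(0.0, noise_std) for a in averaged]
    return [w + d for w, d in zip(global_weights, noisy)]


# Three hypothetical clients, each contributing a two-parameter update.
rng = random.Random(0)
updates = [[0.2, -0.1], [3.0, 4.0], [-0.3, 0.1]]
new_weights = federated_round([0.0, 0.0], updates, max_norm=1.0,
                              noise_std=0.01, rng=rng)
```

Clipping bounds how much any single participant can influence the shared model, which is what makes the added noise meaningful as a privacy safeguard.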

Transformers have been revolutionising the field of Natural Language Processing (NLP) over recent years with the likes of BERT, GPT-2 and more recently GPT-3.

In 2020 we also started to see Transformers making an impact in Computer Vision with the likes of the DETR model produced by Facebook AI Research, which combined Convolutional Neural Networks with Transformers and their Self-Attention Mechanism to streamline object detection.

Furthermore, in October an ICLR paper was released entitled "An Image is Worth 16x16 Words" that demonstrated that a Transformer network can outperform even the most advanced Convolutional Neural Networks in image recognition. The paper was received with huge enthusiasm in the AI research community, with Synced noting that "...the paper suggests the direct application of Transformers to image recognition can outperform even the best convolutional neural networks when scaled appropriately. Unlike prior works using self-attention in CV, the scalable design does not introduce any image-specific inductive biases into the architecture."

A key extract from the paper is set out below.

"While the Transformer architecture has become the de-facto standard for natural language processing tasks, its applications to computer vision remain limited. In vision, attention is either applied in conjunction with convolutional networks, or used to replace certain components of convolutional networks while keeping their overall structure in place. We show that this reliance on CNNs is not necessary and a pure transformer applied directly to sequences of image patches can perform very well on image classification tasks. When pre-trained on large amounts of data and transferred to multiple mid-sized or small image recognition benchmarks (ImageNet, CIFAR-100, VTAB, etc.), Vision Transformer (ViT) attains excellent results compared to state-of-the-art convolutional networks while requiring substantially fewer computational resources to train."

One key aspect with Transformer models will be the potential to reduce computational cost relative to a Convolutional Neural Network and also the ability to apply Neural Network Compression in the form of pruning to NLP Transformer models.
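The phrase "sequences of image patches" in the extract above can be made concrete with a small Python sketch. It only shows the first step, cutting an image into fixed-size patches and flattening each one into a vector; in a Vision Transformer each vector would then be linearly projected and given a position embedding before entering the Transformer. The function name and the tiny single-channel image are assumptions for illustration.

```python
def image_to_patches(image, patch_size):
    """Split an H x W image (a list of rows) into a sequence of flattened patches.

    Patches are read left to right, top to bottom, so the output is an
    ordered sequence that a Transformer can treat like a sentence of tokens.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [image[r][c]
                     for r in range(top, top + patch_size)
                     for c in range(left, left + patch_size)]
            patches.append(patch)
    return patches


# A 4 x 4 single-channel toy image with pixel values 0..15.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
tokens = image_to_patches(image, patch_size=2)
# tokens[0] is the top-left patch: [0, 1, 4, 5]
```

With the same function, a 224 x 224 single-channel image and 16 x 16 patches would give a sequence of 196 tokens, each of length 256.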

Chen et al. authored a paper entitled "The Lottery Ticket Hypothesis for Pre-trained BERT Networks" where they argued that "As large-scale pre-training becomes an increasingly central paradigm in Deep Learning, our results demonstrate that the main lottery ticket observations remain relevant in this context." The key point is made by MIT CSAIL: "Google search's NLP model BERT requires massive processing power and the new paper suggests that it would often run just as well with as little as 40% as many neural nets" (subnetworks).

Reducing the computational cost of Deep Learning is important for environmental sustainability and also for scaling Deep Learning across the Edge and the IoT, where microcontrollers have a very limited resource budget, especially memory (SRAM) and storage (Flash).
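A little arithmetic shows why that budget matters. The sketch below estimates how much storage a model's weights need at different numeric precisions and checks the result against a Flash budget. The 1 MB Flash figure and the one-million-parameter model are illustrative assumptions, not numbers taken from this article, and the sketch ignores the activation memory that must also fit in SRAM at inference time.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}

# Assumed Flash budget for a hypothetical microcontroller (1 MB).
FLASH_BYTES = 1 * 1024 * 1024


def weight_bytes(num_params, dtype):
    """Storage needed for the weights alone, in bytes."""
    return num_params * BYTES_PER_PARAM[dtype]


def fits(num_params, dtype, flash_bytes):
    """True if the weights alone fit within the given Flash budget."""
    return weight_bytes(num_params, dtype) <= flash_bytes


num_params = 1_000_000  # hypothetical small model
for dtype in BYTES_PER_PARAM:
    print(dtype, weight_bytes(num_params, dtype), fits(num_params, dtype, FLASH_BYTES))
```

Under these assumptions the same model is too large at 32-bit or 16-bit precision but fits once quantised to 8 bits, which is one reason compression and quantisation go hand in hand for on-device AI.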

Lin et al. explained in a paper entitled "MCUNet: Tiny Deep Learning on IoT Devices" that

"The on-chip memory is 3 orders of magnitude smaller than mobile devices, and 5-6 orders of magnitude smaller than cloud GPUs, making deep learning deployment extremely difficult."

Daniel Ackerman, in an MIT News article entitled "System brings Deep Learning to Internet of Things devices", explains the research work, stating that "The system, called MCUNet, designs compact neural networks that deliver unprecedented speed and accuracy for deep learning on IoT devices, despite limited memory and processing power. The technology could facilitate the expansion of the IoT universe while saving energy and improving data security." Kurt Keutzer, a computer scientist at the University of California at Berkeley who was not involved in the work, added that MCUNet could “bring intelligent computer-vision capabilities to even the simplest kitchen appliances, or enable more intelligent motion sensors.”

The article also notes that MCUNet may make IoT devices more secure. “A key advantage is preserving privacy,” says Han. “You don’t need to transmit the data to the cloud.”

"Analyzing data locally reduces the risk of personal information being stolen — including personal health data. Han envisions smart watches with MCUNet that don’t just sense users’ heartbeat, blood pressure, and oxygen levels, but also analyze and help them understand that information. MCUNet could also bring deep learning to IoT devices in vehicles and rural areas with limited internet access."

Moreover, the carbon footprint of MCUNet is also slim.

The article quotes Han as stating “Our big dream is for green AI,” adding that training a large neural network can burn carbon equivalent to the lifetime emissions of five cars, whereas MCUNet on a microcontroller would require a small fraction of that energy.

“Our end goal is to enable efficient, tiny AI with less computational resources, less human resources, and less data,” says Han.

Further breakthroughs in AI research in 2020 have included the rise of Neuro Symbolic AI, which combines Deep Neural Networks with Symbolic AI, with the work of IBM Watson and MIT CSAIL. Srishti Deoras in Analytics India Magazine observed that researchers were gushing over it, noting that "They used CLEVRER to benchmark the performances of neural networks and neuro-symbolic reasoning by using only a fraction of the data required for traditional deep learning systems. It helped AI not only to understand causal relationships but apply common sense to solve problems."

Microsoft Research published "Next-generation architectures bridge gap between neural and symbolic representations with neural symbols", explaining that "In neurosymbolic AI, symbol processing and neural network learning collaborate. Using a unique neurosymbolic approach that borrows a mathematical theory of how the brain can encode and process symbols..."

"Two of these new neural architectures—the Tensor-Product Transformer (TP-Transformer) and Tensor Products for Natural- to Formal-Language mapping (TP-N2F)—have set a new state of the art for AI systems solving math and programming problems stated in natural language. Because these models incorporate neural symbols, they’re not completely opaque, unlike nearly all current AI systems. We can inspect the learned symbols and their mutual relations to begin to understand how the models achieve their remarkable results."

Another field showing promise in AI is Neuroevolution. Areas where Neuroevolution is being applied and generating excitement include robotics and gaming environments. It is an area of active research; an example of recent interesting research is the paper by Rasekh and Safi-Esfahani entitled "EDNC: Evolving Differentiable Neural Computers", which "shows that both proposed encodings reduce the evolution time by at least 73% in comparison with the baseline methods."

On another note relating to breakthrough AI research DeepMind recently announced that they had solved the challenge of protein folding as noted by Nature in an article entitled "‘It will change everything’: DeepMind’s AI makes gigantic leap in solving protein structures" with the article noting that "The ability to accurately predict protein structures from their amino-acid sequence would be a huge boon to life sciences and medicine. It would vastly accelerate efforts to understand the building blocks of cells and enable quicker and more advanced drug discovery."

The achievement by DeepMind illustrates that we are able to achieve real-world impact with cutting-edge Deep Learning research.

It is ironic that DeepMind released the research results in close proximity to the article suggesting that a new AI winter is inevitable (in part due to a view that Moore's law is reaching a limit). A year ago a VC informed me that there was no way that we would solve protein folding without Quantum Computing, as current computing capabilities were insufficient, and that it would take at least a decade because we were hitting the limits of Moore's law.

Moreover, if one refers to the limitations of AlphaGo, the DeepMind algorithm that beat the world Go champion Lee Sedol in 2016, as evidence of the onset of an AI winter because AlphaGo can only play one game, then one should also refer to AlphaZero, its successor (and the successor to AlphaGo Zero). Jennifer Ouellette authored an article about it entitled "Move over AlphaGo: AlphaZero taught itself to play three different games", observing that "AlphaZero, this program taught itself to play three different board games (chess, Go, and shogi, a Japanese form of chess) in just three days, with no human intervention." See also Multi-task Deep Reinforcement Learning with PopArt.

However, true multitasking at scale and on highly unrelated tasks by Deep Neural Networks remains a challenge (although see the reference to generative replay below).

Research work is also being undertaken in relation to Neural Compression with Deep Reinforcement Learning. For example Zhang et al. authored research entitled "Accelerating the Deep Reinforcement Learning with Neural Network Compression" with the authors stating that "The experiments show that our approach NNC-DRL can speed up the whole training process by about 10-20% on Atari 2600 games with little performance loss."

Furthermore, AI researchers are increasingly taking inspiration from biology and the human brain, an area where efficiency has long been optimised by evolution. Science Daily reported that "The brain's memory abilities inspire AI experts in making neural networks less forgetful." The article states that "Artificial intelligence (AI) experts at the University of Massachusetts Amherst and the Baylor College of Medicine report that they have successfully addressed what they call a "major, long-standing obstacle to increasing AI capabilities" by drawing inspiration from a human brain memory mechanism known as "replay.""

The research referenced above is significant as the authors argue that they can solve for catastrophic memory loss that has prevented Deep Neural Networks from truly multitasking in a genuinely scaled manner without forgetting how to perform other tasks (that may be more unrelated to the initial tasks that it learned).

The research paper by van de Ven et al. was published in Nature Communications, entitled "Brain-inspired replay for continual learning with artificial neural networks", and states the following:

"Artificial neural networks suffer from catastrophic forgetting. Unlike humans, when these networks are trained on something new, they rapidly forget what was learned before. In the brain, a mechanism thought to be important for protecting memories is the reactivation of neuronal activity patterns representing those memories. In artificial neural networks, such memory replay can be implemented as ‘generative replay’, which can successfully – and surprisingly efficiently – prevent catastrophic forgetting on toy examples even in a class-incremental learning scenario. However, scaling up generative replay to complicated problems with many tasks or complex inputs is challenging. We propose a new, brain-inspired variant of replay in which internal or hidden representations are replayed that are generated by the network’s own, context-modulated feedback connections. Our method achieves state-of-the-art performance on challenging continual learning benchmarks (e.g., class-incremental learning on CIFAR-100) without storing data, and it provides a novel model for replay in the brain."

The above research may provide groundbreaking results in the future if it can be replicated and scaled as this would truly solve for the catastrophic memory loss issue.
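For readers unfamiliar with the mechanics, the sketch below illustrates plain generative replay, the baseline idea mentioned in the abstract, rather than the brain-inspired variant the authors propose. When training on a new task, samples are drawn from a generator that imitates earlier data, labelled by the previous version of the model, and mixed into the new training batch so that old knowledge keeps being rehearsed. The function name and the toy stand-ins for the generator and the previous model are hypothetical.

```python
import random


def replay_batch(new_examples, generator, previous_model, n_replay, rng):
    """Build a training batch that mixes new data with replayed pseudo-data.

    new_examples:   list of (input, label) pairs from the current task
    generator:      callable rng -> input, imitating inputs from earlier tasks
    previous_model: callable input -> label, the model before this task
    n_replay:       number of replayed examples to add
    """
    replayed = []
    for _ in range(n_replay):
        x = generator(rng)
        # The old model supplies the target, so no stored data is needed.
        replayed.append((x, previous_model(x)))
    batch = list(new_examples) + replayed
    rng.shuffle(batch)
    return batch


# Toy stand-ins: inputs are numbers in [0, 1), old tasks had two classes.
toy_generator = lambda rng: rng.random()
toy_previous_model = lambda x: "old_a" if x < 0.5 else "old_b"

rng = random.Random(42)
new_task = [(1.5, "new_c"), (1.7, "new_c")]
batch = replay_batch(new_task, toy_generator, toy_previous_model, n_replay=4, rng=rng)
```

The quality of the generator is the weak point of this approach, which is the scaling difficulty the abstract refers to.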

We won't suddenly arrive at AGI and I am on record as stating that we will not achieve AGI this decade. However, we will move into the era of Broad AI during the 2020s and may look back in the future to realise that 2020 was the year not only of the Covid tragedy but also the year in which we made the initial concrete steps of leaving the era of Narrow AI and stepping towards Broad AI with the research breakthroughs in Transformers, Neuro Symbolic AI, Neural Compression (to reduce the computational cost of Deep Learning that limits its scaling in real-world environments) and solving for Catastrophic Memory Loss.

In conclusion, the next few years will be ones of great excitement, with the arrival of AR enabled glasses with AI on the device, and a number of the research breakthroughs that are being made this year will start to make their way into the production environment, resulting in exciting new opportunities.

And the AIoT enabled by 5G networks will change how we live and work.

The technological convergence will drive the demand for Broad AI with innovations in Deep Learning with hybrid AI (Neuro Symbolic AI and Neuroevolutionary AI) and Neural Compression to make Deep Neural Networks less computationally costly and scalable across the Edge (on device).

It is true that we will move away from a number of the current AI models and the manner in which we develop models. A number of the AI startups that are heavily locked into the current way of doing things will fall away and new ones will take over and scale with the new, more efficient techniques that may effectively scale across broader areas of the economy and beyond social media and ecommerce.

AGI will still take time to arrive and maybe that is not a bad thing as I would argue that we are not ready for it as a society, with Tay the chatbot being a good example of what can go wrong. However, the 2020s will be the era of technology convergence between AI, IoT and 5G, Neural Compression and the rise of Broad AI with hybrid AI techniques and Transformers.

These are the techniques that will be needed to enable autonomous vehicles and other amazing innovations to truly work in the real-world.

This moment in time reminds me of the era in 2013/2014 when many in the Machine Learning world were yet to appreciate and understand the impact of AlexNet and the potential and rise of Deep Learning in Computer Vision and then NLP. However, around 2022/2023 I believe that the laws of supply and demand will result in the innovations in AI and technology convergence driving the rise of next generation AI techniques, with intelligent devices around us improving key areas such as healthcare, financial services, retail, smart cities, autonomous networks and social communications.

Enlightened investors including Venture Capitalists seeking to invest in AI going forwards may wish to focus on the next generation that may scale across the sectors of the economy. A helpful summary of technology convergence and the opportunity is provided by Sierra Ventures (a Silicon Valley firm that has invested $1.9 billion into tech) partner Ben Yu in The Convergence of 5G and AI: A Venture Capitalist’s View.

Imtiaz Adam is a Hybrid Strategist and Data Scientist. He is focussed on the latest developments in artificial intelligence and machine learning techniques with a particular focus on deep learning. Imtiaz holds an MSc in Computer Science with research in AI (Distinction) from the University of London, an MBA (Distinction), a Sloan Fellowship in Strategy from London Business School, and an MSc in Finance with Quantitative Econometric Modelling (Distinction) from Cass Business School. He is the Founder of Deep Learn Strategies Limited, and served as Director & Global Head of a business he founded at Morgan Stanley in Climate Finance & ESG Strategic Advisory. He has strong expertise in enterprise sales & marketing, data science, and corporate & business strategy.