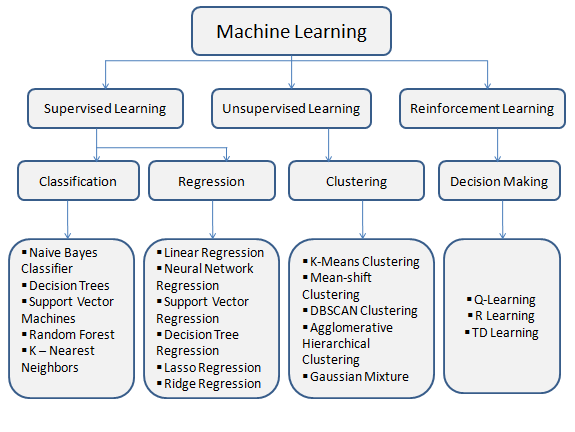

Machine learning has undoubtedly emerged as a transformative technology, reshaping industries and driving innovation across the globe.

From personalized recommendations on streaming platforms to cutting-edge medical diagnostics, machine learning algorithms have revolutionized the way we interact with technology and make decisions. However, as these powerful algorithms become more pervasive in our daily lives, they also raise significant ethical challenges that cannot be ignored.

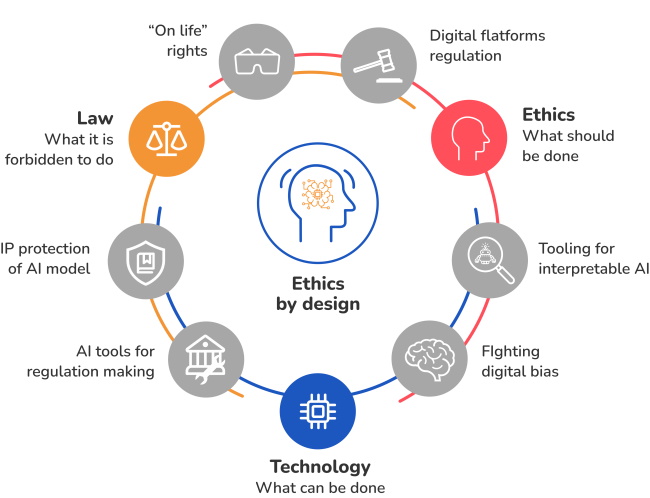

Striking the right balance between technological advancement and ethical responsibility is crucial to ensure the benefits of machine learning are maximized while minimizing its potential risks.

One of the most pressing ethical dilemmas in machine learning is algorithmic bias. Machine learning models are trained on large datasets drawn from the real world, and they can inadvertently inherit the biases those datasets contain. As a result, AI systems may perpetuate societal prejudice and discrimination, amplifying racial, gender, or other biases embedded in historical data.

Consider a hiring algorithm trained on historical employment data. If past hiring favored certain demographics, the model may learn to reproduce that preference in its own recommendations, further exacerbating existing inequalities. Such bias in AI systems can have far-reaching consequences, affecting employment opportunities, access to services, and even outcomes in the criminal justice system.

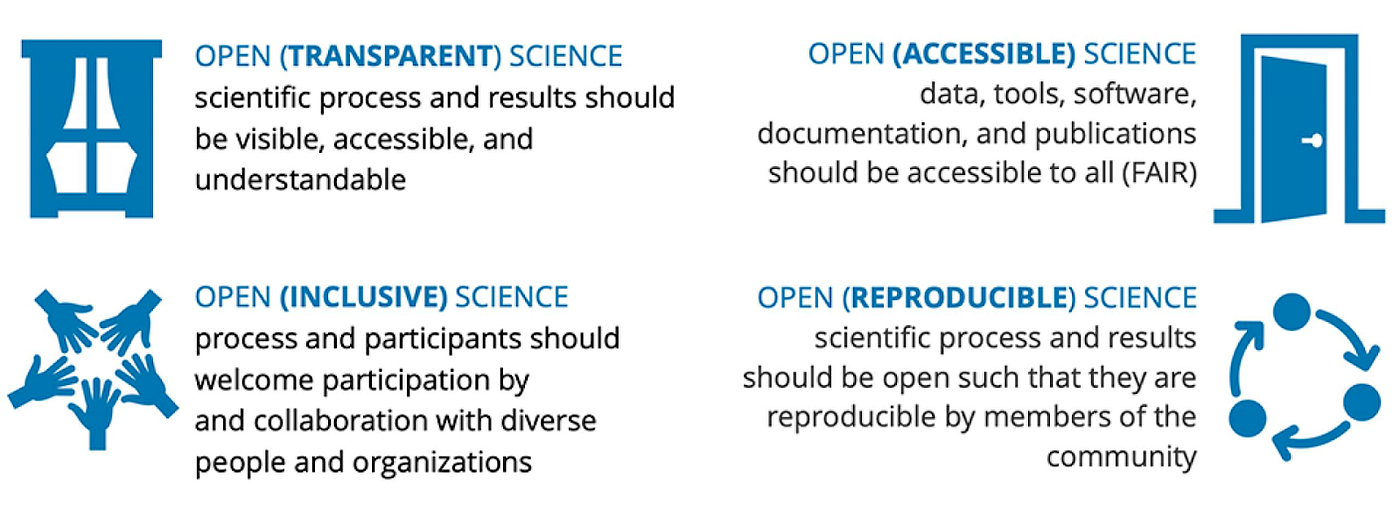

To address this challenge, researchers and developers must adopt robust testing and validation processes to identify and mitigate bias in machine learning models. Implementing fairness-aware algorithms and incorporating diverse and representative datasets can help create AI systems that are more equitable and inclusive.
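A common starting point for the bias testing described above is to compare selection rates across groups. The sketch below is illustrative and not taken from the article: it computes per-group selection rates, the disparate impact ratio (lowest rate divided by highest; US employment guidance treats a ratio under 0.8, the "four-fifths rule", as a rough warning sign), and then applies reweighing (Kamiran and Calders), one pre-processing mitigation. The hiring data and all function names are hypothetical.

```python
from collections import Counter

def selection_rates(groups, decisions):
    """Share of positive decisions (1 = hired) within each group."""
    totals = Counter(groups)
    positives = Counter(g for g, d in zip(groups, decisions) if d == 1)
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(groups, decisions):
    """Lowest group selection rate divided by the highest; 1.0 means parity."""
    rates = selection_rates(groups, decisions)
    return min(rates.values()) / max(rates.values())

def reweighing_weights(groups, labels):
    """Reweighing: w(g, y) = P(g) * P(y) / P(g, y).

    Under-represented (group, label) pairs get weights above 1, so a model
    trained with these sample weights sees group and label as independent.
    """
    n = len(labels)
    p_g, p_y, p_gy = Counter(groups), Counter(labels), Counter(zip(groups, labels))
    return [(p_g[g] / n) * (p_y[y] / n) / (p_gy[(g, y)] / n)
            for g, y in zip(groups, labels)]

def weighted_selection_rates(groups, labels, weights):
    """Selection rate per group when each example counts with its weight."""
    totals, positives = Counter(), Counter()
    for g, y, w in zip(groups, labels, weights):
        totals[g] += w
        if y == 1:
            positives[g] += w
    return {g: positives[g] / totals[g] for g in totals}

if __name__ == "__main__":
    # Hypothetical historical hiring outcomes for two applicant groups.
    groups = ["A"] * 10 + ["B"] * 10
    hired = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
    print(selection_rates(groups, hired))         # {'A': 0.6, 'B': 0.3}
    print(disparate_impact_ratio(groups, hired))  # 0.5, well under 0.8
    weights = reweighing_weights(groups, hired)
    # After reweighing, both groups have a weighted selection rate of about 0.45.
    print(weighted_selection_rates(groups, hired, weights))
```

The weights would then be passed as per-sample weights when training the downstream model; reweighing changes how much each historical example counts rather than altering the data itself.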

Machine learning algorithms rely heavily on data, often vast amounts of it, to make accurate predictions and decisions. This raises concerns about individual privacy and data protection. As AI systems gather and analyze personal data, questions arise about who has access to that data, how it is used, and how individuals can maintain control over their information.

For example, smart home devices and virtual assistants are increasingly common, collecting data on users' habits and preferences. While this data can lead to improved user experiences, it also raises privacy concerns, particularly if the data falls into the wrong hands or is used for manipulative purposes.

To address these concerns, privacy-focused machine learning techniques such as differential privacy have been developed. Differential privacy adds carefully calibrated random noise to computations on the data, so results stay useful in aggregate while revealing little about any single individual. Policymakers must also play a crucial role by establishing regulations and guidelines that protect consumers' data rights and ensure transparency in how data is collected and used.
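To make differential privacy concrete, here is a minimal sketch of the Laplace mechanism for a counting query over a hypothetical log of per-user smart-home usage. Adding or removing one person changes a count by at most 1, so adding Laplace noise with scale 1/epsilon gives epsilon-differential privacy. This is a teaching sketch under those assumptions: naive floating-point noise sampling is known to leak information in some settings, so real deployments should use a vetted differential privacy library.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: the difference of two independent
    exponentials with mean `scale` is Laplace-distributed with that scale."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng=None):
    """Laplace mechanism for a counting query.

    A count has sensitivity 1 (one person changes it by at most 1),
    so noise with scale 1/epsilon yields epsilon-differential privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

if __name__ == "__main__":
    # Hypothetical smart-home log: hours of device activity per user.
    usage_hours = [2, 5, 9, 1, 7, 12, 3, 8]
    rng = random.Random(42)
    # "How many users run devices more than 6 hours a day?" (true answer: 4)
    for eps in (0.1, 1.0, 10.0):
        print(eps, round(private_count(usage_hours, lambda h: h > 6, eps, rng), 2))
```

Smaller epsilon means more noise and stronger privacy; larger epsilon gives answers closer to the true count of 4 but protects individuals less.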

The complexity of some machine learning models, particularly deep neural networks, can make it difficult to understand how they arrive at specific decisions or predictions. This opacity can erode trust and undermine user confidence in these systems.

For instance, consider a medical diagnosis made by an AI-powered system. If the algorithm cannot explain the reasoning behind its diagnosis, healthcare professionals may hesitate to rely on the AI's recommendation, potentially compromising patient care.

To address this issue, researchers are actively working on techniques to make machine learning models more interpretable. Explainable AI methods, such as attention mechanisms and model visualization, can provide insights into the decision-making process of AI systems. By improving transparency and explainability, we can foster greater trust and accountability in machine learning algorithms.
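The article names attention mechanisms and model visualization; as a simpler, model-agnostic illustration of the same goal, the sketch below swaps in permutation feature importance: shuffle one input feature at a time and measure how much accuracy drops. The "diagnostic" model and its data are hypothetical stand-ins, not a real clinical system.

```python
import random

def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled.

    A large drop means the model leans on that feature; zero means it ignores it.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the label
            X_perm = []
            for row, value in zip(X, column):
                new_row = list(row)
                new_row[j] = value
                X_perm.append(new_row)
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

def toy_model(row):
    """Hypothetical classifier that only looks at feature 0 (e.g. a lab value)."""
    return int(row[0] > 0.5)

if __name__ == "__main__":
    data_rng = random.Random(1)
    X = [[data_rng.random() for _ in range(3)] for _ in range(200)]
    y = [toy_model(row) for row in X]
    # Feature 0 shows a large drop; features 1 and 2 show exactly zero.
    print(permutation_importance(toy_model, X, y))
```

Because it only needs predictions, the same procedure applies to a deep network as well as to this toy rule, which is what makes it a convenient first check on what a model relies on.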

Machine learning's ability to automate complex tasks and decision-making processes has significant advantages. However, it also introduces ethical challenges related to the potential displacement of human jobs and the impact on the workforce.

As AI and automation continue to advance, certain job roles may become obsolete or significantly reduced, leading to unemployment and economic disruptions. Striking a balance between embracing automation for productivity gains and ensuring the workforce's stability becomes essential.

To address these challenges, a proactive approach is needed. Governments, organizations, and educational institutions must collaborate to implement reskilling and upskilling programs to equip the workforce with skills relevant to the evolving job landscape. Embracing a human-centric approach to AI can help alleviate the negative impact on workers and promote a more inclusive and sustainable future.

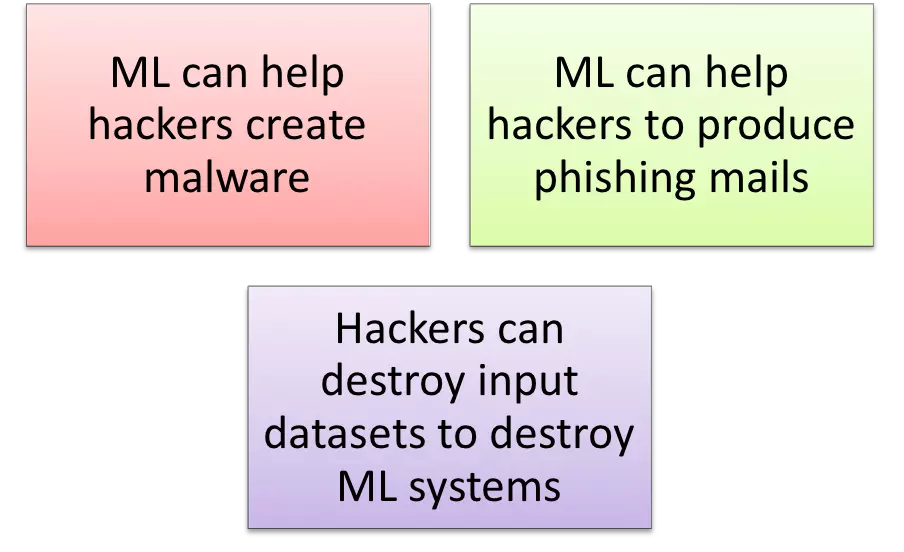

With great power comes great responsibility. The immense potential of machine learning also opens the door to misuse and malicious intent. From deepfake technology used for disinformation campaigns to AI-powered social engineering attacks, there are serious concerns about how AI can be weaponized.

Addressing this ethical dilemma requires a multi-faceted approach. Technological safeguards, such as adversarial testing and verification, can help identify and prevent AI systems from being misused.
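Adversarial testing can be as simple as nudging inputs in the direction that most increases a model's loss and checking whether predictions flip. The sketch below applies the Fast Gradient Sign Method (FGSM) to a logistic-regression classifier, chosen because its input gradient has a closed form; the weights and input are hypothetical, and a real audit would target the deployed model.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(w, b, x):
    """P(y = 1 | x) for a logistic-regression model with weights w and bias b."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, epsilon):
    """Fast Gradient Sign Method against logistic regression.

    For binary cross-entropy, d(loss)/dx = (p - y) * w. Stepping each feature
    by epsilon in the sign of that gradient is, for a linear model, the
    worst-case perturbation within an L-infinity budget of epsilon.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

def robust_accuracy(w, b, X, Y, epsilon):
    """Accuracy after every input is perturbed by FGSM with budget epsilon."""
    correct = 0
    for x, y in zip(X, Y):
        x_adv = fgsm(w, b, x, y, epsilon)
        correct += int((predict_proba(w, b, x_adv) >= 0.5) == bool(y))
    return correct / len(Y)

if __name__ == "__main__":
    # Hypothetical trained weights and one correctly classified input.
    w, b = [2.0, -1.0], 0.0
    X, Y = [[0.3, 0.1]], [1]
    print(robust_accuracy(w, b, X, Y, epsilon=0.0))  # 1.0 (clean accuracy)
    print(robust_accuracy(w, b, X, Y, epsilon=0.2))  # 0.0: a 0.2 nudge flips the label
```

A sharp gap between clean and robust accuracy at small epsilon is the kind of weakness adversarial testing is meant to surface before a system is deployed.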

Source: Medium

Machine learning is a groundbreaking technology that promises to revolutionize industries and improve our lives significantly. However, to fully harness its potential and mitigate its risks, addressing the ethical dilemmas is imperative. As we forge ahead into an AI-driven future, striking the right balance between technological advancement and ethical responsibility will be essential to ensure that machine learning becomes a force for good, benefiting individuals and society as a whole. Embracing a human-centric approach, prioritizing fairness and transparency, and safeguarding against misuse are key pillars in building a responsible and ethical AI ecosystem. By doing so, we can pave the way for a future where machine learning empowers us while upholding our shared values and principles.