In the realm of artificial intelligence (AI) and machine learning, the notion of a "black box" has long been a concern.

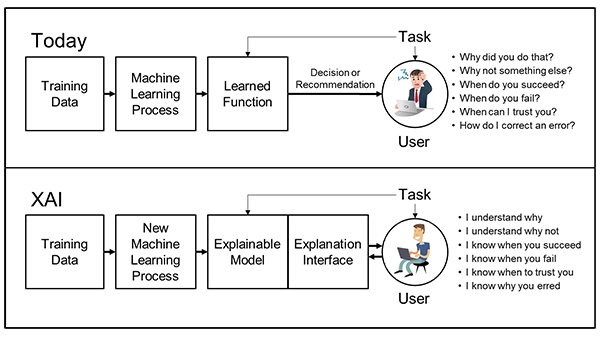

As AI systems become more sophisticated, they often make decisions that are difficult to understand or explain. Explainable AI (XAI) and Interpretable Machine Learning are two approaches that aim to shed light on the inner workings of AI models and make their decisions transparent and comprehensible to experts and non-experts alike.

Traditional AI models, particularly deep neural networks, have been criticized for their opacity. These models can provide accurate predictions, but the underlying logic behind their decisions remains obscure. This lack of transparency poses significant challenges, especially in critical domains where decisions impact human lives, such as healthcare, finance, and law enforcement.

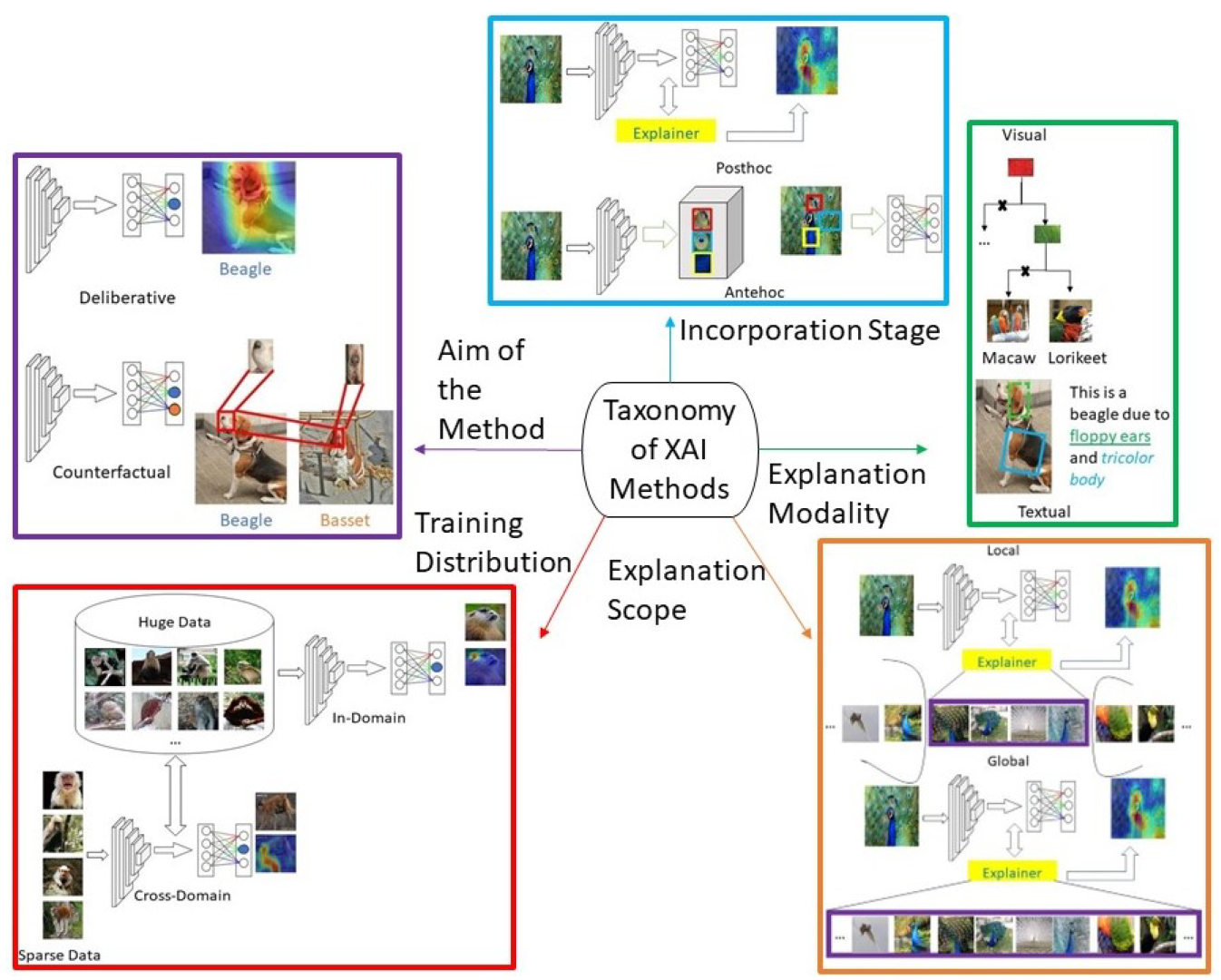

Explainable AI prioritizes transparency and interpretability in AI models. Its goal is to give human users understandable explanations of why an AI system arrived at a particular decision. XAI techniques range from generating textual explanations to highlighting the features and data points that most influenced a decision.
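One simple way to highlight influential features is perturbation (occlusion) analysis: replace one input feature at a time with a neutral baseline value and measure how much the prediction moves. The sketch below assumes a model that maps a list of numbers to a single score; `occlusion_attribution`, `black_box`, and its weights are hypothetical and chosen only for illustration. Libraries such as SHAP and LIME build more principled estimators on the same idea of probing a model with modified inputs.

```python
from typing import Callable, Sequence


def occlusion_attribution(
    predict: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Score each feature by how much the prediction drops when that
    feature alone is replaced by its baseline value."""
    original = predict(x)
    scores = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] = baseline[i]  # "remove" feature i, keep the others
        scores.append(original - predict(perturbed))
    return scores


# Hypothetical opaque model: the explainer only calls it, never inspects it.
def black_box(features: Sequence[float]) -> float:
    age, income, debt = features
    return 0.02 * age + 0.5 * income - 0.8 * debt + 0.1 * income * debt


scores = occlusion_attribution(black_box, x=[40.0, 3.0, 1.0], baseline=[0.0, 0.0, 0.0])
for name, s in zip(["age", "income", "debt"], scores):
    print(f"{name:>6}: {s:+.2f}")
```

For this input the scores come out at roughly 0.8, 1.8 and -0.5: income pushes the score up the most and debt pulls it down. Because `black_box` contains an income-debt interaction, the scores need not add up to the total change in prediction; Shapley-value methods such as SHAP are designed to enforce that additivity.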

Interpretable Machine Learning pursues the same goal from a different direction: rather than explaining an opaque model after the fact, it focuses on designing models that are inherently understandable. Unlike complex deep learning models, interpretable models such as linear models or shallow decision trees expose their decision-making process directly. This is achieved by using simpler algorithms, transparent features, and intuitive representations of data.
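An inherently interpretable model needs no separate explainer, because its parameters already are the explanation. A minimal sketch, assuming an additive linear scorecard with hand-set, hypothetical weights (the `LinearScorecard` class and the feature names are illustrative and not taken from any library): each feature's contribution is its weight times its value, and those contributions add up exactly to the score.

```python
from dataclasses import dataclass


@dataclass
class LinearScorecard:
    """A transparent additive model: score = bias + sum(weight * value)."""
    bias: float
    weights: dict[str, float]

    def contributions(self, x: dict[str, float]) -> dict[str, float]:
        # Each feature's share of the score is simply weight * value.
        return {name: w * x[name] for name, w in self.weights.items()}

    def predict(self, x: dict[str, float]) -> float:
        return self.bias + sum(self.contributions(x).values())


# Hypothetical weights, hand-set for illustration (not fitted to real data).
model = LinearScorecard(
    bias=-1.0,
    weights={"income": 0.5, "debt": -0.8, "years_employed": 0.1},
)
applicant = {"income": 3.0, "debt": 1.0, "years_employed": 5.0}

# List contributions from most to least influential, then the total score.
for name, c in sorted(model.contributions(applicant).items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>15}: {c:+.2f}")
print(f"{'score':>15}: {model.predict(applicant):+.2f}")
```

Here the explanation is exact by construction rather than estimated. The price is that a purely additive model cannot capture interactions between features unless they are added explicitly as new features, which is one face of the accuracy-versus-interpretability trade-off.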

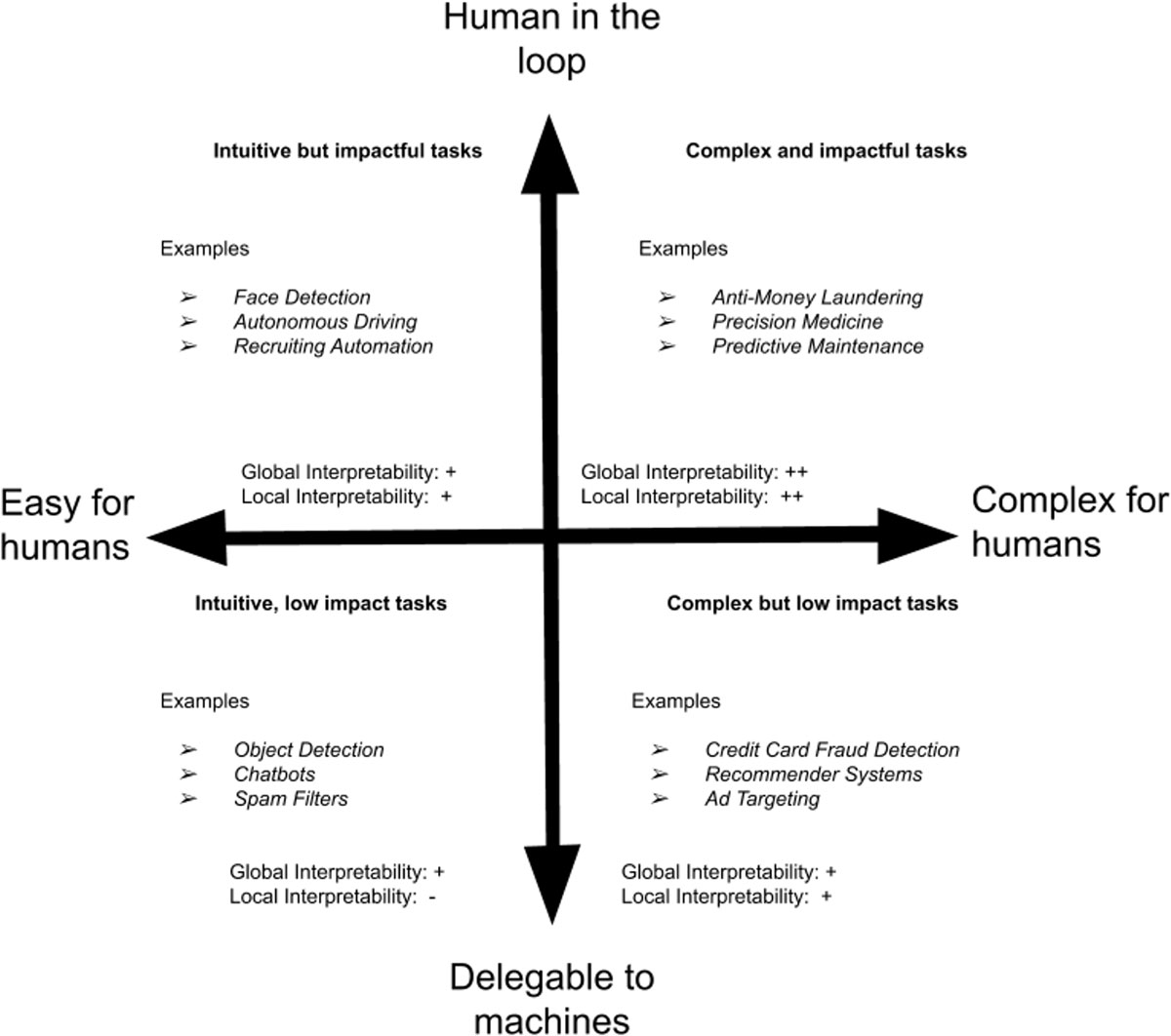

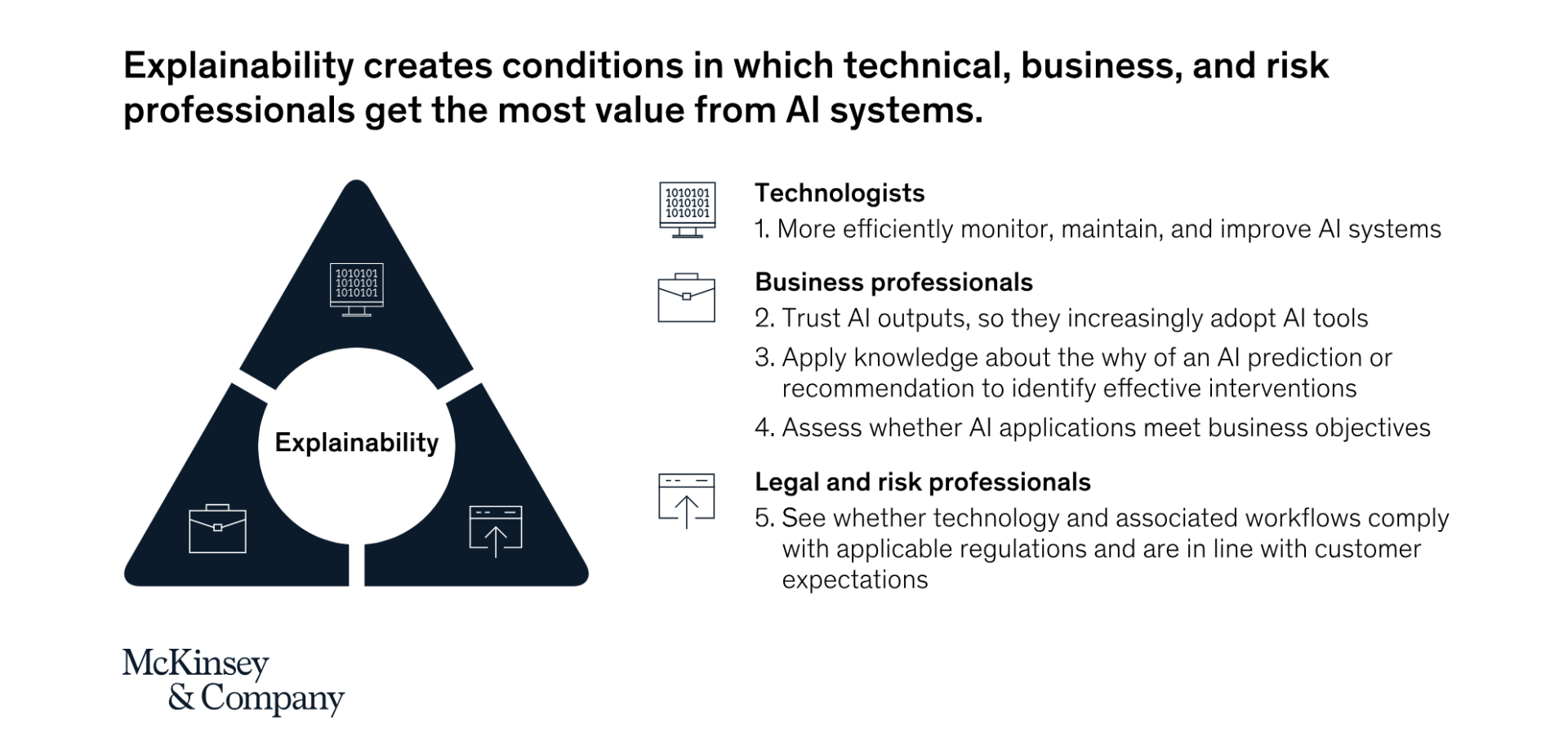

Explainable AI and interpretable machine learning are particularly relevant in domains where decision justifications are crucial. In healthcare, for example, doctors need to understand why an AI system recommended a specific treatment. In finance, analysts need to comprehend the factors driving investment predictions. Moreover, these concepts play a pivotal role in ensuring fairness, accountability, and compliance in AI systems.

While there's a push for transparency, it's important to strike a balance between model complexity and interpretability. Highly interpretable models might sacrifice predictive accuracy, while complex models might provide accurate predictions but lack transparency. Researchers and practitioners are working to find the sweet spot where models are both accurate and explainable.

Explainable AI and interpretable machine learning are dynamic fields, with ongoing research to develop better techniques and tools. Researchers are exploring ways to quantify interpretability and to create standardized methods for evaluating model transparency. Implementing XAI in real-world applications requires collaboration among domain experts, data scientists, and ethicists.

Explainable AI and Interpretable Machine Learning are catalysts for creating trustworthy and accountable AI systems. As AI becomes integrated into our daily lives, the ability to understand and justify AI decisions is paramount. These approaches offer the promise of illuminating the black box, ensuring that AI's potential is harnessed while maintaining human understanding and control. As researchers continue to push the boundaries of transparency, the future of AI will likely be characterized by models that not only make accurate predictions but also empower users with insights into how those predictions are made.