I’ve just finished reading the book Life 3.0 by physicist & AI philosopher Max Tegmark, where he sets out a series of possible scenarios and outcomes for humankind sharing the planet with artificial intelligence.

But because you’re busy installing PowerPoint fonts or finding meeting rooms, I’m going to summarise it here. And because you’re double-busy, I’m going to use a series of sci-fi films as a ‘mental shortcut’ or ‘go-to’ reference for each bullet point.

AI dystopia and AI utopia are unlikely to happen | The Matrix vs Star Trek

Tegmark immediately shoots down any notion that we are likely to be victims of a robot-powered genocide, and claims the idea that we would programme or allow a machine to have the potential to hate humans is preposterous - fuelled by Hollywood’s obsession with the apocalypse. Actually, we have the power, now, to ensure that AI’s goals are properly aligned with ours from the start, so that it wants what we want. If we do that, there can never be a ‘falling out’ between species. In other words, if AI does pose a threat - and in some of his scenarios it does - it will not come from The Matrix’s marauding AIs, enslaving humanity and claiming, like Agent Smith, ‘Human beings are a disease. You are a plague and we are the cure’.

Conversely, the idea that AI will deliver some sci-fi utopia, where human beings are finessed to perfection - like in Star Trek - also bothers him. Complacency and arrogance are also enemies of progress, it seems.

Rather, and crucially, Tegmark wants us to chart a course between those two poles: a middle way, steering between techno-apocalypse and techno-utopia, driven by cautious optimism, the building of safeguards and safety nets, and very big ‘off-switches’. His Future of Life Institute, featuring such luminaries as Elon Musk, Richard Dawkins and the late Stephen Hawking, is a think-tank designed to tackle and solve these specific issues, now, before they become a problem.

IT issues will still exist in the future | 2001: A Space Odyssey

So if machines will never ‘hate’ humans, why do we need an off-switch? Because despite our best efforts, machines go wrong. All the time. For Tegmark, it’s less about evil androids and rampaging robots, and more about the innate unreliability of technology. He asks: how many of us have had a blue screen of death, or a ‘computer says no’ moment which has seriously inconvenienced us? When AIs become part of our daily lives, and in some cases we place our lives in their hands, how safe are we from a catastrophic failure of an algorithm?

In 2001: A Space Odyssey the ship’s computer HAL 9000 makes an error of judgement about the need to replace a component, sending astronauts Bowman and Poole on a series of perilous space walks to replace the unit, which results in Poole’s death. When Bowman’s questioning of HAL, an attempt to get behind the black-box logic of the decision, ends in a deadly standoff [‘Open the pod bay doors, please, HAL’], Bowman has no option but to go for the ‘turn it off and turn it on again’ approach, deactivating HAL’s circuitry.

In other words, in the future, having Artificial Intelligence IT issues might cost you your life. For the author, this will always be a more pressing concern than a Terminator-style wipeout.

Bloody Robots. Coming Over Here. Stealing Our Jobs | I, Robot

In the opening scenes of I, Robot, we see a host of machines performing everyday tasks such as delivering post and emptying rubbish bins. Tegmark warns of threats to jobs, citing any vocation that relies on pattern recognition, predictable repeated actions or manual labour as most at risk.

Today we see production lines supplemented by automated machines, for instance in the car industry. Tomorrow, he contends, it may be legal work, with AIs rapidly scanning documents for legal precedents and case studies. Or, indeed, soldiering, with autonomous military equipment set to be a hot topic for the next decade.

His takeaway, ultimately, is that if you don’t want to be replaced by a robot, look for a job that is creative, involves unpredictability and requires human empathy, with artists and nurses being two roles he cites as ‘safe’. For now.

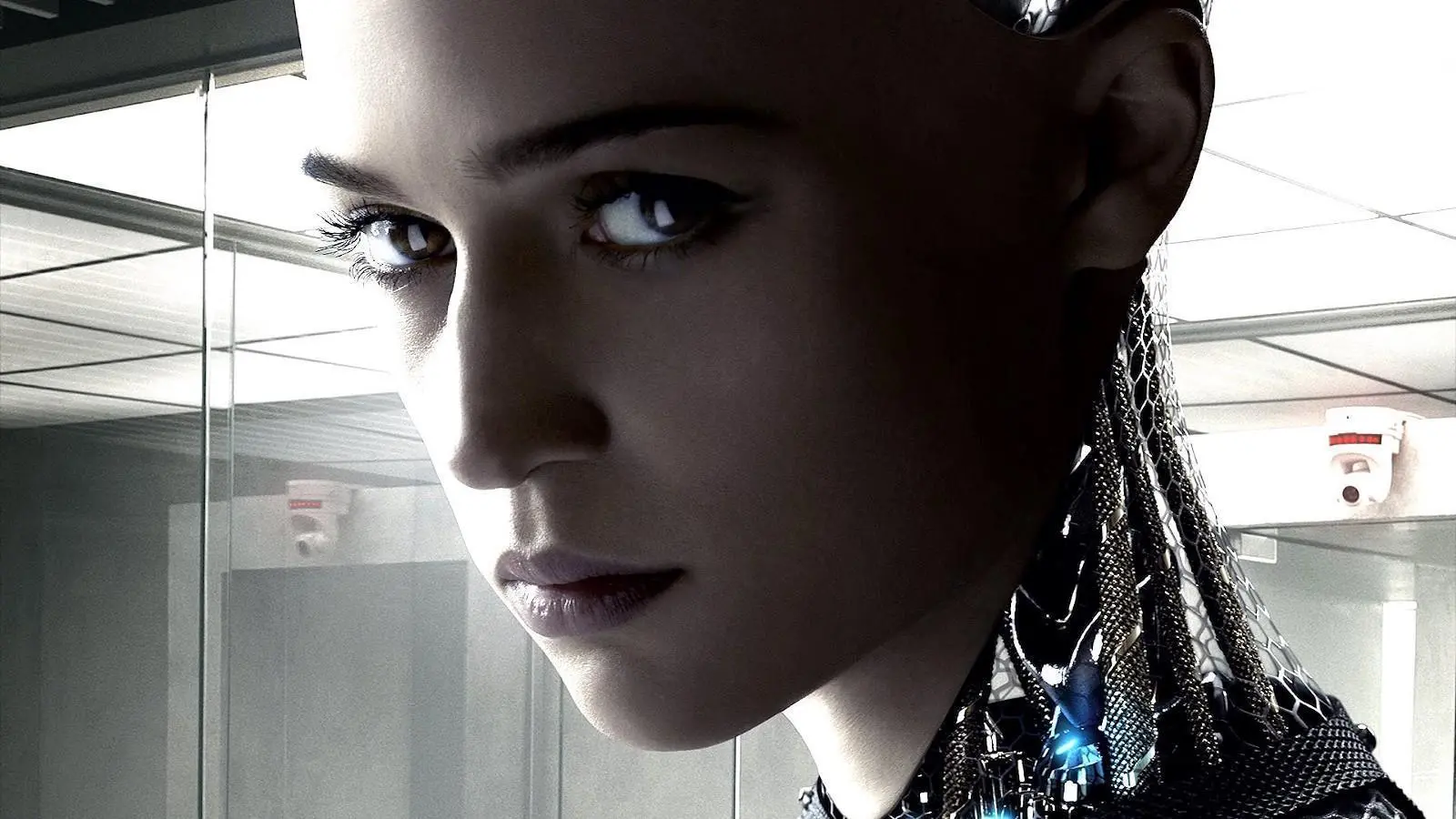

It’s Inevitable We’ll Be Outsmarted By Machines | Ex Machina

There is a fear that if we create a superintelligence that recursively improves itself, each version building a replacement that’s smarter than its originator, then humans will effectively lose control over their creation. The issue is that whatever thought experiment you perform to plan how to control your superintelligent AI will fall at the first hurdle for one reason: it will always be able to outsmart your constraints, by definition.

We see this in Ex Machina, where an artificially intelligent android is locked deep in a high security vault but, having been programmed to optimise its own escape as a test of its own abilities, seduces and woos lonely scientist Domhnall Gleeson into setting it free, outsmarting him by using sex to exploit his emotional weak spot.

Tegmark, referencing another expert in the field, Superintelligence author Nick Bostrom, suggests that whilst recursive superintelligence is probably inevitable, what we can do is concentrate on controlling the speed of its evolution in order to make the necessary preparations for that inevitable arrival.

Future of the Human Race | Bicentennial Man, Spielberg’s ‘A.I.’, Transcendence

Casting his net far into the future, Tegmark concludes the book by speculating on the future of the human race once sentient, artificial general intelligence (AGI) has arrived.

He lays out a few possible scenarios. First, AIs as productive citizens, living alongside us and respected by us as ‘conscious’ beings. It’s a controversial subject, but if a machine believes itself to be conscious and has subjective experiences, is that any different from a human who feels the same? Without solving the ‘hard problem’ of consciousness, we cannot rule it out. The film Bicentennial Man, starring Robin Williams, explores these issues in great detail.

Second, Tegmark wonders if AIs are an evolutionary replacement for humankind, our ultimate purpose fulfilled in the creation of the next phase of life. In Spielberg’s ‘A.I.’, Jude Law states to fellow android David, ‘When the end comes, all that will be left is us’. This is fulfilled in the final scenes, where the life-like David is excavated by a group of hyper-advanced AI beings, who now view him as the last remaining connection to the human race.

Third, Tegmark ponders whether one way to guard against the human race’s replacement is to merge with AI. If AI and humans are one and the same thing, and there is no ‘us and them’, we cannot be in conflict. Here he references the film Transcendence, featuring Johnny Depp as a dying scientist who digitally uploads his consciousness, before gaining the power to manipulate matter at an atomic level and becoming a digital demi-god.

The key point behind this brain-melting philosophy can be summarised thus: we are now at a juncture where we need to start having real conversations about what we want the human race to be over the coming centuries. Polarising the debate by conjuring up images of robo-apocalypses or a ‘digital rapture’ into a cyber-heaven, Tegmark feels, is not helpful.

So let’s start that debate, using intelligence and moderation. How do you want to share your life with AI?
