
The emergence of artificial intelligence (AI) has revolutionized various fields, ranging from manufacturing to healthcare and national security.

As the use and proliferation of AI continue to expand in societies worldwide, new challenges arise that require effective and responsible regulation of this powerful technology. The recent G7 Summit in Hiroshima shows how concerned advanced economies are about the fast pace of this technology, and its launch of the Hiroshima AI Process, in cooperation with the OECD and the Global Partnership on AI (GPAI), confirmed that concern.

Countries are grappling with the need to strike a delicate balance: promoting innovation and reaping the benefits of AI while ensuring that its deployment remains ethical, transparent, and aligned with societal values. The regulation of AI encompasses a broad spectrum of considerations, including data privacy, algorithmic fairness, accountability, and the impact of AI on employment and social dynamics.

This article examines the crucial question of how countries can regulate AI effectively, harnessing its potential while mitigating risks and safeguarding the public interest. By exploring key principles, regulatory approaches, and international collaborations, we aim to shed light on the path toward responsible and impactful AI regulation.

By building a comprehensive and forward-thinking regulatory environment, countries can not only address the challenges posed by AI but also foster trust, innovation, and sustainable development. Proactive and inclusive regulation can let AI drive economic growth, enhance public services, and ultimately create a future that benefits all of humanity.

Countries can take several key actions to regulate AI effectively:

National AI strategy: Develop a comprehensive national AI strategy that outlines the country's vision, objectives, and priorities regarding AI development, deployment, and regulation. This strategy should address both the opportunities and challenges associated with AI, including national security considerations.

Robust cybersecurity measures: Strengthen cybersecurity capabilities to protect critical infrastructure, data, and AI systems from cyber threats. Enhance monitoring, detection, and response mechanisms to promptly identify and mitigate potential attacks or breaches.

Regulations and standards: Establish clear legal frameworks, regulations, and standards that govern the development, deployment, and use of AI technologies. These regulations should address issues such as data privacy, bias mitigation, transparency, and accountability.

Risk assessment and impact analysis: Conduct comprehensive risk assessments and impact analyses of AI technologies to identify potential vulnerabilities, risks, and unintended consequences. Regularly evaluate the national security implications of AI systems and take appropriate measures to mitigate risks.

International cooperation and partnerships: Foster international cooperation and partnerships to address shared challenges and ensure harmonization of AI regulations and standards. Collaborate with other countries, international organizations, and industry stakeholders to exchange best practices, information, and intelligence.

Talent development and education: Invest in AI talent development and education to build a skilled workforce capable of understanding, developing, and effectively managing AI technologies. This includes training professionals in AI ethics, security, and governance.

Ethical guidelines and principles: Establish clear ethical guidelines and principles for the development and use of AI in national security contexts. Ensure adherence to ethical principles, such as transparency, fairness, human control, and respect for human rights, throughout AI deployments.

Red teaming and testing: Conduct rigorous testing, validation, and red teaming exercises to identify potential vulnerabilities and weaknesses in AI systems. Assess AI systems' robustness, resilience, and susceptibility to adversarial attacks.

Technology assessment and foresight: Establish mechanisms for continuous technology assessment and foresight to monitor AI advancements, emerging risks, and potential national security implications. Stay updated on AI-related research, trends, and developments to anticipate and respond effectively to evolving threats.

Public-private collaboration: Encourage collaboration between governments, academia, industry, and civil society to address AI-related national security challenges collectively. Foster public-private partnerships to leverage expertise, resources, and knowledge for developing effective AI governance frameworks.

Independent oversight and accountability: Establish independent oversight bodies or mechanisms to ensure accountability and transparency in AI deployments for national security purposes. These bodies can provide checks and balances, conduct audits, and address any concerns related to AI systems' compliance with regulations and ethical guidelines.

Foster industry standards and best practices: Governments can collaborate with industry stakeholders to establish standards and best practices for the development and use of AI. These standards can promote fairness, transparency, and accountability in AI systems and encourage responsible behavior among AI developers and users.

Encourage public-private partnerships: Governments can foster collaborations between the public and private sectors to develop AI regulations and guidelines. This partnership can leverage the expertise of both sectors to create effective and balanced regulatory frameworks that address the needs of various stakeholders.

Invest in research and development: Governments can allocate resources to support research and development efforts in AI regulation. This investment can enable the development of advanced tools, methodologies, and technologies that assist in monitoring, assessing, and regulating AI systems.

Enhance public awareness and education: Governments can launch awareness campaigns and educational initiatives to inform the public about AI and its implications. This can help citizens understand the benefits, risks, and ethical considerations associated with AI and make informed decisions regarding its use.

Continuously update regulations: Given the rapid evolution of AI, countries should regularly review and update their regulations to keep pace with technological advancements. This flexibility ensures that regulations remain relevant, adaptive, and effective in addressing emerging challenges.
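One common way to make the risk assessment action above concrete is a likelihood-by-impact risk matrix. The sketch below is a minimal illustration, not a method prescribed by any regulator: it assumes each risk is rated on 1 to 5 scales, multiplies the two ratings, and maps the score to tiers using hypothetical cut-offs (`TIER_THRESHOLDS`). The `Risk` class, `prioritize` function, and example risks are all illustrative names.

```python
from dataclasses import dataclass

# Hypothetical tier cut-offs on a 1-25 score (likelihood x impact, each rated 1-5).
# These values are illustrative assumptions, not taken from any regulation.
TIER_THRESHOLDS = [(15, "high"), (8, "medium"), (1, "low")]


@dataclass
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    def score(self) -> int:
        # Reject ratings outside the assumed 1-5 scale before combining them.
        for value in (self.likelihood, self.impact):
            if not 1 <= value <= 5:
                raise ValueError(f"rating out of range for {self.name!r}: {value}")
        return self.likelihood * self.impact

    def tier(self) -> str:
        s = self.score()
        # Thresholds are checked from highest to lowest; the first match wins.
        for threshold, label in TIER_THRESHOLDS:
            if s >= threshold:
                return label
        return "low"  # not reached after validation, kept as a safe default


def prioritize(risks: list[Risk]) -> list[tuple[str, int, str]]:
    """Return (name, score, tier) rows, highest score first."""
    rows = [(r.name, r.score(), r.tier()) for r in risks]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical risk register for an AI system under review.
    register = [
        Risk("training-data privacy leak", likelihood=3, impact=4),
        Risk("biased eligibility decisions", likelihood=4, impact=5),
        Risk("model outage", likelihood=2, impact=2),
    ]
    for name, score, tier in prioritize(register):
        print(f"{tier:<6} {score:>2}  {name}")
```

A matrix like this only ranks risks; the tier boundaries and rating scales are policy choices that each country or oversight body would need to set for itself.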

By implementing these measures, countries can better protect themselves against potential risks and challenges associated with AI technologies, ensuring that national security interests are safeguarded while maximizing the benefits AI can offer.

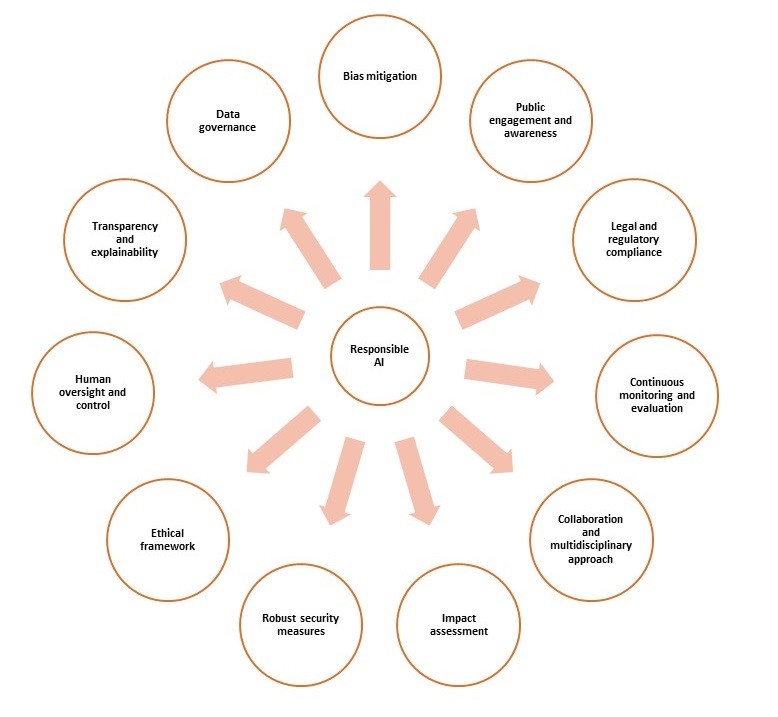

Developing responsible AI involves implementing ethical considerations and safeguards throughout the entire lifecycle of AI systems. Here are some key principles and practices for creating responsible AI:

Ethical framework: Establish an ethical framework that guides the development and deployment of AI systems. This framework should prioritize fairness, transparency, accountability, and respect for human rights.

Data governance: Ensure the responsible collection, storage, and usage of data. Promote data privacy and protection measures, including obtaining informed consent and anonymizing or de-identifying sensitive data.

Bias mitigation: Mitigate biases in AI systems by carefully curating and diversifying training datasets. Regularly evaluate and audit AI models for potential biases, and take corrective actions to address any identified biases.

Transparency and explainability: Strive for transparency in AI algorithms and decision-making processes. Make efforts to explain how AI systems arrive at their conclusions or recommendations, allowing for human comprehension and oversight.

Human oversight and control: Maintain human involvement and oversight in critical decision-making processes. Ensure that humans can intervene and override AI systems when necessary to prevent harmful outcomes or to correct errors.

Robust security measures: Implement strong cybersecurity measures to protect AI systems from unauthorized access, manipulation, or exploitation. Regularly update and patch AI systems to address security vulnerabilities.

Impact assessment: Conduct thorough impact assessments to evaluate potential risks and consequences of AI deployment. Assess the societal, economic, and environmental impacts of AI systems before deployment, and mitigate any identified risks.

Collaboration and multidisciplinary approach: Foster collaboration among AI developers, policymakers, ethicists, social scientists, and other stakeholders. Encourage interdisciplinary discussions and involve diverse perspectives to address the societal implications of AI.

Continuous monitoring and evaluation: Regularly monitor and evaluate AI systems for ethical implications, performance, and unintended consequences. Implement mechanisms for ongoing feedback, assessment, and improvement of AI models and algorithms.

Legal and regulatory compliance: Adhere to relevant laws, regulations, and international norms related to AI development and deployment. Engage in policy discussions and contribute to the establishment of legal frameworks that govern the responsible use of AI.

Public engagement and awareness: Promote public awareness and understanding of AI technologies and their implications. Encourage public dialogue, transparency, and engagement in shaping AI policies and practices.
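The bias-mitigation practice above calls for regularly auditing AI models for bias. One simple audit metric, among many and not one the principles above single out, is the demographic parity difference: the gap between the highest and lowest rates of favorable outcomes across groups. The sketch below assumes binary predictions (1 = favorable), a single group attribute, and a hypothetical `TOLERANCE` of 0.1 chosen only for illustration; the function names are also illustrative.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of favorable (1) predictions per group."""
    if len(predictions) != len(groups):
        raise ValueError("predictions and groups must have the same length")
    if not predictions:
        raise ValueError("need at least one prediction")
    favorable = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        favorable[group] += int(pred == 1)
    return {g: favorable[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest minus smallest selection rate across groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical tolerance; a real threshold depends on context and applicable law.
TOLERANCE = 0.1

if __name__ == "__main__":
    # Hypothetical model outputs for ten applicants in two groups.
    preds = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
    groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]
    gap = demographic_parity_difference(preds, groups)
    print(selection_rates(preds, groups))
    status = "flag for review" if gap > TOLERANCE else "within tolerance"
    print(f"parity gap = {gap:.2f}: {status}")
```

A gap above the tolerance does not by itself prove unfairness; it signals that the model should be investigated and, where warranted, corrected, as the bias-mitigation principle describes.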

By following these principles and practices, developers and stakeholders can work towards creating responsible AI systems that prioritize human well-being, fairness, and societal benefits while minimizing risks and unintended consequences.

Regulating AI requires a comprehensive and collaborative approach that balances innovation with ethical considerations. By establishing clear legal frameworks, fostering responsible AI practices, promoting education and awareness, and encouraging international cooperation, countries can shape the future of AI in a manner that benefits society while safeguarding human values and interests.

Ahmed Banafa is an expert in new tech with appearances on ABC, NBC, CBS, FOX TV and radio stations. He served as a professor, academic advisor and coordinator at well-known American universities and colleges. His research has been featured in Forbes, MIT Technology Review, ComputerWorld and Techonomy. He has published over 100 articles about the internet of things, blockchain, artificial intelligence, cloud computing and big data. His research papers are used in many patents, numerous theses and conferences. He is also a guest speaker at international technology conferences. He is the recipient of several awards, including the Distinguished Tenured Staff Award, Instructor of the Year and a Certificate of Honor from the City and County of San Francisco. Ahmed studied cyber security at Harvard University. He is the author of the book Secure and Smart Internet of Things Using Blockchain and AI.
