While AI-powered devices and technologies have become essential parts of our lives, machine intelligence still has areas where substantial improvements could be made.

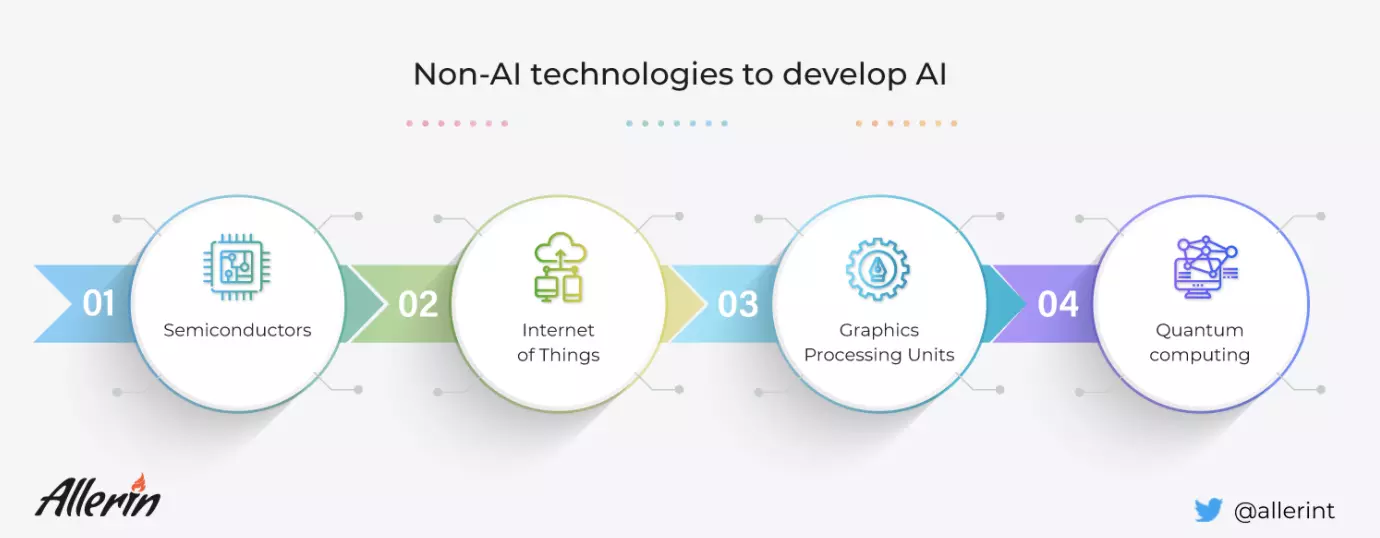

To fill these metaphorical gaps, non-AI technologies can come in handy.

Artificial intelligence (AI) is an ‘emerging computer technology with synthetic intelligence.’ It is often said that the AI applications we see in daily life are just the tip of the iceberg with regard to its powers and abilities. The field needs to keep evolving to overcome common AI limitations. AI usually consists of the following subfields (others, such as cognitive computing, are also commonly included, but the ones below are present in nearly all AI systems):

Non-AI technologies that make AI more advanced (or, at the very least, reduce AI limitations) generally enhance one of these components or positively influence its input, processing, or output capacity.

Semiconductors and AI systems commonly go hand in hand. Several companies manufacture semiconductors specifically for AI-based applications, and established semiconductor companies run dedicated programs to create AI chips or embed AI technology in their product lines. A prominent example is NVIDIA, whose semiconductor-based Graphics Processing Units (GPUs) are heavily used in data center servers for AI training.

Structural modifications in semiconductors can make AI-powered circuits use data more efficiently. Changes in chip design can increase the speed at which data moves in and out of an AI system's memory, and beyond raw speed, the memory systems themselves can be made more efficient. Several ideas use semiconductor design to improve how AI-powered systems handle data. One involves sending data to and from neural networks only when needed, instead of constantly transmitting signals across the network. Another is the use of non-volatile memory in AI-related semiconductor designs. Non-volatile memory chips retain stored data even without power, so merging non-volatile memory with processing logic can create specialized processors that meet the growing demands of newer AI algorithms.
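The idea of sending data only when needed can be illustrated in software with a simple send-on-change rule: a value is transmitted only when it has moved far enough from the last value that was actually sent. This is a software analogy under stated assumptions, not the chip-level mechanism described above; the function name `send_on_change` and the `threshold` parameter are hypothetical.

```python
from typing import Iterable, List, Optional, Tuple


def send_on_change(readings: Iterable[float], threshold: float) -> List[Tuple[int, float]]:
    """Return the (index, value) pairs that would actually be transmitted.

    Hypothetical send-on-change rule: the first reading is always sent, and
    every later reading is sent only if it differs from the last *sent*
    value by more than `threshold`. Everything else is suppressed.
    """
    sent: List[Tuple[int, float]] = []
    last_sent: Optional[float] = None
    for i, value in enumerate(readings):
        if last_sent is None or abs(value - last_sent) > threshold:
            sent.append((i, value))
            last_sent = value
    return sent


# Six readings, but only three are worth sending.
print(send_on_change([20.0, 20.1, 20.05, 21.5, 21.6, 19.0], threshold=0.5))
# [(0, 20.0), (3, 21.5), (5, 19.0)]
```

Comparing against the last sent value, rather than the immediately previous reading, means a slow drift still gets reported once it accumulates past the threshold.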

Although design improvements in semiconductors can meet the demands of AI applications, they can also cause production issues. AI chips are generally larger than standard chips because of their massive memory requirements, so they cost semiconductor companies more to manufacture, and building dedicated AI chips does not always make economic sense. One way to resolve this issue is a general-purpose AI platform. Chip vendors can enhance such platforms with input/output sensors and accelerators, and manufacturers can then adapt the platforms as application requirements change. This flexibility can make general-purpose AI platforms cost-efficient for semiconductor companies while still reducing AI limitations. General-purpose platforms are the future of the nexus between AI-based applications and improved semiconductors.

Introducing AI into IoT improves the functionality of both and helps each resolve the other's shortcomings. IoT combines sensors, software and connectivity technologies so that multiple devices can communicate and exchange data with each other and with other digital systems over the internet. These devices range from everyday household objects to complex industrial machines. In essence, IoT reduces the need for human involvement in interconnected devices that observe and record information about a situation or their surroundings. Devices such as cameras, sensors and sound detectors can record data on their own, but raw recordings are of limited use until they are interpreted. This is where AI comes in. Machine learning works best when its input data comes from sources as broad as possible, and IoT, with its host of connected devices, provides wider datasets for AI to learn from.

To get the most out of IoT's vast reserves of data, organizations can build custom machine learning models. Because IoT gathers data from many devices and presents it in an organized format through user interfaces, data experts can efficiently integrate it with the machine learning component of an AI system. The combination benefits both sides: AI obtains large amounts of raw data to process from its IoT counterpart, and in return it quickly finds patterns and surfaces valuable insights from masses of unclassified data. AI's ability to automatically detect patterns and anomalies in scattered information is supplemented by IoT's sensors and devices. With IoT generating and streamlining the information, AI can process readings tied to varied quantities such as temperature, pressure, humidity and air quality.
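As a rough illustration of the anomaly detection described above, the sketch below flags sensor readings that sit unusually far from that sensor's average, using a z-score (distance from the mean measured in standard deviations). This is a simple statistical stand-in, not a trained machine learning model; the sensor names, the `find_anomalies` function and the default limit of 2.0 are assumptions chosen for illustration.

```python
import statistics
from typing import Dict, List


def find_anomalies(readings: Dict[str, List[float]], z_limit: float = 2.0) -> Dict[str, List[int]]:
    """For each sensor, return the indices of readings whose z-score exceeds z_limit.

    z-score = |reading - mean| / sample standard deviation of that sensor's readings.
    """
    anomalies: Dict[str, List[int]] = {}
    for sensor, values in readings.items():
        # At least two readings are needed to estimate a spread.
        if len(values) < 2:
            anomalies[sensor] = []
            continue
        mean = statistics.mean(values)
        stdev = statistics.stdev(values)
        if stdev == 0:  # all readings identical: nothing stands out
            anomalies[sensor] = []
            continue
        anomalies[sensor] = [
            i for i, v in enumerate(values) if abs(v - mean) / stdev > z_limit
        ]
    return anomalies


# Hypothetical readings from two IoT sensors.
readings = {
    "temperature": [21.0, 21.2, 20.9, 21.1, 35.0, 21.0, 20.8],
    "humidity": [45.0, 46.0, 44.5, 45.5],
}
print(find_anomalies(readings))  # {'temperature': [4], 'humidity': []}
```

With only a handful of readings per sensor, a single outlier also inflates the standard deviation, so real deployments typically use longer history windows or learned models.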

In recent years, several large technology companies have built their own platforms combining AI and IoT, both to gain a competitive edge in their sectors and to address AI's limitations. Google Cloud IoT, Azure IoT and AWS IoT are well-known examples of this trend.

With AI’s growing ubiquity, GPUs have transformed from graphics-only components into an integral part of deep learning and computer vision workloads. In fact, GPUs are often described as the workhorses of modern AI, filling the role that the CPU fills in a regular computer. Every system needs processor cores for its computations, and GPUs contain far more cores than standard CPUs. Each GPU core is simpler than a CPU core, but together they can execute thousands of similar operations in parallel, which suits the matrix arithmetic at the heart of deep learning. Deep learning also handles massive amounts of data, and a GPU's parallel processing power and high memory bandwidth can accommodate these requirements comfortably.
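A back-of-the-envelope model shows why core count matters for this kind of work. Assuming every operation is independent and takes the same time on any core, a job of N operations on P cores needs roughly ceil(N / P) rounds. The core counts below are hypothetical round numbers, and the model deliberately ignores clock speed, memory bandwidth and the fact that an individual GPU core is slower than a CPU core.

```python
def rounds_needed(independent_ops: int, cores: int) -> int:
    """Idealized number of parallel rounds to finish `independent_ops` equal,
    independent operations on `cores` cores, i.e. ceil(independent_ops / cores)."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    if independent_ops < 0:
        raise ValueError("independent_ops must be non-negative")
    # Integer ceiling division avoids floating-point rounding.
    return (independent_ops + cores - 1) // cores


# Element-wise work on a 1000 x 1000 matrix: one million independent operations.
ops = 1_000 * 1_000
print(rounds_needed(ops, cores=16))      # 62500 (hypothetical CPU core count)
print(rounds_needed(ops, cores=10_000))  # 100 (hypothetical GPU core count)
```

The gap only materializes when the work really is made of many independent, similar operations, which is why deep learning maps so well onto GPUs and branch-heavy sequential code does not.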

Thanks to this computational power, GPUs are commonly used to train AI and deep learning models, sometimes several at once. As noted earlier, higher memory bandwidth gives GPUs a computing edge over regular CPUs, so AI systems can take in large datasets that would overwhelm standard CPUs and other processors. GPUs also relieve the main system memory. CPUs have relatively few cores, so a big, diverse job has to be broken into many largely sequential steps spanning numerous clock cycles. Discrete GPUs, by contrast, come with their own dedicated VRAM (Video Random Access Memory), so the primary processor's memory is not weighed down by the data the GPU is working on. Deep learning requires large datasets: while technologies such as IoT can provide a wider spectrum of information and semiconductor chips can regulate data usage across AI systems, GPUs provide the fuel in the form of computational power and dedicated memory. As a result, GPUs reduce AI’s limitations regarding processing speed.
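Because a GPU's dedicated memory is finite, large datasets are usually fed to it in mini-batches sized to fit. The sketch below shows only the arithmetic of that idea; it counts input data alone, whereas in real training the model's weights, activations and gradients also occupy VRAM. The function names `batch_size_for` and `batches` and the byte figures are hypothetical.

```python
from typing import Iterator, Sequence, TypeVar

T = TypeVar("T")


def batch_size_for(memory_budget_bytes: int, bytes_per_sample: int) -> int:
    """Largest number of samples whose input data fits in the memory budget."""
    if bytes_per_sample <= 0:
        raise ValueError("bytes_per_sample must be positive")
    size = memory_budget_bytes // bytes_per_sample
    if size == 0:
        raise ValueError("a single sample does not fit in the budget")
    return size


def batches(data: Sequence[T], size: int) -> Iterator[Sequence[T]]:
    """Yield consecutive slices of at most `size` items, in order."""
    for start in range(0, len(data), size):
        yield data[start:start + size]


size = batch_size_for(memory_budget_bytes=1_000, bytes_per_sample=64)
print(size)                                              # 15
print([len(b) for b in batches(list(range(40)), size)])  # [15, 15, 10]
```

Streaming the dataset batch by batch lets a dataset far larger than VRAM pass through the GPU, at the cost of moving each batch across the memory bus.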

On the surface, quantum computing resembles traditional computing. The main difference is its use of the quantum bit (qubit), which, unlike a classical bit, can exist in a superposition of 0 and 1 at the same time. Quantum circuits execute tasks much like regular logic circuits, but they add quantum phenomena such as entanglement and interference, which, for certain classes of problems, may allow calculations that are impractical even for today's supercomputers.
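Superposition and interference can be made concrete with a tiny classical simulation that stores qubits as lists of complex amplitudes, where the squared magnitude of each amplitude is the probability of measuring that outcome. This is a textbook toy model, not how quantum hardware works internally, and the function names are hypothetical. It applies a Hadamard gate to show superposition and interference, then uses the tensor (Kronecker) product to show that the joint state of n qubits needs 2^n amplitudes, one reason classical machines struggle to simulate large quantum systems.

```python
import math
from typing import List

State = List[complex]  # one amplitude per basis state


def hadamard(qubit: State) -> State:
    """Apply a Hadamard gate to a single-qubit state [amp_0, amp_1]."""
    a, b = qubit
    s = 1 / math.sqrt(2)
    return [s * (a + b), s * (a - b)]


def probabilities(state: State) -> List[float]:
    """Measurement probability of each basis state (|amplitude|^2)."""
    return [abs(amp) ** 2 for amp in state]


def kron(a: State, b: State) -> State:
    """Tensor (Kronecker) product: the joint state of two independent registers."""
    return [x * y for x in a for y in b]


zero: State = [1 + 0j, 0j]            # the classical bit value 0
plus = hadamard(zero)                 # equal superposition of 0 and 1
print(probabilities(plus))            # roughly [0.5, 0.5]
print(probabilities(hadamard(plus)))  # roughly [1.0, 0.0]: the amplitudes for 1 cancel (interference)

register: State = [1 + 0j]
for n in range(1, 4):
    register = kron(register, plus)
    print(n, len(register))           # 1 2, then 2 4, then 3 8
```

Applying the same gate twice returning the qubit to 0 is interference at work: the two paths leading to 1 carry opposite signs and cancel.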

Quantum computing allows AI systems to draw information from specialized quantum datasets. To do this, quantum computing systems use multidimensional arrays of numbers called quantum tensors, which are then used to build massive datasets for the AI to process. Quantum neural network models are deployed to find patterns and anomalies within these datasets. Most importantly, quantum computing may enhance the quality and precision of AI’s algorithms. Quantum computing can reduce common AI limitations in the following ways:

In short, AI can be advanced by increasing the volume of its input information (through IoT), improving its data usage (through semiconductors), increasing its computing power (through GPUs) or improving every aspect of its operations (through quantum computing). Beyond these, other technologies and concepts may also become part of AI’s evolution in the future. More than six decades after its birth, AI is more relevant than ever in nearly every field. Wherever it goes from here, AI’s next evolutionary phase promises to be intriguing.

Naveen is the Founder and CEO of Allerin, a software solutions provider that delivers innovative and agile solutions that help automate, inspire and impress. He is a seasoned professional with more than 20 years of experience, including extensive work customizing open source products to optimize the cost of large-scale IT deployments. He is currently working on Internet of Things solutions with Big Data Analytics. Naveen completed his programming qualifications at various Indian institutes.
