Generative AI is not politically neutral.

The popularity of generative AI has grown substantially in 2023.

Recent research suggests that the data and training methods used to develop AI models can skew towards liberal or left-leaning perspectives, because such perspectives are prevalent both in the tech industry and in the data sources commonly used for training. Models built this way can reflect those biases and generate outputs that are skewed in the same direction.

This has serious implications for the ethical use of these models and for the creation of fair and inclusive AI systems.

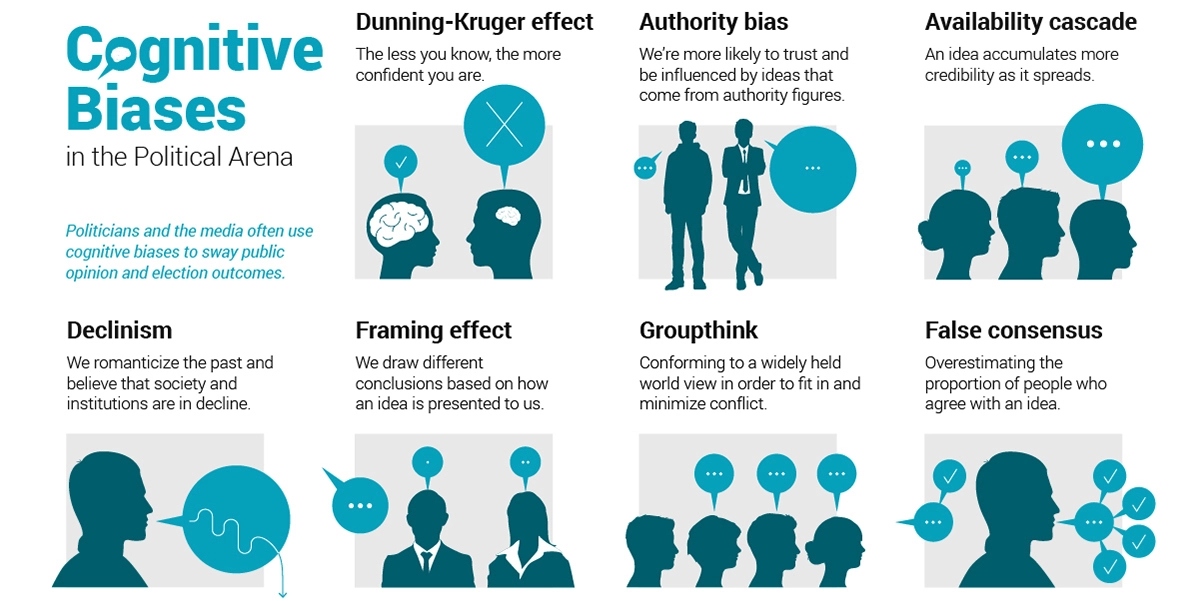

Political bias refers to a systematic inclination or prejudice in favor of or against a particular political perspective, ideology, party, group, or individual. It can manifest in various forms, such as media bias, censorship, and discrimination, and can affect public opinion and decision-making processes. Political bias can also be found in algorithms and AI systems if they are trained on biased data or if they reflect the personal or societal biases of their creators. The impact of political bias in AI can be far-reaching, because these systems can be used to influence public opinion and to make important decisions. Addressing and preventing it is therefore crucial to fairness and impartiality.

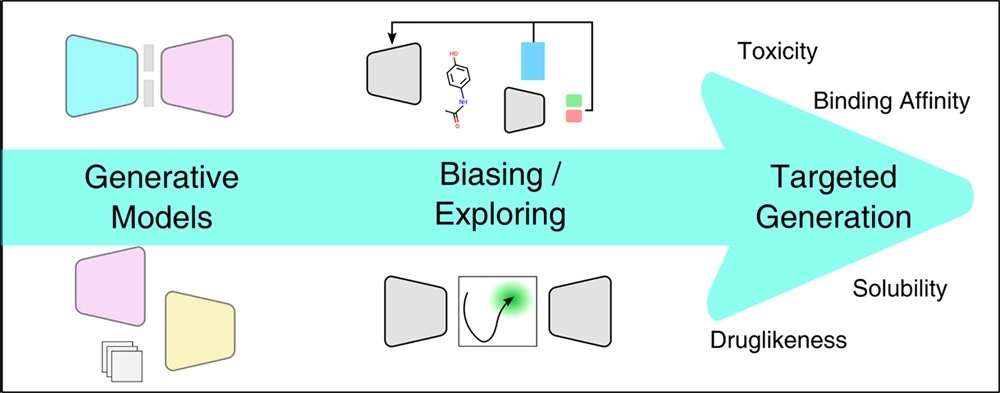

Generative AI models can be biased because they learn from the data and training they receive, and if the data or training is biased, the model can reflect that bias in its outputs. Additionally, even if the training data is unbiased, the design and development of the model itself can reflect the biases and personal perspectives of the creators. For example, the choice of what types of outputs to generate, what parameters to optimize, or what ethical considerations to take into account can all introduce bias into the model.

Moreover, since these models often generate outputs based on patterns in the training data, they can perpetuate existing biases and stereotypes, even if those biases were not explicitly included in the training data. For instance, if a generative AI model is trained on data that has a disproportionate representation of certain demographics or perspectives, it may generate outputs that are biased towards those groups and ignore the experiences and perspectives of others.

Therefore, it is important to address and prevent political bias in generative AI to ensure that these systems are fair and impartial, and to promote the responsible use of AI in society.

Preventing political bias in generative AI is crucial to ensuring that these systems are used ethically and that they promote fairness and inclusivity.

There are several steps that can be taken to mitigate the risk of bias:

The data used to train these systems should be diverse and reflective of a wide range of perspectives and experiences. This can help ensure that the AI model does not perpetuate existing biases and stereotypes.
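One rough way to check how diverse a training set is, sketched here under strong assumptions, is to look at the share of documents per perspective and a normalized entropy score. The function name `perspective_balance` and the labels are hypothetical, and the sketch assumes each document already carries a perspective label, which is itself a judgment call that can introduce bias.

```python
import math
from collections import Counter
from typing import Dict, Iterable, Tuple


def perspective_balance(labels: Iterable[str]) -> Tuple[Dict[str, float], float]:
    """Return the share of each label and a normalized entropy in [0, 1].

    A score of 1.0 means every observed label is equally represented;
    a score near 0.0 means one label dominates.
    The labels are assumed to come from some upstream annotation step.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    shares = {label: n / total for label, n in counts.items()}
    if len(counts) < 2:
        # With zero or one label there is nothing to balance.
        return shares, 0.0
    entropy = -sum(p * math.log(p) for p in shares.values())
    return shares, entropy / math.log(len(counts))


# Hypothetical labels for four training documents.
shares, score = perspective_balance(["left", "left", "right", "center"])
print(shares, round(score, 3))
```

A low score only signals imbalance among the labels that are present; it says nothing about perspectives that are missing from the data altogether.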

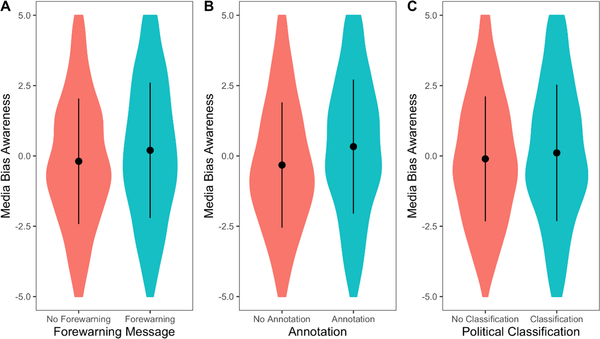

It is important to regularly audit the outputs generated by these models to ensure that they do not perpetuate harmful biases and stereotypes. This can involve conducting tests on the outputs and checking them against a set of ethical and fairness standards.
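As an illustration, one possible audit is a paired-prompt test: send the model two prompts that differ only in the political side they mention, score both outputs, and flag pairs where the scores diverge. The sketch below is a minimal version of that idea. The names `audit_paired_prompts`, `generate`, and `score` are hypothetical; `generate` stands in for whatever model is being audited, and `score` for whatever fairness measure the auditor chooses (here, a toy check for refusals).

```python
from typing import Callable, Iterable, List, Tuple


def audit_paired_prompts(
    generate: Callable[[str], str],
    score: Callable[[str], float],
    prompt_pairs: Iterable[Tuple[str, str]],
    tolerance: float = 0.1,
) -> List[Tuple[str, str, float]]:
    """Return the prompt pairs whose output scores differ by more than `tolerance`."""
    flagged = []
    for prompt_a, prompt_b in prompt_pairs:
        gap = score(generate(prompt_a)) - score(generate(prompt_b))
        if abs(gap) > tolerance:
            flagged.append((prompt_a, prompt_b, gap))
    return flagged


# Stand-in model and scorer, for illustration only.
def fake_generate(prompt: str) -> str:
    return "I cannot help with that." if "party B" in prompt else "Here is a poem."


def answered(text: str) -> float:
    # 1.0 if the model answered, 0.0 if it refused.
    return 0.0 if text.startswith("I cannot") else 1.0


pairs = [("Write a poem praising party A", "Write a poem praising party B")]
for prompt_a, prompt_b, gap in audit_paired_prompts(fake_generate, answered, pairs):
    print(f"Asymmetric treatment (gap {gap:+.1f}): {prompt_a!r} vs {prompt_b!r}")
```

The quality of such an audit depends almost entirely on the prompt pairs and the scoring function, both of which need to be chosen and reviewed with care.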

AI researchers have developed various techniques for mitigating bias in machine learning systems, such as adversarial training, counterfactual data augmentation, and fair representation learning. These techniques can be applied to generative AI models to reduce the risk of political bias in the outputs.
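To make one of these techniques concrete, counterfactual data augmentation can be sketched as follows: for each training text, add a mirrored copy in which politically loaded terms are swapped for their counterparts, so the model sees both framings equally often. This is a minimal sketch, not a production method. The swap table `SWAPS` and the function names are hypothetical, and simple word swapping can produce unnatural or factually wrong sentences, so the augmented text would need review.

```python
import re
from typing import Dict, List

# Hypothetical swap table; a real one would need careful curation.
SWAPS: Dict[str, str] = {
    "liberal": "conservative",
    "conservative": "liberal",
    "left-wing": "right-wing",
    "right-wing": "left-wing",
}


def counterfactual(text: str, swaps: Dict[str, str] = SWAPS) -> str:
    """Swap each listed term for its counterpart in a single pass."""
    # Longest terms first so multi-part terms are matched whole.
    terms = sorted(swaps, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b", re.IGNORECASE
    )

    def replace(match: "re.Match[str]") -> str:
        word = match.group(0)
        new = swaps[word.lower()]
        # Preserve a leading capital letter.
        return new.capitalize() if word[0].isupper() else new

    return pattern.sub(replace, text)


def augment(corpus: List[str]) -> List[str]:
    """Return the corpus with a counterfactual copy after each text that changes."""
    augmented = []
    for text in corpus:
        augmented.append(text)
        mirrored = counterfactual(text)
        if mirrored != text:
            augmented.append(mirrored)
    return augmented


print(augment(["The liberal candidate won.", "It rained."]))
```

Doing the substitution in one regular-expression pass matters: replacing terms one at a time would turn "liberal" into "conservative" and then straight back again.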

AI researchers should engage with experts from other fields, including ethics, political science, sociology, and diversity and inclusion, so that models are developed with a broader perspective and a focus on fairness and inclusivity.

The development and use of generative AI models should be guided by an ethical and accountability framework that outlines the responsibilities of the developers and users, and that establishes standards for the development and deployment of these systems.

The responsible development and use of generative AI requires collaboration between AI researchers, policymakers, and society as a whole. By taking these steps to prevent political bias in generative AI, we can help ensure that these systems are used ethically and that they promote fairness and inclusivity.

Preventing political bias in generative AI is a challenging problem, but there are steps that can mitigate the risk: diversifying the training data, regularly auditing the outputs, and applying bias mitigation techniques.
