
Artificial Intelligence (AI) has emerged as a transformative force, affecting many aspects of our lives, from healthcare and transportation to finance and entertainment.

While AI brings immense potential for innovation and progress, it also raises critical ethical, legal, and societal concerns. As AI technologies continue to advance, it becomes increasingly imperative to establish regulatory frameworks to govern their development, deployment, and use.

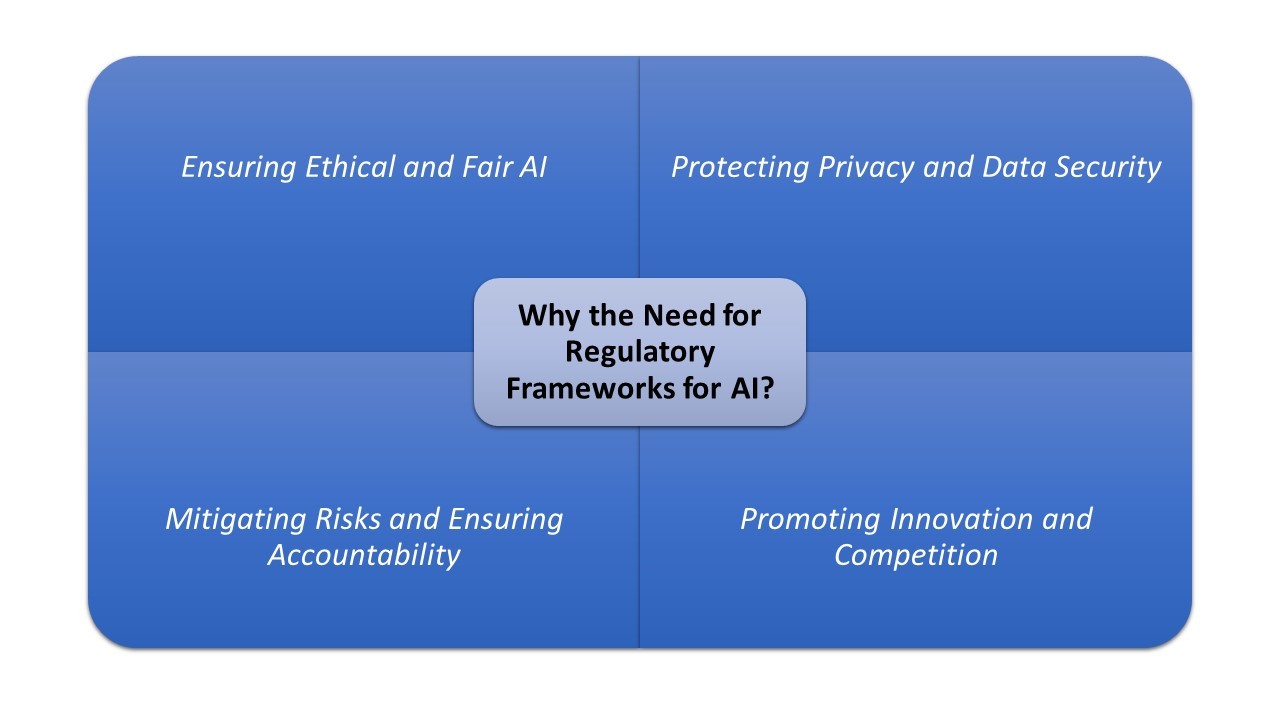

Ensuring Ethical and Fair AI

One of the primary reasons for establishing regulatory frameworks for AI is to ensure that AI systems are developed and used ethically and fairly. AI algorithms can inadvertently perpetuate biases, discriminate against certain groups, and lead to unfair treatment. Without proper regulations, there is a risk of AI exacerbating existing societal inequalities. Regulatory frameworks can set guidelines for developers and users to ensure that AI systems adhere to ethical principles, respect human rights, and promote fairness and justice.

Protecting Privacy and Data Security

AI systems often rely on vast amounts of data to function effectively. This data can include personal information, which, if mishandled, can result in privacy breaches and data security concerns. Regulatory frameworks can establish strict standards for data protection and privacy in AI applications, ensuring that individuals' rights are safeguarded. Compliance with these rules can help build trust between AI developers and the public, fostering greater acceptance and adoption of AI technologies.

Mitigating Risks and Ensuring Accountability

AI can introduce various risks, including safety concerns in autonomous systems, job displacement, and potential misuse in harmful applications. Regulatory frameworks can require AI developers to implement safety measures, conduct risk assessments, and adhere to transparency and accountability principles. These rules can help minimize the negative impacts of AI and hold responsible parties accountable for any harm caused by AI systems.

Promoting Innovation and Competition

While regulations are essential for AI, they must strike a balance that encourages innovation and competition. Overly restrictive rules can stifle technological advancements, preventing the development of beneficial AI applications. Therefore, regulatory frameworks should be designed to foster a thriving AI ecosystem that promotes innovation while addressing potential risks and ethical concerns.

To establish effective regulatory frameworks for AI, certain key rules must be in place. These rules should address various aspects of AI development, deployment, and use:

Transparency and Explainability: AI systems should be transparent, and their decision-making processes should be explainable to users. This rule ensures that individuals can understand how AI algorithms reach decisions and can hold developers accountable for any biases or errors.

Bias Mitigation: Regulations should require AI developers to address and mitigate biases in their algorithms, especially those that could result in discrimination against protected groups. Bias assessment and auditing processes should be part of AI development.

Data Privacy and Security: Stringent rules should govern the collection, storage, and use of data in AI systems to protect individuals' privacy and data security. Compliance with data protection regulations, such as the EU's General Data Protection Regulation (GDPR), should be mandatory.

Ethical Guidelines: Regulatory frameworks should establish ethical guidelines for AI development and use, emphasizing principles like fairness, non-discrimination, and respect for human rights. Violations of these guidelines should result in penalties.

Accountability and Liability: Rules should clarify the responsibilities of AI developers, users, and other stakeholders in case of AI-related harm or errors. Establishing liability mechanisms is essential to ensure accountability.

Safety Standards: For AI systems with physical components or those involved in critical functions (e.g., autonomous vehicles and medical devices), safety standards and certifications should be mandated to prevent accidents and ensure public safety.

Certification and Compliance: AI products and services should undergo certification processes to verify their compliance with regulatory rules. Non-compliant AI systems should not be allowed on the market.

User Consent: Users should have the right to provide informed consent before interacting with AI systems, especially those that collect personal data or make significant decisions affecting individuals' lives.

International Collaboration: AI regulations should encourage international collaboration and alignment to ensure consistent global standards, as AI knows no geographical boundaries.

While regulatory frameworks are designed to benefit society as a whole, certain entities are more likely to be adversely affected by stringent AI regulations. These entities include:

AI Developers and Companies: AI developers and companies may face increased compliance costs and administrative burdens when adhering to strict regulatory requirements. This could slow down the development and deployment of AI technologies and hinder their competitiveness in the global market.

Startups and Small Businesses: Smaller AI startups and businesses may struggle to navigate complex regulatory frameworks, diverting resources from innovation and growth. Regulatory compliance can be especially challenging for companies with limited financial and human resources.

Research and Development: Stringent regulations may limit the scope and pace of AI research and development efforts. Researchers may encounter additional hurdles when conducting experiments or collecting data for AI projects.

Innovation: Excessive regulations could stifle innovation by discouraging experimentation and risk-taking in AI development. Companies may become overly cautious, leading to missed opportunities for breakthroughs in AI technology.

Access to AI Technologies: If regulations drive up the cost of AI development, there is a risk that access to AI technologies could be restricted, particularly for smaller organizations and underprivileged communities. This could exacerbate existing digital divides.

International Competitiveness: Overly burdensome regulations can put AI companies at a disadvantage compared to those in regions with more permissive rules. This could lead to a shift in AI leadership from one region to another.

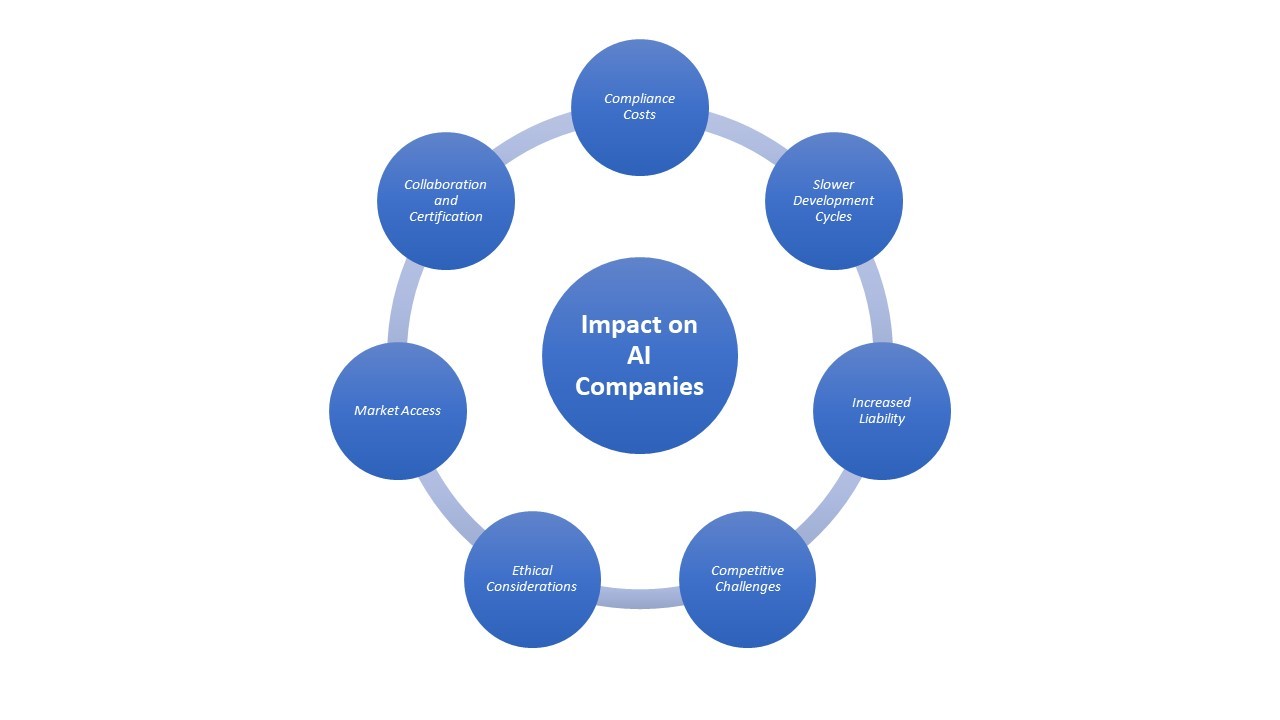

The impact of regulatory frameworks on AI companies can vary depending on the specific rules and their enforcement. Here are some potential effects:

Compliance Costs: AI companies will need to allocate resources to ensure compliance with regulatory requirements. This includes hiring legal experts, data privacy specialists, and compliance officers. These costs can be substantial, especially for smaller companies.

Slower Development Cycles: Rigorous regulations may slow down the development and deployment of AI products and services. Companies may need to conduct extensive testing and auditing to meet regulatory standards, potentially delaying time-to-market.

Increased Liability: Regulatory frameworks may hold AI companies liable for harm caused by their products or services. This could expose them to lawsuits, as well as fines and other financial penalties for non-compliance.

Competitive Challenges: Companies operating in regions with more lenient regulations may gain a competitive advantage by releasing AI products faster and at lower costs. This could lead to market imbalances and shifts in AI leadership.

Ethical Considerations: Regulations that emphasize ethical principles may require AI companies to invest in ethical AI research and development, ensuring that their products align with societal values. While this is a positive development, it may also increase costs and development timelines.

Market Access: Some AI companies may find it challenging to access international markets due to differing regulatory standards. They may need to adapt their products to comply with multiple sets of rules, which can be resource-intensive.

Collaboration and Certification: AI companies will need to collaborate with regulatory bodies and undergo certification processes to demonstrate compliance. This can be time-consuming and may require additional investments in infrastructure and documentation.

The establishment of regulatory frameworks for artificial intelligence is of paramount importance in our rapidly evolving digital landscape. These rules are essential for ensuring ethical and fair AI, protecting privacy and data security, mitigating risks, and promoting accountability. While they are designed to benefit society, they may pose challenges for AI developers, startups, and small businesses. To strike the right balance, regulatory frameworks should be carefully crafted to foster innovation while addressing potential harms and ethical concerns. Collaboration between governments, industry stakeholders, and the public is crucial in shaping effective AI regulations that promote responsible AI development and use. As AI continues to transform our world, regulatory frameworks will play a pivotal role in determining the future of this technology.

Ahmed Banafa is an expert in new technology who has appeared on ABC, NBC, CBS, and FOX TV and radio stations. He has served as a professor, academic advisor, and coordinator at well-known American universities and colleges. His research has been featured in Forbes, MIT Technology Review, ComputerWorld, and Techonomy. He has published over 100 articles on the Internet of Things, blockchain, artificial intelligence, cloud computing, and big data. His research papers have been cited in many patents, numerous theses, and conference papers. He is also a guest speaker at international technology conferences. He is the recipient of several awards, including the Distinguished Tenured Staff Award, Instructor of the Year, and a Certificate of Honor from the City and County of San Francisco. Ahmed studied cyber security at Harvard University. He is the author of the book Secure and Smart Internet of Things Using Blockchain and AI.
