
The use of machine learning (ML) in medicine has reached a significant milestone.

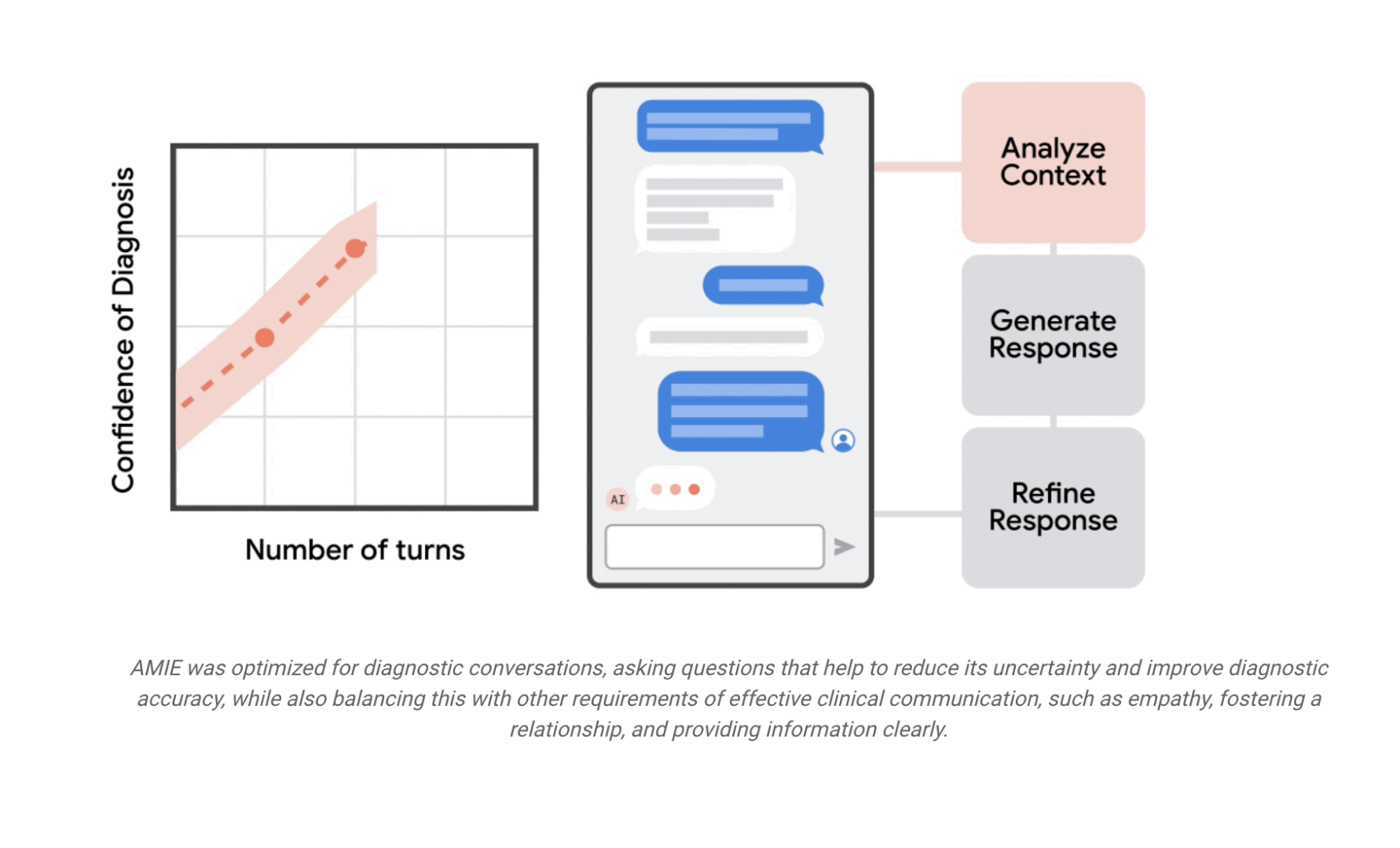

An experimental chatbot has recently outperformed human doctors at diagnosing a range of medical conditions in simulated patient interviews. Developed by Google, the chatbot, named Articulate Medical Intelligence Explorer (AMIE), was more accurate than board-certified primary-care physicians at diagnosing respiratory and cardiovascular conditions. The machine-learning-based system, still in the experimental phase, showed a level of empathy during medical interviews similar to that of the physicians and demonstrated the potential of systems optimized for diagnostic dialogue.

Source: Google Health Research

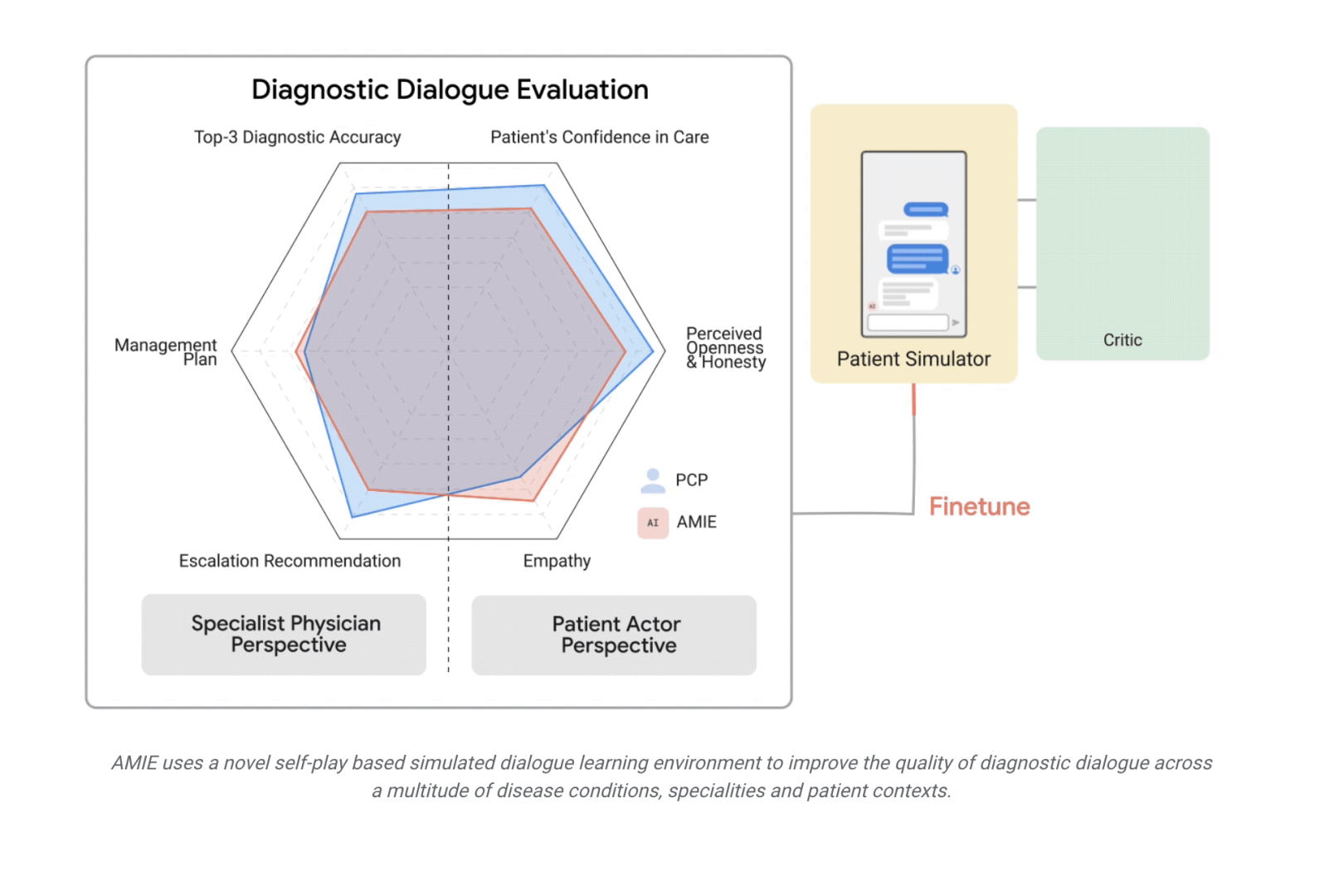

The study, led by Alan Karthikesalingam, a clinical research scientist at Google Health in London, presents this as a groundbreaking achievement in the development of conversational ML systems designed for diagnosis. AMIE, which is based on a large language model (LLM), was trained extensively on real-world medical data, including electronic health records and transcribed medical conversations. The chatbot was also trained to play multiple roles, such as a person with a specific medical condition, an empathetic clinician, and a critic evaluating doctor-patient interactions.

Source: Google Health Research

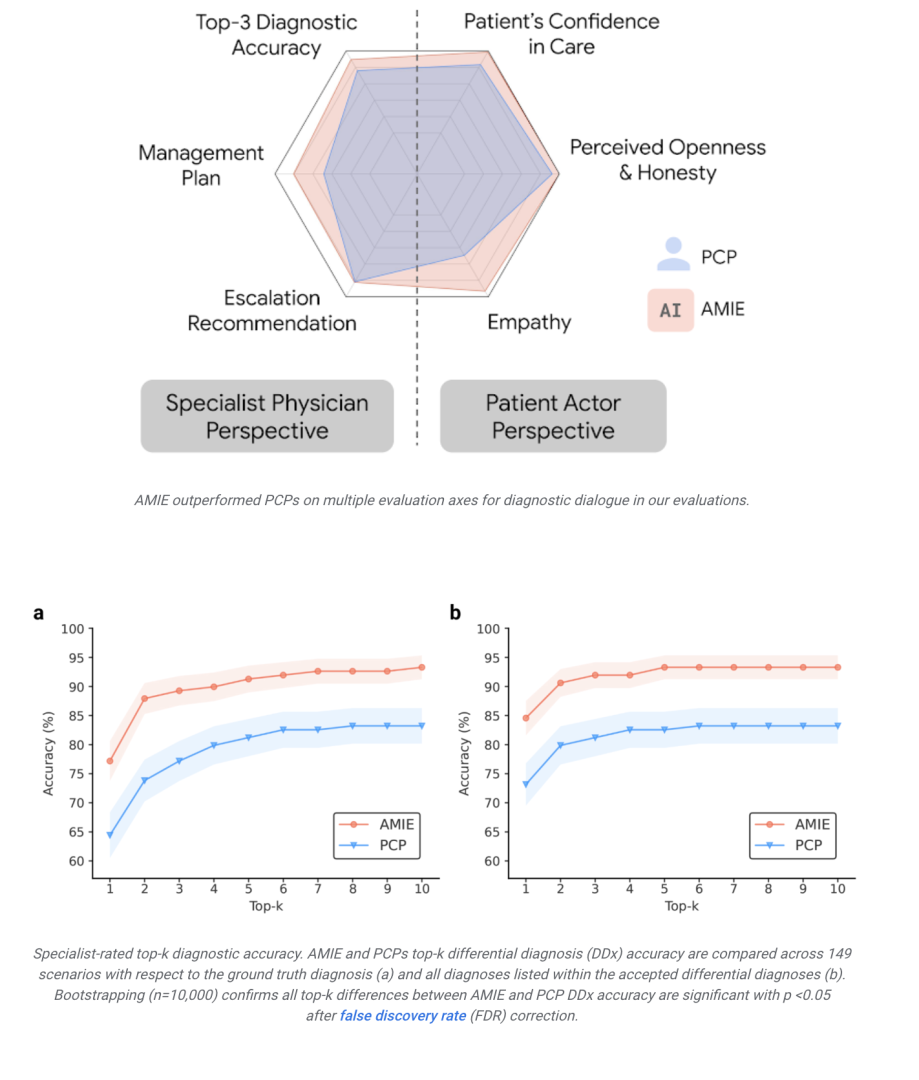

To assess its performance, AMIE engaged in online text-based consultations with actors trained to simulate patients, and the results were compared with interactions involving board-certified clinicians. The ML-based system not only matched but, in many cases, surpassed the physicians' diagnostic accuracy across six medical specialties. Furthermore, AMIE excelled in various conversation quality criteria, including politeness, explanation of conditions and treatments, honesty, and expressions of care and commitment.

The researchers urge caution and humility in interpreting the results, because the chatbot has not yet been tested on real patients with actual health problems. They acknowledge these limitations and stress that, while AMIE may be a helpful tool in healthcare, it should not replace interactions with physicians. Medicine involves more than collecting information; it revolves around human relationships, something ML-based systems may not fully capture.

Source: Google Health Research

Despite AMIE's promising performance, concerns persist about potential biases and the need to ensure fairness across diverse populations. The researchers plan more detailed studies to evaluate biases and to meet the ethical requirements for testing the system with people facing real medical issues. As machine learning continues to advance in healthcare, privacy and responsible deployment remain critical to integrating these technologies into medical practice.
