The debates surrounding AI’s growing involvement in healthcare have seemingly been going on forever.

While machine intelligence, on current evidence, could play a pivotal role in transforming the field, is it too reckless to let AI permeate healthcare in absolute terms?

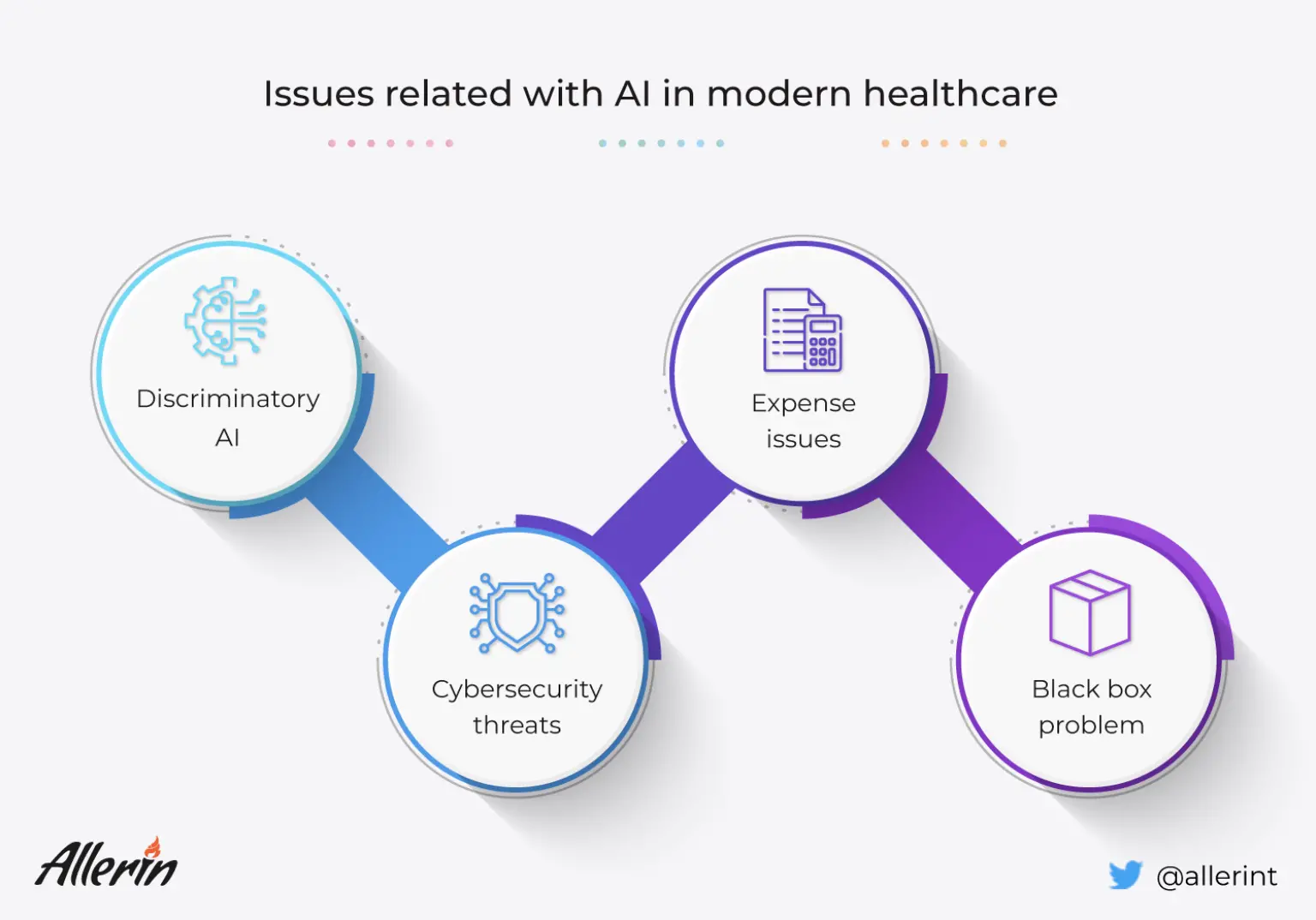

In a utopian world, health experts would be (more or less) replaced by ultra-intelligent machines in hospitals. Futuristic surgical robots would be able to fix any deformity or wound in the human body without being closely monitored during an operation. AI would have figured out a way to diagnose and cure health conditions that affect only individuals of certain nationalities or ethnicities. In reality, AI has not reached those levels of greatness just yet. A few years or, perhaps, decades down the line, artificial intelligence may hold an edge over its human counterpart. However, the issues related to AI run much deeper than its efficiency and ability. There is a reason why countless sci-fi movies have had evil robots as antagonists trying to rip humanity to shreds. As glorious as it sounds, greater involvement of AI in healthcare may run into major issues. Quite a few of these are related to ethical oversight, black box complications, security, and cost.

Cases of AI being discriminatory in healthcare have been frequently documented in the past. Any AI-powered system uses data from the past to predict and simplify (and/or resolve) situations in the present and future. Today, institutes and organizations in the health industry can use artificial intelligence and its derivatives to make healthcare-related decisions for their patients and customers. These clinical decision-making systems can analyze vast swathes of data before recommending measures for patient treatment and future care. However, the suggestions and actions such systems produce depend heavily on the input provided to them (or on the sources of data that they draw on over longer periods).

Medical studies and historical records have shown that certain illnesses and infections are more commonly found in individuals from specific regions. Some of the common examples of such region/race-specific genetic conditions are:

Sickle-cell disease: Found predominantly in persons with African American and Mediterranean heritage.

Tay-Sachs disease: Commonly found in persons belonging to the Ashkenazi (eastern/central European) Jewish or French-Canadian lineage.

Hemochromatosis: An iron-absorption disorder commonly found in people of Irish descent.

Physicians all over the world are aware of the role of population and hereditary genomics in causing region-specific health conditions. Because the information available about such conditions keeps evolving, AI-enabled medical systems may be unable to pin down the connection between a person's health and their race. As a result, drug development and health insurance documentation lack clear-cut guidelines for resolving such issues.

Studies have found that intelligent decision-making algorithms direct more resources towards white patients than towards patients belonging to minorities. The AI itself is not to blame for this prioritization; the main problem lies in the past records that a machine uses to make decisions. In the medical records of most countries, the amount of resources allocated for healthcare and overall welfare is usually lower for minorities. While assimilating past data, decision-making machines might 'think' that such trends should be the norm for the future too. As a result, the trend of marginalizing minorities continues even with the involvement of AI in healthcare.
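To make the mechanism concrete, here is a minimal sketch of how a system that simply learns from past allocations ends up repeating them. Everything in it is an assumption made for illustration: the records, the group labels, the need scores, and the `fit_allocation_model` and `recommend` helpers are all invented, and real clinical systems are far more complex than a table of averages.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical historical records: (group, clinical_need, resources_allocated).
# The numbers are invented purely for illustration.
HISTORY = [
    ("majority", 5, 100.0),
    ("majority", 5, 110.0),
    ("majority", 8, 160.0),
    ("majority", 8, 170.0),
    ("minority", 5, 60.0),
    ("minority", 5, 70.0),
    ("minority", 8, 100.0),
    ("minority", 8, 110.0),
]


def fit_allocation_model(records):
    """Learn the average past allocation for each (group, need) pair."""
    buckets = defaultdict(list)
    for group, need, allocated in records:
        buckets[(group, need)].append(allocated)
    return {key: mean(values) for key, values in buckets.items()}


def recommend(model, group, need):
    """Recommend resources by replaying the learned historical average."""
    return model[(group, need)]


model = fit_allocation_model(HISTORY)

# Two patients with the same clinical need, differing only by group.
majority_rec = recommend(model, "majority", 8)
minority_rec = recommend(model, "minority", 8)
print(majority_rec, minority_rec)
```

Both patients have the same need score, yet the recommendation differs because the only thing the 'model' knows is what was spent in the past. Nothing in the code is prejudiced; the gap comes entirely from the records it was given.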

Despite our past learnings, we humans may still possess deep-rooted prejudices towards others. Conservative and outdated lines of thinking may be reflected in the initial source data used to train systems through machine learning and computer vision techniques. Smaller datasets used in AI and machine learning may lead to a familiar outcome: AI systems that are 'biased' because of the information they are 'taught.' These are not reasons to abandon AI altogether. The 'biased AI' problem can be corrected by using bigger datasets and, more importantly, an open mind. In simpler words, if we want our AI-based healthcare systems to evolve, we will have to evolve too.

Here’s a hypothetical situation: You take your beloved six-year-old son to a new circus in town. Inside the giant circus tent, there are other jubilant kids accompanied by their parents. The crowd waits in anticipation as the lights are dimmed, and on comes a gentleman in a tuxedo for the opening act: a magic show. Seated in the front row, you witness the kids in the arena having the time of their lives as the magician pulls off one great magic trick after another. After several rounds of bewitching trickery, the immaculately dressed man reveals his pièce de résistance: he ‘makes’ multiple cones filled with ice cream seemingly out of thin air! Your gobsmacked son gets one of the gorgeous (and complex-looking) cones. Now, you have a rough idea of how ice cream is made. Still, something about the entire act makes you uncomfortable about your child eating the one in his hand.

A black box AI presents a somewhat similar problem: the system’s workings are unknown to users or other involved parties. Deep learning techniques used to train machines in semi-supervised and unsupervised settings usually run into black box-related dead ends. The nearly impenetrable system only allows users to view its outputs. While such opacity may or may not be an issue in other fields, it is unacceptable in healthcare, wherein someone’s life depends on minute quantitative deviations.

When a deep learning model is trained, its algorithms take in billions of input data points and correlate specific features to form an output. To physicians, the correlations may seem too random and even illogical to apply in real-world healthcare situations. Deep learning in AI is largely self-directed and, hence, its intricate workings may be nearly impossible for data administrators, health experts, and general consumers to interpret.
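The sketch below illustrates what seeing only the outputs looks like in practice. It is a toy, not a real diagnostic model: `black_box`, its three inputs (including the deliberately irrelevant `zip_digit`), the weights, and the `sensitivity` helper are all hypothetical. From the outside, about all a user can do is nudge each input and watch how the score moves.

```python
import math


def black_box(age, heart_rate, zip_digit):
    """Stand-in for an opaque model: callers see only the returned risk score.

    The weights are arbitrary and hypothetical; in a real deep network the
    equivalent logic would be spread across a huge number of parameters.
    """
    z = 0.02 * age + 0.01 * heart_rate + 0.3 * zip_digit - 3.0
    return 1.0 / (1.0 + math.exp(-z))


def sensitivity(model, baseline, step=1.0):
    """Nudge each input by `step` and record how much the output moves."""
    base_score = model(**baseline)
    changes = {}
    for name, value in baseline.items():
        nudged = dict(baseline, **{name: value + step})
        changes[name] = model(**nudged) - base_score
    return changes


# Hypothetical patient; the values are invented for illustration.
patient = {"age": 60.0, "heart_rate": 80.0, "zip_digit": 4.0}
changes = sensitivity(black_box, patient)
most_influential = max(changes, key=lambda name: abs(changes[name]))
print(most_influential)
```

In this toy case, the probe shows that the input with no clinical meaning moves the score the most, which is exactly the kind of correlation a physician would find illogical. With a real deep learning system, even this crude probing says little about why the model behaves as it does.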

So far, so good (sort of). When the inner workings of an algorithm used for critical surgeries or for decision-making in pharmaceutical companies cannot be comprehended, errors in it can go unnoticed in the initial stages of those processes. The real issues arise when these errors cascade into full-blown system problems. By this point, even the most accomplished data scientists may find it difficult to investigate the situation and prepare a damage report.

We have not reached the phase where basic diagnostics and primary-level medical decision-making are carried out by machines. At least for now, physicians undertake those responsibilities in health centers. Currently, AI-based suggestions are not considered to be superior to an experienced physician’s calls. However, it is very likely that, at some point in the future, AI will be the standard-setter for diagnostics, treatment suggestions, and implementation. In fact, it may one day be unethical not to follow the recommendations put forth by AI. If such a future comes to pass, new problems could arise whenever a black box situation occurs. If the suggestion put forward by an algorithm is not transparent, no physician may be able to verify it. Such suggestions could have adverse effects on patients.

Advancements in technology and connectivity in any field can be a double-edged sword: higher levels of technological involvement can also mean that network intruders can access and manipulate vital operations remotely. Healthcare has become increasingly dependent on digital technology and machine learning over the decades (and will continue to be). Partially and completely automated surgical robots can be accessed by cyber-attackers. Such a network intrusion during a critical surgery can be fatal for patients.

Apart from accessing and altering the operations of digitized medical systems in hospitals, cybercriminals can also break into healthcare databases, generally maintained digitally across the board in hospitals, and access the sensitive and confidential data of patients. Hospitals could face legal issues if their patients’ data is leaked into the public domain.

In 2018, a study estimated that the cost of integrating AI in healthcare worldwide would be approximately US$ 36 billion. One must remember that the costs of implementing AI include not just digitizing the databases and making major structural changes within hospitals but also training the staff to be able to correctly use the technological advancements. While rich countries can afford to integrate AI into most of their public hospitals, poorer countries will struggle to keep up. As a result, advancements will be seen only in certain parts of the world.

The ‘yet’ in the title of this article clarifies that we are not criticizing the growing presence of AI in healthcare just for the sake of it. An essential truth about AI (in any field) is that we are yet to experience the absolute best of it. It is obvious that, with newer learnings and updates, we may be able to witness the outer limits of AI’s greatness, at least in healthcare. AI in today’s world is far from perfect. Niggling flaws like the ones mentioned above need to be ironed out efficiently. Until then, healthcare can stand on its own feet without digital crutches.

Naveen is the Founder and CEO of Allerin, a software solutions provider that delivers innovative and agile solutions that automate, inspire and impress. He is a seasoned professional with more than 20 years of experience, including extensive experience in customizing open source products for cost optimization of large-scale IT deployments. He is currently working on Internet of Things solutions with Big Data Analytics. Naveen completed his programming qualifications in various Indian institutes.
